Understanding Contextual Vectors: How They Work

Deciphering Contextual Vectors: Their Functionality and Significance

Unraveling the realm of data science and AI, the power of contextual vectors becomes undeniable due to their role in language models and machine learning.

They are a fundamental pillar in modern systems, building on earlier word embedding models such as Word2Vec and enabling richer embedding of text and files.

Let’s delve into the intricate world of contextual vectors, their evolution, applications, and the challenges they pose in various domains.

Stay engaged as we set off on an enlightening journey that discloses the enigmatic world of contextual vectors.

Key Takeaways

- Contextual vectors play a crucial role in enhancing AI systems’ understanding of human language, driving significant digital business components such as natural language processing and computer vision

- The formation and utilization of contextual domains, facilitated by word embedding tools like Word2Vec, contribute to the advancement of AI systems simulating human conversational patterns and are essential for semantic SEO practices

- Contextual vectors offer a mathematical representation of words, capturing semantically significant patterns and aiding in SEO efforts focused on topical authority, backlink profiling, and keyword optimization

- Contextual vectors have a wide range of applications beyond text, including splitting .json files for SEO practices, speaker diarization for audio transcript accuracy, and organizing digital academic content

- While contextual vectors have limitations in detecting nuances of human language and require extensive training resources and computational power, their integration into AI applications and SEO services holds great promise for the future

Introduction to Contextual Vectors

Contextual vectors, an intricate subject in the field of machine learning, play an instrumental role in determining the semantic meaning of words. They collaborate with AI systems to enhance their understanding of human language. This understanding is paramount as it drives key digital business components, such as Natural Language Processing and computer vision.

When it comes to language models such as Google BERT or OpenAI’s ChatGPT, the relevance of contextual vectors becomes apparent. By helping these models recognize the tone, sentiment, and underlying subtleties of words, they allow AI to communicate as naturally as possible. This fusion of AI and semantics has opened a new area of practice: Semantic SEO.

A fundamental aspect of Semantic SEO that sometimes goes unnoticed is the formation and utilization of contextual domains. By using a word embedding tool like Word2Vec to create a vector representation of terms (a static embedding with one vector per word, on which contextual methods later built), sophisticated software systems can effectively discern context data. The ability to interpret such data is a major stride towards AI systems simulating human conversational patterns.

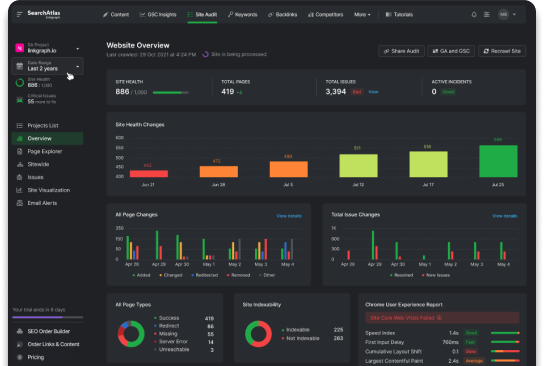

Among the various aids available for SEO services, Search Atlas by LinkGraph stands out for its algorithms. Its core operations focus on strengthening topical authority, link building, and backlink profiling. Search Atlas draws on entities, LSI, and machine learning to deliver its link building services.

The Origin and Evolution of Contextual Vectors

The advent of contextual vectors is deeply rooted in innovations in data science and machine learning. As the demand for more intelligent, discerning, and human-like AI systems grew, so did the need for a mechanism to interpret language in a way that aligned with human comprehension. Vector-based representations of text emerged from information retrieval research in the latter part of the 20th century, and contextual vectors later grew out of that line of work.

The journey of contextual vectors can be traced back to the introduction of Word2Vec in 2013, a groundbreaking word embedding method. Word2Vec’s strength lay in its ability to represent words as vectors in a multi-dimensional space, so that words with related meanings sit close together. This changed how machines process language and set the stage for the era of contextual vectors.
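To make the idea of "relational semantics" concrete, here is a minimal sketch of how closeness between word vectors is usually measured, using cosine similarity. The three-dimensional vectors are invented for illustration; real Word2Vec vectors are learned from text and typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy vectors (assumption: not output from any trained model).
toy_vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

sim_royal = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
sim_fruit = cosine_similarity(toy_vectors["king"], toy_vectors["apple"])
print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")
```

In this toy setup the two royalty words score much closer to 1.0 than the unrelated pair, which is the property a trained embedding space is meant to have.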

Following this came the era of ‘vector databases’ and ‘encoders’:

- Vector databases: repositories where vector representations of text are stored. These help AI systems make quick semantic comparisons and associations.

- Encoders: the conversion tools used to translate plain text into vector format, effectively creating a bridge between human language and machine language.
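The two pieces above can be sketched together in a few lines. This is only an illustration under stated assumptions: `toy_encode` is a hypothetical encoder that hashes words into buckets (a real encoder would be a trained model), and `ToyVectorStore` is an in-memory stand-in for a vector database, not the API of any actual product.

```python
import math
import zlib

DIM = 16  # tiny dimension chosen for the example

def toy_encode(text):
    """Hypothetical encoder: hash each word into one of DIM buckets, then normalize."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def add(self, text):
        self.items.append((text, toy_encode(text)))

    def search(self, query, k=1):
        """Return the k stored texts whose vectors score highest against the query."""
        q = toy_encode(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, vec in self.items]
        scored.sort(reverse=True)
        return [text for _, text in scored[:k]]

store = ToyVectorStore()
store.add("the cat sat on the mat")
store.add("stock prices fell sharply")
print(store.search("the cat sat on the mat", k=1))
```

Production systems replace the linear scan in `search` with an approximate nearest-neighbour index, but the encode, store, compare flow is the same.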

Now, diverse concepts from natural language processing and machine learning, like Google Passage Ranking or Google’s BERT algorithm, blend with contextual vectors. With the rise of Semantic SEO, link building, and content length optimization, they are vital for tools like Search Atlas by LinkGraph, an application designed to strengthen topical authority and support other SEO services.

How Contextual Vectors Function in Various Contexts

Contextual vectors play a vital role in varied AI-dependent domains by offering a mathematical representation of words. This representation helps to capture semantically significant patterns, such as the concept of ‘finiteness’ in training examples for machine learning. In this sense, contextual vectors can encode ‘finiteness’, a property that describes whether or not an element or set is bounded.

In SEO efforts, the influence of contextual vectors is fairly significant. SEO experts often turn to semantic SEO practices that heavily rely on topical authority, backlink profiling, and choosing the right keywords for SEO. The rich, contextual vector database provided by tools like Search Atlas by LinkGraph offers a solid foundation for such practices, ushering in high-quality SEO results.

The mechanism behind contextual vectors goes beyond text and applies to a range of file formats, including the increasingly popular .json. For instance, contextual vectors come into play when .json files are split up or embedded as part of SEO practices. A vector representation schema can break a complex file into smaller pieces that are embedded individually, resulting in more efficient SEO operations.

As part of Natural Language Processing tasks, contextual vectors assist in speaker diarization: separating the speakers in an audio file and creating transcriptions with precision. They can help process different speakers’ overlapping streams, just as in real conversation, reducing noise in the transcript. This aspect is crucial in AI applications like Google’s River Bank project or in newer technological ambitions like computer vision.
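One way to read the .json splitting step is as chunking: a large file is cut into pieces small enough to embed one at a time. The sketch below assumes the file holds a JSON array of objects and uses a character budget; the function name `split_json_records` and the `max_chars` limit are hypothetical choices for illustration, not part of any SEO tool.

```python
import json

def split_json_records(raw_json, max_chars=80):
    """Group the records of a JSON array into text chunks of at most max_chars.

    A single record longer than max_chars still becomes its own chunk.
    """
    records = json.loads(raw_json)
    chunks, current = [], ""
    for record in records:
        piece = json.dumps(record, sort_keys=True)
        if current and len(current) + len(piece) + 1 > max_chars:
            chunks.append(current)  # budget exceeded: close the current chunk
            current = piece
        else:
            current = f"{current}\n{piece}" if current else piece
    if current:
        chunks.append(current)
    return chunks

raw = json.dumps([{"id": i, "title": f"page {i}"} for i in range(5)])
chunks = split_json_records(raw, max_chars=60)
for chunk in chunks:
    print(repr(chunk))
```

Each resulting chunk could then be passed to an encoder and stored as its own vector.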

Significance and Impact of Contextual Vectors on Data Processing

When it comes to data processing, contextual vectors are more than just a supplement; they are a necessity. Their principal role lies in creating meaningful layers of semantic information from raw data, thereby bridging the gap between human language and machine-readable representations. By providing a broader context to mere data points, they change how data is interpreted.

In the realm of Google Scholar, contextual vectors expedite the access and interpretation of voluminous scholarly content. They enable precise collation of relevant information, thereby making the digital academic landscape more organized and user-friendly. This neatly organized academic landscape owes much to contextual vectors.

Within the bounds of privacy policy formulations and webpage context data, the contribution of contextual vectors cannot be overstated. By accurately recognizing and classifying different webpage entities, these vectors aid AI in discerning the relevant content for creating privacy policies. This precision ensures valuable security endorsements for digital footprints across various platforms.

Finally, in digital environments like Reddit or Drupal, their role in organizing, classifying, and interlinking numerous Topic-Based Content System (TBCS) strands is significant. By enabling machines to comprehend the context and relation between diverse subjects, contextual vectors essentially structure the semantic web. They bind its disparate elements into a cohesive whole, making the internet a more accessible and understandable entity.

Potential Limitations and Challenges in Using Contextual Vectors

While the advent of contextual vectors has largely smoothed the intricacies of semantic associativity for AI systems, certain limitations persist. One primary concern is the difficulty in detecting nuances of human language. Despite their intricate structure and advanced capabilities, contextual vectors might falter when dealing with the connotations, symbolisms, or idioms inherent in human language.

Moreover, the training resources required for creating contextual vectors pose a substantial challenge. The creation of robust and useful vectors requires vast, diverse, and high-quality training datasets. Collecting and maintaining such extensive datasets can prove to be a daunting and resource-intensive task.

Another limitation often faced in harnessing the potential of contextual vectors relates to computational costs. The intensive mathematical computation involved in generating and using vector representations often requires high processing power. This could render the system inefficient, particularly for large-scale applications involving vast multilingual datasets.
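A quick back-of-the-envelope calculation shows why storage alone becomes a concern at scale. The sketch assumes 32-bit floats (4 bytes per value) and 768 dimensions, which is the hidden size of BERT-base; the corpus size of one million vectors is an arbitrary example.

```python
def embedding_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for dense vectors, ignoring index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value

size = embedding_storage_bytes(1_000_000, 768)
print(f"{size / 1e9:.2f} GB")  # prints "3.07 GB"
```

This counts only the stored vectors. Generating them requires a forward pass through the model for every piece of text, which is usually the larger cost.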

From an SEO perspective, despite their vast potential, the integration of contextual vectors into size-specific practices can be problematic. For instance, the task of incorporating context into short-form content or optimizing the content length as part of On-Page SEO can prove tricky. Overcoming these challenges would need innovative SEO solutions that tactfully blend conventional practices with the power of contextual vectors.

Innovative Applications and Future Prospects of Contextual Vectors

The future of contextual vectors in AI applications is promising. Problems that were previously considered out of reach can now be approached with the tools that contextual vectors offer. For instance, advancements in computer vision can now leverage these vectors for image recognition tasks with increased accuracy and depth of understanding.

This vector technology’s innovative prowess is also being explored in the realm of Machine Translation (MT). By bridging the nuances of different languages and establishing a robust semantic mapping, contextual vectors are elevating the quality of MT. This results in translations that are not only accurate but culturally sensitive, cognizant, and contextual.

The potential impact of contextual vectors is even more stark in the domain of SEO services. The integration of advanced tools like Search Atlas by LinkGraph with link building and other SEO services paves the way for SEO practices that are data-driven, precise, and effective. This combination could potentially revolutionize methods of increasing contextual domain authority and managing backlink profiles.

Given their promising prospects, it is evident that the innovation in contextual vectors will be instrumental in determining the future of AI. As the quest continues for AI models that can truly embody human conversational patterns beyond just text, the role of contextual vectors will undoubtedly be pivotal. The journey of unveiling the full potential of these vectors in AI, machine learning, and SEO services has just begun.

Frequently Asked Questions

What exactly are contextual vectors and how do they differ from traditional vectors?

Contextual vectors, also known as contextual embeddings, are a type of word representation used in natural language processing (NLP). Unlike traditional vectors, which represent words as fixed, static values, contextual vectors take into consideration the surrounding context of a word.

This means that the meaning of a word can vary depending on its neighboring words and the overall context of the sentence. Traditional static word embeddings, such as those produced by Word2Vec, treat each word as an independent entity and assign a single fixed vector representation to it.

This approach is often based on the statistical distributional properties of words, where words that appear in similar contexts are represented by similar vectors. However, this method may not capture the nuances and complexities of language, as it fails to consider the dynamic nature of word meanings.
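The distributional idea can be sketched with simple co-occurrence counts. This is a count-based stand-in chosen for readability, not Word2Vec's actual training procedure (which learns dense vectors with a neural objective); the function name and `window` parameter are illustrative assumptions.

```python
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[word][tokens[j]] += 1
    return counts

vecs = cooccurrence_vectors(
    ["the cat drinks milk", "the dog drinks water"], window=1
)
print(dict(vecs["drinks"]))
```

Note that each word ends up with exactly one vector, pooled over every sentence it appears in. That pooling is the "static" limitation the paragraph describes.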

In contrast, contextual vectors, which have gained popularity with the introduction of transformer models like BERT, capture the contextual information by leveraging the power of deep learning. These models are trained on vast amounts of text data and learn to generate contextualized word embeddings.

By considering the entire sentence or paragraph, contextual vectors can better capture the meaning and semantic relationships between words. One major advantage of contextual vectors is their ability to handle word ambiguity. The same word can have different meanings depending on the sentence it appears in.

Contextual embeddings take into account the broader context of the word, allowing for more accurate representation of polysemous words. For example, the word “bank” can refer to a financial institution or the side of a river, and a contextual vector model would be able to differentiate between these meanings based on the surrounding words.
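The “bank” example can be illustrated with a deliberately simple stand-in for a contextual model: blend a word's static vector with the average of its neighbours' vectors. Real models such as BERT use learned attention layers rather than plain averaging, and the two-dimensional vectors below are invented for the example.

```python
# Invented static vectors: [finance-related, nature-related].
STATIC = {
    "bank":    [0.5, 0.5],
    "money":   [1.0, 0.0],
    "deposit": [0.9, 0.1],
    "river":   [0.0, 1.0],
    "water":   [0.1, 0.9],
}

def contextualize(tokens, index, mix=0.5):
    """Blend the static vector of tokens[index] with the mean of its known neighbours."""
    target = STATIC[tokens[index]]
    neighbours = [STATIC[t] for i, t in enumerate(tokens) if i != index and t in STATIC]
    if not neighbours:
        return list(target)
    mean = [sum(col) / len(neighbours) for col in zip(*neighbours)]
    return [(1 - mix) * t + mix * m for t, m in zip(target, mean)]

finance_bank = contextualize(["deposit", "money", "bank"], 2)
river_bank = contextualize(["river", "bank", "water"], 1)
print("finance context:", finance_bank)
print("river context:  ", river_bank)
```

The same word now receives a finance-leaning vector in one sentence and a nature-leaning vector in the other, whereas the static table holds only one entry for “bank”.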

In summary, contextual vectors differ from traditional vectors in that they consider the contextual information of words and capture the dynamic nature of language. This approach allows for more accurate and nuanced word representations in NLP tasks, enabling models to better understand the meaning and context of words in natural language.

How have contextual vectors evolved over time and what advancements have been made in their functionality?

Contextual vectors have greatly evolved over time, undoubtedly transforming the way language models comprehend and generate text. The introduction of word embeddings, such as Word2Vec, was a pivotal moment in the field of natural language processing (NLP).

These models assigned a static representation to each word, making it easier to interpret semantic relationships between them. However, the limitation of these early word embeddings was their inability to capture the nuanced meaning of words in different contexts.

To overcome this challenge, contextual vectors were introduced, with the most notable breakthrough being the advent of transformer models. Transformers, such as BERT (Bidirectional Encoder Representations from Transformers), have revolutionized NLP by capturing contextual information in a more effective manner.

These models analyze surrounding words and consider the order in which they appear, providing a word representation that is tailored to its contextual usage.

Advancements in contextual vectors have also led to major improvements in various NLP tasks. For example, sentiment analysis and text classification have benefited from the enhanced understanding of word context. Instead of relying solely on individual words, contextual vectors enable models to grasp the overall meaning of a sentence and make more accurate predictions.

Moreover, contextual vectors have played a significant role in machine translation. With the ability to comprehend the context of words, translation models can produce more accurate and coherent translations, even for complex sentence structures.

Further advancements in contextual vector models include fine-tuning techniques. By fine-tuning pre-trained models on specific tasks or domains, their functionality can be optimized for more specialized applications. This allows for better performance in tasks such as question-answering, named entity recognition, and language generation.

In conclusion, contextual vectors have evolved over time and contributed greatly to advancing NLP. With the introduction of transformer models like BERT and the ability to capture contextual information, language models now have a deeper understanding of language and can generate more accurate and contextually-appropriate text.

These advancements have not only improved the performance of various NLP tasks but have also paved the way for the development of more sophisticated and powerful language models in the future.

Can you provide examples of how contextual vectors are used in different contexts such as natural language processing and machine translation?

Contextual vectors play a crucial role in various applications, such as Natural Language Processing (NLP) and Machine Translation (MT), by capturing the meaning and context of words and phrases within a given language. In NLP, contextual vectors enable the understanding and interpretation of text by considering the surrounding words and phrases.

One notable example is the use of contextual vectors in language models like BERT (Bidirectional Encoder Representations from Transformers). BERT utilizes a transformer architecture to leverage both left and right context information during training, resulting in more accurate word embeddings that capture the nuances of language and improve tasks such as sentiment analysis, question answering, and text classification.

In the field of Machine Translation, contextual vectors are powerful tools for improving the accuracy and fluency of translated text. Traditionally, phrase-based translation systems mapped words or phrases independently, ignoring any contextual relationships. However, with the advent of neural machine translation (NMT) models, contextual vectors have become a crucial aspect of the translation process.

NMT models, such as Google Translate’s GNMT, use encoder-decoder architectures built on recurrent neural networks (RNNs) or transformers. These models learn to generate contextual representations, known as encoder states, which capture the information of the entire source sentence. These encoder states, in combination with attention mechanisms, help the decoder generate accurate and coherent translations, as it can consider the context of the entire sentence rather than individual words or phrases.

In both NLP and MT, contextual vectors have revolutionized the way we process and understand language. They allow for more accurate predictions and translations by incorporating contextual information and capturing the complex relationships between words and phrases. Whether it is accurately representing the meaning of a sentence in natural language understanding tasks or translating sentences in a way that considers the broader context, contextual vectors have proven to be invaluable in these contexts.

As researchers continue to explore new techniques and models, we can expect further advancements in the utilization of contextual vectors in these fields, resulting in more accurate and nuanced language processing and translation.
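The role of attention over encoder states, mentioned in this answer, can be sketched as follows. This is plain dot-product attention with made-up numbers; production NMT systems add learned projections and scaling, so treat it as a simplification rather than the method any particular system uses.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, encoder_states):
    """Weight each encoder state by its dot-product similarity to the query."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[d] for w, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context

# One decoder query and three encoder states (invented values).
weights, context = attend([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]])
print("weights:", [round(w, 3) for w in weights])
print("context:", [round(c, 3) for c in context])
```

The context vector is a weighted summary of the whole source sentence, which is how the decoder gets to "look at" every source word when producing each output word.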

What are the key benefits and implications of incorporating contextual vectors in data processing and analysis?

Incorporating contextual vectors in data processing and analysis brings several key benefits and implications that can significantly enhance the understanding and utilization of data. First and foremost, contextual vectors provide a deeper level of meaning and understanding to textual data.

By capturing the contextual information surrounding a word or phrase, they can better represent the semantics and relationships between different elements in the data. This allows for more accurate and meaningful data analysis, enabling businesses and organizations to gain valuable insights and make informed decisions.

Moreover, contextual vectors enable improved data clustering and classification. By considering the context in which data points appear, they can group related items together and identify patterns or similarities that may have been overlooked using traditional analysis methods. This can be particularly advantageous in areas such as customer segmentation, fraud detection, and recommendation systems, as it allows for more precise and targeted actions based on the identified patterns.
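As a small illustration of classification on top of vectors, the sketch below assigns a new item to the label whose centroid (average vector) is nearest. The two-dimensional "embeddings" and the labels are invented; in practice the vectors would come from an encoder and a stronger classifier might be used.

```python
def centroid(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_label(vector, centroids):
    """Return the label whose centroid is closest to the vector."""
    return min(centroids, key=lambda label: squared_distance(vector, centroids[label]))

# Invented 2-d "embeddings" of customer messages, grouped by known label.
labelled = {
    "billing":  [[0.9, 0.1], [0.8, 0.2]],
    "shipping": [[0.1, 0.9], [0.2, 0.7]],
}
centroids = {label: centroid(vecs) for label, vecs in labelled.items()}
predicted = nearest_label([0.7, 0.3], centroids)
print(predicted)  # prints "billing"
```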

Another important benefit of incorporating contextual vectors is their ability to enhance natural language processing (NLP) tasks. By understanding the context in which words or phrases are used, NLP models can better comprehend the meaning and intent behind the text. This can greatly improve tasks such as sentiment analysis, entity recognition, and language translation, leading to more accurate and reliable results.

In addition to the benefits, incorporating contextual vectors also brings certain implications. One of the key implications is the need for large amounts of training data. Transformer-based contextual models are typically pre-trained on very large unlabeled text corpora, and adapting them to a specific task usually requires additional labeled examples. This can pose a challenge for organizations with limited labeled data, as it may be difficult to train robust and accurate models.

Furthermore, the use of contextual vectors introduces computational complexity. Building and using contextual vector models can be computationally expensive, requiring significant resources in terms of processing power and memory. This can potentially limit the scalability and implementation of such models, especially in scenarios where real-time or high-speed data processing is required.

Overall, incorporating contextual vectors in data processing and analysis offers immense benefits in terms of improved understanding, clustering, and NLP capabilities. However, it is important to consider the implications such as the need for training data and computational complexity. By carefully weighing the benefits against the challenges, organizations can effectively leverage contextual vectors to obtain valuable insights from their data and optimize their decision-making processes.

Are there any potential challenges or limitations when it comes to utilizing contextual vectors, and how are researchers working to address them?

When it comes to utilizing contextual vectors, there are certainly potential challenges and limitations that researchers are facing. One of the main challenges is the inherent complexity of capturing and representing context accurately. Contextual vectors, which aim to represent word meaning in relation to their surrounding context, often require large amounts of data and advanced language models to ensure accuracy.

Another limitation is the inability of contextual vectors to capture certain linguistic phenomena, such as idiomatic expressions or domain-specific language. While contextual vectors have shown promising results in various natural language processing tasks, they are still not perfect and can sometimes struggle in understanding nuances or sarcasm in text.

To address these challenges and limitations, researchers are actively exploring various methods. One approach is using larger and more diverse training datasets to improve the representation of context. By training contextual vectors on a wide range of texts from different domains and genres, researchers hope to enhance their ability to handle different types of language effectively.

Additionally, there is ongoing research focused on designing more sophisticated language models that can capture and comprehend the complexity of human language better. These models not only consider the immediate context but also take into account the wider semantic and syntactic structure of sentences.

Furthermore, researchers are also working on developing techniques that can capture and represent specific linguistic phenomena that may be missed by traditional contextual vectors. For example, incorporating domain-specific knowledge or utilizing external resources like ontologies or knowledge graphs can help address some of the limitations related to domain-specific language. Additionally, research is being conducted to explore the potential of combining contextual vectors with other linguistic resources or representation methods to improve their overall performance and accuracy.

In conclusion, while contextual vectors have revolutionized natural language processing tasks, there are still challenges and limitations that researchers are working to address. The complexity of capturing context accurately and the inability to handle certain linguistic phenomena are among the key obstacles. However, ongoing research aims to overcome these limitations by utilizing larger and more diverse training datasets, designing sophisticated language models, and incorporating domain-specific knowledge. Through these efforts, researchers hope to enhance the capabilities of contextual vectors and improve their performance across various language processing tasks.

Conclusion

Unraveling the concept of contextual vectors is an enlightening step towards understanding the function and significance of AI and machine learning in digital environments.

These vectors serve as robust linkages between human language and machine interpretation, augmenting the efficiency of AI systems across a myriad of applications.

Be it bridging language nuances in machine translation or optimizing SEO operations via tools like Search Atlas by LinkGraph, contextual vectors remain integral.

Despite certain limitations, their expansive potential and futuristic applications indicate the critical role they are poised to play in structuring the semantic web and revolutionizing AI comprehensibility.

In essence, the journey to decipher contextual vectors is a journey towards a future where machines understand and interact with us better – a premise as promising as it sounds.