BERT: Google’s Largest Update in Years

Google’s BERT update went live in late 2019 and affected roughly 1 in 10 English-language searches in the U.S. It was arguably one of the largest algorithm updates of 2019, so here is everything SEOs and site owners should know about Google’s advanced natural language processing model.

What is Google’s BERT?

BERT, short for Bidirectional Encoder Representations from Transformers, is a deep learning model for natural language processing. Google uses it to surface more relevant results for complicated search queries.

What was the effect of the BERT algorithm update on Google search?

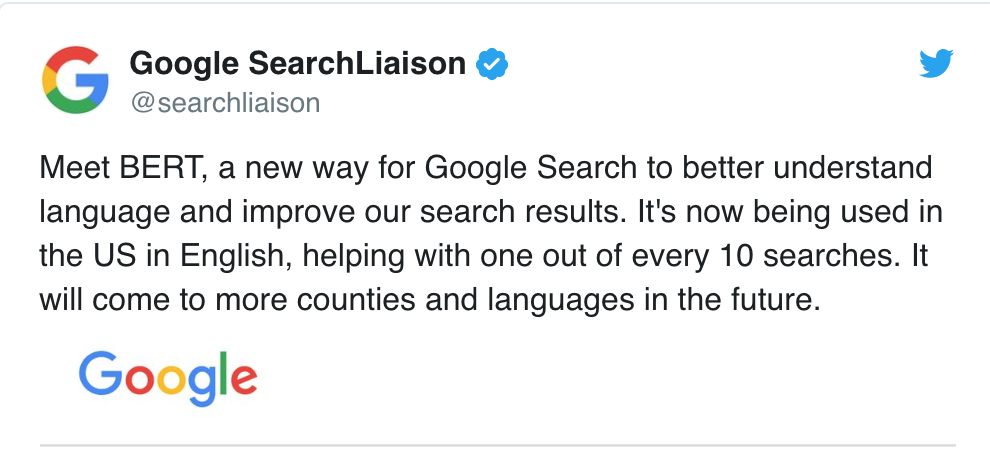

This is what Google said:

“These improvements are oriented around improving language understanding, particularly for more natural language/conversational queries, as BERT is able to help Search better understand the nuance and context of words in Searches and better match those queries with helpful results.

Particularly for longer, more conversational queries, or searches where prepositions like “for” and “to” matter a lot to the meaning, Search will be able to understand the context of the words in your query. You can search in a way that feels natural for you.”
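To see why small words like “for” and “to” matter, here is a minimal sketch, assuming a deliberately naive keyword matcher that throws away common “stop words” and ignores word order. This is a simplified stand-in for illustration, not how Google actually processes queries; the stop-word list, the `keyword_set` function, and both queries are hypothetical.

```python
# Hypothetical stop-word list for illustration; real search systems differ.
STOP_WORDS = {"a", "an", "the", "to", "for", "from", "can", "you"}


def keyword_set(query: str) -> frozenset:
    """Reduce a query to an unordered set of non-stop-word keywords."""
    return frozenset(w for w in query.lower().split() if w not in STOP_WORDS)


q1 = "flights from brazil to usa"
q2 = "flights from usa to brazil"

# Both queries collapse to the same keywords ("flights", "brazil", "usa"),
# so this matcher cannot tell which direction the traveler is going.
print(keyword_set(q1) == keyword_set(q2))  # True
```

Once “from” and “to” are discarded and word order is ignored, two queries with opposite meanings become indistinguishable. That is the kind of nuance Google says BERT helps Search pick up.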

According to Google, the BERT update affects 1 in 10 English-language searches in the U.S., or about 10% of those queries. Google also describes it as its most significant update in the past five years:

“We’re making a significant improvement to how we understand queries, representing the biggest leap forward in the past five years, and one of the biggest leaps forward in the history of Search.”

The rollout of BERT was accompanied by major ranking fluctuations in its first week. SEOs on WebmasterWorld commented on the fluctuations from the start of that week, describing them as the largest to hit the SEO world since RankBrain.

BERT Analyzes Search Queries NOT Webpages

The October 24th, 2019 release of the BERT algorithm improves how the search engine giant analyzes and understands search queries (not webpages).

How Does BERT Work?

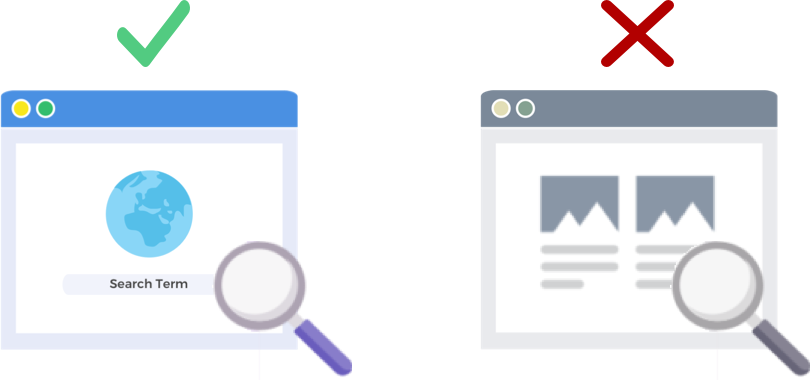

BERT improves Google’s understanding of search queries using a bidirectional (that’s the B in BERT) context model. Google described the mechanics of BERT in late 2018, when it open-sourced BERT as a new technique for natural language processing (NLP) pre-training.

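What is pre-training? Pre-training means teaching a model general language patterns from a large body of text before fine-tuning it for a specific task. This matters because most task-specific datasets contain only a few thousand to a few hundred thousand human-labeled examples. BERT was instead pre-trained on plain, unlabeled text (English Wikipedia and a large collection of books), so it could learn from far more data before it ever saw a labeled example.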
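How can a model learn from text that nobody has labeled? BERT’s main pre-training task is a “masked language model”: hide some of the words in a sentence and train the model to predict them from the words around them, so the original text supplies its own answers. Below is a minimal sketch of how one such training example could be built, following the recipe in the BERT paper (select about 15% of tokens; replace 80% of those with a [MASK] token, 10% with a random token, and leave 10% unchanged). The function name is hypothetical, each token is selected independently as a simplification, and real BERT works on subword (WordPiece) tokens rather than whole words.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, vocab, mask_prob=0.15, rng=None):
    """Build one masked-language-model training example from unlabeled text.

    Returns (inputs, labels). labels[i] holds the original token at each
    selected position and None elsewhere (None = not used in the loss).
    The labels come from the text itself, so no human annotation is needed.
    """
    if rng is None:
        rng = random.Random()
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:  # select this position for prediction
            labels[i] = tok
            r = rng.random()
            if r < 0.8:
                inputs[i] = MASK  # 80%: hide the word
            elif r < 0.9:
                inputs[i] = rng.choice(vocab)  # 10%: swap in a random word
            # remaining 10%: keep the original word unchanged
    return inputs, labels


sentence = "i accessed the bank account".split()
inputs, labels = make_mlm_example(sentence, vocab=sentence, mask_prob=0.5, rng=random.Random(0))
print(inputs)
print(labels)
```

Because a hidden word can only be recovered from its neighbors on both sides, this objective is what lets BERT learn bidirectional context.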

Pre-training has been around for a while, but what makes BERT special is that it is both contextual (each word’s meaning shifts based on the words around it) and bidirectional: the meaning of a word is understood based on the words both before it and after it.

Per Google’s blog:

In the sentence “I accessed the bank account,” a unidirectional contextual model would represent “bank” based on “I accessed the” but not “account.” However, BERT represents “bank” using both its previous and next context — “I accessed the … account” — starting from the very bottom of a deep neural network, making it deeply bidirectional.
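The difference between the two kinds of models can be sketched in a few lines of Python. This is a simplified illustration, assuming whole words as tokens; the helper names are hypothetical. `visible_context` lists the words each model may use when representing “bank”, and `attention_mask` shows the same idea as the 0/1 mask a Transformer would apply.

```python
def visible_context(tokens, index, bidirectional):
    """Return the other words a model may use when representing tokens[index].

    A unidirectional (left-to-right) model only sees earlier words;
    a bidirectional model like BERT sees the words on both sides.
    """
    left = tokens[:index]
    right = tokens[index + 1:] if bidirectional else []
    return left + right


def attention_mask(n, bidirectional):
    """mask[i][j] is 1 if position i may attend to position j (itself included)."""
    return [[1 if bidirectional or j <= i else 0 for j in range(n)] for i in range(n)]


sentence = ["I", "accessed", "the", "bank", "account"]
i = sentence.index("bank")
print(visible_context(sentence, i, bidirectional=False))  # ['I', 'accessed', 'the']
print(visible_context(sentence, i, bidirectional=True))   # ['I', 'accessed', 'the', 'account']
```

Only the bidirectional view includes “account”, the word that tells you this “bank” is a financial one rather than a riverbank.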

The BERT update builds on recent advances in machine learning, in particular the Transformer architecture (the T in BERT). In short, BERT helps Google work out the role and context of each word in a query before it retrieves results.
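The core operation inside a Transformer is self-attention: each word’s new representation is a weighted average of every word’s representation in the sentence, with weights based on how similar the words are. Here is a minimal pure-Python sketch of scaled dot-product self-attention, assuming tiny made-up 2-dimensional word vectors. Real BERT adds learned query/key/value projections, multiple attention heads, and hundreds of dimensions, all omitted here.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors.

    Each output is a weighted average of ALL input vectors (words on the left
    and on the right alike), weighted by softmax(q . k / sqrt(d)).
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-D vectors for "I accessed the bank account" (illustration only).
tokens = ["I", "accessed", "the", "bank", "account"]
embeddings = [[0.1, 0.0], [0.3, 0.2], [0.0, 0.1], [0.9, 0.8], [0.8, 0.9]]
outputs, weights = self_attention(embeddings)

bank = tokens.index("bank")
for tok, w in zip(tokens, weights[bank]):
    print(f"{tok:>8}: {w:.2f}")
```

With these toy numbers, “bank” places most of its weight on itself and on “account”, so the surrounding words shape its final representation.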

What Does BERT Mean for Search Results?

According to Google, users should see more relevant results that better match the intent behind their searches. The improvement applies to both regular results and featured snippets.

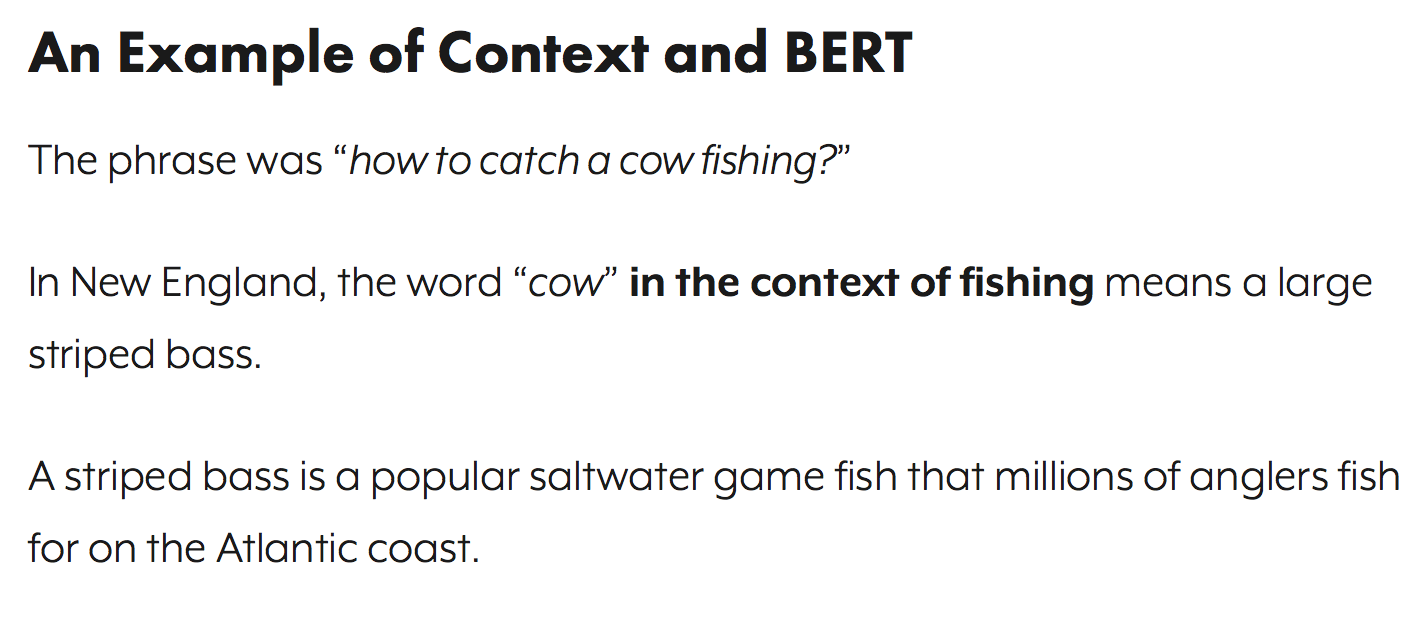

Google provided a few helpful examples in their blog entry announcing BERT.

The Esthetician Example

First up, we have a user trying to find out whether estheticians spend a lot of time on their feet at work.

Before BERT, Google read the query “do estheticians stand a lot at work” and returned a result comparing types of work environments for estheticians.

After BERT, Google surfaces an article on the physical demands of being an esthetician, which is much closer to the information the searcher was looking for.

The Math Practice Books Example

In this example, a user searches for math practice books for adults, but before BERT, Google surfaced math practice books for children.

After BERT, Google correctly recognizes the context of the query and gives proper weight to the second part of the search, “for adults.”

The Can You Pick Up Medicine For Someone Else Example

In this example, before BERT, the query “can you get medicine for someone pharmacy” returned a result about filling prescriptions in general, rather than filling them for a third party. After BERT, Google better understands the user’s goal and surfaces a page about whether a patient can have a friend or family member pick up their prescription.

What Can I Do to Rank Better after BERT?

For any keywords where you lose rankings, you should take a look at the revised search results page to better understand how Google is viewing the search intent of your target terms. Then revise your content accordingly to better meet the user’s goals.
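The first step is simply finding which keywords lost ground. Here is a minimal sketch, assuming you have exported your positions from a rank tracker for before and after the update as simple keyword-to-position mappings. The `lost_rankings` function, the data format, and the sample numbers are all hypothetical.

```python
def lost_rankings(before, after, min_drop=1):
    """Return (keyword, old_pos, new_pos, drop) rows for keywords that got worse.

    before/after map keyword -> ranking position (1 = top result).
    A keyword missing from `after` is treated as having dropped out entirely
    (new_pos None, drop infinite). Rows are sorted largest drop first.
    """
    drops = []
    for keyword, old_pos in before.items():
        new_pos = after.get(keyword)
        if new_pos is None:
            drops.append((keyword, old_pos, None, float("inf")))
        elif new_pos - old_pos >= min_drop:
            drops.append((keyword, old_pos, new_pos, new_pos - old_pos))
    return sorted(drops, key=lambda row: row[3], reverse=True)


# Hypothetical rank-tracker exports from before and after the update.
before = {
    "do estheticians stand a lot at work": 3,
    "math practice books for adults": 5,
    "esthetician salary": 2,
}
after = {
    "do estheticians stand a lot at work": 9,
    "math practice books for adults": 4,
}

for keyword, old, new, drop in lost_rankings(before, after):
    print(f"{keyword}: {old} -> {new if new is not None else 'not ranking'}")
```

Each keyword this returns is a candidate for the manual review of the current search results page described above; the script only narrows the list, and judging search intent is still up to you.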

If you lost rankings under BERT it’s more likely to be an issue related to how well your page matches a user’s search intent (helps a user reach their goal) than it is to be a content quality issue.

Given that BERT is likely to further support voice search efforts over time, we’d also recommend websites write clear and concise copy. Don’t use filler language, don’t be vague, get straight to the point.

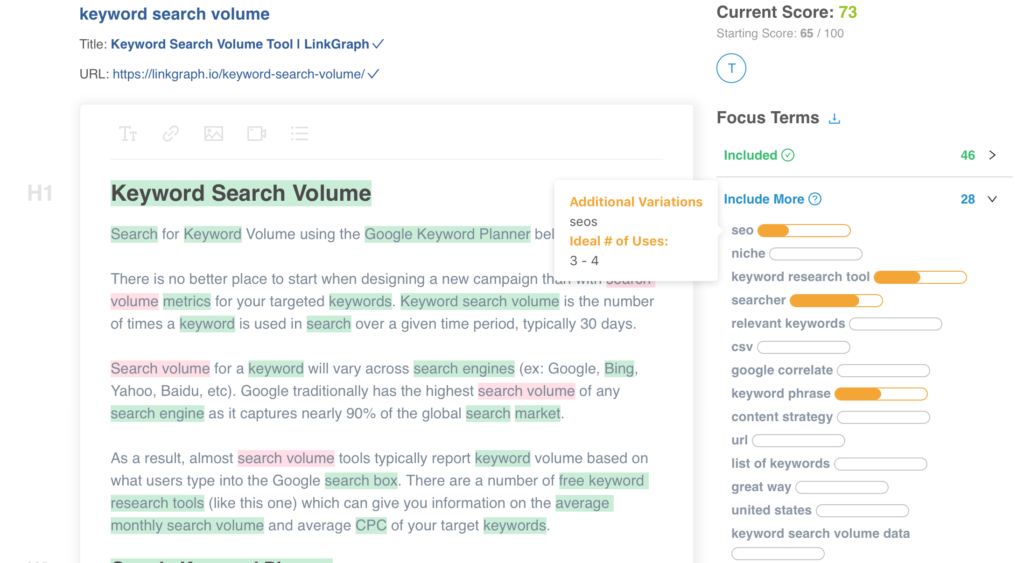

Need help optimizing your content? Check out LinkGraph’s On Page Content Optimizer, reach out to a member of our team at sales@linkgraph.io, or schedule a meeting to get set up today.

Where Can I Learn More?

Dawn Anderson gave a great presentation at Pubcon in October 2019 on “Google BERT and Family and the Natural Language Understanding Leaderboard Race,” and her slides are linked in her tweet:

Thanks for having my #Pubcon. Here is my deck <3 <3 https://t.co/aGYDI9pfdY

— Dawn Anderson (@dawnieando) October 10, 2019

Jeff Dean also recently gave a keynote on AI at Google, including BERT, which you can watch.