Maximize Crawl Budget for SEO: Boost Site Indexing Efficiency

Are you aware that how efficiently search engines crawl your website can significantly influence your online presence?

This article delves into the intricacies of optimizing your crawl budget, a key element in Search Atlas and Linkgraph SEO strategies that could make or break your site’s indexing performance. Readers will discover practical ways to assess current crawl efficiency, avoid common pitfalls that waste budget, and learn how to construct a site architecture that search engines can navigate effortlessly.

By implementing these strategies, you’ll address the pressing problem of ensuring your website’s content is found and ranked by search engines such as Google. With guidance on ongoing monitoring and maintenance, this post promises to equip you with the necessary tools to maximize your crawl budget and keep your URLs in good standing in the expansive digital universe.

Key Takeaways

- Proper crawl budget management is critical for site indexing and search visibility

- Avoiding duplicate content and redirect loops is essential for maintaining crawl efficiency

- Regularly updating and optimizing sitemaps can significantly improve site indexing

- Monitoring server performance and addressing errors can prevent wasted crawl budget

- Periodic site audits are necessary for sustained SEO performance and efficient crawling

Understanding Crawl Budget and Its Impact on SEO

At the heart of online visibility lies the concept of crawl budget, a critical factor in any site’s relationship with search engines. Crawl budget refers to the number of pages a web crawler fetches from a site during a given period and how often it returns to them. Managing it well supports efficient indexing, which in turn supports search visibility. A sitemap guides crawlers to the pages that matter, while cleaning up duplicate content keeps them from spending that budget on redundant URLs. This section covers what influences the allocation of crawl budget and how it affects site indexing and visibility.

Defining Crawl Budget in the Context of Search Engines

Within the realm of search engine optimization, the concept of a crawl budget is pivotal for webmasters aiming to maximize their site’s visibility. It pertains to the number of pages Googlebot, or other search engines’ crawlers, can and will visit on a given website within a certain timeframe. The “can” reflects how much crawling the server handles without slowing down, and the “will” reflects how much the search engine wants to crawl the site’s content. Sites with fast server responses and clean redirects tend to be crawled more, allowing for more frequent indexing and, consequently, more opportunities to rank. Crawl reports and server logs show site owners how their crawl budget is actually spent, guiding informed decisions that bolster their SEO efforts.

How Crawl Budget Affects Site Indexing and Visibility

An optimized crawl budget helps search engines index a website efficiently, boosting the site’s visibility and potential to rank higher on search engine results pages. Sitemaps and a clear information architecture help crawlers find new and updated pages, and hreflang annotations help them connect the language versions of a page. Spending crawl resources this way saves bandwidth, prevents waste on non-essential pages, and focuses the crawler’s attention on the content that truly matters to the audience.

Factors Influencing Crawl Budget Allocation

Several factors influence how a crawl budget is allocated and spent. A ‘noindex’ directive tells crawlers that a web page should not be indexed, but the crawler must still fetch the page to read the directive, so noindex alone does not free up crawl budget; a robots.txt disallow rule is what stops a URL from being fetched in the first place. Streamlining navigation and reducing HTTP errors helps crawlers reach and cache content effectively, making the most of the allocated budget. Server performance matters as well: slow or unstable responses reduce how much a crawler is able to fetch, which hurts overall site visibility.

Grasping the importance of crawl budget in SEO is only half the battle. Now, let’s uncover the stealthy culprits that wither this precious resource.

Identifying Factors That Waste Crawl Budget

Maximizing a website’s crawl budget necessitates an understanding of elements that lead to its depletion. Issues such as duplicate content, infinite URL spaces due to faceted navigation, and redirect loops contribute to unnecessary crawl budget expenditure. Data from Google Search Console and server logs, combined with a careful examination of XML sitemaps, can reveal soft error pages and low-quality content that hinder SEO efficiency. The upcoming sections dissect these aspects and provide targeted strategies for preserving a site’s crawl budget for what counts.

Duplicate Content Issues

Duplicate content dilutes search visibility and can cause significant portions of a website’s crawl budget to be squandered. When web crawlers encounter multiple pages with substantially similar or identical content, they expend valuable resources on non-unique pages, diminishing the opportunity to index more pertinent sections of a site. Canonical tags direct search engines to the original and most relevant version of a page, and consolidating or redirecting true duplicates removes the extra URLs altogether. The “nofollow” attribute is a hint about individual links rather than a fix for duplication, so it is best used sparingly.
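As a rough illustration of how exact duplicates can be spotted before a crawler finds them, the sketch below hashes each page’s normalized body text and groups URLs that share a hash. The `pages` dictionary and its URLs are made-up sample data, and the function names are hypothetical; near-duplicates with small wording differences would need a fuzzier comparison than this.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized body text is identical."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[content_fingerprint(body)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample data: URL -> extracted body text.
pages = {
    "/shoes": "Red running shoes for every distance.",
    "/shoes?ref=footer": "Red  running shoes for every distance. ",
    "/boots": "Waterproof hiking boots.",
}

for group in find_duplicate_groups(pages):
    print("Same content:", group)
```

Each group that comes back is a candidate for a canonical tag or a redirect to a single preferred URL.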

Infinite URL Spaces and Faceted Navigation

Infinite URL spaces, often stemming from faceted navigation systems, can dramatically deplete a website’s crawl budget. When parameters for sorting and filtering create countless URL combinations, crawlers may fetch the same listing over and over under different addresses. Google Search Console’s crawl statistics can expose this pattern, giving site owners actionable data for streamlining HTML link structures so that crawl budget goes to distinct pages.
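To get a feel for how quickly faceted navigation multiplies URLs, a small script can count how many query-string variants exist for each path in a crawl export or log file. This is a minimal sketch: the `urls` list is invented sample data and `count_url_variants` is a hypothetical helper, not part of any SEO tool.

```python
from urllib.parse import urlsplit


def count_url_variants(urls):
    """Count the distinct query strings seen for each URL path."""
    variants = {}
    for url in urls:
        parts = urlsplit(url)
        variants.setdefault(parts.path, set()).add(parts.query)
    return {path: len(queries) for path, queries in variants.items()}


# Hypothetical sample: one category page reached through several filters.
urls = [
    "https://example.com/shirts",
    "https://example.com/shirts?color=red",
    "https://example.com/shirts?color=red&sort=price",
    "https://example.com/shirts?sort=price&color=red",
    "https://example.com/about",
]

for path, total in count_url_variants(urls).items():
    print(path, total)
```

Note that the last two shirt URLs differ only in parameter order, yet a crawler sees them as separate addresses. Paths with a high variant count are the ones worth reviewing first.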

Soft Error Pages and Redirect Loops

Soft error pages and redirect loops present significant hurdles in preserving a website’s crawl budget, and they are often overlooked. A soft error page (a “soft 404”) returns a success status code while showing error or empty content, so the crawler spends budget fetching a page that offers nothing to index. Redirect loops, created by improper configurations on the web server, send the crawler in circles until it gives up, consuming time and resources and reducing the frequency with which valuable content is indexed. By carefully auditing and correcting these errors, a website can ensure optimal user experience and efficient use of its crawl budget.
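Redirect loops are easy to check for offline once the redirect rules are exported as a simple source-to-target mapping. The sketch below walks such a mapping and reports whether a starting URL resolves cleanly, loops, or exceeds a hop limit. The `redirects` dictionary, the function name, and the limit of 10 hops are all assumptions chosen for illustration; it does not cover soft error pages, which need a look at the page content itself.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Walk a redirect map from `start`.

    Returns (path, status), where status is "ok", "loop" or "too_long".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    return path, "ok"


# Hypothetical redirect rules: source path -> target path.
redirects = {
    "/old": "/older",
    "/older": "/old",  # points back to /old, forming a loop
    "/a": "/b",
    "/b": "/c",        # /a -> /b -> /c is a two-hop chain
}

print(follow_redirects("/old", redirects))
print(follow_redirects("/a", redirects))
```

The same walk also exposes long chains: any path with more than two entries could be shortened by pointing the first URL straight at the final destination.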

Low-Quality or Thin Content Pages

Content that lacks depth or offers little value—known as low-quality or thin content—can seriously affect a site’s crawl efficiency. When a spider encounters numerous low-value pages, such as underperforming landing pages or content that does not adequately address user queries, it squanders bandwidth that could be better used crawling new or updated high-value pages. A discerning webmaster, with an eye toward digital marketing efficacy, will evaluate page performance and prune content that does not meet the threshold for quality, directing the spider’s efforts towards pages that truly enhance user engagement and SEO performance.

Identifying and addressing thin content not only preserves crawl budget but also aligns with the goal of delivering a superior user experience:

| Page Type | Action | Outcome |
|---|---|---|
| Underperforming Landing Page | Re-assess and optimize | Improves relevance and user engagement |
| Duplicate or Stale Content | Remove or update | Enhances content freshness and value |
| Irrelevant or Off-topic Pages | Refine or consolidate | Aligns with user interests and search intent |

Recognizing what depletes your crawl budget is only the first step. Let’s shift our focus to evaluating how effectively your website uses its current allocation.

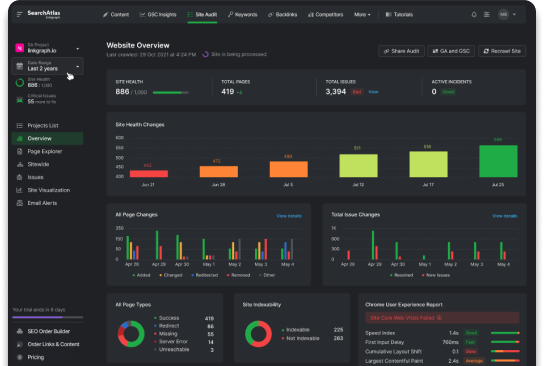

Assessing Your Website’s Current Crawl Efficiency

To bolster the efficiency of SEO and site indexing, assessing a website’s current crawl efficiency is imperative. By delving into Google Search Console for crawl stats, webmasters can gauge the crawl rate and demand, a reflection of their site’s health in the eyes of search engines. Server log analysis reveals which URLs crawlers actually request, exposing crawl errors and bottlenecks. Additionally, evaluating server performance and analyzing response codes with the assistance of a site audit tool can pinpoint areas needing improvement. Each element, from user agent behaviors to server responsiveness, plays a vital role in optimizing a site’s visibility and search performance.

Utilizing Google Search Console for Crawl Stats

Google Search Console provides invaluable insights into the crawl stats of a website, which is pivotal for understanding and optimizing its architecture for search engines. By reviewing crawl errors and the average response time, a webmaster can tweak content marketing strategies to better align with user behaviors and refine their website to prioritize valuable content. This process, coupled with informed keyword research, directly influences how often a web crawler visits the site, improving indexation and SEO performance. These actionable metrics guide users toward enhancing their site’s appeal to both web crawlers and visitors:

- Investigate crawl errors to identify and fix problems causing poor indexation.

- Analyze response times and server health to improve website speed and crawler efficiency.

- Review sitemap coverage to ensure all important content is being crawled and indexed.

Interpreting Crawl Rate and Crawl Demand Data

Interpreting crawl rate and demand data is vital for any website’s SEO strategy. A tool like Semrush can offer deep insight into how frequently search engine bots navigate a website, revealing opportunities for optimization in response to end user behavior. By evaluating these metrics, site owners can ascertain how their content resonates with both crawlers and audiences, guiding enhancements in site structure and content discoverability:

- Analyze the crawl rate to identify how often search engine bots visit your site.

- Understand crawl demand to prioritize content updates and publication frequency.

- Study navigation patterns to ensure an intuitive user experience for both bots and users.

Identifying Crawl Errors and Bottlenecks

Identifying crawl errors and bottlenecks requires meticulous research and analysis of a website’s technical elements. Webmasters can use tools to assess how effectively a search engine’s crawler navigates their site by analyzing parameters such as the frequency of crawl errors, which can signal broken links or issues with server connectivity. Addressing these bottlenecks not only improves a site’s crawl efficiency but also enhances the overall user experience, leading to potentially higher search rankings and more organic traffic:

| Error Type | Common Causes | Potential Impact on Crawl Budget |
|---|---|---|
| 4xx errors | Broken links, incorrect URLs | Wastes crawl budget on inaccessible pages |
| 5xx errors | Server issues | Causes crawlers to temporarily abandon the site |
| Incorrectly blocked URLs | Improper use of robots.txt or noindex tags | Prevents crawling (robots.txt) or indexing (noindex) of valuable content |
| Redirect loops | Flawed redirect implementation | Consumes crawl resources in futile loops |

Evaluating Server Performance and Response Codes

Evaluating server performance and response codes is an integral part of optimizing a website’s crawl budget. Swift server responses facilitate a more efficient crawl process, ensuring that search engines can access content without delay. By scrutinizing response codes, site owners can quickly address issues indicated by 4xx and 5xx status codes, which can otherwise stall or prevent web crawlers from indexing valuable content. Monitoring these aspects is essential for maintaining a healthy, search-friendly website:

- Analyze server logs to track response time trends and address slow responses.

- Identify and resolve common 4xx errors, such as 404 Not Found, to prevent wasted crawl efforts on nonexistent pages.

- Correct server-side 5xx errors, ensuring consistent accessibility for both users and search engine crawlers.
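The checks above can be approximated with a few lines of log parsing. The sketch below assumes the server writes the common “combined” access log format and that matching the string `Googlebot` in the user agent is good enough for a first pass (user agents can be spoofed, so a serious audit would verify the requesting IP as well). The sample log lines are invented.

```python
import re
from collections import Counter

# Pulls the request path, status code and user agent out of a
# combined-format access log line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_status_counts(lines, agent_token="Googlebot"):
    """Tally response status classes (2xx, 4xx, ...) for one crawler."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match and agent_token in match.group("agent"):
            counts[match.group("status")[0] + "xx"] += 1
    return counts


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical log lines for illustration only.
sample_log = [
    f'66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /products HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Mar/2024:06:25:20 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT}"',
    '203.0.113.9 - - [10/Mar/2024:06:26:01 +0000] "GET /products HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [10/Mar/2024:06:27:45 +0000] "GET /checkout HTTP/1.1" 503 0 "-" "{GOOGLEBOT}"',
]

print(dict(crawler_status_counts(sample_log)))
```

A rising share of 4xx or 5xx responses in this tally is a signal that crawl budget is going to pages that cannot be indexed.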

Now that we’ve measured the efficiency of our site’s crawlability, let’s turn our attention to enhancement. The next section lays out hands-on tactics to maximize your crawl budget, ensuring search engines prioritize your content.

Strategies to Optimize Crawl Budget for Better Indexing

Optimizing a website’s crawl budget effectively boosts its SEO and indexing efficiency. Strategies such as prioritizing high-quality content pages, executing effective URL structures, and managing parameterized URLs with robots.txt and canonical tags form the foundation. Reducing redirect chains and 404 errors, coupled with improving site speed and server responsiveness, are also critical measures. The forthcoming sections will delve into these techniques, offering insights on how they maximize visibility and enhance overall search engine performance.

Prioritizing High-Quality Content Pages

To optimize a website’s crawl budget, one effective strategy is focusing on its high-quality content pages. By ensuring the most valuable and relevant pages receive the attention of web crawlers, site owners can improve indexing efficiency and consequently, their SEO standings. This prioritization is achieved by auditing existing content to identify pages that provide substantial information, solve user problems, or offer in-depth insights, and then updating the sitemap and internal linking structure to highlight these pages to search engines.

Implementing this strategy includes maintaining an organized content hierarchy and implementing metadata with precision, allowing search engines to discern the significance and relevance of each page easily:

| Content Quality | Action | SEO Benefit |
|---|---|---|
| High-value Informational Content | Enhance visibility in sitemap | Ranks for target queries, attracts organic traffic |
| Detailed Resource Pages | Improve internal linking | Increases page authority, encourages deeper engagement |
| Data-Driven Analysis Pages | Optimize on-page SEO factors | Strengthens topical relevance, improves SERP positioning |

Implementing Effective URL Structures

Crafting effective URL structures is a cornerstone of maximizing a website’s crawl budget, where clear, descriptive, and keyword-rich URLs differentiate valuable content and streamline a search engine’s indexing process. Webmasters enhance SEO and site efficiency by avoiding overly complex URLs, reducing dynamic URL strings, and using hyphens instead of underscores to improve readability for both users and crawlers. This focus on URL clarity ensures the most important content is discovered and prioritized by web crawlers, thereby driving more targeted traffic to a site.

Managing Parameterized URLs With Robots.txt and Canonicals

Effective management of parameterized URLs is essential in optimizing a crawl budget, and this can be accomplished using robots.txt and canonical tags. By instructing search engines with robots.txt to avoid unnecessary parameters, and designating preferred URLs with canonical tags, site owners can prevent the wastage of crawl budget on duplicate or similar content. Because a crawler cannot read the canonical tag on a URL it is disallowed from fetching, it is best to pick one mechanism per URL pattern rather than applying both to the same page. This approach not only promotes more efficient indexing by focusing on primary content pages, but also enhances a website’s SEO by preventing confusion over which pages should rank in search results.
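One way to produce consistent canonical tags is to derive the preferred URL by dropping parameters that do not change the page’s content. The sketch below shows the idea; the parameter names in `NON_CANONICAL_PARAMS` are assumptions and would need to match the parameters a given site actually uses, and both function names are hypothetical.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only sort or track; adjust per site.
NON_CANONICAL_PARAMS = {"sort", "order", "utm_source", "utm_medium", "sessionid"}


def canonical_url(url):
    """Drop non-canonical parameters and put the rest in a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Build the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_link_tag("https://example.com/shirts?sort=price&color=red&utm_source=mail"))
print(canonical_link_tag("https://example.com/shirts?sort=price"))
```

Sorting the remaining parameters means that two URLs differing only in parameter order resolve to the same canonical address.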

Reducing Redirect Chains and 404 Errors

Minimizing the occurrence of redirect chains and 404 errors is a practical step in fine-tuning a website’s crawl budget. Tightening up redirects ensures search engines expend energy on live, relevant pages rather than losing time in a maze of unnecessary redirects that can erode crawler efficiency. Additionally, addressing and repairing 404 errors prevents the waste of resources on dead ends, thereby preserving the crawl budget for content that fulfills user queries and bolsters a site’s SEO standings.

Enhancing Site Speed and Server Responsiveness

Enhancing site speed and server responsiveness is a critical component of a crawl budget optimization strategy. A swift server response lets search engines access and index website content quickly, helping a website remain competitive in search engine results pages. Practical measures such as optimizing images, leveraging browser caching, and minimizing JavaScript can noticeably improve site speed, thus aiding crawlers in efficiently navigating and indexing a site’s content.

Perfecting your crawl budget sets the stage. Next, we fortify the scaffolding of your site’s architecture to lift your content higher in search engine visibility.

Enhancing Site Architecture for Improved Crawlability

Enhancing site architecture plays a critical role in optimizing crawl budget and, by extension, SEO efficiency. Critical steps include creating a logical internal linking framework, ensuring crawlers navigate with ease; updating and submitting sitemaps regularly to keep search engines informed of site changes; using robots.txt to guide crawlers effectively, avoiding unnecessary page scans; and leveraging XML sitemaps for highlighting priority pages. These measures collectively support more precise indexing, directly benefiting a site’s visibility in search results.

Creating a Logical Internal Linking Framework

Establishing a logical internal linking framework is essential for any site aiming to optimize its crawl budget and bolster SEO. By designing an intuitive link structure, site owners ensure that search engine crawlers can navigate the site’s content seamlessly, highlighting valuable pages and boosting overall site credibility. Capitalizing on this can lead to better indexing as web crawlers understand the hierarchy and relationship between pages. The impact is twofold: it improves user experience, keeping visitors engaged longer and decreasing bounce rates, and it enhances a search engine’s ability to prioritize and index important content efficiently:

- Audit and map out the website’s structure to identify and strengthen connections between related content.

- Employ breadcrumb navigation to make the site’s layout transparent for users and search engines alike.

- Utilize internal linking to distribute page authority throughout the site, especially to cornerstone content that drives traffic and conversions.
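The audit step in the list above can be sketched as a breadth-first walk over the site’s internal links, which yields each page’s click depth from the homepage and exposes pages that no link path reaches. The `links` graph below is invented sample data, and `click_depths` is a hypothetical helper; in practice the graph would come from a crawl export.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search giving each reachable page's distance from `start`."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/crawl-budget"],
    "/products": ["/products/widget"],
    "/products/widget": ["/products/widget/specs"],
    "/orphan": ["/"],  # links out, but nothing links to it
}

depths = click_depths(links)
orphans = set(links) - set(depths)

print(depths)
print("Not reachable from the homepage:", orphans)
```

Pages that sit many clicks deep, or that turn up in `orphans`, are natural candidates for additional internal links or breadcrumb entries.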

Updating and Submitting Sitemaps Regularly

Regularly updating and submitting sitemaps directly influences a website’s search engine optimization and indexation efficiency. This practice ensures that search engines are promptly notified of new or modified content, facilitating quicker indexing and better visibility. Maintaining an accurate and current sitemap aids web crawlers in understanding the structure of a site, enabling a more strategic allocation of crawl budget to the content that truly drives traffic and engagement.
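Keeping a sitemap current is easier when it is generated rather than edited by hand. The following sketch builds a small XML sitemap with Python’s standard library; the URLs and dates are placeholders, `build_sitemap` is a hypothetical helper, and a real site would feed it from its CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (absolute URL, YYYY-MM-DD last-modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder entries for illustration.
entries = [
    ("https://example.com/", "2024-03-10"),
    ("https://example.com/blog/crawl-budget", "2024-03-08"),
]

sitemap_xml = build_sitemap(entries)
print(sitemap_xml)
```

Regenerating the file whenever content changes keeps the `lastmod` values accurate, which is what makes them useful to a crawler.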

Utilizing Robots.txt to Guide Crawlers Appropriately

Utilizing Robots.txt to guide crawlers appropriately serves as a strategic move for webmasters aiming to enhance their site’s crawlability and maximize crawl budget efficiency. This text file, located at the root of a website, directs search engine crawlers toward or away from specific parts of the site, preventing the waste of crawl resources on irrelevant pages. By issuing clear directives to search engines, site owners can ensure crawlers focus on indexing the most valuable content, thus improving a website’s SEO and overall visibility:

| Action | Robots.txt Directive | Intended Outcome |
|---|---|---|
| Prevent crawling of private pages | Disallow: /private/ | Keeps compliant crawlers out of that path; it is not access control, so sensitive areas still need authentication |
| Allow full site access | Allow: / | Encourages comprehensive indexing of site content |
| Exclude resource-intensive pages | Disallow: /gallery/scripts/ | Conserves crawl budget for essential pages |
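Before deploying rules like those in the table, it helps to test them against a handful of real paths. The sketch below uses Python’s built-in `urllib.robotparser` to do that; the `example.com` host, the test paths, and the `is_crawlable` helper are assumptions for illustration. Python’s parser applies the first matching rule and does not implement wildcard patterns, so its verdicts may differ from a search engine’s own parser on more complex files.

```python
from urllib.robotparser import RobotFileParser

# The directives from the table, with the specific rules listed first.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /gallery/scripts/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path, agent="Googlebot"):
    """Check a path on a hypothetical host against the rules above."""
    return parser.can_fetch(agent, "https://example.com" + path)


for path in ["/blog/post", "/private/account", "/gallery/scripts/app.js"]:
    print(path, is_crawlable(path))
```

Running a check like this as part of a release process can catch a rule that accidentally blocks valuable content.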

Leveraging XML Sitemaps for Priority Pages

Leveraging XML sitemaps for priority pages is a crucial step towards enhancing site architecture and maximizing crawl budget efficiency. By listing these high-value pages in an XML sitemap, webmasters tell search engines which content matters most, helping the most important information get discovered and indexed quickly. Search engines treat a sitemap as a hint rather than a command, so it works best alongside strong internal linking. This approach not only streamlines the crawling process but also helps improve a site’s visibility and ranking potential.

To better understand how to prioritize content through XML sitemaps, consider the following structure that illustrates the relationship between content significance and crawler focus:

| Content Priority | Frequency of Updates | XML Sitemap Inclusion |
|---|---|---|
| High | Daily/Weekly | Essential |
| Medium | Monthly | Recommended |
| Low | Rarely | Optional |

Site owners, through strategic sitemap submissions, effectively communicate to crawlers the pages that host key content, thus directing their resources where it benefits the SEO objectives the most.

Strengthened architecture paves the way for spiders to traverse with ease. The true test lies in vigilance: ongoing monitoring is the heartbeat of a healthy site.

Ongoing Monitoring and Maintenance

Ongoing monitoring and maintenance are crucial for ensuring that the optimization of crawl budgets translates into tangible SEO benefits. Regularly reviewing crawl statistics and logs helps in uncovering insights on how search engine bots interact with the website. Staying updated with search engine guidelines guarantees that strategies remain effective in the fast-evolving SEO landscape. Adjustments based on performance metrics enable the fine-tuning of tactics to enhance site indexing efficiency. Conducting periodic site audits is necessary for continuous improvement and maintaining a competitive edge in search rankings.

Regularly Reviewing Crawl Statistics and Logs

Regular analysis of crawl statistics and logs is a pivotal task for webmasters seeking to maintain optimal SEO performance. This continuous monitoring process provides data-driven insights into how search engine crawlers interact with their sites, allowing the identification and rectification of issues before they impinge on the site’s indexing or user experience. A nimble approach to interpreting these analytics can lead to quicker adjustments in SEO strategies, ensuring that a website remains both visible and attractive to its intended audience. To keep track of these metrics, consider the following actions:

- Examine server log files to discern the behavior of search engine crawlers on the website.

- Utilize Google Search Console’s Page indexing report (formerly called Coverage) and its Crawl Stats report to identify crawled pages and any crawl anomalies.

- Track crawl frequency over time to understand and anticipate the indexing pattern of search engines.
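Tracking crawl frequency over time, as the last item suggests, can start with a simple daily count of crawler requests in the access log. This sketch assumes combined-format log timestamps such as `[10/Mar/2024:06:25:14 +0000]`, English month abbreviations, and a plain substring match on the user agent; the log lines are invented and `crawler_hits_per_day` is a hypothetical helper.

```python
import re
from collections import Counter
from datetime import datetime

# Captures the date portion of a combined-format log timestamp.
TIMESTAMP = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def crawler_hits_per_day(lines, agent_token="Googlebot"):
    """Count requests per calendar day for lines mentioning the crawler."""
    hits = Counter()
    for line in lines:
        if agent_token not in line:
            continue
        match = TIMESTAMP.search(line)
        if match:
            day = datetime.strptime(match.group(1), "%d/%b/%Y").date()
            hits[day.isoformat()] += 1
    return dict(sorted(hits.items()))


# Hypothetical log lines for illustration only.
crawl_log = [
    '66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET / HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [10/Mar/2024:09:02:51 +0000] "GET /blog HTTP/1.1" 200 734 "-" "Googlebot/2.1"',
    '203.0.113.9 - - [10/Mar/2024:09:03:10 +0000] "GET /blog HTTP/1.1" 200 734 "-" "Mozilla/5.0"',
    '66.249.66.1 - - [11/Mar/2024:05:44:30 +0000] "GET /products HTTP/1.1" 200 901 "-" "Googlebot/2.1"',
]

print(crawler_hits_per_day(crawl_log))
```

Plotting these daily counts over several weeks makes sudden drops or spikes in crawl activity easy to spot.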

Staying Updated With Search Engine Guidelines

Staying informed about the latest search engine guidelines is a cornerstone of maintaining an optimized crawl budget and, by extension, robust site indexing. The digital landscape evolves swiftly, and search engines frequently update their algorithms and best practices. Webmasters who keep abreast of these changes stand a better chance of aligning their site’s structure and content with the current standards, ensuring their SEO strategies remain effective and their websites retain prominence in search results.

Adjusting Strategies Based on Performance Metrics

Adjusting strategies based on performance metrics is essential for webmasters intent on fine-tuning their SEO and crawl budget efficiency. The continuous evaluation of metrics such as crawl rates, index coverage, and search rankings provides actionable feedback, informing strategic shifts that can bolster site performance. For instance, a dip in crawl frequency might prompt a site owner to streamline navigation or enhance content quality, ensuring that web crawlers spend their budgets on pages that yield the most significant SEO gains.

Conducting Periodic Site Audits for Continuous Improvement

Conducting periodic site audits is a proactive measure that comes with considerable SEO benefits, fostering continuous improvement in crawl budget optimization. During these audits, webmasters can identify and correct technical flaws that impede search engine crawlers, such as broken links or misconfigured redirects, thereby enhancing the site’s indexing efficiency. This strategic approach not only smooths the pathway for search crawlers but also boosts the user experience, contributing to better search rankings and increased web traffic.

Frequently Asked Questions

A crawl budget refers to the number of pages a search engine’s bot will crawl on your website within a given timeframe, affecting the site’s indexing and subsequent search visibility.

Poor crawl efficiency can hinder search engines from indexing content effectively, potentially lowering your site’s visibility and ranking in search results.

To optimize crawl budget, prioritize high-value pages, update content regularly, ensure swift site loading, and eliminate duplicate pages to enhance search engine crawling efficiency.

Site architecture enables search engines to discover and index web pages efficiently, thus impacting a site’s crawlability by providing a clear, navigable structure.

Regular monitoring of crawl budget is crucial for SEO as it ensures search engines index content efficiently, boosting site visibility and improving search rankings.

Conclusion

Optimizing your crawl budget is crucial for improving SEO and ensuring efficient site indexing, as it allows search engines to allocate resources to your most valuable pages. Employing strategies such as managing duplicate content, enhancing site speed, and crafting logical internal linking can drastically improve a website’s visibility and search ranking. Regular updates and monitoring of sitemap submissions, along with addressing errors and bottlenecks, are essential for maintaining a search-friendly site architecture. Ultimately, a well-managed crawl budget increases a site’s potential to attract relevant, organic traffic by prioritizing content that best serves user queries and interests.