Demystifying Site Audits in Technical SEO


Unlocking the Mysteries of Technical SEO Site Audits

Navigating the depths of technical SEO can be a formidable endeavor, yet the rewards for a well-audited website are substantial.

At the heart of improving website visibility and search engine rankings lies a rigorous technical SEO site audit, an intricate process that unveils critical insights for on-page and off-page optimization.

LinkGraph’s comprehensive approach to technical audits provides clients with the means to identify and address SEO issues that hinder a website’s performance.

Their tailored strategies ensure each aspect of a website is scrutinized, from site architecture to metadata efficacy.

Keep reading to understand how a thorough technical SEO audit can transform your online presence.

Key Takeaways

- Technical SEO Site Audits Are Essential for Optimizing Website Performance and Search Engine Rankings

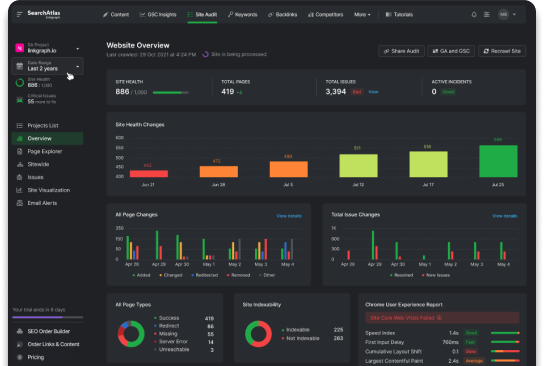

- LinkGraph’s SEO Services Use Advanced Tools Like SearchAtlas SEO Software for Comprehensive Audit Capabilities

- Mobile-Optimized Sites Are Vital in Today’s Digital Landscape, and Technical Audits Must Confirm Cross-Platform Functionality

- Implementation of Structured Data Through SEO Services Enhances Visibility and Search Engine Communication

- Effective Use of Meta Robots Tags Can Guide Search Engine Crawlers and Improve a Website’s Indexing Strategy

Setting the Stage for Your Technical SEO Audit

Embarking on a technical SEO site audit demands a precise understanding of the undertaking’s scope and the objectives it aims to achieve.

Professional auditors begin by identifying the key performance indicators that align with the client’s digital growth goals.

This meticulous approach is foundational in establishing a well-defined timeline, ensuring the process is both efficient and comprehensive.

For those serious about improving their website’s compatibility with search engine algorithms, a structured audit lays the groundwork for better online visibility and user engagement.

Understanding the Scope and Goals of an Audit

A technical SEO site audit crystallizes the path for strategic improvements, and understanding both its scope and goals is imperative for results that translate into real-world efficacy. By delineating the breadth of the audit, the client and SEO specialist establish a common ground on the meticulous checks performed, from site speed analysis to URL inspection protocols.

Goals are paramount, setting the benchmarks for success, outlining clear metrics for evaluating website health and aligning these aims with the overarching objectives of the business. It is this clarity that transforms a technical SEO audit from a mere diagnostic exercise into a powerful fulcrum for bolstering search engine ranking and amplifying user experience.

Identifying Key Performance Indicators for Assessment

At the heart of a technical SEO audit, identifying key performance indicators (KPIs) is paramount for discerning how effectively a website performs in organic search. SEO specialists select these KPIs based on their established relationship to search engine visibility and organic traffic growth, providing a clear lens through which to scrutinize a site’s SEO health.

Metrics such as page speed, crawl errors, and the delicate balance of on-page elements vie for attention under the scrutiny of a seasoned auditor wielding SearchAtlas SEO tools. With these metrics in clear focus, LinkGraph’s SEO services meticulously chart the course toward optimizing a website to meet the stringent demands of modern search engines and the expectations of users.

Establishing a Timeline for the Audit Process

When preparing for a technical SEO site audit, pinpointing a feasible timeline stands as a critical step, one that requires acute forethought and strategy. LinkGraph’s seasoned SEO specialists collaborate with clients, designating appropriate milestones and deadlines to facilitate a seamless audit process that honors the company’s operational cadence.

Determining an optimal timeline for an SEO audit necessitates a balanced approach that considers the complexity of tasks and the imperatives of the digital market. The dynamic team at LinkGraph adeptly negotiates these variables, ensuring timelines are realistic yet ambitious, tailored to achieve measurable results within a structured, predictable framework.

Essential Tools and Software for Technical Audits

In the realm of technical SEO, leveraging the appropriate tools and software is not just advantageous—it’s imperative.

These digital instruments are the explorers’ compasses, guiding auditors through the intricate labyrinth of data that constitutes a website’s SEO profile.

Pursuing optimization without these technologies is akin to navigating uncharted territory without a map.

As professionals initiate the audit, selecting the right SEO audit software becomes crucial, as does using webmaster tools for deeper insights.

Meanwhile, Google Analytics stands as an unwavering ally, decrypting the enigma of traffic patterns.

Equally critical is the implementation of a trustworthy crawler tool—a steadfast beacon that illuminates the hidden crevices impeding a site’s search engine ascendancy.

Selecting the Right SEO Audit Software

When the task at hand is to distill complexities into actionable insights, the choice of SEO audit software becomes pivotal. A robust platform, such as LinkGraph’s SearchAtlas SEO software, presents a full spectrum of diagnostic features that align with ever-evolving search engine algorithms, streamlined for the scrupulous and nuanced task of technical SEO diagnostics.

Selecting the precise SEO audit software entails scrutinizing its capacity to perform critical tasks with unerring accuracy and depth:

- Assessment of indexability to ensure search engines can seamlessly crawl and list a website’s content.

- Thorough examination of on-page SEO elements, from meta tags to content quality and structure.

- Advanced capabilities to track down and analyze backlink profiles through comprehensive link building and free backlink analysis features.

Ultimately, the chosen software must include insightful reporting mechanisms that offer clarity and direction for strategic decision-making. Scope and scalability also matter, because they determine how well the software can accommodate growth and the varied demands of different clients, from local businesses to global enterprises seeking white label SEO services.

Utilizing Webmaster Tools for Insightful Data

In the intricate dance of technical SEO site audits, webmaster tools play a pivotal role, serving as the gateway to invaluable data that underpins strategic decision-making. LinkGraph’s SEO specialists harness these tools, including Google Search Console and Bing Webmaster Tools, to understand how search engine crawlers interact with a site, providing a clearer picture of the website’s visibility and indexing status.

Insights into critical SEO elements like sitemap submissions, security issues, and the successful retrieval of a site’s URLs emerge through diligent use of such comprehensive platforms. SearchAtlas SEO software integrates these insights, yielding a holistic view that equips website owners and marketers with the knowledge necessary to refine their SEO tactics and improve organic search presence.

Leveraging Google Analytics for Traffic Analysis

Within the panorama of technical SEO audits, Google Analytics emerges as a crucial tool, unearthing patterns and trends in website traffic that inform the strategic direction of optimization efforts. Through its nuanced tracking capabilities, LinkGraph’s SEO specialists extract rich, actionable data that shapes an informed approach to enhancing search engine visibility and user engagement.

Analysis furnished by Google Analytics transcends mere numbers, offering a narrative of user behavior and interactions that SEO services can distill into performance enhancement tactics. With a focus on the metrics that are most indicative of SEO success, such as traffic sources and user flow, SearchAtlas SEO software takes these analyses and melds them into the broader tapestry of comprehensive audit findings.

The Importance of a Reliable Crawler Tool

A reliable crawler tool is indispensable in the technical SEO site audit process, acting as the eyes and hands of the SEO specialist within the digital landscape. Such tools expose a site’s architecture to the examiner, revealing how search engine bots navigate and perceive the site’s structure, content, and hierarchy.

Employing a capable crawler tool is like having an indefatigable colleague tirelessly mapping every reachable page, unearthing crawl errors, and confirming that proper redirects are in place. It provides a comprehensive report on a myriad of technical elements that, when improved, can lead to significant gains in search engine ranking performance, aligning with the client’s desired outcomes.
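To make the crawler’s role concrete, here is a minimal Python sketch of a breadth-first crawl. It assumes a hypothetical `fetch(url)` callable that returns `(status_code, html)`, so any HTTP client can be plugged in; it does not honor robots.txt, throttle requests, or render JavaScript, which production crawlers such as Screaming Frog can do. It records each page’s status code, its click depth from the start URL, and the same-host links it contains.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url, fetch, max_pages=500):
    """Breadth-first crawl restricted to the start URL's host.

    `fetch(url)` is a hypothetical callable returning (status_code, html_text).
    Returns {url: {"status": int, "depth": int, "links": [absolute urls]}}.
    """
    host = urlparse(start_url).netloc
    results = {}
    queue = deque([(start_url, 0)])
    seen = {start_url}
    while queue and len(results) < max_pages:
        url, depth = queue.popleft()
        status, html = fetch(url)
        links = []
        if status == 200 and html:
            collector = _LinkCollector()
            collector.feed(html)
            for href in collector.hrefs:
                # Resolve relative links and drop #fragments before comparing.
                absolute, _ = urldefrag(urljoin(url, href))
                if urlparse(absolute).netloc == host:
                    links.append(absolute)
                    if absolute not in seen:
                        seen.add(absolute)
                        queue.append((absolute, depth + 1))
        results[url] = {"status": status, "depth": depth, "links": links}
    return results
```

Passing a dictionary-backed `fetch`, for example `lambda u: pages.get(u, (404, ""))`, lets you exercise the logic offline before pointing it at a live site.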

Crawling Your Website: The First Step in SEO Audits

Embarking on the journey of a technical SEO site audit signifies the initiation of a deeper exploration into the inner workings of a website’s digital landscape.

At the forefront of this exploratory mission lies the web crawling process—akin to charting the vast seas for navigational hazards.

By setting up an adept crawler for a comprehensive scan, LinkGraph’s SEO specialists deftly unveil the hidden impediments that could be stifling a website’s SEO potential.

As the audit unfolds, the identification of crawl errors emerges as a pivotal step, shedding light on the barriers that prevent search engines from fully understanding and valuing the site’s content.

Interpreting the data harvested from this site crawl is not merely about amassing information; it is about translating these findings into actionable insights that fuel informed decision-making and strategic adjustments.

Each of these processes contributes to demystifying the complex elements that shape a site’s SEO, paving the way for enhancement and success in the search engine results page.

Setting Up Your Crawler for a Comprehensive Scan

For SEO specialists embarking on a technical audit, configuring a crawler for thorough inspection is critical. LinkGraph’s SEO services advise deploying SearchAtlas SEO software to penetrate the digital depths of a website, probing for structural anomalies and bottlenecks that disrupt search engine indexing and ranking efficacy.

Once launched, a crawler such as Screaming Frog or LinkGraph’s custom tools systematically traverses each reachable web page. It evaluates crucial SEO elements like status codes and link health, paving the way for targeted interventions that align with search engine best practices and user expectations.
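Checking status codes well means seeing redirects rather than silently following them. The sketch below, using only Python’s standard library, sends a `HEAD` request with redirect-following disabled, so a 301 or 302 is reported along with its `Location` header. The function and class names are illustrative, and some servers answer `HEAD` incorrectly, so a real audit may need to fall back to `GET`.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 3xx codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


# build_opener replaces the default redirect handler with our subclass.
_OPENER = urllib.request.build_opener(_NoRedirect)


def check_status(url, timeout=10.0):
    """Return (status_code, location_header) for a HEAD request to `url`.

    Redirects and 4xx/5xx responses are reported instead of followed or raised.
    Returns (None, None) if the host cannot be reached or times out.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with _OPENER.open(request, timeout=timeout) as response:
            return response.status, response.headers.get("Location")
    except urllib.error.HTTPError as err:
        # Unfollowed redirects and error statuses surface here.
        return err.code, err.headers.get("Location")
    except OSError:
        # URLError (DNS failure, refused connection) and socket timeouts.
        return None, None
```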

Identifying Crawl Errors That Hinder SEO

In the intricate process of a technical SEO site audit, identifying crawl errors is essential, as they are indicative of the barriers that impede a site’s SEO efficacy. These errors can range from broken links and incorrect redirects to issues with the robots.txt file that unintentionally block search engine bots.

LinkGraph’s meticulous SEO services employ SearchAtlas SEO software to detect these critical crawl errors, ensuring that nothing stands between the website content and the discerning eyes of search engine crawlers. The swift diagnosis and resolution of these issues are vital steps in securing the website’s rightful place on the search engine results page:

| Error Type | Description | Impact on SEO |
|---|---|---|
| 404 Not Found | Links to non-existent pages | Negatively affects user experience and link equity |
| 302 Found | Temporary redirect used for a permanent move | Search engines may keep the old URL indexed instead of the new one |
| Robots.txt Block | Improper directives preventing crawling | Hinders site content from being discovered |
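Once a crawl has produced status codes, sorting them into the error types in the table above is straightforward. This is a small illustrative sketch; the record keys (`url`, `status`, `robots_blocked`) are hypothetical and would come from whatever crawler export you use.

```python
from collections import Counter


def classify_crawl_errors(pages):
    """Map crawl records to the error types in the table.

    `pages` is an iterable of dicts with hypothetical keys "url", "status"
    and optionally "robots_blocked". Returns a list of (url, error_type).
    """
    issues = []
    for page in pages:
        if page.get("robots_blocked"):
            issues.append((page["url"], "Robots.txt block"))
        elif page["status"] == 404:
            issues.append((page["url"], "404 Not Found"))
        elif page["status"] in (302, 307):  # temporary redirects
            issues.append((page["url"], "Temporary redirect"))
    return issues


def count_by_type(issues):
    """Summarize how many URLs fall under each error type."""
    return dict(Counter(error for _, error in issues))
```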

Interpreting the Data From Your Site Crawl

After the diligent work of a crawler tool, the task shifts to interpreting the collated data, a process where the refined expertise of LinkGraph’s SEO services shines. It’s not merely the collection of information but its analysis that unveils the site’s SEO health, guiding subsequent optimization efforts.

Translating the dense array of crawl data into a coherent narrative requires a keen eye for detail and a deep understanding of search engine dynamics. It is through this exercise that opportunities and bottlenecks are identified, with the ultimate goal being to align the website’s structure and content with the algorithms that govern online visibility.

- Crawl data interpretation begins with an analysis of collected URL information, assessing accessibility and indexation status.

- Reviewing site architecture and internal linking structures follows, ensuring that the flow of authority throughout the site is optimal.

- Lastly, SEO experts evaluate the quality and relevance of content, correlating it with ranking factors for determining strategic adjustments.
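The first step in that list, assessing accessibility and indexation status, can be expressed as a simple rule per URL. The sketch below is one reasonable set of checks, not a complete indexability model: a page counts as indexable only if it returns 200, carries no `noindex` (or `none`) robots directive, and has no canonical tag pointing elsewhere. The record keys are hypothetical.

```python
def indexability(record):
    """Return (is_indexable, reason) for one crawled URL.

    `record` is a dict with hypothetical keys: "url", "status",
    "meta_robots" (e.g. "noindex, follow") and "canonical" (URL or None).
    """
    if record["status"] != 200:
        return False, f"status {record['status']}"
    directives = {d.strip().lower() for d in (record.get("meta_robots") or "").split(",")}
    # "none" is shorthand for "noindex, nofollow".
    if "noindex" in directives or "none" in directives:
        return False, "noindex"
    canonical = record.get("canonical")
    # Treat a trailing-slash difference as the same URL for this rough check.
    if canonical and canonical.rstrip("/") != record["url"].rstrip("/"):
        return False, f"canonicalized to {canonical}"
    return True, "indexable"
```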

Analyzing Site Structure and Internal Linking

In the intricate world of search engine optimization, the dissection of a site’s structure and the scrutiny of its internal linking framework are tasks of great import.

They are not mere technicalities but central elements that underpin the SEO strength and clarity of a website.

Professional SEO agencies, armed with deep analytical prowess, undertake a comprehensive audit to map the site architecture, spot and rectify issues with internal links, and organize content hierarchies.

This evaluation ensures a fluid, logical site layout, optimizing each avenue that directs search engines and users alike through the site’s pages, thereby elevating its place within the search engine results page.

Mapping the Site Architecture for Clarity

The dynamic tapestry of a website’s architecture holds numerous clues to its SEO efficacy. SEO specialists at LinkGraph, wielding SearchAtlas SEO software, meticulously map this architecture, unearthing the inherent structure that facilitates both search engine crawler access and intuitive user navigation.

With a clear architectural blueprint, LinkGraph’s SEO services ensure that each strand of the site’s framework is woven to support a coherent strategy. This precision mapping dismantles any ambiguity and sets a strong foundation for a well-orchestrated SEO campaign.
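One common way to map architecture is to measure click depth: how many clicks each page sits from the homepage. The sketch below runs a breadth-first search over an internal-link graph such as a crawl produces. The three-click threshold is an assumption often used as a rule of thumb, not a search engine requirement.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search over an internal-link graph.

    `links` maps each URL to the URLs it links to (for example, from a crawl).
    Returns {url: clicks from `home`}; unreachable pages are omitted.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(depths, max_clicks=3):
    """List pages buried deeper than `max_clicks` (an assumed threshold)."""
    return sorted(url for url, d in depths.items() if d > max_clicks)
```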

Spotting Potential Issues With Internal Links

Internal links act as pathways guiding users and search engine crawlers through a website, hence identifying weak links in this matrix is crucial for sustaining SEO health. LinkGraph’s SEO services meticulously scan the labyrinth of connections within a site, targeting links that may lead to 404 errors or are structured in a manner that dilutes page authority.

SearchAtlas SEO software plays a pivotal role, offering precise insights into the intricacy of internal links and their influence on user engagement and search engine understanding: it pinpoints areas where internal linking strategies may be misfiring, allowing for swift corrective actions to enhance site navigability and SEO performance.

| Issue Type | Common Signs | Potential Remedies |
|---|---|---|
| Broken Links | 404 page errors, user complaints | Update links to the correct URLs or remove them |
| Link Equity Dilution | Excessive use of non-authoritative or irrelevant links | Strategically structure internal links to focus on authoritative pages |
| Navigational Confusion | High bounce rates, low session durations | Redesign site map to streamline user pathway |
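The first row of that table, along with its close cousin, the orphan page that no internal link reaches, can be detected directly from crawl output. This sketch assumes you already have a link map, a status map, and a list of pages you expect to exist (for example, from an XML sitemap); all three inputs are hypothetical shapes.

```python
def audit_internal_links(links, status, known_pages):
    """Find broken internal links and orphan pages.

    links: {source_url: [target_url, ...]} from a crawl
    status: {url: http_status} for crawled targets
    known_pages: URLs expected to exist (e.g. from an XML sitemap)
    """
    broken = sorted(
        (source, target)
        for source, targets in links.items()
        for target in targets
        if status.get(target) == 404
    )
    # A page is orphaned if nothing in the crawl links to it.
    linked_to = {t for targets in links.values() for t in targets}
    orphans = sorted(set(known_pages) - linked_to)
    return broken, orphans
```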

Best Practices for Organizing Content Hierarchy

A well-organized content hierarchy streamlines a website’s framework, reinforcing thematic relationships and enhancing the user experience. LinkGraph’s SEO services emphasize content organization as a critical factor in defining a site’s navigational flow and topical clarity, encouraging a logical progression from general to specific topics that mirrors intuitive user behavior.

Employing best practices in content hierarchy, LinkGraph’s strategists utilize the SearchAtlas SEO software to orchestrate content organization that search engines reward. By ensuring that cornerstone content and vital category pages remain prominent, the company paves the way for improved understanding by search engine bots, which contributes to stronger rankings and a cohesive user journey.

Enhancing Website Speed and Performance

In the digital age, where the speed of information exchange is relentless and user patience is ever-diminishing, a website’s performance can make or break its success.

A technical SEO site audit evaluates website speed as a vital component influencing user satisfaction and search engine rankings.

This assessment identifies and ameliorates factors impeding load times, ultimately culminating in a robust strategy to monitor and enhance ongoing site performance.

As digital practitioners pivot towards embracing these performance-centric approaches, they ensure websites are not only discoverable but also deliver a swift, seamless experience to visitors.

Pinpointing Factors That Affect Site Speed

In a technical SEO site audit, the analysis of website speed zeroes in on elements that decelerate page load times, directly affecting user engagement and retention. Factors such as server response time, large image or CSS file sizes, and non-optimized JavaScript come under scrutiny, each a potential culprit in undermining site performance against the benchmarks set by search engines like Google.

LinkGraph’s SEO specialists harness the analytical prowess of SearchAtlas SEO software to diagnose these performance inhibitors, recognizing that even milliseconds of delay can pivot a user’s decision to stay or leave. The audit meticulously evaluates resource-intensive plugins, heavy multimedia content, and the efficacy of caching mechanisms, ensuring that navigational fluidity aligns with the swift currents of today’s digital streams.
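Non-optimized JavaScript often shows up as render-blocking scripts: external `<script>` tags in the `<head>` without `async` or `defer`, which pause HTML parsing while they download and execute. The sketch below flags them with Python’s built-in HTML parser. It is a narrow static check under that one assumption; it ignores stylesheets, inline scripts, and anything injected at runtime.

```python
from html.parser import HTMLParser


class RenderBlockingScripts(HTMLParser):
    """Flag external <script> tags in <head> that lack async or defer.

    Module scripts (type="module") are deferred by default, so they are skipped.
    """

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attributes:
            deferred = "async" in attributes or "defer" in attributes
            if not deferred and attributes.get("type") != "module":
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(html):
    parser = RenderBlockingScripts()
    parser.feed(html)
    return parser.blocking
```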

Implementing Fixes for Slow Loading Times

LinkGraph’s SEO services take definitive action to rectify slow loading times, optimizing a site’s journey towards peak performance.

With an expansive suite of tools like Google PageSpeed Insights at their disposal, SEO specialists deploy advanced techniques to enhance server response times and refine resource loading sequences, thereby ensuring that every website page fulfills the expectations of swift accessibility.
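PageSpeed Insights can also be queried programmatically, which helps when many URLs need checking. The sketch below builds a request for the PageSpeed Insights v5 `runPagespeed` endpoint and reads the Lighthouse performance score from the JSON response. It reflects the v5 API as we understand it; verify parameter names and response fields against Google’s current documentation, and note that sustained use typically requires an API key.

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PSI v5 request URL; strategy is "mobile" or "desktop"."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urllib.parse.urlencode(params)}"


def performance_score(psi_response):
    """Extract the Lighthouse performance score (0-100) from a PSI response."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return None if score is None else round(score * 100)


def run_psi(page_url, strategy="mobile", api_key=None, timeout=60):
    """Call the live API (requires network access) and return the parsed JSON."""
    with urllib.request.urlopen(psi_request_url(page_url, strategy, api_key), timeout=timeout) as resp:
        return json.load(resp)
```

Keeping the URL builder and the score extractor separate from the network call makes both easy to test against saved responses.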

Monitoring Ongoing Performance Metrics

Website performance metrics serve as the compass for continual refinement in the fluid realm of user expectations and search engine algorithms. LinkGraph’s SEO services consider these metrics essential in maintaining the site’s competitive edge, with a concerted focus on routinely tracking load times, user interaction signals, and server reliability to preemptively address potential falloffs in performance.

LinkGraph employs sophisticated monitoring techniques within its SEO strategy, ensuring a pulse is always kept on the website’s health through SearchAtlas SEO software. This vigilance supports proactive adjustments that safeguard the site’s agility and responsiveness, fostering a consistently smooth user experience that aligns with the high-speed demands of the digital landscape.
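For ongoing monitoring, Google’s Core Web Vitals give concrete targets. Each metric is assessed at the 75th percentile of page loads against published "good" and "poor" thresholds. The sketch below encodes those thresholds and rates a set of field measurements; where the p75 values come from (for example, a real-user monitoring tool or the Chrome UX Report) is left open.

```python
# Core Web Vitals thresholds published by Google, applied to the 75th
# percentile of page loads: (good upper bound, poor lower bound).
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def rate(metric, p75_value):
    """Rate a 75th-percentile value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    if p75_value <= good:
        return "good"
    if p75_value > poor:
        return "poor"
    return "needs improvement"


def rate_all(p75_values):
    """Rate every metric in a {metric: p75_value} mapping."""
    return {metric: rate(metric, value) for metric, value in p75_values.items()}
```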

Mobile Optimization in Technical SEO

In today’s digital milieu, the imperative of mobile optimization cannot be overstated within the discipline of technical search engine optimization.

Recognizing that a substantial share of users browse primarily on mobile devices, technical SEO audits must encompass rigorous assessments to confirm that websites offer an accessible, functional, and consistent experience across all platforms.

Auditing mobile usability, securing content parity between mobile and desktop environments, and guaranteeing mobile-friendly site configurations stand as critical pillars in fortifying a website’s overall SEO health and its ability to engage with the modern user where they are most active—on the go.

Ensuring Your Site Is Mobile-Friendly

Ensuring that a website is mobile-friendly is not merely a recommendation but a necessity in today’s mobile-first world. LinkGraph’s SEO services understand the critical importance of a mobile-optimized site, initiating audits that confirm adaptive design, fast loading times, and navigational ease on smaller screens.

Responsive design elements and mobile-specific SEO are prioritized, considering variables like touch screen navigation and mobile search behaviors. The aim is to provide an exceptional user experience, no matter the device, fostering engagement and boosting mobile search rankings in the daunting competition of digital real estate:

- Responsive design implementations are audited for consistency across desktop and mobile interfaces.

- Mobile page speed tests are conducted to ensure quick load times for users on the move.

- Mobile UX is assessed to guarantee seamless interaction for touch-based navigation.
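A quick first check for responsive design is the viewport meta tag: without `width=device-width`, mobile browsers render the page at a desktop width and scale it down. This sketch parses a page and reports a missing or misconfigured viewport, and also flags `user-scalable=no`, which blocks pinch-zoom and is commonly treated as an accessibility problem. It is a single static check, not a full mobile-friendliness test.

```python
from html.parser import HTMLParser


class ViewportCheck(HTMLParser):
    """Record the content of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def viewport_issues(html):
    parser = ViewportCheck()
    parser.feed(html)
    if parser.viewport is None:
        return ["missing viewport meta tag"]
    # Parse "key=value, key=value" pairs from the content attribute.
    settings = {
        key.strip().lower(): value.strip().lower()
        for key, _, value in (part.partition("=") for part in parser.viewport.split(","))
    }
    issues = []
    if settings.get("width") != "device-width":
        issues.append("viewport width is not device-width")
    if settings.get("user-scalable") == "no":
        issues.append("zooming is disabled (user-scalable=no)")
    return issues
```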

Checking for Mobile Usability Issues

For a website’s success in today’s market, addressing mobile usability issues is not just about achieving a responsive design; it is about ensuring every aspect of the mobile experience caters to user expectations. LinkGraph’s SEO services excel at identifying any hindrances to mobile usability, focusing vigilantly on elements like text readability, button sizes, and interactive elements that must be optimized for touch-based navigation.

Testing extends beyond the aesthetic, probing deeply into mobile functionality to ensure a frictionless user journey:

- Scrutiny of touch-target sizes and spacing to prevent user frustration from faulty interactions.

- Evaluation of mobile forms to ensure ease of data input for customers on the go.

- Assessment of mobile redirects and pop-ups for a seamless and unobtrusive browsing experience.
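Touch-target scrutiny can be automated once element sizes and positions have been measured, for example with a headless browser. The sketch below assumes hypothetical bounding boxes in CSS pixels and applies two assumed thresholds: a 48 px minimum size, a commonly cited mobile guideline, and an 8 px minimum gap between neighboring targets. Adjust both to the guideline your team follows.

```python
from dataclasses import dataclass
from itertools import combinations
from math import hypot

MIN_SIZE_PX = 48  # assumed minimum width/height for a touch target
MIN_GAP_PX = 8    # assumed minimum spacing between adjacent targets


@dataclass
class Target:
    name: str
    x: float
    y: float
    width: float
    height: float


def gap(a, b):
    """Shortest distance in CSS px between two rectangles (0 if touching or overlapping)."""
    dx = max(b.x - (a.x + a.width), a.x - (b.x + b.width), 0)
    dy = max(b.y - (a.y + a.height), a.y - (b.y + b.height), 0)
    return hypot(dx, dy)


def tap_target_issues(targets):
    """List undersized targets, then pairs of targets placed too close together."""
    issues = [
        f"{t.name}: {t.width}x{t.height}px is below {MIN_SIZE_PX}px"
        for t in targets
        if min(t.width, t.height) < MIN_SIZE_PX
    ]
    issues += [
        f"{a.name} and {b.name} are only {gap(a, b):.0f}px apart"
        for a, b in combinations(targets, 2)
        if gap(a, b) < MIN_GAP_PX
    ]
    return issues
```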

These methodical inspections are paired with the implementation of intuitive solutions, drawing upon the full capabilities of SearchAtlas SEO software to elevate mobile user engagement and drive traffic—standards that both users and search engines demand in an increasingly mobile-centric world.

Aligning Mobile and Desktop Content for Consistency

In the landscape of technical SEO, ensuring content alignment between mobile and desktop versions of a website is not merely a facilitative practice, but an essential criterion for search engine evaluation. By solidifying content parity, LinkGraph’s SEO services pave the way for uniformity, preventing discrepancies that could otherwise diminish a site’s search engine credibility.

This harmonization is executed with meticulous precision, preserving the integrity of content across all platforms while enhancing accessibility and user retention:

- LinkGraph audits for content consistency, safeguarding equivalent informational value for both desktop and mobile users.

- Visual and textual elements are optimized to provide a parallel experience, fulfilling the expectation of seamless content interaction irrespective of the device used.

- Strategies are implemented to maintain feature and functionality equivalence, ensuring no user is disadvantaged by their choice of browsing platform.
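A rough content-parity check compares the HTML served to desktop and mobile user agents. The sketch below measures what share of the desktop page’s distinct words also appear on the mobile page and lists links present only on desktop. It is a coarse proxy under stated assumptions: it ignores `<script>` and `<style>` content but cannot see text hidden by CSS or added by JavaScript.

```python
from html.parser import HTMLParser


class _PageSummary(HTMLParser):
    """Collect visible words and link targets, ignoring <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.words, self.links, self._skip = set(), set(), 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "a" and dict(attrs).get("href"):
            self.links.add(dict(attrs)["href"])

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.words.update(w.lower() for w in data.split())


def summarize(html):
    parser = _PageSummary()
    parser.feed(html)
    return parser


def parity_report(desktop_html, mobile_html):
    """Compare distinct-word coverage and links between desktop and mobile HTML."""
    desktop, mobile = summarize(desktop_html), summarize(mobile_html)
    coverage = len(desktop.words & mobile.words) / len(desktop.words) if desktop.words else 1.0
    return {
        "word_coverage": round(coverage, 2),
        "links_missing_on_mobile": sorted(desktop.links - mobile.links),
    }
```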

LinkGraph’s commitment to these principles reflects an understanding of their weight in a cohesive SEO strategy, supporting a harmonious narrative that bolsters search engine trust and user satisfaction in an era where cross-platform presence is indispensable.

Safeguarding Your Site With Secure Protocols

In the intricate web of factors that propel a website to SEO success, security protocols are not merely protective measures but also pivotal elements that impart trust and authority.

Delving into technical SEO site audits reveals that secure data transmission also influences search engine rankings.

This consideration is paramount in today’s digital ecosystem, and LinkGraph’s SEO services underscore the significance of HTTPS protocols, assess websites for security vulnerabilities, and emphasize the meticulous implementation of SSL/TLS certificates.

These practices are not just about defense against cyber threats—they’re about fortifying a site’s credibility in the vigilant eyes of both users and search engines.

The Importance of HTTPS for SEO

The transition from HTTP to HTTPS is a critical factor in today’s SEO landscape, bolstering a site’s credibility and search engine ranking potential. HTTPS, which serves HTTP over an encrypted TLS connection, is recognized by search engines as a mark of trust and authenticity, influencing the perceived reliability of a website in the eyes of both users and search engine algorithms.

By encrypting the connection between browser and server, HTTPS contributes to a safer browsing environment, and Google has confirmed that it uses HTTPS as a ranking signal, albeit a lightweight one. This gives secure sites a competitive edge: all else being equal, they are better placed to climb the search engine results page, attract more traffic, and earn higher user trust.

Assessing the Website for Potential Security Risks

Diligent security risk assessments are imperative in the stewardship of robust technical SEO site audits. LinkGraph’s SEO services conduct precise evaluations to uncover vulnerabilities that could compromise data integrity and erode user trust.

Investigations extend to the very bedrock of the website’s framework, with a systematic review of password policies, data encryption methods, and software updates: key components that, if flawed, can act as an open invitation to cyber threats.

| Security Component | Potential Risk | Impact on SEO and User Trust |
|---|---|---|
| SSL/TLS Certificate | Expired or improperly implemented certificate | Diminishes site credibility and can lead to warning prompts that deter users |
| Password Policies | Weak authentication processes | Increases the potential for breaches, undermining the site’s reputation |
| Software Updates | Lack of regular updates | Leaves the site susceptible to known exploits, negatively affecting user perception and search ranking |
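An expired certificate, the first risk in that table, is easy to catch before it happens. The sketch below opens a verified TLS connection with Python’s `ssl` module, reads the certificate’s `notAfter` date, and reports how many days remain. The 30-day warning window is an assumption; note also that a certificate which has already expired fails verification, so the connection itself raises `ssl.SSLCertVerificationError`.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left given a certificate notAfter string, e.g. 'Jun  1 12:00:00 2030 GMT'."""
    now = time.time() if now is None else now
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def fetch_not_after(hostname, port=443, timeout=10.0):
    """Open a verified TLS connection and return the server certificate's notAfter."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def needs_renewal(hostname, warn_days=30):
    """True if the certificate expires within `warn_days` (an assumed renewal window)."""
    return days_until_expiry(fetch_not_after(hostname)) < warn_days
```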

Implementing SSL Certificates Correctly

Correct implementation of SSL certificates is a non-negotiable aspect of securing a website and maintaining its SEO standings. LinkGraph’s SEO services meticulously execute the installation and configuration of SSL certificates to prevent errors that can deter users and diminish search engine trust.

Execution of the following steps ensures the SSL certificate not only encrypts data but also serves as a positive SEO signal:

- Verification of certificate validity and proper installation on the server.

- Ensuring proper certificate renewal protocols are in place to avoid lapses in security.

- Configuration of all website pages to default to HTTPS, thereby solidifying site-wide encryption.
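Defaulting every page to HTTPS also means every subresource must load over HTTPS; otherwise browsers report mixed content and may block the insecure files. The sketch below scans a page’s HTML for images, scripts, media, iframes, and stylesheet, icon, or preload links requested with an absolute `http://` URL. It assumes relative and protocol-relative URLs inherit HTTPS from the page, and it does not inspect `srcset` or CSS `url()` references.

```python
from html.parser import HTMLParser

# Tags whose attribute loads a subresource; plain <a href> links are navigation.
_RESOURCE_ATTRS = {
    "img": "src", "script": "src", "iframe": "src", "audio": "src",
    "video": "src", "source": "src", "link": "href",
}
_RESOURCE_RELS = {"stylesheet", "icon", "preload"}


class MixedContentFinder(HTMLParser):
    """Collect subresources that an HTTPS page would request over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = _RESOURCE_ATTRS.get(tag)
        if attr is None:
            return
        attributes = dict(attrs)
        if tag == "link":
            rel = set((attributes.get("rel") or "").lower().split())
            if not rel & _RESOURCE_RELS:
                return  # e.g. rel="canonical" is not a loaded resource
        value = attributes.get(attr)
        if value and value.lower().startswith("http://"):
            self.insecure.append((tag, value))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```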

With these procedures, the SEO experts at LinkGraph aim to construct a secure digital environment that enhances user confidence and aligns with search engine guidelines for optimal online performance.

Indexing Issues and the Robots.txt File

Exploring the intricacies of technical SEO, site auditors frequently confront the pivotal yet often misunderstood element of the robots.txt file—a key cog in the machinery of website indexing and SEO performance.

Understanding its role, diagnosing indexing issues, and crafting a robots.txt file with precision stands central not only to enabling search engine crawlers to index a website’s content effectively but also to steering clear of preventable obstructions that can undermine a site’s visibility.

As a gateway to the wealth of content within, a meticulously constructed robots.txt file is a testament to efficient site management and cogent SEO strategies.

Understanding the Role of Robots.txt in SEO

Grasping the role of the robots.txt file in SEO is akin to unveiling a strategic blueprint that guides search engine crawlers. LinkGraph’s SEO services emphasize the prudent use of this file to communicate with search engine bots, ensuring they crawl and index the website content that amplifies visibility, while responsibly advising them on sections to bypass for optimal search performance.

Through the strategic implementation of the robots.txt file, LinkGraph’s SEO specialists optimize a website’s crawl efficiency, steering bots away from duplicate or irrelevant pages that could waste crawl budget and dilute the site’s search relevance. Because robots.txt governs crawling rather than indexing, a blocked URL can still appear in results if other pages link to it; pages that must stay out of the index need a noindex directive instead, and must remain crawlable so search engines can see it. This careful orchestration of crawler traffic by SEO experts enhances the integrity and efficiency of the site’s presence in search engine results.

Diagnosing and Fixing Indexing Problems

When diagnosing indexing problems, LinkGraph’s SEO specialists first conduct a meticulous assessment using advanced tools to pinpoint discrepancies in site indexing. This often involves examining the robots.txt file, a critical determinant of a website’s search engine visibility, for directives that could inadvertently block valuable content from being crawled.

Upon identifying the root causes of indexing issues, LinkGraph’s team swiftly implements corrective measures, ensuring that the robots.txt file is accurately guiding search engine crawlers to the content that warrants indexing. These precise adjustments result in a well-indexed site, poised to capture the attention it deserves in search engine results pages.
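A frequent root cause is a sitemap that lists URLs the robots.txt file blocks, sending crawlers contradictory signals. This sketch cross-checks the two using Python’s `xml.etree` and `urllib.robotparser`. Be aware that `urllib.robotparser` applies the first matching rule and does not support `*` or `$` wildcards, whereas Google uses the most specific match and supports both, so treat results for complex files as approximate.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the <loc> values from a standard XML sitemap (a <urlset>)."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="Googlebot"):
    """URLs listed in the sitemap that robots.txt stops `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls(sitemap_xml) if not parser.can_fetch(user_agent, url)]
```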

Crafting a Robots.txt File for Maximum Efficiency

The construction of an efficient robots.txt file is a crucial aspect of a technical SEO site audit; it requires not only an understanding of search engine behaviors but also a nuanced approach to website management. LinkGraph’s SEO services emphasize accuracy and precision in defining the directives within the robots.txt file, ensuring that it reflects the strategic objectives of the SEO campaign and facilitates optimal indexing by search engine bots.

With thorough expertise, LinkGraph’s specialists approach this task with a series of calculated steps:

- Analysis of the website’s current content and structure to identify what needs to be indexed versus what should be excluded.

- Careful formulation of allow and disallow directives to correctly navigate crawler traffic.

- Meticulous testing and validation to confirm that the robots.txt file is effectively guiding crawlers without any unforeseen blockages or issues.
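The testing and validation step can be turned into a repeatable check: list the URLs that must stay crawlable and those that must be blocked, then confirm the file honors both lists before deploying it. The robots.txt content and URL lists below are hypothetical examples. The same caveat applies as with any use of Python’s `urllib.robotparser`: it uses first-match rule ordering and no wildcard support, so it is best suited to simple prefix rules like these.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt under test.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""

# Hypothetical expectations for the file above.
MUST_ALLOW = ["https://www.example.com/", "https://www.example.com/products/running-shoes"]
MUST_BLOCK = ["https://www.example.com/cart/checkout", "https://www.example.com/search?q=shoes"]


def validate_robots(robots_txt, must_allow, must_block, user_agent="Googlebot"):
    """Return expectation failures; an empty list means the file behaves as intended."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = [f"blocked but should be crawlable: {u}"
                for u in must_allow if not parser.can_fetch(user_agent, u)]
    failures += [f"crawlable but should be blocked: {u}"
                 for u in must_block if parser.can_fetch(user_agent, u)]
    return failures
```

Running this in a deployment pipeline catches the classic accident of shipping a staging file containing `Disallow: /`.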

An adeptly crafted robots.txt file stands as a testament to efficient SEO practice, serving to streamline the indexing process while maintaining the website’s integrity in the digital ecosystem. This document, when finely tuned by LinkGraph’s seasoned practitioners, supports a robust presence in the search engine results page by prioritizing the website’s most valuable content.

Identifying and Resolving Duplicate Content

In the unfolding narrative of technical SEO site audits, the discovery and resolution of duplicate content stands as a fundamental chapter, pivotal to the integrity and performance of any website.

Tools dedicated to unearthing content redundancies ascertain the extent of duplication, while proactive strategies address the intricacies of content overlaps, ensuring uniqueness across the site’s pages.

Canonical tags emerge as the guardians of content originality, offering an elegant solution to signal preferred versions of similar content, preserving the clarity of the website’s narrative in the vast expanse of the digital landscape.

Tools for Detecting Duplicate Content on Your Site

LinkGraph’s SEO services detect duplicate content by combining specialized tools with careful analysis. Software such as SearchAtlas SEO software sifts through a site’s pages to identify similarities that could affect search engine rankings.

Once potential duplication is found, these tools drill down to its root: identical paragraphs, or pages that mirror each other too closely. Addressing each case before it becomes an SEO problem preserves the uniqueness of the website’s content:

| Duplicate Content Issue | How Tools Detect It | Impact on SEO |
|---|---|---|
| Repeated Paragraphs or Sentences | Text comparison algorithms flag matching content blocks | Can dilute the topical authority of the pages |
| Mirrored Page Structures | Structural analysis identifies duplicate template use | Search engines may struggle to discern page hierarchy |
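The text-comparison approach in the first row can be illustrated with a common near-duplicate technique: break each page’s text into overlapping word sequences (shingles) and compare the resulting sets with Jaccard similarity. This is a generic sketch, not a description of how SearchAtlas or any other commercial tool works; the page texts, the three-word shingle size, and the 0.8 threshold are assumptions chosen for the example.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word sequences in the lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (1.0 means identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_near_duplicates(pages: dict[str, str], threshold: float = 0.8,
                         k: int = 3) -> list[tuple[str, str, float]]:
    """Compare every pair of pages and return the pairs at or above the threshold."""
    shingle_sets = {url: shingles(text, k) for url, text in pages.items()}
    flagged = []
    for (url_a, set_a), (url_b, set_b) in combinations(shingle_sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            flagged.append((url_a, url_b, round(score, 2)))
    return flagged


# Hypothetical pages: the first two differ only by a tracking parameter in the URL.
PAGES = {
    "/shoes/red": "Lightweight running shoes with breathable mesh and a cushioned sole for daily training.",
    "/shoes/red?ref=email": "Lightweight running shoes with breathable mesh and a cushioned sole for daily training.",
    "/about": "Our company was founded to help runners find the right gear for every distance.",
}

if __name__ == "__main__":
    for url_a, url_b, score in find_near_duplicates(PAGES):
        print(f"{url_a} <-> {url_b}: similarity {score}")
```

Comparing every pair grows quadratically with the number of pages, so on large sites a technique such as MinHash can be used to approximate these Jaccard scores more cheaply.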

Strategies for Handling Content Similarities

LinkGraph’s SEO services manage content similarities with a combination of careful revision and canonical tags. Canonical tags point search engine crawlers toward the preferred version of similar content, which upholds the site’s hierarchy and avoids confusion about which page should rank.

Editing plays an equally important role: rewriting overlapping passages makes each page distinct while keeping it consistent with the company’s brand message and SEO objectives. These refinements strengthen the site’s relevance and authority in a competitive digital space.

The Role of Canonical Tags in Preventing Duplication

Managing content effectively during a technical SEO audit calls for specialized strategies, and canonical tags are one of the most important. A canonical tag tells search engines which URL should be treated as the original version of a piece of content, so that authority is attributed to that URL rather than split across duplicates, protecting the site from the negative effects of perceived duplicate content.

LinkGraph’s SEO services integrate canonical tags to signal which version of each page search engines should index. Search engines treat the tag as a strong hint rather than a binding command, but when it is applied consistently it clearly marks the original content, safeguards the site’s ranking potential, and helps ensure that the intended version appears in search results.
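A canonical tag is a `<link rel="canonical">` element in a page’s `<head>`. One simple audit check is to confirm that each page declares exactly one canonical URL and that it points to the version the site intends to rank. The sketch below does this with Python’s built-in `html.parser`; the sample HTML and expected URL are hypothetical, and a real audit would fetch live pages and also consider canonical hints sent in HTTP `Link` headers.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def check_canonical(html: str, expected_url: str) -> str:
    """Return 'ok' or a short description of the canonical-tag problem."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.canonicals:
        return "missing canonical tag"
    if len(finder.canonicals) > 1:
        return f"{len(finder.canonicals)} canonical tags found"
    if finder.canonicals[0] != expected_url:
        return f"canonical points to {finder.canonicals[0]}"
    return "ok"


# Hypothetical HTML served at /shoes/red?ref=email, consolidating onto the clean URL.
PAGE = """<html><head>
<title>Red Running Shoes</title>
<link rel="canonical" href="https://www.example.com/shoes/red" />
</head><body>...</body></html>"""

if __name__ == "__main__":
    print(check_canonical(PAGE, "https://www.example.com/shoes/red"))
```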

Making the Most of Metadata During Audits

Metadata analysis is a critical component of a technical SEO site audit.

Metadata, which includes title tags, meta descriptions, structured data, and meta robots tags, acts as a guidepost for search engines, conveying what a website’s content is about and what it is for.

Well-optimized metadata clarifies a site’s relevance to search queries and significantly influences whether users notice and click on it in search engine results.

Each of these elements tells search engine crawlers how to interpret and display the site’s content, which is why metadata review underpins a comprehensive and effective technical SEO site audit.

Optimizing Title Tags and Meta Descriptions

Optimizing title tags and meta descriptions is a cornerstone of a technical SEO site audit because these elements shape how a page is discovered. LinkGraph’s SEO specialists write concise, keyword-rich title tags and compelling meta descriptions that match search intent and encourage clicks from the search engine results page, boosting organic traffic.

Given their pivotal role in on-page SEO, LinkGraph’s experts craft these elements to fit the site’s SEO content strategy, so that each page presents a clear, relevant, and attractive proposition to both search engines and potential visitors. Keeping content and metadata aligned in this way lays the foundation for improved search visibility and user engagement.
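Title and description checks are easy to automate. The sketch below uses Python’s `html.parser` to extract a page’s `<title>` and meta description and flags missing elements or text longer than 60 and 160 characters respectively. Those thresholds are common rules of thumb assumed here for illustration: Google publishes no fixed character limit and truncates snippets to fit the display, so treat the flags as prompts for review rather than hard errors.

```python
from html.parser import HTMLParser

TITLE_MAX_CHARS = 60         # rule-of-thumb threshold, not an official limit
DESCRIPTION_MAX_CHARS = 160  # rule-of-thumb threshold, not an official limit


class HeadMetadataParser(HTMLParser):
    """Extract the <title> text and the meta description from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = None  # becomes a string once a meta description is seen
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head_metadata(html: str) -> list[str]:
    """Return title/description issues; an empty list means none were found."""
    parser = HeadMetadataParser()
    parser.feed(html)
    parser.close()
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > TITLE_MAX_CHARS:
        issues.append(f"title is {len(title)} characters")
    if parser.description is None:
        issues.append("missing meta description")
    else:
        description = parser.description.strip()
        if not description:
            issues.append("empty meta description")
        elif len(description) > DESCRIPTION_MAX_CHARS:
            issues.append(f"meta description is {len(description)} characters")
    return issues


# Hypothetical product page used as a sample input.
SAMPLE = """<html><head>
<title>Trail Running Shoes for Rocky Terrain | Example Store</title>
<meta name="description" content="Grippy, cushioned trail shoes tested on rocky terrain. Free returns within 30 days.">
</head><body></body></html>"""

if __name__ == "__main__":
    print(audit_head_metadata(SAMPLE) or "no issues found")
```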

Structured Data and Its Impact on SEO

In technical SEO, structured data, or schema markup, makes a website’s content easy for search engines to interpret and act on. The markup defines entities such as products, reviews, or events, and can make a page eligible for rich results (rich snippets) that enhance visibility in search results and can lead to a higher click-through rate. Eligibility, however, does not guarantee that a rich result will be shown.

As search engines work to understand the context and relevance of content more deeply, structured data becomes a valuable signal for improving how a site performs in search. Implemented strategically through LinkGraph’s SEO services, it bridges the gap between website content and the requirements of search engine algorithms, ensuring that the website communicates effectively within the digital ecosystem:

| Structured Data Type | SEO Benefit |
|---|---|
| Product Information | Enables rich results that can increase visibility and click-through rates. |
| Organizational Information | Helps search engines understand and display company data, enhancing brand presence. |
| Event Scheduling | Assists in showcasing events directly in search results, fostering user engagement. |
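Google recommends adding structured data as JSON-LD inside a `<script type="application/ld+json">` element, using the schema.org vocabulary. The sketch below builds the product case from the table in Python; the product details are hypothetical, and the output should still be checked with a validator such as Google’s Rich Results Test, since valid markup makes a page eligible for rich results without guaranteeing them.

```python
import json


def product_jsonld(name: str, description: str, price: float,
                   currency: str, in_stock: bool = True) -> str:
    """Build a schema.org Product snippet as an embeddable JSON-LD script tag."""
    availability = ("https://schema.org/InStock" if in_stock
                    else "https://schema.org/OutOfStock")
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": availability,
        },
    }
    # Escape "</" so text such as "</script>" inside a value cannot close the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(product_jsonld("Trail Runner 2", "Cushioned trail running shoe.", 89.99, "USD"))
```

Escaping `</` as `<\/` keeps the output valid JSON, because `\/` is a legal JSON escape for a forward slash.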

Meta Robots Tags and Their Directives for Crawlers

Meta robots tags give search engine crawlers page-level instructions about how to treat a website’s content. LinkGraph’s SEO services apply these tags with directives tailored to the site’s indexing strategy, keeping pages that should not appear in search results out of the index.

These tags offer fine-grained control over crawler activity: a noindex directive keeps a page out of the index, and nofollow asks crawlers not to follow the links on that page. LinkGraph’s professionals implement them with an understanding of their critical role in shaping a website’s search presence, so that every indexed page aligns with the targeted SEO vision.
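Meta robots directives live in a `<meta name="robots" content="...">` tag, or in a tag named for a specific crawler such as `googlebot`. The sketch below collects the directives that apply to one crawler and reports whether a page may be indexed, treating `noindex` and `none` (shorthand for `noindex, nofollow`) as blocking. It is a simplified check under stated assumptions: it ignores the `X-Robots-Tag` HTTP header, and a crawler only sees these tags on pages it is allowed to fetch, so a page disallowed in robots.txt cannot have its noindex read.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Gather directives from <meta name="robots"> and crawler-specific meta tags."""

    def __init__(self, crawler: str = "googlebot") -> None:
        super().__init__()
        self.crawler = crawler.lower()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", self.crawler):
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str, crawler: str = "googlebot") -> set[str]:
    """Return every meta robots directive that applies to the given crawler."""
    parser = RobotsMetaParser(crawler)
    parser.feed(html)
    parser.close()
    return parser.directives


def is_indexable(html: str, crawler: str = "googlebot") -> bool:
    """Treat a page as indexable unless a noindex-type directive applies."""
    return not (robots_directives(html, crawler) & {"noindex", "none"})


# Hypothetical thank-you page that should stay out of the index.
THANK_YOU_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

if __name__ == "__main__":
    print(sorted(robots_directives(THANK_YOU_PAGE)), is_indexable(THANK_YOU_PAGE))
```

Run across a full crawl, the same check can surface pages marked noindex by mistake, such as templates carried over from a staging environment.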

Conclusion

Conducting a thorough technical SEO site audit is essential for ensuring that a website meets the intricate requirements of search engines and provides an optimal experience for users.

By analyzing site architecture, identifying crawl errors, improving website speed, confirming mobile optimization, and securing the site with protocols such as HTTPS, SEO specialists can find and resolve issues that would otherwise hinder a site’s performance.

Tools such as SearchAtlas SEO software, Google Analytics, and other webmaster tools provide detailed insights that support a comprehensive approach to improving search engine rankings and user engagement.

Strategic use of metadata, structured data, and robots.txt files further refines a site’s communication with search engines, solidifying the site’s visibility and integrity.

In essence, the power of a technical SEO site audit lies in its ability to demystify the complexities of a website’s digital landscape, unlocking the potential for improved discoverability and a competitive edge online.