11 SEO Tips and Tricks to Improve Indexation

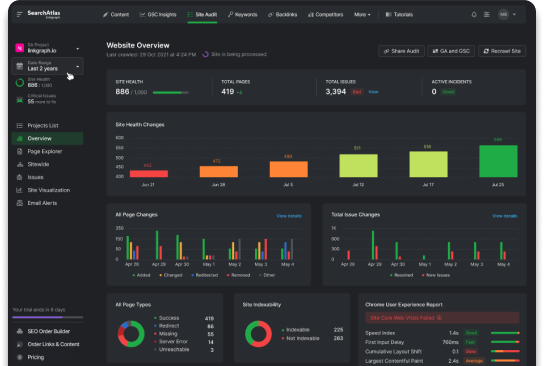


Maximizing Your Website’s SEO: Proven Strategies for Enhanced Indexation

Understanding the nuances of search engine optimization is an intricate endeavor, one that requires constant vigilance and a dynamic approach.

Companies aiming to scale the summit of Google search results must embrace a comprehensive SEO strategy, deploying a formidable array of techniques to ensure their website not only appeals to users but also adheres to the meticulous criteria of search engines.

LinkGraph, as an assiduous provider of SEO services, offers a suite of tools and expertise designed to address key areas such as web page indexation and the intricate labyrinth of Google’s algorithms.

Mastering these strategies can result in enhanced search visibility, driving more visitors to your site and improving overall online performance.

Keep reading as we explore the tenets of impeccable SEO practices and how LinkGraph’s innovative solutions can elevate your digital presence.

Key Takeaways

- LinkGraph’s SEO Services Emphasize the Careful Crafting and Updating of XML Sitemaps to Maximize Search Engine Indexing and Site Visibility

- Strategic Use of robots.txt and Meta Robots Tags Is Crucial for Guiding Search Engine Crawlers and Protecting Sensitive Content

- Regular Audits and Adjustments of Crawl Rates Through Google Search Console Can Optimize Indexing Without Overburdening Server Resources

- Handling Duplicate Content With Tools, Canonical Tags, and Redirects Is Key to Maintaining a Unique and Authoritative Website

- Implementing Comprehensive Mobile Optimization Strategies, Including Responsive Design and Mobile Usability Testing, Is Essential for User Engagement and SEO

1. Track Crawl Status With Google Search Console

Embarking on the journey to elevate a website’s visibility within search engine results pages begins with understanding the intricacies of how web pages are discovered and indexed.

A meticulous approach to tracking crawl status via Google Search Console offers businesses the critical insight required to ensure their digital content is seamlessly navigated by search engines.

Deploying the power of this tool effectively commences with a proper setup and extends to the vigilant monitoring of crawl reports.

By proactively identifying and resolving crawl errors, verifying sitemap correctness, and asserting control over the indexation process through re-indexing requests, companies can enhance their site’s SEO efficiency.

These pivotal actions lay the groundwork for achieving optimal presence in search rankings and fortifying a site’s SEO foundation.

Set Up Google Search Console Properly

Ensuring a website is correctly set up on Google Search Console can significantly influence its search engine optimization success. This initial step permits businesses to confirm ownership of their site, thereby unlocking a suite of powerful tools that facilitate meticulous monitoring and enhancement of search visibility.

Upon verification of ownership, the focus shifts to configuring Search Console for the site’s specific needs. Two actions underpin a sound SEO strategy: submitting an XML sitemap and signaling a preferred domain:

- Submit an XML sitemap to aid Googlebot in discovering and crawling all relevant pages on the site.

- Signal the preferred domain (for example, www versus non-www) so search engines understand which version of your website is canonical. Search Console no longer offers a dedicated preferred-domain setting, so this is typically done with consistent redirects and canonical tags.

Monitor and Analyze Crawl Reports

Expertly monitoring and analyzing crawl reports through Google Search Console positions a company to grasp the nuanced behavior of search engine crawlers on their website. These reports, rich with data, provide a granular view of how content is processed and any issues that may obstruct a crawler’s ability to index pages efficiently.

Businesses stand to garner substantial benefits by scrutinizing crawl anomalies that could signal SEO issues, from server errors to misplaced noindex directives. Addressing these promptly ensures vital pages are indexed and poised to meet the search query needs of their target audience, thereby sharpening the overall SEO strategy.

Identify and Address Crawl Errors

Addressing crawl errors emerges as an imperative step in polishing a website’s SEO performance. Through Google Search Console, businesses can pinpoint the specific issues impeding search engines from effectively crawling their web pages. It is paramount for site administrators to address these errors promptly, as they can hinder a site’s ability to rank well and reach the desired target audience.

By remedying crawl errors, a company’s digital assets become more navigable for Googlebot, leading to improved indexation and, consequently, better search visibility. This direct correlation underscores the necessity for ongoing vigilance and swift correctional measures to maintain an SEO-friendly site structure:

| Crawl Error Category | Typical Causes | Implication for SEO |
|---|---|---|
| Server Errors | Overloaded servers, timeouts | May cause intermittent indexation issues and poor user experience |
| 404 Not Found | Broken links, deleted pages | Results in lost opportunities for user engagement and link equity |
| Access Denied | Improper permissions, robots.txt blocks | Hinders crawlers, impacting the visibility of vital pages |
| Soft 404 | Incorrectly labeled pages, poor redirects | Diminishes the relevance and quality signals sent to search engines |
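
The categories in the table above can be approximated from raw HTTP responses. The following is a minimal sketch, not how Search Console itself classifies pages: the function name, the status-code groupings, and the soft-404 hint phrases are all illustrative assumptions.

```python
def classify_crawl_result(status_code: int, body_text: str = "") -> str:
    """Map an HTTP response to one of the crawl error categories listed above."""
    # Hypothetical phrases suggesting an error page that was served with a 200 status.
    soft_404_hints = ("page not found", "no longer available")

    if 500 <= status_code <= 599:
        return "Server Error"
    if status_code in (401, 403):
        return "Access Denied"
    if status_code == 404:
        return "404 Not Found"
    if status_code == 200 and any(hint in body_text.lower() for hint in soft_404_hints):
        return "Soft 404"
    return "No Error Detected"


print(classify_crawl_result(503))
print(classify_crawl_result(200, "Sorry, Page Not Found"))
```

Running a classifier like this over a crawl export makes it easier to group errors by category before deciding which to fix first.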

Submit and Test Sitemap for Errors

As part of overseeing a website’s SEO health, submitting the sitemap and periodically testing it for errors is a task of paramount importance. An accurate sitemap bolsters the website’s information architecture and allows Googlebot to crawl and index content efficiently, which in turn supports search rankings.

LinkGraph’s SEO Services advocate for regular sitemap evaluation via Google Search Console to detect and rectify errors that could impede search visibility. This proactive approach confirms that all vital web pages are accessible to Google’s crawler, aligning with best practices for sustained online prominence.
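
Before submitting a sitemap, some basic problems can be caught locally. The sketch below uses Python’s standard library to check that the XML is well formed, that the root is a namespaced `urlset`, and that every `loc` is a unique absolute URL. The function name and the specific checks are assumptions chosen for illustration; they do not replicate Search Console’s own validation.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def find_sitemap_problems(sitemap_xml: str) -> list[str]:
    """Return a list of human-readable problems found in a sitemap document."""
    try:
        root = ET.fromstring(sitemap_xml)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    if root.tag != f"{SITEMAP_NS}urlset":
        problems.append("root element is not a namespaced <urlset>")

    seen = set()
    for loc in root.iter(f"{SITEMAP_NS}loc"):
        url = (loc.text or "").strip()
        parts = urlparse(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute http(s) URL: {url!r}")
        elif url in seen:
            problems.append(f"duplicate URL: {url}")
        seen.add(url)
    return problems
```

An empty list means none of these particular checks failed; it does not guarantee that a search engine will accept or index every URL.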

Request Re-Indexing of New Content

Staying current with Google’s fast-paced indexing landscape is crucial for maintaining optimal SEO performance. For fresh or updated content, LinkGraph’s SEO experts recommend using Google Search Console’s feature to request re-indexing, so that newly added or modified pages are recognized and evaluated by Google in a timely manner.

Initiating a re-indexing request prompts Googlebot to revisit the content, potentially hastening the inclusion of updates in Google search results. Taking this initiative often leads to quicker reflections of changes in search rankings, an essential consideration in the competitive digital arena:

- Access Google Search Console and navigate to the URL inspection tool.

- Enter the URL of the new or updated page you wish to index.

- Click the “Request indexing” button to trigger Googlebot’s re-evaluation process.

2. Create Mobile-Friendly Webpages

In an era where mobile devices dominate internet access, ensuring that a website is mobile-friendly has become a cornerstone of search engine optimization.

Companies acknowledge that a significant share of their visitors now come from a variety of devices, thereby necessitating the implementation of responsive design techniques that guarantee a consistent user experience across all platforms.

Conducting thorough mobile usability testing, optimizing page speed for mobile devices, and avoiding mobile-specific crawl errors are pivotal measures that underscore the significance of mobile optimization in bolstering a site’s standing in Google search results.

Through such careful attention to mobile accessibility, businesses can effectively cater to the modern user’s needs, fundamentally reinforcing their SEO strategy and positioning within the competitive digital marketplace.

Use Responsive Design Techniques

Responsive design techniques serve as the scaffold for crafting websites that proffer an uncompromising user experience, regardless of the device in hand. By employing fluid grids, flexible images, and CSS media queries, developers create a single, dynamic site that intuitively conforms to the screen sizes and orientations of tablets, smartphones, and desktops.

The implementation of responsive design extends beyond mere aesthetics; it is a crucial factor that search engines like Google employ to determine a site’s ranking in their results. Mobile responsiveness ensures site content is accessible and legible across all devices, thereby catering to users’ expectations and encouraging engagement:

| Design Element | Role in Responsiveness | Impact on User Experience |
|---|---|---|
| Fluid Grids | Adapt layout to available screen real estate | Ensures content is always well-organized and readable |
| Flexible Images | Resize within grid parameters | Prevents visual distortion and enhances load times on various devices |
| CSS Media Queries | Apply different styling rules based on device characteristics | Delivers a tailored viewing experience, optimizing navigation and interaction |

Perform Mobile Usability Testing

Mobile usability testing represents an essential process in the refinement of user experience, specifically tailored for the mobile user. This rigorous analysis detects navigational impediments, assesses touch target sizes and validates the mobile-friendly nature of interactive elements to ensure seamless operation across the diversity of mobile devices.

LinkGraph’s meticulous attention to mobile usability testing, as part of their comprehensive SEO services, ensures that clients’ websites not only meet but exceed the expectations of the mobile-centric market. Such dedication is pivotal in securing higher engagement rates and underpinning the efficacy of the site’s overall SEO performance on mobile search engine results pages.
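
One small, automatable part of mobile usability testing is confirming that a page declares a responsive viewport. The sketch below parses HTML with Python’s standard library and looks for a `meta` viewport tag containing `width=device-width`. The class and function names are illustrative assumptions, and this single check is no substitute for testing on real devices.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport_content = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "viewport":
            self.viewport_content = attr_map.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport sized to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    content = finder.viewport_content
    if content is None:
        return False
    return "width=device-width" in content.replace(" ", "").lower()
```

A page that fails this check is likely to render at desktop width on phones, which is usually worth fixing before any finer-grained usability work.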

Optimize Page Speed for Mobile

Optimizing page speed for mobile devices is not merely an enhancement; it is a prerequisite for flourishing in a mobile-first world. With the majority of search queries now occurring on mobile platforms, a swift page response is vital to user satisfaction and search engine rankings alike.

A site’s ability to load quickly on smartphones and tablets directly influences bounce rates and user engagement. This performance metric is a critical factor that can markedly differentiate a mobile site in a crowded market:

- Minimize server response time to enhance mobile user experience.

- Compress images and use modern formats like WebP for faster mobile page loading.

- Implement efficient code and reduce the number of redirects to improve mobile speed.

LinkGraph, with their strategic approach to SEO, firmly grasps the significance of mobile page speed optimization. Their SEO services work diligently to fine-tune clients’ sites, ensuring that visitors on mobile devices experience quick, uninterrupted access to content, thereby elevating the website’s standing in search engine results pages.

Avoid Mobile-Specific Crawl Errors

Avoiding mobile-specific crawl errors is paramount in sustaining a website’s SEO vitality. Mobile-specific errors, such as unplayable content or blocked resources, need swift identification and resolution to prevent detrimental impacts on search rank and user experience.

LinkGraph’s SEO services include meticulous inspection and adaptation of websites to ensure compatibility with Google’s mobile indexation standards. This attention to detail ensures that all mobile visitors encounter a frictionless experience, a factor increasingly critical to search engine result page standings:

| Error Type | Description | SEO Impact |
|---|---|---|
| Unplayable Content | Content that cannot be played on mobile devices, such as certain types of videos or games | Leads to poor user experience and potential decreases in mobile search ranking. |
| Blocked Resources | Site resources such as scripts, stylesheets or images that are blocked for crawlers | Prevents Googlebot from rendering pages correctly, affecting how they are indexed and ranked. |
| Mobile Interstitials | Intrusive pop-ups or interstitials that impede user access to content on mobile devices | Can result in a penalization from Google, as they harm mobile user experience. |
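
Blocked resources are often caused by overly broad robots.txt rules. As a rough sketch, Python’s standard `urllib.robotparser` module can report which script or stylesheet URLs a given user agent may not fetch. The function name and example paths are assumptions; note also that this parser follows the basic robots.txt rules and may not match every extension Googlebot supports, such as wildcards.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, resource_urls: list[str],
                      user_agent: str = "Googlebot") -> list[str]:
    """Return the resource URLs that robots.txt disallows for the user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: *\nDisallow: /assets/js/\n"
resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]
print(blocked_resources(robots, resources))
```

Any script or stylesheet that appears in the result is a candidate for an explicit allow rule, since crawlers need those files to render the page as users see it.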

By leveraging advanced practices and the expertise of seasoned professionals, LinkGraph champions the elimination of mobile-specific obstacles, nurturing the organic growth of client websites in an ever-evolving digital ecosystem.

3. Update Content Regularly

Securing a prominent position in today’s dynamic search engine results necessitates not just a foundation of strong SEO practices but a commitment to the ongoing refinement of website content.

Establishing a content update calendar serves as a systematic approach to keep a website vibrant and informationally relevant.

Refreshing outdated articles and pages with current insights, integrating fresh and pertinent content, and applying comprehensive content audits are crucial endeavors to keep a website aligned with evolving user interests and search engine algorithms.

Each of these strategies converges to foster an environment of continuous improvement, reinforcing a website’s relevance and authority in its respective niche.

Establish a Content Update Calendar

Staying ahead in the digital arena means updating content with foresight and precision. A content update calendar stands as a beacon for this endeavor, guiding the strategic deployment of SEO-rich revisions and new material, affording a site sustained visibility and relevance.

An efficient calendar underscores the cyclical nature of content refinement, mapping out when a webpage or blog post will undergo updates to maintain accuracy and engage a discerning customer base. Streamlined coordination of content updates ensures consistency in messaging and the optimal use of crawl budget for indexing:

- Detail monthly themes to align content with current industry trends and search queries.

- Schedule weekly audits to identify web pages in need of updated information or SEO enhancements.

- Allocate specific dates for publishing new content that answers the evolving needs and interests of the target audience.

Refresh Outdated Articles and Pages

Refreshing outdated articles and web pages is an essential segment of maintaining a website’s relevance and authority. Through careful analysis and updating, businesses gift their content a new lease on life, engaging users with the most current and accurate information available.

This practice not only sustains user interest but also signals to search engines that the website is a living document, constantly evolving to meet the changing landscape of information and user needs:

- Analyze page metrics to determine which content is underperforming or outdated.

- Implement current industry data and research to revitalize existing content.

- Revise page title and meta descriptions to align with updated information and target keywords.

Such efforts are not superficial; they underpin the website’s expertise, authoritativeness, and trustworthiness (E-A-T, since expanded by Google to E-E-A-T with the addition of experience) in the eyes of both users and search engine algorithms. A methodical refurbishment of older content reinforces the foundation upon which a site’s search performance thrives.

Add Fresh and Relevant Content

Injecting a stream of fresh and relevant content into a website is a critical maneuver that invigorates a brand’s digital presence and amplifies its resonance with contemporary search engine demands. LinkGraph’s SEO services adeptly curate and infuse engaging, topical content that not only piques the interest of visitors but also aligns with search engine metrics for content quality and relevance.

The addition of new material acts as a beacon to search engines, signaling that a company remains current and informative, a key aspect that can catapult its web pages to the summit of search engine results. LinkGraph’s expertise ensures that each piece of content is meticulously crafted to address the latest trends and user queries, effectively positioning clients as thought leaders in their respective fields.

Utilize Content Audits for Improvement

Through the implementation of regular content audits, businesses can methodically improve their digital footprint across the vast terrain of the internet. LinkGraph’s SEO services integrate these audits into their comprehensive approach, meticulously scrutinizing each facet of a client’s content to identify areas ripe for optimization, ensuring their website embodies the pinnacle of relevancy and utility.

Audit outcomes serve as a roadmap, emboldening companies to enact informed enhancements that resonate with both search engines and users. The precision of this process, conducted by LinkGraph’s seasoned experts, empowers clients to refine their SEO strategy, nurturing a website’s authority and enhancing its ability to compete for top placement in search engine results.

4. Submit a Sitemap to Each Search Engine

In the realm of search engine optimization, the submission of a sitemap is regarded as a fundamental step toward ensuring that each page of a website is acknowledged and indexed by various search engines.

A comprehensive XML sitemap acts as a blueprint, guiding search engines through the intricate pathways of a website, enabling them to effortlessly parse through its structure.

Attention to detail is crucial, as including only indexable URLs optimizes the crawling process, saving precious crawl budget and enhancing page visibility.

Timely updates to the sitemap after site modifications are critical to reflect the most accurate representation of a site’s content landscape.

While the focus often lies on dominant search engines, comprehensive SEO strategies encompass sitemap submissions to an array of search engines, broadening the scope of visibility and driving more targeted traffic.

LinkGraph’s expertise in crafting and distributing sitemaps ensures that its clients achieve a robust online presence across multiple platforms.

Create a Comprehensive XML Sitemap

In the world of Search Engine Optimization, the development of a comprehensive XML sitemap represents a critical cornerstone. This document enables search engines to navigate a site’s content with ease, ensuring that no webpage goes unnoticed. LinkGraph’s SEO services prioritize the creation of meticulously structured XML sitemaps, emphasizing the inclusion of all indexable URLs to maximize the effectiveness of search engine crawlers’ visits.

LinkGraph’s approach entails a customized blueprint for each client, tailored to present the website’s hierarchy in the most search-engine-friendly manner. This ensures efficient crawling and indexation of every valuable webpage, directly contributing to improved visibility and higher positioning in search engine results. Moreover, their expertly crafted sitemaps adhere to search engines’ best practices, reinforcing their clients’ online domains as trustable and authoritative sources in their respective niches.
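
For readers who want to see what such a file contains, here is a minimal sketch that builds a sitemap following the sitemaps.org protocol using only Python’s standard library. The function name and the input shape (pairs of URL and last-modified date) are assumptions made for illustration; it does not describe LinkGraph’s own tooling.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/", "2024-02-01"),
]))
```

Only `loc` is required for each entry under the protocol; `lastmod` is included here because it helps crawlers spot pages that have changed.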

Include Only Indexable URLs in Sitemaps

In the tapestry of search engine optimization, the discerning inclusion of only indexable URLs within sitemaps is a move of strategic acumen. LinkGraph’s experts understand that a sitemap chock-full of accessible URLs for Googlebot and other crawlers is key to making each webpage a contender in the digital landscape.

Their SEO services focus on the deliberate elimination of URLs that wield noindex tags or those leading to duplicate content, streamlining the indexation process. This precise selection ensures that each URL within the sitemap serves as a robust pillar supporting the overarching SEO strategy, optimizing the pathways for search engines to recognize and classify the site’s valuable content.
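
The selection rule described above can be expressed as a simple filter. In this sketch, the `Page` record and its fields are hypothetical stand-ins for whatever a site crawl or CMS export provides; the rule keeps only pages that return 200, carry no noindex directive, and are their own canonical URL.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical crawl record for a single URL."""
    url: str
    status_code: int
    noindex: bool
    canonical_url: str


def sitemap_candidates(pages: list[Page]) -> list[str]:
    """Keep URLs that are live, indexable, and canonical to themselves."""
    return [
        page.url
        for page in pages
        if page.status_code == 200
        and not page.noindex
        and page.canonical_url == page.url
    ]
```

Pages whose canonical points elsewhere are treated as duplicates here, which matches the intent of leaving duplicate content out of the sitemap.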

Update Sitemap After Site Changes

Maintaining an up-to-date sitemap after any site changes is a pivotal element of a website’s search engine optimization strength, because it signals to search engines that new pages require attention or that older pages have been improved or removed. Such diligent updates help search engines pick up the latest content sooner and ensure the sitemap remains an accurate reflection of the website’s current structure.

| Action | Purpose | Result |
|---|---|---|
| Addition of New Pages | To expand the website’s reach with fresh content | New pages are quickly indexed and ranked |
| Removal of Obsolete Pages | To keep the website relevant and up-to-date | Prevents indexing of non-existent content which streamlines search relevance |
| Modification of Existing Pages | To reflect updates and ensure content accuracy | Enhances the quality of search listings with the most current information |

Regular revisions to the sitemap post alterations become a cornerstone of an effective SEO blueprint, ensuring search engines have a lens into the site’s evolution. The practice of updating the sitemap becomes not just maintenance but proactive management of how content is accessed and valued, shaping the site’s authority and relevance in the digital expanse.
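
Keeping the sitemap in step with the site comes down to comparing two sets of URLs. The sketch below assumes both sets are already available (for example, from the current sitemap and from a fresh crawl); the function name and return shape are illustrative.

```python
def sitemap_changes(sitemap_urls: set[str], live_urls: set[str]) -> dict[str, list[str]]:
    """List URLs to add to, and remove from, the sitemap after site changes."""
    return {
        "add": sorted(live_urls - sitemap_urls),      # new pages missing from the sitemap
        "remove": sorted(sitemap_urls - live_urls),   # sitemap entries that no longer exist
    }


current = {"https://example.com/", "https://example.com/old-offer"}
live = {"https://example.com/", "https://example.com/new-guide"}
print(sitemap_changes(current, live))
```

Modified pages stay in both sets, so they would be handled separately, typically by refreshing their `lastmod` value.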

Submit Sitemaps to Other Search Engines

Expanding a website’s SEO influence involves meticulous attention beyond the dominion of the largest search engines. The submission of sitemaps to a broader spectrum of engines amplifies a site’s reach, tapping into unique user bases and search algorithms that may present untapped opportunities for traffic and visibility.

LinkGraph navigates the submission process with precision, ensuring that each sitemap adheres to the individual specifications and protocols unique to alternative search platforms. This holistic approach to sitemap distribution facilitates a more diverse web presence, enhancing the probability of high search engine results pages placement across the entire search ecosystem:

- Centrally manage submission of sitemaps to ensure consistency and efficiency.

- Customize sitemaps according to the specifications of each search engine.

- Monitor the crawl status and indexing success on each platform for comprehensive oversight.

5. Optimize Your Interlinking Scheme

Chiseling a path to SEO excellence involves not just the creation of stellar content, but also a strategic blueprint for how that content is interconnected within a website’s broader architecture.

The optimization of a site’s interlinking scheme is a sophisticated yet integral facet of search engine optimization that can dramatically propel a site’s visibility and user experience.

It calls for the meticulous construction of a logical site structure, precise use of anchor text, deep linking strategies, and consistent audits for broken links.

These practices synergize to create a more cohesive and navigable web presence, further cementing a website’s position within the search engine results pages and helping to establish a formidable foothold in the digital marketplace.

Build a Logical Site Structure

Establishing a logical site structure serves as the framework upon which search engine crawlers can navigate and discern a website’s hierarchy. LinkGraph’s diligent application of SEO best practices, including information architecture designed for clarity and precision, ensures that each web page is logically categorized and easily discoverable. Their expertise diminishes confusion for both users and search engines, bolstering a website’s SEO performance.

In the quest for search optimization excellence, LinkGraph champions the integration of coherent site structures that amplify indexability. Intuitive categorization and coherent links create a roadmap for search engines, enabling simpler tracking of a site’s myriad pages. This organized approach by LinkGraph facilitates swift indexation, elevating a site’s visibility within Google search results and enhancing user navigability.

Use Anchor Text Strategically

The strategic utilization of anchor text within a website’s interlinking scheme is pivotal to guiding search engines toward understanding the context and relevance of linked content. LinkGraph emphasizes the importance of descriptive, keyword-rich anchor text to strengthen internal linking and SEO potential, carefully avoiding over-optimization which can detract from the site’s credibility in the eyes of search engine algorithms.

LinkGraph recognizes that the precision in anchor text selection can significantly impact a webpage’s ability to rank for targeted queries. By crafting anchor text that is concise and aligned with the content’s core themes, they ensure a website’s interlinking profile contributes to a coherent user journey and search engines’ comprehension of the site’s informational structure, thereby enhancing search result placement.

Link Deep Within Your Site’s Pages

LinkGraph harnesses the potency of deep linking, a technique that fosters user engagement and bolsters page authority within a site. By embedding links that connect top-level content to deeper, more substantive material, they elevate the user journey, driving traffic to less visible yet high-value pages.

- Assessing the site’s content hierarchy identifies ideal candidates for deep linking.

- Links are woven seamlessly into relevant, high-traffic pages, drawing readers deeper into the site.

- Strategic use of anchor text ensures visibility and relevance to search engine crawlers, enhancing the page’s authority.

This strategic interweaving of internal pathways not only enhances the user experience but also signals to search engines the depth and breadth of a site’s offerings. By diversifying the entry points to essential information, LinkGraph’s clients witness a strengthened network of interpage connectivity that supports the search engine’s indexing and ranking process.

Audit Internal Links for Broken Links

An integral component of maintaining a website’s interlinking scheme is the auditing of internal links for any broken connections. Identifying and repairing broken links are critical tasks that sustain a site’s integrity, preventing the erosion of user experience and preserving link equity.

Consistent audits underpin the assurance that all navigational pathways within a site lead visitors to the intended destination, reinforcing the website’s structure and search engine standing. LinkGraph’s thorough review of internal links, with a focus on identifying and rectifying any broken links, reflects their dedication to optimization and superior SEO outcomes:

| Task | Purpose | Outcome |
|---|---|---|
| Identify Broken Links | To maintain website integrity and user navigation | Directs users and crawlers to the correct pages, enhancing SEO |
| Repair Broken Links | To restore full site functionality and preserve link equity | Improves site reliability and search rankings by removing dead ends |
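
The "identify" step can be sketched without any network access if the site’s pages are already available as HTML. In the example below, `pages` is an assumed mapping from each known URL to its HTML; an internal link counts as broken when it resolves to a same-host URL that is not in that mapping. A real audit would also confirm each target’s HTTP status.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def find_broken_internal_links(pages: dict[str, str]) -> list[tuple[str, str]]:
    """Return (source URL, target URL) pairs whose internal target is unknown."""
    broken = []
    for source_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            target = urljoin(source_url, href).split("#")[0]  # resolve and drop fragment
            same_site = urlparse(target).netloc == urlparse(source_url).netloc
            if same_site and target not in pages:
                broken.append((source_url, target))
    return broken
```

Each pair in the result names both the page that needs editing and the dead target, which is the information needed for the "repair" step.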

6. Deep Link to Isolated Webpages

In the intricate web of search engine optimization, deep linking stands out as an essential tactic for bolstering the discoverability and value of isolated webpages.

By identifying and fortifying under-linked pages within a website, organizations can facilitate smoother navigation for users and search engines alike.

Strategic placement of deep links not only enhances the interconnectedness of a site’s content but also encourages backlinks from external sources, further solidifying the site’s SEO footprint.

Monitoring the resultant impact on isolated pages becomes a data-driven exercise, offering profound insights into the efficacy of deep linking efforts in raising a page’s profile in the digital ecosystem.

Identify Under-Linked Pages

Within the realm of optimizing search engine performance, identifying under-linked pages is a strategic imperative. LinkGraph’s SEO services meticulously analyze a website’s link structure to spotlight pages with limited internal connectivity, identifying prime candidates for the deep linking process. This diagnostic approach is crucial in revealing content islands that, once better integrated, can significantly improve the site’s overall SEO landscape.

Expert scrutiny by LinkGraph’s seasoned professionals uncovers these hidden gems within a website’s architecture. Reinvigorating under-linked pages by establishing relevant, meaningful connections not only bolsters the individual page’s SEO value but also enhances the site’s coherence and navigational experience for visitors and search engines alike.
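
One simple way to surface under-linked pages is to count inbound internal links per page. The sketch below assumes a hypothetical `link_graph` mapping each page to the pages it links to, and flags pages at or below an arbitrary threshold; neither the data shape nor the threshold reflects a specific LinkGraph tool.

```python
from collections import Counter


def under_linked_pages(link_graph: dict[str, list[str]], max_inbound: int = 1) -> list[str]:
    """Return pages that receive at most `max_inbound` links from other pages."""
    inbound = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):      # count each linking page once
            if target != source:         # ignore self-links
                inbound[target] += 1
    return sorted(page for page in link_graph if inbound[page] <= max_inbound)


graph = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/", "/pricing", "/blog/post-1"],
    "/pricing": ["/"],
    "/blog/post-1": ["/blog"],
    "/orphan": ["/"],
}
print(under_linked_pages(graph))  # ['/blog/post-1', '/orphan']
```

Pages with zero inbound links are effectively orphaned and are usually the first candidates for new deep links.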

Strategize Deep Link Placement

Masterful deep link placement requires an insightful alignment with user intent and page relevance. Deciding where to embed deep links within content calls for awareness of the user journey and the contextual relevance each link brings. LinkGraph’s approach ensures each deep link serves a dual purpose, supporting user navigation and reinforcing the topical authority of both connecting pages.

| Deep Linking Step | Objective | SEO Benefit |
|---|---|---|
| Select Target Pages for Deep Links | To identify and prioritize under-linked content that adds value to the user | Improves content visibility and relevance in the eyes of search engines |
| Determine Contextually Relevant Anchor Points | To integrate deep links seamlessly into high-traffic areas | Enhances user experience by providing direct access to related content |

LinkGraph’s astute development of a deep linking strategy is further refined by the careful selection of anchor text, where it is paramount that the choice of words accurately reflects the linked content. This meticulous attention to detail helps deep links amplify the site’s relevance and clarity, encouraging search engines to reward the interconnected content with higher rankings.

Encourage External Deep Links

Cultivating external deep links is a strategy LinkGraph deploys to reinforce the SEO stature of its clients’ isolated webpages. By drawing high-quality, relevant links from respected external sources to specific internal pages, they enhance the domain’s authority and visibility within search engine results.

LinkGraph employs targeted outreach and content marketing techniques to attract inbound links that drive directly to deep, resource-rich pages. This practice, in turn, infuses the website with robust link equity, fostering an upturn in page authority that yields tangible improvements in search rankings.

Monitor the Impact on Isolated Pages

Assiduously monitoring the impact of deep linking on isolated pages yields critical insights into the efficacy of such SEO enhancements. LinkGraph’s SEO services incorporate advanced analytical tools that track a myriad of metrics, including page traffic, bounce rates, and conversion improvements, to gauge the success of linking strategies and iteratively refine their approach.

Understanding the cascade of effects from deep linking efforts allows LinkGraph to make data-driven decisions that bolster a webpage’s prominence and search performance. By regularly assessing the uptick in user engagement and organic search visibility, they ensure that isolated pages gain traction and contribute more significantly to the website’s SEO objectives.

7. Minify On-Page Resources & Reduce Load Times

As digital competition intensifies, the optimization of on-page resources is a critical SEO technique for ensuring that a website not only retains user attention but also gains favor from search engines.

Minimizing the size of CSS, JavaScript, and HTML files is paramount in reducing page loading times, a factor that search engines take into account when ranking sites.

In this competitive landscape, the deployment of strategies such as image and multimedia optimization, effective use of browser caching, and the integration of a Content Delivery Network (CDN) promises substantial gains in site performance and user experience.

These optimizations are not merely enhancements; they are essential components of a comprehensive SEO strategy that bolsters a website’s visibility, usability, and overall search engine standing.

Apply Minification to CSS, JavaScript, and HTML

Minification is a technique that streamlines CSS, JavaScript, and HTML files by removing characters that do not contribute to the execution of the code, such as whitespace, new lines, and comments. LinkGraph’s professionals apply this process to reduce file sizes, so that files download and parse sooner, leading to faster page load times and an improved user experience.

The prudent application of minification, as undertaken by LinkGraph’s adept developers, serves as a testament to their commitment to refined web page optimization. This practice significantly improves bandwidth usage and loading speed, contributing to a website’s enhanced search engine rankings and providing a tangible boost to the site’s overall SEO health.
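As a rough illustration of what minification does, the sketch below strips comments and redundant whitespace from a small stylesheet. The function name `minify_css` and the sample rule are assumptions made for this example, not LinkGraph’s tooling, and the regular expressions are deliberately naive: they do not protect quoted strings or whitespace-sensitive selectors, so a dedicated minifier remains the safer choice in production.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: removes comments and collapses whitespace."""
    # Drop /* ... */ comments, including multi-line ones.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse every run of whitespace (spaces, tabs, newlines) to one space.
    css = re.sub(r"\s+", " ", css)
    # Remove the spaces that surround structural punctuation.
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)
    # The last semicolon inside a block is optional.
    css = css.replace(";}", "}")
    return css.strip()


sample = """
/* primary button */
.button {
    color: #fff;
    margin: 0 auto;
}
"""

minified = minify_css(sample)
print(minified)
print(f"{len(sample)} characters reduced to {len(minified)}")
```

The same idea applies to JavaScript and HTML, although those formats have more edge cases and are best left to purpose-built tools.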

Optimize Images and Multimedia

Image and multimedia optimization stands as a cornerstone of LinkGraph’s SEO strategy, critically enhancing page load speeds while maintaining visual fidelity. The company’s adept use of compression technologies effectively reduces file sizes without compromising on the clarity or impact of visual content, impressively balancing functionality with aesthetic appeal.

LinkGraph’s advanced strategies also extend to the selection of appropriate file formats and resolutions tailored to suit different devices and contexts, thereby ensuring multimedia content contributes positively to the site’s SEO rather than becoming a hindrance. This nuanced approach augments user engagement while simultaneously minimizing load times, crucial for achieving top-tier search engine rankings.

Leverage Browser Caching Techniques

Leveraging browser caching techniques is a fundamental strategy for improving page load times, which holds weight in search engine algorithms. LinkGraph’s proficiency extends to configuring server settings that instruct browsers on how long to store resources, reducing the need for repeated resource downloading during subsequent visits.

Effective browser caching minimizes server load and enhances user experience by enabling quicker page rendering. These techniques are essential for optimizing a website’s SEO and are meticulously implemented by LinkGraph to ensure clients’ websites perform at their peak:

| Caching Element | Function | SEO Advantage |
|---|---|---|
| Expires Headers | Dictate how long browsers should cache specific resources | Resources load faster, improving user experience and contributing to SEO |
| Cache-Control Headers | Provide caching policies for clients and proxies | Enhances the efficiency of resource delivery, leading to better page performance |
| ETags | Validate cached resources to determine if they match the server versions | Ensures fresh content is rendered, balancing performance with up-to-date content |
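To make the three headers in the table concrete, here is a minimal sketch of how a server might compute them for a static resource. The helper names `caching_headers` and `is_not_modified`, the one-day lifetime, and the hash-based ETag are illustrative assumptions; real servers and frameworks usually generate these values for you, and a full `If-None-Match` check also has to handle weak validators and lists of tags.

```python
import hashlib
import time
from email.utils import formatdate
from typing import Optional


def caching_headers(body: bytes, max_age_seconds: int = 86400,
                    now: Optional[float] = None) -> dict:
    """Build Cache-Control, Expires, and ETag headers for a resource."""
    now = time.time() if now is None else now
    # A content hash changes whenever the file changes, which makes it a
    # convenient validator. Truncating to 16 hex digits is arbitrary.
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    return {
        "Cache-Control": f"public, max-age={max_age_seconds}",
        "Expires": formatdate(now + max_age_seconds, usegmt=True),
        "ETag": etag,
    }


def is_not_modified(request_headers: dict, response_headers: dict) -> bool:
    """True when the browser's cached copy still matches (HTTP 304 case)."""
    return request_headers.get("If-None-Match") == response_headers["ETag"]


headers = caching_headers(b"body { margin: 0 }", max_age_seconds=3600)
for name, value in headers.items():
    print(f"{name}: {value}")
```

When `is_not_modified` returns true, the server can answer with `304 Not Modified` and an empty body, which saves the browser from downloading the resource again.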

Use a Content Delivery Network (CDN)

Integrating a Content Delivery Network (CDN) is a strategic move employed by LinkGraph to optimize the delivery speed of website content to users worldwide. This network of servers effectively distributes the workload, ensuring that irrespective of geographical location, visitors receive data from the nearest server, thus dramatically reducing latency and accelerating page load times.

Employing a CDN goes beyond improving user experience; it is a pivotal component in LinkGraph’s comprehensive SEO strategy. By diminishing site loading duration, the CDN enhances website performance metrics which are essential in gaining a competitive edge in search engine rankings, directly influencing the SEO success of a client’s digital presence.

8. Fix Pages With Noindex Tags

Within the framework of optimizing a website’s visibility, scrutinizing the proper use of noindex tags emerges as a pivotal aspect of search engine optimization.

Erroneously deployed noindex tags can render key pages invisible to search engines, costing the site substantial search visibility.

A comprehensive audit for incorrect noindex tags, the judicious adjustment of robots meta tags, and the assurance of key pages’ indexability are indispensable steps towards rectifying the accessibility of a website’s content.

Mastering these facets offers the potential to unleash the full indexation prowess of a website, realigning a brand’s digital assets with the discerning eyes of search engine crawlers.

Audit Pages for Incorrect Noindex Tags

Executing an audit for incorrect noindex tags is a meticulous exercise of paramount importance within LinkGraph’s SEO services. This auditing practice uncovers instances where noindex directives might be misapplied, preventing essential pages from appearing in search engine results and thus curtailing their visibility. Correcting such errors is essential to ensure that all significant content has the opportunity to be indexed and found by the target audience.

LinkGraph’s professionals conduct a comprehensive examination of a website’s meta tags, identifying and amending inaccuracies that could undermine a website’s indexation by search engines. Such vigilance in auditing ensures that clients’ websites retain their intended visibility, bolstering their position in the maze of online search results:

| Audit Task | Purpose | SEO Outcome |
|---|---|---|
| Detection of Noindex Tags | To identify pages inadvertently blocked from indexing | Prepares the page for proper indexation and visibility in search results |
| Correction of Meta Tags | To rectify the improper use of noindex directives | Ensures valuable pages are accessible for crawling and indexing |
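The detection step can be sketched with Python’s standard-library HTML parser. The class `RobotsMetaParser` and the function `has_noindex` are names invented for this example, and the sketch only inspects `<meta>` tags in HTML that has already been fetched; a noindex directive can also arrive in an `X-Robots-Tag` HTTP response header, which a fuller audit would check as well.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in robots-style meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        # "robots" addresses all crawlers; "googlebot" targets Google only.
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for token in attr.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True when the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool(parser.directives & {"noindex", "none"})


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

Running a check like this across every URL in the sitemap gives a quick list of pages that are submitted for indexing yet marked noindex.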

Adjust Robots Meta Tags Appropriately

Appropriate configuration of robots meta tags is a critical step in ensuring that search engines correctly understand which pages to index and which to disregard. LinkGraph’s approach adjusts these tags so that only content meant for public consumption appears in search results. Because a noindex tag only keeps a page out of the index and does not restrict access to it, genuinely sensitive pages still need their own access controls.

Setting robots meta tags to “index, follow” is the default state for pages intended for search visibility, while “noindex, nofollow” tags are reserved for those that should remain hidden. LinkGraph’s expertise in tag refinement affirms that no valuable page remains unindexed due to a misconfigured directive:

| State | Robots Meta Tag | Intended Function |
|---|---|---|
| Public Content | index, follow | Guides search engines to index the page and follow the links on it |
| Private Content | noindex, nofollow | Prevents search engines from indexing the page and following its links |

Ensure Key Pages Are Indexable

Ensuring that pivotal pages are indexable forms the keystone of LinkGraph’s comprehensive SEO strategy. This meticulous process involves the assessment and configuration of server responses, content accessibility, and metadata to guarantee that search engines can seamlessly crawl and add crucial pages to their index, thereby maximizing visibility and accessibility to users.

Within the ambit of SEO, LinkGraph’s technique ensures key pages are unimpeded by technical barriers that could stifle search engine crawlers. Their professional acumen leads to the optimization of HTTP status codes and the rectification of any linking issues, paving the path for key content to be discovered and ranked, enhancing a website’s presence in the digital landscape.
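One simple way to picture the status-code review is a function that maps each response code to the problem it implies for indexing. The function name, the wording of the messages, and the `crawl_results` data below are all hypothetical; in practice the status codes would come from a site crawler or from the Search Console coverage reports.

```python
from typing import Optional


def indexability_problem(status_code: int) -> Optional[str]:
    """Return a short description of the issue, or None if the page is fine."""
    if status_code == 200:
        return None
    if status_code in (301, 302, 307, 308):
        return "redirects elsewhere; link to the final URL instead"
    if status_code in (404, 410):
        return "page is missing"
    if 500 <= status_code < 600:
        return "server error prevents crawling"
    return f"unexpected status {status_code}"


# Hypothetical crawl output: path -> HTTP status code.
crawl_results = {"/pricing": 200, "/old-pricing": 301, "/guide": 404}

for path, status in crawl_results.items():
    problem = indexability_problem(status)
    if problem:
        print(f"{path}: {status} ({problem})")
```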

9. Set a Custom Crawl Rate

Optimizing a website’s crawlability is a nuanced aspect of search engine optimization, involving the strategic regulation of how often search engine bots access a site’s content.

There is a delicate balance to strike: crawl too infrequently, and a website may miss out on timely indexing of updated content; crawl too frequently, and the site risks overwhelmed server resources, potentially leading to slower response times or even downtime.

By establishing a custom crawl rate, website administrators can guide search engine bots to crawl their site at a pace that aligns with both their content update frequency and server capacity.

This level of control is instrumental in maintaining a robust online presence, ensuring content is indexed efficiently without impairing site performance.

Understand When to Adjust Crawl Rate

Discerning the optimal moments to adjust a website’s crawl rate is essential for webmasters aiming to balance content freshness with site performance. Key occasions for tweaking crawl frequency include post-site migration or following significant content updates, ensuring rapid indexing of new or revised pages.

- Evaluate server capacity and response time to set a baseline crawl rate.

- Monitor traffic patterns and peak hours to avoid potential crawl-induced strain.

- Adjust the crawl rate in response to major site updates to expedite indexing.

Establishing a controlled crawl rate becomes particularly critical when a website experiences substantial changes in its content infrastructure or when analytics show a shift in user behavior. Such proactive adjustments secure efficient resource allocation, equipping search engine bots to reflect a website’s current status without impeding user experience.

Set Crawl Rate via Search Console

LinkGraph’s strategic SEO services recognize the importance of optimizing a website’s crawl rate through Google Search Console. Their team enables clients to fine-tune how often search engine bots request website content, setting a crawl rate that balances content discovery against server health, so that search engines encounter fresh content without overburdening the site’s resources.

Adjusting the crawl rate is a pivotal component of LinkGraph’s meticulous SEO management, ensuring a website’s new or updated content is indexed at an optimal pace. Through Google Search Console, they navigate the delicate intricacies of SEO, customizing the frequency of crawls to facilitate prompt and efficient indexation while preemptively safeguarding against potential server overload.

Monitor Server Log for Crawl Frequency

Monitoring the server log for crawl frequency becomes an exercise in precision, allowing LinkGraph’s professionals to glean actionable insights on search engine behavior. By analyzing these logs, they determine the frequency of crawls and the specific pages accessed by Googlebot, enabling the identification of patterns that could inform further optimization strategies.

This data-centric approach to SEO leverages server logs to reveal the influence of a set custom crawl rate on the site’s performance and visibility. The insights unearthed through meticulous log analysis guide adjustments in crawl rate settings, ensuring consistency throughout the search engine’s indexing activities:

| Server Log Analysis Objective | SEO Benefit |
|---|---|
| Determine Crawl Patterns | Optimizes the crawl rate for better indexing and visibility |
| Uncover Indexing Frequency | Ensures a balanced server load and timely content updates |
| Identify Googlebot Activity | Assists in fine-tuning SEO strategies based on crawler behavior |
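A minimal sketch of this kind of log analysis is shown below. It assumes the server writes the common "combined" access log format and identifies Googlebot by its user-agent string alone; the sample lines, IP addresses, and the function name `googlebot_activity` are made up for illustration. Because user agents can be spoofed, a stricter audit would also verify the requesting IP, for example with a reverse DNS lookup.

```python
import re
from collections import Counter

# Combined log format: ip ident user [day:time zone] "METHOD path proto" status size "referer" "agent"
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_activity(lines):
    """Count Googlebot requests per day and per path."""
    per_day, per_path = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            per_day[match.group("day")] += 1
            per_path[match.group("path")] += 1
    return per_day, per_path


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /blog/seo-tips HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2024:06:25:20 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Mar/2024:06:26:01 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/122.0"',
]

per_day, per_path = googlebot_activity(sample_log)
print("Crawls per day:", dict(per_day))
print("Most crawled paths:", per_path.most_common(5))
```

Comparing the per-day counts before and after a crawl rate change shows whether the adjustment had the intended effect.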

10. Eliminate Duplicate Content

Within the complex tapestry of search engine optimization, duplicate content stands as a silent saboteur of indexing efficiency and search engine credibility.

Duplicated content can dilute a website’s relevancy and split page authority, inadvertently stifling a site’s visibility in search results.

The meticulous identification and resolution of these content redundancies are critical to construct a unique and authoritative digital presence.

This process entails utilizing advanced tools to detect similarities, implementing canonical tags to signify preferred pages, and reconfiguring or redirecting content to uphold the singularity of the information provided.

By rigorously addressing duplicate content, websites can hone their SEO strategy, ensuring that every piece of content contributes positively to search ranking performance and user experience.

Locate Duplicate Content With Tools

LinkGraph’s comprehensive SEO services incorporate advanced tools crafted to detect and rectify duplicate content, ensuring each webpage within a client’s portfolio is unique. These sophisticated algorithms are designed to scan and compare content across a breadth of pages, spotlighting duplications that could dilute search engine authority and impede SEO performance.

The deployment of these precise tools plays a crucial role in preserving the integrity of a website’s content strategy. LinkGraph’s experts deftly navigate through digital content, extracting and resolving redundant elements to fortify the website’s singularity, thereby reinforcing its standing in search engine results and enhancing the overall user experience.
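The article does not say which algorithms these tools use. One common technique for spotting near-duplicate text, sketched here under that caveat, is to split each page into overlapping word sequences ("shingles") and compare the sets with Jaccard similarity. The function names, the three-word shingle size, the 0.8 threshold, and the sample pages are all assumptions chosen for the example.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word sequences of the given length."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    count = max(len(words) - size + 1, 1)
    return {tuple(words[i:i + size]) for i in range(count)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    return len(a & b) / len(a | b)


def find_near_duplicates(pages: dict, threshold: float = 0.8) -> list:
    """Return (url_a, url_b, score) for every pair at or above the threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sets, 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, score))
    return pairs


pages = {
    "/a": "Minification removes whitespace and comments from CSS files",
    "/b": "Minification removes whitespace and comments from CSS files",
    "/c": "A content delivery network serves assets from nearby servers",
}
print(find_near_duplicates(pages))
```

Comparing every pair of pages grows quadratically with site size, so large sites typically rely on hashing techniques or dedicated crawlers rather than a direct comparison like this one.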

Apply Canonical Tags Correctly

Correct application of canonical tags is critical in communicating to search engines which version of similar content is the definitive one to index. LinkGraph’s strategic use of these tags prevents search engines from spreading ranking signals across duplicated pages, thereby concentrating SEO efforts and preserving the integrity of the primary content’s search ranking.

By meticulously implementing canonical tags, LinkGraph ensures that clients’ websites avoid the pitfalls of content duplication. This nuanced approach directs search engines to focus on the content that best represents the topic, enhancing the site’s SEO by preventing dilution through replicated material:

| Canonical Implementation Step | Purpose | SEO Impact |
|---|---|---|
| Identify Duplicate Content | To determine which pages contain similar material | Focuses indexing on the primary content source |
| Assign Canonical Tags | Signal search engines to the preferred page for indexing | Consolidates search signals and page authority |
| Verify Tag Accuracy | Ensure proper tag use across the website | Maintains consistent and clean indexation by search engines |
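The verification step in the table can be sketched as a small check that a page declares exactly one canonical URL and that it matches the one you expect. `CanonicalParser`, `check_canonical`, and the example.com URLs are hypothetical names used only for this illustration.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {key: (value or "") for key, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def check_canonical(html: str, expected_url: str) -> str:
    """Return "ok" or a short description of the canonical problem."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing canonical tag"
    if len(parser.canonicals) > 1:
        return "multiple canonical tags"
    if parser.canonicals[0] != expected_url:
        return f"canonical points to {parser.canonicals[0]}"
    return "ok"


variant_page = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
print(check_canonical(variant_page, "https://example.com/shoes"))
```

A filtered or paginated variant of a page would typically carry the same tag, pointing back at the preferred URL.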

Rewrite or Redirect Duplicated Content

Rewriting or redirecting content that exists in multiple locations is a quintessential step in the SEO process. LinkGraph’s expertise in this domain involves rewriting content to create distinctive versions or implementing 301 redirects to guide search engines and users to the most relevant, canonical page, thereby circumventing the negative SEO impacts of content duplication.

In handling the complexities of duplicate content, LinkGraph tailors solutions by assessing the uniqueness of the web copy and the strategic goals of the client. When necessary, content is rephrased to add value and differentiate from similar pages, while in other cases, permanent redirects consolidate traffic and link equity towards the primary, authoritative source favored in search engine rankings.

11. Block Pages You Don’t Want Spiders to Crawl

In the advancing landscape of Search Engine Optimization, the astute management of a website’s crawlability is essential for both protecting sensitive areas and directing search engine spiders to content-rich pages.

With this understanding, businesses must adeptly utilize tools that delineate the boundaries of a search engine’s crawl.

Crafting a meticulously validated robots.txt file serves as the first line of defense, setting the parameters for crawler access.

Strategic disallow directives within this file can protect specific directories from being scanned, while the meta robots tag provides granular control at the page level.

These techniques empower webmasters to sculpt the crawl landscape, ensuring that only the most impactful content is indexed, and bolstering the site’s search engine stature.

Create and Validate Robots.txt File

The creation of a robots.txt file is an astute maneuver within the arsenal of LinkGraph’s SEO services, aimed at dictating which areas of a website should be off-limits to search engine spiders. Such a file is deftly constructed to communicate with crawlers, preventing them from accessing specific directories that may contain duplicate, sensitive, or irrelevant content, thereby ensuring that only the most valuable and pertinent content is scanned and indexed.

LinkGraph underscores the importance of validating the robots.txt file to eliminate any risk of inadvertently blocking crucial content from being indexed, a critical step for maintaining unhindered visibility in search engine results. Their meticulous process vets each directive within the file for accuracy and effectiveness, maintaining an optimized balance between accessible content for indexing and protected areas, ensuring a fortified SEO strategy that aligns with the client’s objectives.
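A lightweight way to validate a robots.txt file is to test it against a list of URLs that must stay crawlable, as in the sketch below. It uses Python’s standard `urllib.robotparser`; the rules, the example.com URLs, and the helper name `blocked_urls` are assumptions for illustration. Note that this parser does not implement every detail of Google’s matching rules (wildcards, for instance), so treat its verdict as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin pages and a duplicate archive.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /archive/duplicates/
"""


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that the given crawler is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Pages that should remain visible in search results.
key_pages = [
    "https://example.com/",
    "https://example.com/blog/seo-tips",
    "https://example.com/pricing",
]

mistakes = blocked_urls(ROBOTS_TXT, key_pages)
print("Key pages blocked by robots.txt:", mistakes)
```

An empty list means none of the key pages is blocked; any URL that does appear points to a directive that needs revisiting.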

Strategically Disallow Specific Directories

Employing strategic disallow directives in the robots.txt file expertly shapes a search engine’s traversal of a website. LinkGraph’s perspicacious approach involves pinpointing directories that do not contribute to the site’s SEO goals, such as administrative pages or duplicate content archives, and expressly instructing crawlers to avoid these areas. This precise guidance enhances the efficiency of the crawl process, ensuring that search engine resources are focused on content that serves the user and bolsters the site’s digital footprint.

By curating a well-defined robots.txt file, clients benefit from a structured approach to site indexing that aligns with their SEO objectives:

- Disallow directives keep superfluous or private pages out of a search engine’s crawl.

- Targeted crawl exclusions preserve server resources and enhance site performance.

- Robust site architecture is maintained by directing spiders to strategically valuable content.

This methodology plays a critical role in cultivating an optimized web presence and maintaining the overall health of the client’s SEO endgame.

Use Meta Robots to Fine-Tune Page-Level Crawl Control

In the multifaceted realm of search engine optimization, meta robots tags provide a nuanced level of control over how individual pages are indexed. LinkGraph harnesses the specificity of these tags, applying ‘noindex’ directives on a page-by-page basis to prevent search engines from indexing non-essential or confidential content. Because a crawler must be able to fetch a page in order to read its meta tags, a page carrying ‘noindex’ should not also be disallowed in robots.txt.

Through strategic implementation of meta robots tags, LinkGraph’s SEO services masterfully direct search engine spiders, facilitating granular governance of content visibility. This targeted approach allows for the exclusion of particular pages from search results, enhancing the website’s overall indexation profile and concentrating the crawl on strategically valuable content to better serve user queries and strengthen search performance.

Conclusion

Maximizing a website’s SEO through proven strategies is crucial for superior indexation and online visibility.

Properly setting up and utilizing Google Search Console, creating mobile-friendly pages, and regularly updating content are foundational to ensuring readiness for search engine crawls.

Submitting comprehensive sitemaps to multiple search engines and maintaining a logical site structure greatly improves discoverability, while employing robust interlinking and deep linking strategies strengthens the site’s internal connections and user navigation.

Moreover, enhancing page load times by minifying resources and leveraging CDNs, along with rectifying noindex tags and eliminating duplicate content, solidifies a website’s competitiveness in search rankings.

Finally, strategic control over crawl rates and page availability to search spiders ensures the right content is indexed, enhancing the website’s search engine stature.

Collectively, these strategies form a multi-faceted approach that can not only improve a site’s presence in search results but also provide an enriched user experience.