Crawl Budget


Maximizing Your Site’s Visibility: Understanding Crawl Budget

In the digital terrain of search engine rankings, understanding and managing a website’s crawl budget is critical for enhanced visibility and user traction.

A site owner may run a strong ecommerce site, yet without careful crawl budget optimization, its most important content risks going undiscovered by searchers.

LinkGraph’s SEO services focus on details like these, making sure that the crawl capacity search engines allot to a site is used efficiently to strengthen its prominence in search results.

This article will guide readers through the intricacies of crawl budget management, empowering them to unlock the full potential of their online presence.

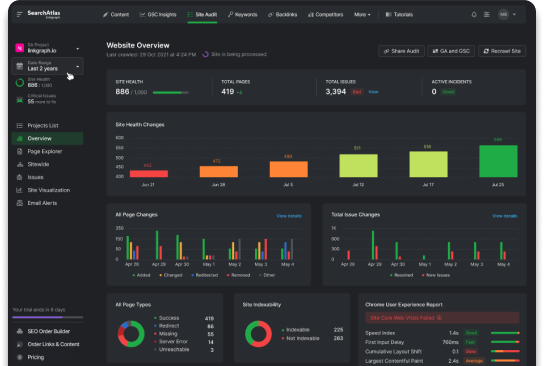

Keep reading to discover how to use LinkGraph’s Search Atlas SEO tool to monitor and refine your site’s crawl budget.

Key Takeaways

- Crawl budget is a vital part of SEO, influencing how search engines crawl and index pages

- LinkGraph’s SEO services optimize websites so they use their crawl budget efficiently

- Site structure and error correction are crucial to effective crawl budget management

- Regular site audits and log file analysis by LinkGraph help maintain search engine visibility

- Strategic adjustments based on crawl insights are essential for sustained SEO success

Decoding the Basics of Crawl Budget

In a competitive digital landscape, a site owner’s path to prominence depends on a solid understanding of search engine optimization (SEO).

One concept critical to online visibility is crawl budget.

The term, important yet often overlooked, refers to the number of pages a search engine’s crawler, such as Googlebot, can and wants to crawl on a given website within a given timeframe.

Understanding and optimizing crawl budget leads to more effective indexing and gives each important page a fair chance to compete in search results.

LinkGraph’s SEO services examine how crawl budget allocation affects how easily searchers can find a site, and how crawl rate and crawl demand balance each other within a website’s overall search presence.

Understanding What Crawl Budget Is

Crawl budget has two components: crawl rate limit and crawl demand. The crawl rate limit is the maximum fetching frequency that Googlebot considers appropriate for a given site without slowing its response times, while crawl demand is Google’s interest in your pages, driven largely by their popularity and freshness.

- Crawl rate limit ensures that the site’s user experience remains unaffected by the crawling process.

- Crawl demand determines which pages are prioritized for crawling based on their perceived value to searchers.

Together, these two factors let the search engine crawl a site’s most significant content without overloading its server. LinkGraph’s SEO services focus on aligning a site’s architecture with these parameters to maximize search visibility and user engagement.

How Crawl Budget Is Allocated

Crawl budget allocation is dynamic: it depends on how easily search engines can discover a site’s pages (for example, through its sitemap) and on how often its content changes. By speeding up server responses and reducing server errors, LinkGraph’s SEO experts keep crawler access timely and efficient, which increases the chances that new or updated pages are indexed.

Through detailed site audits, LinkGraph’s services also identify and correct factors that waste crawl budget, such as redirect chains or duplicate content, so that crawling concentrates on the most important pages. The goal is to steer Googlebot toward content that strengthens a site’s relevance to its target audience and improves its visibility in search results.

The Role of Crawl Budget in Site Visibility

Managing crawl budget strategically is central to improving a site’s visibility. By influencing how often, and which parts of, a site are fetched by search engine crawlers, a skilled SEO agency like LinkGraph can raise a site’s prominence with both search engines and potential customers.

Site owners aiming to reach a wider audience should recognize that a well-managed crawl budget leads to more frequent crawling, so search results reflect the site’s latest offerings sooner. With Search Atlas as a core tool, LinkGraph manages this balance so that a site’s valuable content surfaces reliably, serving both users and search engine requirements.

Interplay Between Crawl Rate and Crawl Demand

Crawl rate and crawl demand work together. The crawl rate limit caps a crawler’s activity to protect server stability and user experience, while crawl demand determines which pages the crawler prioritizes within that cap, favoring pages that are relevant, popular, and fresh.

LinkGraph’s SEO services work on both sides of this relationship: they align crawl rate with server capabilities and increase crawl demand through strategic content marketing, positioning ecommerce sites to make the most of their visibility opportunities.

| Element | Definition | Impact on SEO |
|---|---|---|
| Crawl Rate Limit | The maximum rate of search engine fetches that maintains optimal site performance. | Balances server load, ensuring seamless user interaction. |
| Crawl Demand | How much a search engine wants to crawl a page, influenced by its popularity and freshness. | Gives priority to crawling pages with higher relevance and newness. |

Identifying Factors That Influence Crawl Budget

For any business seeking to improve its search engine standing, careful optimization of its website’s crawl budget is essential.

Many factors shape a domain’s crawlability, each affecting how often and how thoroughly search engines crawl it.

This section examines the most important of these factors, starting with website structure and how it shapes a crawler’s path through a site.

Content freshness is another factor, signaling to crawlers that a site is being actively updated.

Site errors, in turn, can reduce crawl efficiency.

Finally, sitemaps act as roadmaps that help search engines discover the pages a site owner wants crawled.

Together, these components largely determine a site’s visibility, and LinkGraph’s SEO services address each of them.

Website Structure and Crawl Budget

The architecture of a website significantly influences its crawl budget. LinkGraph’s SEO services recognize that a clean, hierarchical website structure helps crawlers move through a site efficiently, reaching key landing pages and sections without wasting resources on less significant areas of the domain.

A well-planned configuration of internal links, judicious use of noindex directives, and keeping important pages from being buried deep in the site hierarchy all ease the crawler’s journey, helping enterprise websites with thousands of pages make good use of their crawl budget and stay prominent in search results. (A noindex directive keeps a page out of the index, but crawlers must still fetch the page to see it.) For this reason, LinkGraph emphasizes an SEO-friendly site structure designed to get the most out of the crawl budget.
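One way to make “clean, hierarchical structure” measurable is click depth: the minimum number of internal links a crawler must follow from the homepage to reach a page. The Python sketch below computes it with a breadth-first search. The `link_graph` dictionary and its URLs are hypothetical (in practice the graph would come from a site crawl), and the depth threshold of 3 is an arbitrary illustration, not a rule from LinkGraph or Google.

```python
from collections import deque


def click_depths(link_graph: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage. Returns the minimum number of
    clicks needed to reach every page that is reachable through internal links."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:  # first time BFS reaches a page = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
link_graph = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/running", "/shoes/trail"],
    "/shoes/running": ["/shoes/running/model-x"],
    "/about": [],
}

depths = click_depths(link_graph, "/")
deep_pages = [url for url, depth in depths.items() if depth >= 3]  # illustrative threshold
print(depths)
print(deep_pages)
```

Pages missing from the result are not reachable through internal links at all (orphan pages), so crawlers can find them only through sitemaps or external links.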

Content Freshness and Its Impact on Crawling

Content freshness carries real weight in SEO and acts as a signal to search engine crawlers: current, regularly updated content shows that a website is committed to relevance and user engagement, which encourages crawlers like Googlebot to visit more often.

LinkGraph’s SEO services use this dynamic by making sure their clients’ content is not just plentiful but also up to date. Regularly refreshed content increases crawl demand and positions a site as a source of fresh information, sharpening its competitive edge in search rankings.

The Effect of Site Errors on Crawl Frequency

Site errors are a significant obstacle to maximizing crawl frequency, directly affecting a website’s SEO performance. Problems such as broken links, server errors, or redirect issues signal to search engines that a website may not offer optimal value to searchers, which can lead to a lower crawl rate and a diminished presence in search results.

With crawl budget in mind, LinkGraph’s SEO services scrutinize a website’s health through comprehensive site audits. Identifying and quickly fixing these errors keeps crawler access unimpeded and crawl frequency healthy, safeguarding the website’s visibility to its customer base.

| Site Error Type | SEO Implication | Remedial Action Through SEO Services |
|---|---|---|
| Broken links | Detrimental to site credibility; reduces crawl efficiency. | Immediate identification and correction of link pathways. |
| Server errors | Impairs user experience; hinders consistent crawling. | Optimization of server configurations and responses. |
| Redirect issues | Depletes crawl budget; confuses site structure. | Simplification of redirect chains; ensures direct access to content. |

How Sitemaps Can Optimize Crawl Rates

Sitemaps play an important role in making crawling efficient. A sitemap serves as a guide, laying out a clear list of a website’s important URLs, which helps ensure that crawlers do not overlook vital pages.

A sitemap lets LinkGraph’s SEO experts tell search engines directly which pages matter most for visibility, leading to a more efficient and focused crawling process.

| SEO Component | Role in Crawl Budget | Impact on Crawling |
|---|---|---|
| Sitemaps | Strategic mapping of online content for search engines. | Enhances the precision and efficiency of the crawling process. |
| Content Visibility | Signals which web pages are crucial for indexing. | Increases likelihood of high-value pages being indexed and ranked. |
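For reference, a minimal XML sitemap following the sitemaps.org protocol can be generated with Python’s standard library. This is a sketch that assumes a hypothetical list of example.com URLs with last-modified dates; a production sitemap would typically add an XML declaration and must stay within the protocol’s limits of 50,000 URLs and 50 MB uncompressed per file. The protocol also defines optional `changefreq` and `priority` tags, omitted here because Google has stated that it ignores them.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build a minimal sitemap.xml body from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # W3C date format (YYYY-MM-DD); only useful if it reflects real content changes.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and dates.
pages = [
    ("https://www.example.com/", date(2024, 1, 15)),
    ("https://www.example.com/shoes/running", date(2024, 1, 10)),
]
print(build_sitemap(pages))
```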

Analyzing the Impact of Site Errors on Crawl Budget

Because every detail matters in SEO, optimizing crawl budget is an essential strategy for improving a site’s search engine footprint.

Issues lurking within a site’s architecture can inadvertently consume this budget, diminishing the site’s potential to secure a favorable position in search results.

In the subsections to follow, the focus will turn to the various site errors that can erode a crawl budget, the importance of leveraging webmaster tools for diligent monitoring, and proactive measures to address these errors, ultimately safeguarding the precious resource of crawl budget.

Types of Site Errors That Can Deplete Crawl Budget

Excessive site errors can quickly use up a website’s crawl budget, leaving important pages uncrawled. Fixing problems such as looping redirect chains, and pages that are crawled only to be excluded from the index by a misapplied noindex directive, stops this waste and conserves crawl budget for content that improves a site’s search engine position.
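Redirect chains and loops can be found by following each redirect until it reaches a URL that does not redirect. The sketch below assumes a hypothetical `redirects` mapping of source paths to target paths (for example, exported from server configuration or a site crawl); the helper names and the 10-hop cap are illustrative choices, not part of any specific tool.

```python
def redirect_chain(redirects: dict[str, str], start: str, max_hops: int = 10):
    """Follow redirects from `start`. Returns (chain, is_loop), where chain lists
    every URL visited. Stops early after max_hops redirects."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        if target in chain:
            return chain + [target], True  # target already visited: redirect loop
        chain.append(target)
    return chain, False


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every non-looping redirect straight at its final destination."""
    flat = {}
    for source in redirects:
        chain, is_loop = redirect_chain(redirects, source)
        if not is_loop:
            flat[source] = chain[-1]
    return flat


# Hypothetical redirect map: one two-hop chain and one loop.
redirects = {
    "/old-shoes": "/shoes-2019",
    "/shoes-2019": "/shoes",
    "/a": "/b",
    "/b": "/a",
}

print(redirect_chain(redirects, "/old-shoes"))
print(redirect_chain(redirects, "/a"))
print(flatten(redirects))
```

Flattening replaces each multi-hop chain with a single redirect to the final URL; loops are left out because they have no valid destination and need to be fixed by hand.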

Duplicate content complicates matters further. When a site is reachable at both www and non-www addresses, for example, Googlebot may crawl both versions of each page and then has to choose between identical copies. By resolving these redundancies, LinkGraph helps site owners reclaim their crawl budget, focusing crawler attention on unique, value-adding content that enhances online visibility.
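The www/non-www problem comes down to several URLs mapping to one page. The sketch below assumes a hypothetical site whose preferred form is `https://www.example.com` and normalizes scheme and host variants so that duplicate URLs in a crawl export collapse into one. On a live site, the same preference is normally enforced with 301 redirects and canonical tags; this function only illustrates the mapping.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.example.com"  # hypothetical preferred host
HOST_VARIANTS = {"example.com", "www.example.com"}


def canonicalize(url: str) -> str:
    """Map http/https and www/non-www variants of a URL onto one preferred form.
    Fragments are dropped because crawlers do not treat them as separate pages."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host in HOST_VARIANTS:
        host = CANONICAL_HOST
    return urlunsplit(("https", host, parts.path or "/", parts.query, ""))


# Hypothetical URL variants found in a crawl export.
variants = [
    "http://example.com/shoes",
    "https://www.example.com/shoes",
    "https://example.com/shoes#reviews",
]
print({canonicalize(u) for u in variants})
```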

Monitoring Site Errors With Webmaster Tools

Meticulous monitoring through webmaster tools like Google Search Console can empower site owners to identify and rectify server errors, broken links, and redirect issues that hamper crawl budget optimization. These tools serve as a diagnostic interface, delivering insight into the crawl space, enabling detection of anomalies that could impede Googlebot’s access or consume valuable crawl budget with non-essential pages.

- Webmaster tools alert marketers to critical crawl errors impacting site health.

- They offer comprehensive crawl stats and reports, informing strategic SEO decisions.

- Site owners can utilize this data to prioritize fixes that conserve crawl budget.

Using these tools, LinkGraph’s SEO services build a clear picture of a site’s crawl efficiency. LinkGraph’s professionals use this data to streamline the SEO process and move a site’s content toward the top of search results, improving visibility and user engagement.

Addressing Errors to Preserve Crawl Budget

Addressing errors promptly and effectively is a cornerstone of preserving crawl budget and, with it, a website’s visibility. LinkGraph’s SEO services identify and correct the problems that squander crawl resources, so that search engine bots focus on valuable content rather than being sidetracked by errors in the site’s structure.

Each correction recovers crawl budget and also strengthens the integrity of a site’s structure, improving how search engines assess it. This careful remediation reflects LinkGraph’s commitment to increasing a website’s visibility and user engagement by optimizing its underlying SEO foundation.

Implementing Effective Crawl Budget Management

As the web keeps growing, careful crawl budget management becomes a deciding factor in whether a website stands out.

This aspect of SEO demands a proactive approach, starting with a thorough assessment of a site’s current crawl status.

By prioritizing key pages and guiding crawlers with tools like robots.txt, site owners can control much of how crawlers move through their sites.

LinkGraph’s crawl budget optimization strategies and practices help raise a site’s online prominence, so that essential content is not only discovered but also given appropriate weight by search engine algorithms.

Assessing Your Site’s Current Crawl Status

A robust crawl budget management strategy starts with an assessment of your site’s current crawl status. LinkGraph’s SEO services begin this process by analyzing server logs and crawl reports, establishing a baseline understanding of how search engine crawlers interact with your website.

This evaluation shows where crawl budget may be used inefficiently and highlights opportunities for refinement. With this data, businesses can make informed adjustments to their SEO strategies and improve their site’s search engine visibility:

| Assessment Metric | SEO Significance | LinkGraph’s Strategic Approach |
|---|---|---|
| Server Log Analysis | Reveals crawler access patterns and frequency. | Optimizes crawl rate and budget allocation. |
| Crawl Error Inventory | Identifies impediments in content indexing. | Addresses errors to maximize budget efficiency. |
| Index Coverage Report | Assesses the extent of indexed site content. | Guides backlinks and content updates to improve indexing. |
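A crawl error inventory like the one in the table above can begin as a tally of HTTP status codes. This sketch assumes a hypothetical list of (URL, status code) pairs, such as a site crawler’s export or parsed server logs, and groups them into the broad error types discussed earlier; the class names are illustrative. A single 301 after a URL change is normal, so the non-2xx share is a starting point for review rather than a measure of pure waste.

```python
from collections import Counter


def status_class(code: int) -> str:
    """Bucket an HTTP status code into a coarse class for the inventory."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"  # e.g. 404s from broken links
    if 500 <= code < 600:
        return "server_error"  # e.g. 503s during outages
    return "other"


def error_inventory(crawl: list[tuple[str, int]]) -> tuple[Counter, float]:
    """Count fetches per status class and the share of fetches that were not 2xx."""
    if not crawl:
        return Counter(), 0.0
    counts = Counter(status_class(code) for _, code in crawl)
    non_ok = sum(n for cls, n in counts.items() if cls != "ok")
    return counts, non_ok / len(crawl)


# Hypothetical (URL, status) pairs from a crawl or from server logs.
crawl = [
    ("/", 200),
    ("/shoes", 200),
    ("/old-shoes", 301),
    ("/missing-page", 404),
    ("/cart", 503),
]
counts, non_ok_share = error_inventory(crawl)
print(counts)
print(f"{non_ok_share:.0%} of fetches did not return 2xx")
```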

Prioritizing Important Pages for Search Crawlers

Effective crawl budget management places a premium on ensuring search crawlers can easily discover and index a website’s most important pages. By strategically directing crawlers to these priority pages, LinkGraph’s SEO services work to amplify the likelihood that these areas of a website will rank higher in search results, driving the potential for increased organic traffic.

LinkGraph’s seasoned team takes a meticulous approach to building an SEO strategy that raises the visibility of essential landing pages and cornerstone content. By concentrating on page hierarchy and internal link structure, they signal to search crawlers which content is most important, optimizing the website’s search footprint:

| SEO Focus Area | Goal | LinkGraph’s Strategic Method |
|---|---|---|
| Page Hierarchy | Streamline crawler navigation to key pages | Implementing clear, logical site architecture |
| Internal Link Structure | Emphasizing importance of select pages | Strategic use of link equity to boost page significance |

Using robots.txt Effectively to Guide Crawlers

LinkGraph uses robots.txt as a strategic navigation system for search engine crawlers. This file gives crawlers precise instructions, letting site managers exclude non-essential sections from crawling and reserve crawl budget for more critical content.

- Delineating crawl parameters with robots.txt ensures a targeted approach.

- Earmarking valuable crawl space for key sections enhances overall site visibility.

- Effectively utilizing robots.txt mitigates resource waste, securing efficient indexing.

Through careful calibration, LinkGraph makes robots.txt a cornerstone of crawl budget optimization. Its methodical process involves telling search bots exactly which paths they may and may not crawl, creating a more focused and effective exploration of a site’s relevant content.
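A short robots.txt illustrates the idea. The rules below are a hypothetical example for an online store (blocking internal search results and cart pages, which rarely need crawling), checked with Python’s built-in `urllib.robotparser`. Two caveats apply: Python’s parser uses the first matching rule, whereas Google uses the most specific (longest) matching rule, so the rules here are ordered so that both interpretations agree; and robots.txt controls crawling, not indexing, so a blocked URL can still appear in search results if other pages link to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of internal search and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/shoes/running", "/search?q=boots", "/cart/checkout"]:
    url = "https://www.example.com" + path
    # can_fetch() reports whether the given user agent may crawl the URL.
    print(path, parser.can_fetch("Googlebot", url))
```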

Implementing Crawl Budget Optimization Practices

Implementing crawl budget optimization practices is a key step in making an enterprise website easy for search engines to crawl. LinkGraph’s SEO services fine-tune how a site’s crawl budget is spent, directing crawler attention toward the most significant content and strengthening the site’s position in search indexes.

Refining crawl directives with LinkGraph’s expertise aligns a website’s content with the demands of modern SEO. But optimization goes beyond individual adjustments; it reshapes how crawlers move through the whole site so that vital content gets the visibility it deserves:

| Optimization Practice | Description |
|---|---|
| Crawl Directive Calibration | Refining crawler guidelines to prioritize essential content within the site. |
| Holistic Reshaping | Sculpting the site to ensure seamless crawler navigation and content discovery. |

Advanced Techniques to Maximize Crawl Budget Efficiency

Every aspect of a website’s construction and content strategy affects how prominently it can appear in search engine results.

As businesses and site owners compete for rankings, careful crawl budget management stands out as a key practice.

Advanced techniques such as improving server response times, managing duplicate content, and distributing link equity more effectively help optimize how search engines navigate a site and judge whether it deserves prominent placement.

Each tactic improves a website’s technical health and strengthens its long-term SEO performance.

Leveraging Server Response Times for Better Crawling

Fast server responses are fundamental to efficient crawling, because they let search engine crawlers access and index web pages quickly. By improving server configurations and promptly fixing connectivity issues, LinkGraph’s SEO services ensure that server errors do not obstruct Googlebot’s ability to traverse a site, safeguarding valuable crawl budget.

LinkGraph’s strategic focus on server performance is not just about averting bottlenecks; it enhances overall crawl efficiency. Fast-loading pages are more likely to be indexed quickly, reflecting positively on a site’s SEO health and helping it surface more prominently in search engine results, which can profoundly impact a site owner’s visibility and user engagement.

Managing Duplicate Content for Crawl Efficiency

Improving a site’s exposure in search results also means addressing duplicate content to improve crawl efficiency. By consolidating content and removing redundancies, LinkGraph’s SEO services ensure that each web page offers unique value, preventing crawlers from wasting crawl budget on multiple pages with similar content.
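Exact duplicates, such as the same product listing served under several URL parameters, can be found by hashing each page’s normalized text. This is a minimal sketch that assumes page text has already been extracted into a hypothetical `pages` dictionary. It only catches content that is identical after whitespace and case normalization; near-duplicates would need a similarity technique such as shingling or SimHash instead.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and lowercasing, so trivial
    formatting differences do not hide an exact duplicate."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized body text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text keyed by URL.
pages = {
    "/shoes?sort=price": "Running shoes\n  Lightweight trainers for road running.",
    "/shoes": "Running Shoes Lightweight trainers for road running.",
    "/about": "About our store.",
}
print(find_duplicates(pages))
```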

LinkGraph’s approach involves sophisticated analysis and application of canonical tags, which signal to search engines the preferred version of a page. This attention to detail prevents the diffusion of link equity across duplicate pages and directs crawler resources to the original, enhancing the site’s authority and visibility in search engine rankings.
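Auditing canonical tags starts with extracting them. The sketch below uses Python’s standard `html.parser` module to collect every `<link rel="canonical">` href on a page; the sample HTML is hypothetical. A page should normally declare exactly one canonical URL, so an empty list or a list with several entries is worth flagging in an audit.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attribute values may be None
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


# Hypothetical page served at a parameterized URL.
html = (
    "<html><head>"
    '<link rel="canonical" href="https://www.example.com/shoes" />'
    "</head><body>Running shoes</body></html>"
)
print(find_canonicals(html))
```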

Improving Link Equity Distribution to Enhance Crawling

Enhancing the distribution of link equity across a website’s pages can significantly boost crawl efficiency. LinkGraph’s SEO services meticulously analyze and adjust the internal linking structure, ensuring that the flow of link equity highlights the most valuable pages, thereby signaling their importance to search engine crawlers.

| SEO Task | Purpose | Impact on Crawling |
|---|---|---|
| Internal Linking Optimization | To direct link equity towards high-value pages | Enhances the prominence of key pages for crawlers, improving indexation |
| Application of Canonical Tags | Clarify the principal version of content to crawlers | Consolidates crawl focus, preventing waste on duplicate page crawls |

Through strategic internal link placement, LinkGraph bolsters the significance of cornerstone content, ensuring that these sections receive the attention required from both users and crawlers. This deliberate promotion of important pages elevates the website’s visibility, translating to competitive positioning in search engine rankings.
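A simple first check on internal link distribution is to count how many distinct pages link to each priority page. Inlink counts are only a rough proxy for link equity (they ignore the authority of the linking page), and the graph, priority list, and threshold of 2 below are hypothetical.

```python
from collections import Counter


def inlink_counts(link_graph: dict[str, list[str]]) -> Counter:
    """Count how many distinct other pages link to each URL
    (self-links ignored; repeated links from one page counted once)."""
    counts = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


# Hypothetical internal-link graph (page -> pages it links to).
link_graph = {
    "/": ["/shoes", "/blog/guide"],
    "/shoes": ["/shoes/running", "/"],
    "/blog/guide": ["/shoes/running", "/shoes"],
    "/shoes/running": ["/"],
}
priority_pages = ["/shoes/running", "/shoes/trail"]

counts = inlink_counts(link_graph)
under_linked = [url for url in priority_pages if counts[url] < 2]  # arbitrary threshold
print(under_linked)
```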

Monitoring and Measuring Crawl Budget Over Time

Keeping an ecommerce site prominent in search requires continually refining, not just establishing, its relationship with search engines.

That is why monitoring and measuring crawl budget over time is an integral part of a comprehensive SEO framework.

This ongoing work, which includes tracking crawls with log file analysis, setting optimization benchmarks, and adjusting strategies based on crawl insights, is vital for sustaining a site’s search performance and user reach.

LinkGraph’s SEO services provide actionable data in this area, helping to secure and enhance a site’s visibility and keeping SEO adjustments proactive rather than merely reactive.

Tracking Crawls With Log File Analysis

Log file analysis provides a lens through which the interactions between crawlers and a website become transparent. By sifting through server logs, SEO specialists at LinkGraph garner a granular view of the crawl behavior—capturing how often Googlebot visits, the specific pages it accesses, and the time it spends on each fetch:

| Crawl Insight | Value to SEO | LinkGraph’s Analytical Focus |
|---|---|---|
| Googlebot Visit Frequency | Indicates the rate of crawl activity and potential website engagement. | Frequency analysis to align with peak content updates. |
| Pages Accessed by Crawler | Reveals the content prioritization by search engines. | Content visibility assessment for indexation improvement. |
| Time Spent on Fetches | Reflects efficiency and potential hurdles in the crawl process. | Enhancements in server response times and page accessibility. |

Through systematic review and interpretation of these logs, LinkGraph tailors SEO strategies to optimize the crawl budget. This translates to more efficient indexing and a higher likelihood that updated content reaches customers expediently, bolstering the site’s search engine positioning and user experience.
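Log analysis of this kind can be sketched in a few lines of Python. The example assumes Apache/nginx “combined” log lines with the response time in seconds appended as a final field; standard combined logs do not record response time, so this is an assumption about the server’s log configuration, and the sample lines are hypothetical. It reports the three insights in the table: Googlebot visits per day, pages accessed, and average time per fetch. User-agent strings can be spoofed, so Google recommends verifying Googlebot with a reverse DNS lookup; this sketch only checks the user-agent text.

```python
import re
from collections import Counter, defaultdict

# Assumed format: combined log line + response time in seconds as the last field.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)" (?P<seconds>[\d.]+)'
)


def googlebot_summary(lines):
    """Return (hits per day, hits per path, average response seconds per path)
    for requests whose user agent claims to be Googlebot."""
    hits_per_day = Counter()
    hits_per_path = Counter()
    durations = defaultdict(list)
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        day = match["time"].split(":", 1)[0]  # "15/Jan/2024:10:12:01 +0000" -> "15/Jan/2024"
        hits_per_day[day] += 1
        hits_per_path[match["path"]] += 1
        durations[match["path"]].append(float(match["seconds"]))
    avg_seconds = {path: sum(ts) / len(ts) for path, ts in durations.items()}
    return hits_per_day, hits_per_path, avg_seconds


# Hypothetical log lines.
UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
LOG_LINES = [
    f'66.249.66.1 - - [15/Jan/2024:10:12:01 +0000] "GET /shoes/running HTTP/1.1" 200 5123 "-" "{UA}" 0.215',
    f'66.249.66.1 - - [15/Jan/2024:10:15:40 +0000] "GET /shoes/running HTTP/1.1" 200 5123 "-" "{UA}" 0.185',
    f'66.249.66.2 - - [16/Jan/2024:08:01:09 +0000] "GET /cart/checkout HTTP/1.1" 503 0 "-" "{UA}" 1.900',
    '203.0.113.7 - - [16/Jan/2024:08:02:00 +0000] "GET /shoes HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (Windows NT 10.0)" 0.120',
]

per_day, per_path, avg_seconds = googlebot_summary(LOG_LINES)
print(per_day)
print(per_path)
print(avg_seconds)
```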

Setting Benchmarks for Crawl Budget Optimization

Establishing benchmarks for crawl budget optimization equips site owners with clear targets for enhancing search engine engagement. With LinkGraph’s robust SEO services, benchmarks are not arbitrary figures but data-driven goals that are tailored to the unique characteristics of each client’s website, ensuring a strategic pathway to elevated search visibility.

LinkGraph’s professionals utilize an array of metrics—from indexed page ratios to crawl frequency—to create these benchmarks, ensuring that each adjustment leads directly to an optimized crawl budget. This measured approach serves as a barometer for the efficacy of ongoing SEO endeavors, providing a feasible framework for continuous improvement and elevated search engine prominence.
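Metrics such as the indexed page ratio and crawl frequency can be tracked against a baseline with simple arithmetic. This sketch assumes two hypothetical measurement periods with made-up counts: `submitted_urls` and `indexed_urls` would come from a sitemap and an index coverage report, and `googlebot_hits` from log analysis. The field names and the comparison function are illustrative, not a LinkGraph or Search Atlas API.

```python
from dataclasses import dataclass


@dataclass
class CrawlSnapshot:
    submitted_urls: int  # URLs listed in the sitemap
    indexed_urls: int    # of those, how many are reported as indexed
    googlebot_hits: int  # Googlebot requests in the period (from logs)
    days: int            # length of the measurement period

    @property
    def indexed_ratio(self) -> float:
        return self.indexed_urls / self.submitted_urls

    @property
    def hits_per_day(self) -> float:
        return self.googlebot_hits / self.days


def compare(baseline: CrawlSnapshot, current: CrawlSnapshot) -> dict[str, float]:
    """Percentage-point change in indexed ratio and percent change in daily crawl hits."""
    return {
        "indexed_ratio_change_pts": round((current.indexed_ratio - baseline.indexed_ratio) * 100, 1),
        "hits_per_day_change_pct": round((current.hits_per_day / baseline.hits_per_day - 1) * 100, 1),
    }


# Hypothetical 30-day periods before and after an optimization round.
baseline = CrawlSnapshot(submitted_urls=2000, indexed_urls=1400, googlebot_hits=9000, days=30)
current = CrawlSnapshot(submitted_urls=2000, indexed_urls=1640, googlebot_hits=10800, days=30)
print(compare(baseline, current))
```

A rising hit count is only good news if the extra fetches land on priority pages, so this comparison is best read alongside per-path log data.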

Adjusting Strategies Based on Crawl Budget Insights

Adapting SEO tactics in response to crawl budget insights is central to staying competitive in search. LinkGraph’s SEO services use analytical findings to evolve a site’s SEO practices, keeping the site easy to crawl and helping its content reach its full audience.

- Studying server log file trends to adjust content update cycles.

- Refining page prioritization based on crawl frequency data.

- Improving server responses and page load times to maximize crawl efficiency.

This data-driven recalibration is critical to sustaining a site’s visibility and relevance in response to ever-changing search engine algorithms. By continuously revisiting and revising strategies, LinkGraph safeguards a client’s investment in SEO, aligning it with the objective of achieving and preserving premium search engine ranking positions.

Conclusion

Maximizing your site’s visibility in search engines hinges on proficiently managing your crawl budget.

The crawl budget, meaning the number of pages that search engine crawlers such as Googlebot can and want to crawl on your site, is vital for ensuring that your most important content gets indexed and has a chance to rank well.

LinkGraph’s SEO services provide expertise in optimizing this budget by addressing crawl rate limits and crawl demand, correcting site errors, and implementing strategic structures such as sitemaps.

Through diligent monitoring and continuous adjustments based on server log insights, these SEO experts tailor strategies to prioritize key pages and rectify any errors that might be wasting crawl resources.

By doing so, they improve server response times, manage duplicate content, and distribute link equity more effectively, giving every important page on your site a chance to perform well in search results.

In essence, proper management of your crawl budget can significantly boost your website’s search engine presence, user reach, and overall digital success.