Crawl First SEO Guide

Mastering Crawl First SEO: Your Comprehensive Guide

Navigating the intricacies of Search Engine Optimization necessitates a profound understanding of what lies beneath the surface — the pivotal realm of crawl first SEO.

As search engine crawlers dissect the web’s complexities, ensuring that your website’s architecture lays a clear path for them is akin to rolling out a red carpet for VIP guests.

It’s about refining site structure, enhancing technical elements, and creating a seamless user experience that guides search bots effortlessly through your content.

Grappling with crawl efficiency is not just for the SEO professional; it’s a crucial endeavor for any website owner aiming to climb the ranks in the Google search results.

Keep reading to unlock the potential of your online presence by mastering the principles of crawl first SEO with LinkGraph’s comprehensive guide and expert services.

Key Takeaways

- Understanding Crawler Behavior Is Crucial for Enhancing a Website’s SEO Rankings

- Optimizing Site Architecture and Addressing Technical SEO Issues Are Key to Improving Crawlability

- LinkGraph’s SEO Services Include Meticulous Log Analysis to Tailor Strategies to Actual Search Crawler Behavior

- Advanced SEO Tactics Like Schema Markup and HTTPS Adoption Are Critical for Search Engine Visibility

- Staying Updated With Search Engine Algorithm Changes Is Necessary for Maintaining Optimal SEO Performance

Understanding Crawl First SEO Principles

In the intricate ecosystem of search engine optimization, the principles of Crawl First SEO serve as the bedrock for enhanced visibility and improved SEO rankings.

Before a page dances in the limelight of search results, it must be meticulously explored by search engine crawlers.

Through a nuanced comprehension of how crawling is the precursor to indexing and ranking, website owners can strategically sculpt their site architecture.

They will unravel the enigma behind crawler behavior—what invites them, what deters them, and how they interact with web pages.

Paramount to a site’s relationship with these digital explorers is the concept of crawl budget.

This measure dictates the expanse and frequency of crawlers’ visits, making it imperative for SEO professionals to optimize for efficient crawling, ensuring essential pages gain the attention they merit.

It is in mastering these fundamentals that the robust foundation for sustainable SEO success is laid.

Grasp Why Crawling Precedes Indexing and Ranking

Grasping the significance of crawling in SEO requires an understanding of the order in which search engines operate: crawling is the first step. Until search engine bots such as Googlebot have fetched a web page, it cannot enter the index that fuels search engine results. Therefore, ensuring that a site is accessible and inviting to these bots is a foundational task for any SEO professional.

After the initial crawl, search engines assess the content to decide whether to index it. It’s at this juncture that website content and site structure play pivotal roles. By presenting crawlers with a well-structured, content-rich site complete with XML sitemaps and clear navigation, a website stands to enhance its presence in search results, paving the way for greater user engagement and conversions.

| SEO Stage | Tasks | Impact |
|---|---|---|
| Crawl | Optimize site architecture, Update XML sitemaps, Ensure crawler access | Website accessibility for search engine bots |
| Index | Audit content quality, Leverage structured data, Refine meta tags | Content comprehended by search engines |
| Rank | Enhance user experience, Improve page load speed, Acquire quality backlinks | Visibility in search engine results |

Discover the Core Components of Crawler Behavior

Unveiling the intricacies of crawler behavior is akin to decoding the DNA of SEO success. Two core components, crawl depth and crawl rate, shape how search engine crawlers move through a website’s hierarchy and how often they return to capture updates. Crawl depth is the number of links a crawler must follow from the homepage to reach a page; crawl rate is how frequently the crawler requests pages from the site. Together they influence which of a site’s many URLs are discovered and indexed promptly.

LinkGraph’s innovative approach to SEO services emphasizes rendering content in a way that aligns with how search engine bots process pages. Because factors like site structure, including breadcrumb navigation and the configuration of robots.txt files, shape crawler behavior, LinkGraph ensures their clients’ websites communicate effectively with crawlers, thus maximizing the discovery of relevant content and enhancing the likelihood of achieving elevated search rankings.

Identify the Importance of Crawl Budget for SEO

In the realm of SEO, the optimization of crawl budget stands as a critical concern for website owners striving to improve their online visibility. A site’s crawl budget is the number of URLs a search engine crawler can and wants to crawl on that site within a given period. Recognizing and optimizing this budget is therefore essential for ensuring the most valuable pages are crawled and indexed and, consequently, have the chance to rank higher in search results.

For discerning entities, such as LinkGraph, advising clients on the nuances of crawl budget optimization translates to a strategic advantage. By investing in comprehensive log analysis and site audits, SEO services like those provided by LinkGraph improve the probability that the website’s most pertinent pages are frequented by search bots, weaving the intricate web of site visibility and search engine favorability.

Preparing Your Website for Efficient Crawling

Embarking on the journey of Crawl First SEO necessitates a prepared and accessible digital landscape that welcomes the scrutiny of search engine crawlers.

A crucial component for bolstering online presence, ensuring that every page is primed for discovery by search engine bots, hinges on the meticulous optimization of site architecture.

Comprehensively eliminating obstacles that impede crawler access, like crawl blocks and traps, is paramount in achieving a seamless experience for the digital emissaries tasked with deciphering a website’s content.

This section serves as a strategic playbook, guiding website owners through the meticulous process of creating a crawler-friendly environment conducive to maximum crawlability and SEO excellence.

Ensure Site Accessibility for Search Engine Crawlers

It is essential for website owners to champion the accessibility of their site to search engine crawlers. LinkGraph’s comprehensive SEO guide articulates that removing barriers, such as improperly configured robots.txt files or overly complex site structures, ensures that search bots can navigate and index a website with ease, laying the groundwork for improved SEO rankings.

LinkGraph enhances site accessibility by analyzing and refining critical elements like crawl depth and rendering capabilities. By presenting well-defined pathways for search engine crawlers, consisting of streamlined site architecture and effective link elements, their clients’ sites are poised to be comprehensively explored and favorably assessed.

Optimize Site Architecture for Maximum Crawlability

Optimizing site architecture is crucial for boosting crawlability and ensuring that search engine bots can traverse a website efficiently. LinkGraph’s SEO services focus on enhancing site structure to promote better crawl depth and indexing by search bots. A well-architected website not only supports a crawler’s journey but also impacts the user experience, directly influencing SEO outcomes.

Key elements such as navigation, internal link strategy, and the appropriate use of headers and meta tags play an integral role in making a website comprehensible for search engines. By crafting a clear hierarchy and employing breadcrumb navigation, LinkGraph ensures their clients’ sites are logically organized, fostering robust crawlability and SEO ranking potential:

| Element of Site Architecture | Role in SEO | Benefits |
|---|---|---|
| Clean Navigation | Guides search bots through site | Enhances crawl depth, helps indexation |
| Internal Linking | Connects content across site | Distributes PageRank, aids in ranking |
| Logical Hierarchy | Structures content by relevance | Improves user experience, supports SEO |
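To make the idea of crawl depth concrete, here is a minimal sketch that measures how many clicks each page sits from the homepage. It assumes the internal links have already been collected into a simple mapping; the `site_links` data and the function name `crawl_depths` are hypothetical and only illustrate the idea.

```python
from collections import deque

def crawl_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in a breadth-first search, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graph: page -> pages it links to.
site_links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/crawl-budget"],
    "/blog/crawl-budget": ["/services/seo"],
    "/orphan": ["/"],  # no page links here, so a crawl starting at "/" never reaches it
}

depths = crawl_depths(site_links, "/")
unreachable = sorted(set(site_links) - set(depths))
```

Pages with a large depth value, or pages that land in `unreachable`, are natural candidates for better internal linking or a place in the main navigation.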

Eliminate Crawl Blocks and Crawler Traps

Stepping into the realm of efficient search engine crawling necessitates the eradication of crawl blocks and crawler traps that can stymie a site’s SEO progress. LinkGraph stands at the forefront of this endeavor, facilitating the smooth access of search bots by identifying and resolving technical barriers such as broken links, infinite loops, and faulty redirects that hinder a crawler’s ability to navigate a site effectively.

A refined focus on crawler traps, such as poorly executed pagination or duplicate content issues, is a distinguishing feature of LinkGraph’s meticulous SEO services. The company’s attention to detail ensures that search engine bots are not ensnared by redundant or irrelevant pages, thereby conserving a site’s crawl budget for content with genuine potential to elevate its position in search rankings.
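Faulty redirects and infinite loops are among the easier traps to detect programmatically. The sketch below assumes the site’s redirects have been exported into a plain mapping from source URL to destination; the `redirects` data, the function name, and the `max_hops` threshold are all illustrative assumptions rather than values any search engine prescribes.

```python
def follow_redirects(redirects, url, max_hops=5):
    """Follow `url` through a redirect map and classify the outcome.

    Returns a (status, chain) pair where status is "ok", "loop",
    or "chain_too_long".
    """
    chain = [url]
    while url in redirects:
        url = redirects[url]
        if url in chain:  # we have been here before: a redirect loop
            return "loop", chain + [url]
        chain.append(url)
        if len(chain) - 1 > max_hops:  # number of hops taken so far
            return "chain_too_long", chain
    return "ok", chain

# Hypothetical redirect map: source path -> destination path.
redirects = {
    "/old": "/new",
    "/a": "/b",
    "/b": "/a",  # /a and /b redirect to each other
}

status_old = follow_redirects(redirects, "/old")
status_loop = follow_redirects(redirects, "/a")
```

Running a check like this over every redirected URL gives a short list of loops and long chains to repair before they consume crawl budget.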

Optimizing On-Page Elements for Crawl Optimization

Shifting focus to the granular level, fine-tuning a website’s on-page elements is a strategic maneuver to bolster crawl optimization.

An ensemble of precise configurations awaits the adept hands of SEO professionals who understand the imperative role this plays in guiding search engine crawlers.

Paramount in this endeavor are the strategic adjustments to robots.txt files which establish the directives for crawling, the judicious application of meta robots tags dictating page-level crawler actions, and the streamlining of URL structures to enhance navigability.

Undertaking these tasks, businesses arm their virtual domains with the refined clarity that search engine bots favor, setting the stage for improved organic search presence.

Fine-Tune Your Robots.txt File for Effective Directives

Harmonizing the directives in a robots.txt file is paramount for SEO professionals aiming to cultivate an environment conducive to search engine crawling. In this regard, LinkGraph’s SEO services ingeniously calibrate these crucial text files to communicate effectively with search bots, signifying which areas of a site are open for exploration and which are restricted, thus safeguarding valuable crawl budget for high-priority content.

At the heart of LinkGraph’s strategy lies an adept refinement of robots.txt protocols, ensuring that directives do not inadvertently disallow crawler access to critical resources needed for rendering. This careful balance maintained in the robots.txt file empowers search engine bots to encounter a website’s content in its most favorable light, significantly boosting the potential for improved indexing and heightened SEO rankings.
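One way to sanity-check a robots.txt file before deploying it is to test a handful of important URLs against it. The sketch below uses Python’s standard `urllib.robotparser`; the rules and the `example.com` URLs are made up for illustration. Note that Python’s parser applies the first matching rule in file order, whereas Google documents that it applies the most specific matching rule, so results can differ when `Allow` and `Disallow` rules overlap. This example avoids overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block cart and internal search pages,
# but keep the assets needed for rendering open to crawlers.
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /assets/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

urls_to_check = [
    "https://example.com/cart/checkout",
    "https://example.com/search?q=seo",
    "https://example.com/assets/site.css",
    "https://example.com/blog/crawl-budget",
]

# Map each URL to True (crawlable) or False (blocked) for Googlebot.
access = {url: parser.can_fetch("Googlebot", url) for url in urls_to_check}
```

A check like this makes it harder to ship a rule that accidentally blocks CSS, JavaScript, or a high-priority content section.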

Utilize Meta Robots Tags Wisely

Employing meta robots tags with strategic intent is a sophisticated SEO technique that speaks to an advanced understanding of search engine protocols. These tags communicate directly to search engine crawlers, providing page-level instructions on whether to index a URL and follow the links contained therein.

LinkGraph’s expert use of meta robots tags enables their clients to exert granular control over search engine crawling, safeguarding against the indexing of duplicate or non-essential pages that may dilute a site’s SEO potency:

| Meta Robots Tag | Directive | SEO Benefit |
|---|---|---|
| “index” | Allows URL indexing (the default) | Facilitates ranking in search results |
| “noindex” | Prevents URL indexing | Keeps duplicate or low-value pages out of search results |
| “follow” | Allows following links on the page (the default) | Assists in the discovery of related content |
| “nofollow” | Asks crawlers not to follow links on the page | Prevents link equity distribution to undesirable pages |
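When auditing a site, it can help to extract the meta robots directives from each page automatically. The following is a minimal sketch using Python’s standard `html.parser`; the class name, helper function, and sample HTML are hypothetical, and a real audit would also need to consider the `X-Robots-Tag` HTTP header, which this sketch ignores.

```python
from html.parser import HTMLParser

class MetaRobotsParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )

def robots_directives(html):
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives

# Hypothetical page that should stay out of the index.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
directives = robots_directives(page)
is_indexable = "noindex" not in directives
```

Comparing `is_indexable` against a list of pages that are supposed to rank is a quick way to catch a stray `noindex` left over from staging.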

Improve URL Structure to Aid Crawler Navigation

LinkGraph elevates the intricacies of URL structure, harnessing its profound effect on the navigation capabilities of search engine crawlers. A well-crafted URL serves as a beacon to search bots, guiding them through the digital terrain of a site and delineating the relevance and hierarchy of content in a manner that is clear and intuitive.

To this end, the seasoned professionals at LinkGraph meticulously streamline URL structures, shedding superfluous parameters and employing a consistent, keyword-informed taxonomy. This results not only in enhanced crawler navigation but also in bolstering SEO rankings by aligning web page addresses with user and search engine expectations alike.
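As a rough illustration of shedding superfluous parameters, the sketch below normalizes URLs with Python’s standard `urllib.parse`. The list of tracking parameters and the choice to drop trailing slashes are assumptions made for the example; each site has to decide its own canonical form.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed list of parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def canonicalize(url):
    """Return a normalized form of `url` with tracking parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    kept.sort()  # stable parameter order, so equivalent URLs compare equal
    path = parts.path.rstrip("/") or "/"  # assumed convention: no trailing slash
    # Lowercase scheme and host, drop the fragment (it is never sent to the server).
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), ""))

clean = canonicalize("https://Example.com/blog/?utm_source=newsletter&page=2#comments")
```

Grouping crawled URLs by their canonical form shows how many distinct addresses lead to the same content, which is a useful signal of parameter bloat.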

Leveraging Technical SEO for Enhanced Crawlability

In the complex frontier of Search Engine Optimization, leveraging technical SEO’s intricate tools is paramount for cultivating enhanced crawlability.

Pivotal strategies like deploying XML sitemaps, implementing schema markup, and adopting HTTPS protocols act as guiding lights for search engine crawlers, illuminating the path to comprehensive content understanding and secure communication.

This triad of technical adjustments forms a critical part of the strategic SEO approach at LinkGraph, ensuring that clients’ websites are intelligently aligned with the mechanisms of search bots.

Engaging in these practices elevates the accessibility and favorability of a website with the ultimate goal of securing a dominant stance in the competitive tableau of Google search results.

Deploy XML Sitemaps for Guided Crawler Movement

LinkGraph harnesses the power of XML sitemaps to create a clear and efficient roadmap for search engine crawlers. These sitemaps act as a direct pipeline to search engines, informing them of the pages that require their attention, which enhances the overall crawlability of a website.

In the competitive arena of SEO, XML sitemaps are LinkGraph’s strategic beacon, signaling search engine bots toward freshly updated content and the most vital sections of a client’s digital domain. This practice helps ensure that no critical page goes unnoticed, optimizing a site’s visibility and search engine relevancy.
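For readers who have not seen one, a sitemap is a small XML file listing URLs and, optionally, when each was last modified. The sketch below builds one with Python’s standard `xml.etree.ElementTree`, using the namespace defined by the sitemaps.org protocol. The page list and function name are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages; in practice these would come from the CMS or a crawl.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/crawl-budget", "2024-02-01"),
]
sitemap_xml = build_sitemap(pages)
```

The resulting file is typically served at the site root and referenced from robots.txt or submitted through Google Search Console.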

Implement Schema Markup for Crawler Comprehension

In the highly competitive arena of SEO, implementing schema markup is a critical move for enhancing a website’s comprehensibility to search engine crawlers. LinkGraph’s proficiency in technical SEO involves integrating schema markup to provide context to content, enabling crawlers to parse and classify the information on web pages with a remarkable degree of accuracy.

Such strategic deployment of schema markup by LinkGraph underscores the value of structured data, facilitating search engine bots’ understanding of the nuances behind website content, which can boost the clarity of search results and elevate a site’s visibility. This advanced level of comprehension lays a foundation for improved SEO rankings and user engagement.
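Schema markup is most often added as a JSON-LD block in the page’s HTML. As a minimal sketch, the function below generates such a block for an article using schema.org’s `Article` type; the function name and the sample values are hypothetical, and the set of properties shown is a small illustrative subset.

```python
import json

def article_jsonld(headline, author, date_published, url):
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the surrounding <script> tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"

# Hypothetical article details.
snippet = article_jsonld(
    headline="Mastering Crawl First SEO",
    author="Jane Doe",
    date_published="2024-02-01",
    url="https://example.com/blog/crawl-first-seo",
)
```

Generating the markup from the same data that renders the page keeps the structured data and the visible content from drifting apart.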

Adopt HTTPS to Establish Secure Crawler Communication

In the dynamic landscape of SEO, adopting HTTPS is critical for establishing a secure line of communication between search engine crawlers and websites. Recognizing this, LinkGraph advises its clients to transition from HTTP to HTTPS, ensuring that the data exchange is encrypted and protected from potential interceptors.

For website owners, this adoption not only signifies compliance with best practices in cybersecurity but can also contribute positively to SEO rankings, as Google treats HTTPS as a ranking signal. LinkGraph’s adept integration of HTTPS goes hand-in-hand with its commitment to bolstering both user and crawler trust, creating a fortified gateway through which valuable site content can be safely accessed and indexed.

Monitoring and Analyzing Crawler Behavior

In the ever-evolving domain of search engine optimization, diligent monitoring and analysis of crawler behavior stand as keystones in the architecture of a robust SEO strategy.

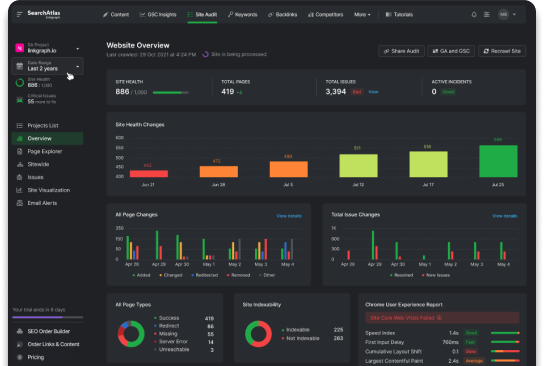

As digital marketers delve into the depths of Crawl First SEO, a tripartite approach to understanding search bots unfolds, necessitating the use of log file analysis to capture crawler activity, the exploration of Google Search Console for comprehensive crawl reports, and the astute application of adjustments derived from crawl data insights.

Each practice equips SEO professionals with the data-driven acumen required to refine and reinforce a website’s appeal to the algorithmic criteria of search engines, ensuring a website not only invites but captivates the attention of these digital archeologists.

Use Log File Analysis to Understand Crawler Activity

Engaging in log file analysis provides SEO professionals with invaluable insights into search engine crawler activity. LinkGraph leverages this analytical approach, uncovering patterns and frequencies in how crawlers navigate client websites, allowing for optimization that aligns with actual crawler behavior.

This critical analysis extends beyond superficial metrics, delving deep into the server logs to reveal the intricacies of crawler interactions. By illuminating the paths taken by search bots, LinkGraph executes strategic modifications to enhance the crawlability and indexation of key pages, thereby solidifying a website’s SEO foundation.
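To give a feel for what log file analysis involves, here is a minimal sketch that counts crawler requests per URL and per status code. It assumes the server writes the common "combined" access log format; the sample lines are invented for illustration. It also identifies the crawler by user-agent string alone, which can be spoofed, so a production audit would additionally verify the requesting IP addresses.

```python
import re
from collections import Counter

# Pattern for the combined log format (an assumption about the server's config).
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def crawler_hits(lines, bot_token="Googlebot"):
    """Count requests per path and per status code for one crawler."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and bot_token.lower() in match["agent"].lower():
            paths[match["path"]] += 1
            statuses[match["status"]] += 1
    return paths, statuses

# Invented sample log lines: two crawler requests and one browser request.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/crawl-budget HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:57:10 +0000] "GET /blog/crawl-budget HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

paths, statuses = crawler_hits(sample_log)
```

A high share of 404 or redirect responses in `statuses`, or important pages missing from `paths`, points to where crawl budget is being spent poorly.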

Analyze Crawl Reports From Google Search Console

Analyzing crawl reports from Google Search Console is a meticulous process akin to peering under the hood of search engine activities on one’s website. LinkGraph deploys a keen eye for detail to discern the subtleties within these reports, translating them into actionable SEO tasks that propel clients’ websites into the limelight of Google search results.

With Google Search Console’s profound insights, LinkGraph can identify which site areas receive ample attention from search bots and which might require optimization for better accessibility. It is this keen analysis that allows for strategic enhancements to site architecture and the resolution of content issues, crucial steps toward refined indexing and sterling SEO performance.

Make Adjustments Based on Crawling Data Insights

Acting on crawling data insights allows LinkGraph to implement calibrated modifications that holistically enhance a website’s SEO performance. By scrutinizing parameter trends such as crawl frequency and depth, SEO experts at LinkGraph can refine strategies that boost organic visibility and promote a dominant position within the SERP landscape.

This data-driven approach enables LinkGraph to tailor a website’s content and structure with precision, ensuring that each optimization effort is steered by concrete evidence of search engine activities. Such adjustments are meticulously crafted to align with the evolving algorithms of search engines, securing a competitive edge for clients in the digital marketplace.
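One simple, evidence-based adjustment is to compare the pages a site wants crawled against the pages crawlers actually request. The sketch below assumes two inputs are already available: the list of paths in the sitemap and a mapping of crawled paths to hit counts, for instance from log analysis. All names and numbers here are hypothetical.

```python
def crawl_gaps(sitemap_paths, crawled_hits):
    """Compare intended pages (sitemap) against observed crawler hits.

    Returns (never_crawled, off_sitemap, off_sitemap_share), where
    off_sitemap_share is the fraction of crawler hits spent on URLs
    that are not listed in the sitemap.
    """
    sitemap = set(sitemap_paths)
    crawled = set(crawled_hits)
    never_crawled = sorted(sitemap - crawled)
    # Most-requested off-sitemap URLs first; ties broken alphabetically.
    off_sitemap = sorted(crawled - sitemap, key=lambda p: (-crawled_hits[p], p))
    total = sum(crawled_hits.values())
    spent_off = sum(crawled_hits[p] for p in off_sitemap)
    return never_crawled, off_sitemap, (spent_off / total if total else 0.0)

# Hypothetical inputs.
sitemap_paths = ["/", "/services", "/blog/crawl-budget"]
crawled_hits = {"/": 10, "/blog/crawl-budget": 5, "/search?q=seo": 4, "/cart": 1}

never_crawled, off_sitemap, share = crawl_gaps(sitemap_paths, crawled_hits)
```

Here `/services` is in the sitemap but was never requested, while a quarter of crawler hits went to search and cart URLs, which suggests better internal links to the former and crawl restrictions for the latter.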

Advanced Strategies for Mastering Crawl First SEO

As the digital landscape continues to evolve at a rapid pace, mastering Crawl First SEO requires a sophisticated grasp of the latest advancements that impact crawling efficiency.

SEO professionals and website owners must delve deeper into the nuances of how JavaScript affects search engine crawling, confront the intricacies of crawl issues prevalent in complex web applications, and maintain a vigilant eye on search engine algorithm changes that directly influence crawling practices.

This advanced level of savvy ensures that web presences are not only discoverable but also primed to excel in the competitive realm of search engine rankings.

Explore the Impact of JavaScript on Crawling Efficiency

In the realm of Crawl First SEO, the role of JavaScript emerges as a critical factor influencing the efficiency with which search engine crawlers process and index a website’s content. Search engines have evolved to execute JavaScript to some extent, yet heavy reliance on scripts can lead to delayed indexing or inaccuracies in understanding a page’s content, posing challenges for SEO professionals aiming to ensure content is crawlable and indexable from the outset.

LinkGraph’s expertise in SEO services extends to optimizing JavaScript-heavy sites by prioritizing critical content and improving crawling efficiency. Their team mitigates potential crawl issues by streamlining and deferring non-essential scripts, which eases the rendering process for search engine crawlers and helps ensure that the full spectrum of a website’s content is readily accessible and primed for optimum search engine ranking performance.

Tackle Complex Crawl Issues in Web Applications

Addressing complex crawl issues within web applications is an endeavor that demands advanced expertise and a precise understanding of the digital ecosystem. Web applications, with their dynamic content and intricate user interactions, pose unique challenges that can distort the perspectives of search engine crawlers and obscure the visibility of vital website elements.

Sophisticated strategies are essential for navigating the maze-like structures that modern web applications often present. LinkGraph leverages its expertise to untangle client-side scripting and heavy AJAX usage, ensuring that these applications remain as crawl-friendly as their static counterparts:

- Diagnose and remediate crawl inefficiencies stemming from complex JavaScript frameworks.

- Optimize event-driven interactions that may hinder search bots’ ability to capture content updates.

- Configure server-side rendering or hybrid rendering solutions to enhance content visibility for crawlers.

Identifying effective solutions to these technical hurdles underscores LinkGraph’s commitment to comprehensive SEO that resonates with the most sophisticated search engine algorithms, thereby securing a strategic advantage in the realm of search engine rankings.
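A quick way to spot content that depends on client-side rendering is to check whether key phrases appear in the raw HTML the server returns, before any script runs. The sketch below does this with Python’s standard `html.parser`, ignoring anything inside `<script>` and `<style>` tags. The class name, helper function, and sample page are hypothetical, and the check is only a rough proxy for what a crawler sees before rendering.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collect text that is present in the HTML outside <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())

def missing_from_static_html(html, required_phrases):
    """Return the phrases that do not appear in the unrendered HTML text."""
    parser = VisibleText()
    parser.feed(html)
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in required_phrases if phrase.lower() not in text]

# Hypothetical page: the heading is server-rendered, the pricing table is injected by script.
raw_html = (
    '<html><body><h1>Crawl Budget Guide</h1><div id="app"></div>'
    '<script>render("Pricing table")</script></body></html>'
)
missing = missing_from_static_html(raw_html, ["Crawl Budget Guide", "Pricing table"])
```

Phrases that end up in `missing` exist only after JavaScript executes, making them candidates for server-side or hybrid rendering.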

Stay Updated With Algorithm Changes Affecting Crawling

Staying abreast of algorithm changes is a crucial aspect of SEO that savvy website owners and SEO professionals cannot afford to overlook. As search engine algorithms are continually refined to deliver more accurate results, these changes can significantly affect crawling priorities and techniques.

Digital marketers must remain vigilant to anticipate and adapt to these shifting standards. LinkGraph, in its position as a pioneer in the field, ensures clients are armed with the most current SEO knowledge, enabling them to maintain alignment with the often unforeseen shifts in search engine criteria by:

- Comprehensively analyzing algorithm update announcements and known impacts.

- Assessing the effect of such changes on a website’s current crawl status and SEO ranking.

- Iterating SEO strategies to conform to new search engine guidelines for optimal crawler interaction.

Conclusion

Mastering “Crawl First SEO” is essential for ensuring that web pages are not only discoverable by search engine crawlers but also optimized to achieve higher rankings.

By prioritizing crawl accessibility and efficiency, we lay a solid foundation for SEO success.

Understanding and streamlining crawler behavior, optimizing site architecture, and refining on-page elements are crucial steps towards enhancing a site’s visibility.

Employing technical SEO tools like XML sitemaps, schema markup, and secure protocols further guides and aids crawlers in understanding and indexing web content effectively.

Additionally, by regularly monitoring and adapting to crawler activity insights, as well as staying updated with algorithm changes, we ensure that a website remains perfectly poised to captivate search bots and excel in the competitive landscape of search rankings.