Demystifying Robots Meta Directives in SEO


Understanding Robots Meta Directives for Better SEO Performance

Navigating the labyrinthine realm of search engine optimization requires a deep understanding of robots meta tags, a critical yet often overlooked component that speaks directly to the web’s tireless sentinels: search engine robots.

These meta directives, embedded within the HTML code of a page, tell crawlers whether to index content, whether to follow links, and how to present page or snippet previews in search results.

As such, they serve as the gatekeepers, shaping the visibility and influence of a website’s pages in the SERP landscape.

Masterfully deploying these instructions can significantly enhance a site’s SEO performance, weaving through the intricacies of search engine algorithms with finesse.

Keep reading to unlock the strategic potential of robots meta directives and refine your site’s SEO prowess.

Key Takeaways

- Robots Meta Tags Provide Essential Commands to Search Engine Crawlers, Optimizing a Site’s Visibility and Crawl Efficiency

- Correct Implementation of ‘Noindex’ and ‘Nofollow’ Directives by LinkGraph Can Significantly Impact a Website’s Search Engine Ranking and User Traffic

- The ‘X-Robots-Tag’ HTTP Header Extends Control Over Indexing to Various File Types, Offering a Versatile Tool in Advanced SEO Strategy

- Misuse of Robots Meta Directives Can Lead to Decreased Site Performance, Making Professional SEO Services Like LinkGraph Vital for Proper Configuration

- Ongoing Monitoring and Adaptation of Robots Meta Tags Usage Are Crucial to Stay Competitive as Search Engine Algorithms Evolve

Grasping the Basics of Robots Meta Tags

In the ever-evolving terrain of Search Engine Optimization, Robots Meta Tags emerge as silent yet formidable conductors of search engine traffic.

Serving as directives to Search Engine Robots, these HTML meta elements play a pivotal role in guiding the crawler’s behavior, determining what content should be indexed or passed over.

Understanding how Robots Meta Tags directly impact crawling and indexing processes is essential for any SEO strategy.

By fine-tuning these directives, professionals utilizing LinkGraph’s SEO Services can exert considerable influence over their web page’s visibility and, ultimately, their digital success.

Defining Robots Meta Tags and Their Role in SEO

At the heart of a webpage’s dialogue with search engine robots lie the Robots Meta Tags, a powerful HTML code component that issues explicit commands to the visiting bots. These meta tags contain directives such as “nofollow” or “noindex,” informing search engines which pages or links should be overlooked during the indexing process or how links on a page should be treated in relation to passing on link equity.

The strategic implementation of these tags is a cornerstone of effective on-page SEO services, with LinkGraph’s expertise ensuring clients maximize their web content’s potential. By appropriately using Robots Meta Tags, businesses can maintain control over the search result representation of their content, influencing both visibility and relevance:

| Robots Meta Tag | Description | Impact on SEO |
|---|---|---|
| noindex | Prevents the page from being included in search engine indices | Directs search engines to omit the page from SERPs; the page must remain crawlable so that the directive can be read |
| nofollow | Advises search engines not to follow links on the page | Helps webmasters manage the flow of link equity across the site |
| noarchive | Signals that a cached version of the page should not be stored | Maintains the exclusivity and freshness of the webpage content |
| nosnippet | Instructs search engines not to show a text snippet or video preview in the search results | Provides control over the content preview in search results, enhancing user intrigue or protecting proprietary information |
| noimageindex | Requests that images on the page are not indexed | Ensures images are not displayed in image search results, crucial for content exclusivity |
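To make the table concrete, the following is a minimal sketch of how such a tag might be generated. The function name `robots_meta_tag` and the `KNOWN_DIRECTIVES` set are illustrative assumptions, not part of any library; the set simply lists the directives covered in this article.

```python
from html import escape

# Directives discussed in this article (restrictive and permissive forms).
KNOWN_DIRECTIVES = {
    "index", "follow", "noindex", "nofollow",
    "noarchive", "nosnippet", "noimageindex",
}


def robots_meta_tag(directives, bot="robots"):
    """Return a <meta> element carrying the given robots directives.

    `bot` becomes the name attribute: "robots" addresses all crawlers,
    while a crawler token such as "googlebot" addresses a single one.
    """
    cleaned = [d.strip().lower() for d in directives]
    unknown = [d for d in cleaned if d not in KNOWN_DIRECTIVES]
    if unknown:
        raise ValueError(f"unrecognized directive(s): {', '.join(unknown)}")
    content = ", ".join(cleaned)
    return f'<meta name="{escape(bot)}" content="{escape(content)}">'


print(robots_meta_tag(["noindex", "nofollow"]))
# prints: <meta name="robots" content="noindex, nofollow">
```

The resulting element belongs in the head section of the page it should govern.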

How Robots Meta Tags Communicate With Search Engines

LinkGraph’s SEO services underscore the importance of clear communication between robots meta tags and search engine algorithms. By placing robots meta tags in the head section of an HTML page, LinkGraph ensures that directives like ‘noindex’ or ‘nofollow’ are unmistakably recognized by Googlebot and other search engine crawlers, shaping the path they carve through a website’s landscape.

With the precision of SearchAtlas SEO software, these meta directives are meticulously crafted to serve as unambiguous signals, prompting search engines to comply with a site’s specific indexing preferences. This precise dialogue facilitates the effective filtering of web page content, allowing only the chosen segments to appear in SERPs and influencing a site’s traffic potential and user engagement.
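As a rough illustration of what a crawler reads, the sketch below extracts robots directives from a page using Python’s standard `html.parser` module. The class name `RobotsMetaParser`, the helper `read_robots_meta`, and the sample page are assumptions made for this example; real crawlers apply their own parsing rules.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect robots directives from <meta> tags, keyed by crawler name."""

    def __init__(self, bot_names=("robots", "googlebot")):
        super().__init__()
        self.bot_names = {name.lower() for name in bot_names}
        self.directives = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key.lower(): (value or "") for key, value in attrs}
        name = attr.get("name", "").strip().lower()
        if name in self.bot_names:
            values = {
                d.strip().lower()
                for d in attr.get("content", "").split(",")
                if d.strip()
            }
            self.directives.setdefault(name, set()).update(values)


def read_robots_meta(html_text):
    """Return a dict such as {"robots": {"noindex", "follow"}}."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    return parser.directives


# Hypothetical page used only for demonstration.
page = (
    '<html><head><meta name="robots" content="noindex, follow">'
    "</head><body></body></html>"
)
for bot, found in read_robots_meta(page).items():
    print(bot, sorted(found))
```

Keying the result by crawler name keeps a general `robots` tag separate from a crawler-specific one such as `googlebot`, since a page may carry both.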

Understanding the Impact of Robots Meta Tags on Crawling

Robots Meta Tags represent a critical tool in an SEO professional’s arsenal, facilitating the management of how search engines crawl and index web pages. Through LinkGraph’s meticulous on-page SEO services, the proper application of these tags keeps non-essential pages out of the index and, through ‘nofollow’, limits the links that crawlers pursue from them, so that attention is concentrated on more strategic content. Because a crawler must fetch a page before it can read the page’s meta tags, these directives govern indexing and link handling rather than blocking the initial crawl.

In the context of search engine functionality, Robots Meta Tags shape the crawl journey, effectively communicating which sections of a site warrant attention and which should be excluded. The incorporation of LinkGraph’s SEO expertise ensures that the noindex directive and other commands are executed with precision, supporting a website’s aim to enhance its online presence and performance in the SERP landscape.

Embarking on an exploration of Robots Meta Directives offers webmasters a suite of command options, each uniquely tailored to drive a website’s SEO performance.

Identifying which directives align with specific business objectives gives rise to an intricate ballet of interaction between site content and search engine algorithms.

Mastering the nuances of various Robots Meta Directives, analyzing their practical applications, and expertly selecting the appropriate command for every content piece craft an orchestrated strategy to elevate a site’s search engine standing.

Identifying the Various Robots Meta Directives

As professionals in the digital landscape, it’s crucial to discern the varieties of Robots Meta Directives available. Each tag, from ‘noindex’ to ‘nofollow’, serves a distinct purpose in communicating with search engine crawlers, signifying pivotal action points on a website’s pages:

| Directive | Function | SEO Benefit |
|---|---|---|
| index | Implicitly or explicitly allows page indexing. | Facilitates a webpage’s inclusion in search results, bolstering visibility. |
| follow | Permits search engines to follow links on a page. | Enables the valuation and transfer of link equity throughout the site. |
| noindex | Prevents a page from being included in the search index. | Keeps non-targeted pages out of SERPs so that indexed content stays focused. |
| nofollow | Directs that links on the page should not influence the ranking algorithm. | Provides control over the flow of PageRank and the indexing of linked content. |
| noarchive | Requests not to store cached copies of a page. | Guards the latest version of content, ensuring users see the most current information. |
| nosnippet | Instructs not to show a snippet or video preview in search results. | Provides the power to safeguard content snippets, offering a tailored user experience. |
| noimageindex | Avoids image indexing on a page. | Keeps image content exclusive to the site, absent from broader image search results. |

Understanding the implications of each directive ensures that digital marketers can shape their site’s interaction with search engines effectively. Through the expert guidance of LinkGraph’s SEO services, clients can deploy these directives strategically, enhancing the nuanced control over how their web content is crawled and indexed.

Practical Uses for Each Type of Directive

Each Robots Meta Directive embodies a specific purpose, streamlining how a website communicates with search engines. The ‘noindex’ command, for instance, is vital when launching a new section of a site that is not yet ready for public viewing, ensuring these pages remain unseen in search engine results until fully developed and refined.

Similarly, employing the ‘nofollow’ directive proves beneficial for pages with links to external sites that the host site does not intend to endorse or pass link equity to, such as affiliate links or paid advertisements. LinkGraph’s seasoned expertise enables clients to apply these directives with nuance and precision, enhancing their site’s SEO framework and overall digital strategy.

Choosing the Right Directive for Your Content

Selecting the proper Robots Meta Directive for content is an art, balancing the communication between website intentions and search engine protocols. It’s paramount that webmasters consider the aim of each webpage: whether the goal is to maximize visibility or to restrict access, the choice of directive should align with the page’s purpose.

LinkGraph’s white-label SEO services excel in determining the most appropriate Robots Meta Directive that complements a website’s SEO content strategy. Recognizing the balance between visibility and link equity flow, the team at LinkGraph can amplify a website’s appeal to search engine algorithms:

| Content Type | Recommended Directive | Strategic Intent |
|---|---|---|
| Product Page | index, follow | Maximize visibility and indexing of the page to drive conversion. |
| FAQ Page | index, follow | Ensure helpful content is searchable to assist and retain users. |
| Temporary Promotional Page | noindex, follow | Control indexing while allowing the page’s outbound links to provide value. |
| Internal Login Page | noindex, nofollow | Restrict both visibility and the association of non-public URLs with the site’s link profile. |

Through the provisions of LinkGraph’s on-page SEO services, businesses can implement the ideal Robots Meta Tag to enhance discoverability in SERPs or to protect specific aspects of a site from search engine scrutiny.
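The recommendations in the table can be expressed as a simple lookup, sketched below. The dictionary keys (`product`, `faq`, and so on) and the function name are hypothetical labels chosen for this example; a real site would map its own templates or URL patterns.

```python
# Mirrors the content-type table above; the keys are hypothetical labels.
RECOMMENDED_DIRECTIVES = {
    "product": ("index", "follow"),
    "faq": ("index", "follow"),
    "temporary_promo": ("noindex", "follow"),
    "internal_login": ("noindex", "nofollow"),
}


def recommended_robots_content(content_type):
    """Return the content attribute value suggested for a content type."""
    if content_type not in RECOMMENDED_DIRECTIVES:
        # Refuse to guess: an unknown page type needs a deliberate decision.
        raise ValueError(f"no recommendation recorded for {content_type!r}")
    return ", ".join(RECOMMENDED_DIRECTIVES[content_type])


print(recommended_robots_content("temporary_promo"))
# prints: noindex, follow
```

Raising an error for unknown types, rather than falling back to a default, is a deliberate choice here: a silently applied ‘noindex’ or ‘index’ is exactly the kind of misconfiguration the article warns about.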

Mastering the Use of ‘Noindex’ and ‘Nofollow’ Directives

In the intricate dance of search engine optimization, webmasters wield ‘Noindex’ and ‘Nofollow’ directives as strategic maneuvers to control and curate their digital footprint.

These robots meta directives, expertly executed through LinkGraph’s SEO Services, can either beckon search engine crawlers to valuable content or bar them from areas not meant for public exposure.

Gaining a deep understanding of when and why to utilize ‘Noindex’, and of the nuanced functions of the ‘Nofollow’ directive, is essential to navigating the treacherous waters of SEO.

Equally critical is being aware of the potential consequences that can arise from the misuse of these powerful directives.

The judicious application or misapplication of these tags stands to significantly influence a website’s SEO performance.

When and Why to Use ‘Noindex’ in Your Meta Tags

The ‘Noindex’ directive comes into play when website managers wish to prevent specific pages from appearing in the search engine results pages (SERPs). Such pages might include those under development, confidential information, or duplicate content that could otherwise dilute a site’s SEO strength. Because ‘Noindex’ only affects search listings, genuinely confidential pages also need access controls such as authentication.

LinkGraph’s SEO services counsel the strategic application of ‘Noindex’ when a website aims to focus the search engine’s attention on content that truly matters, preserving the integrity of the user’s search experience and ensuring that only the most relevant and polished content surfaces in a user’s query.

Understanding the ‘Nofollow’ Directive’s Functions

Grasping the ‘Nofollow’ directive’s functions necessitates an understanding of its relationship with search engine algorithms and the role it plays in shaping the backlink profile of a website. As a robots meta directive, ‘Nofollow’ applies to every link on the page; as a rel attribute on an individual link, it applies to that link alone. In both forms it advises search engines not to treat the link as an endorsement, so that it is not meant to contribute to the target’s search engine rankings or draw on the linking page’s allocation of PageRank.

The tactical use of ‘Nofollow’ proves crucial when linking to external sites that a webmaster does not intend to endorse. This includes user-generated content such as comments and forums, as well as sponsored links and advertisements:

| Link Context | ‘Nofollow’ Use Case | Strategic Impact |
|---|---|---|
| User Comments | Prevents passing authority to user-submitted links | Protects site integrity and prevents manipulation of search rankings |
| Sponsored Links | Manages link equity flow to paid placements | Ensures compliance with search engine webmaster guidelines |
| Affiliate Links | Separates promotional links from organic endorsements | Maintains SEO performance by directing link value purposefully |

LinkGraph’s white-label SEO services underscore the import of the ‘Nofollow’ directive as an integral part of an SEO content strategy. This directive responsibly directs crawler traffic and safeguards the quality of a website’s link environment, an advantageous tactic in a comprehensive SEO approach.
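At the level of an individual link, the use cases in the table might be applied as in the sketch below. The context labels, the `render_link` helper, and the example URL are assumptions for illustration; the only markup relied on is the standard `rel="nofollow"` link attribute.

```python
from html import escape

# Link contexts from the table above that should carry rel="nofollow".
# "editorial" is a hypothetical label for links the site does endorse.
NOFOLLOW_CONTEXTS = {"user_comment", "sponsored", "affiliate"}
KNOWN_CONTEXTS = NOFOLLOW_CONTEXTS | {"editorial"}


def render_link(href, text, context):
    """Render an anchor element, adding rel="nofollow" where the context calls for it."""
    if context not in KNOWN_CONTEXTS:
        raise ValueError(f"unknown link context: {context!r}")
    rel_attr = ' rel="nofollow"' if context in NOFOLLOW_CONTEXTS else ""
    return f'<a href="{escape(href)}"{rel_attr}>{escape(text)}</a>'


print(render_link("https://example.com/offer", "Partner offer", "affiliate"))
# prints: <a href="https://example.com/offer" rel="nofollow">Partner offer</a>
```

Centralizing the decision in one helper makes it harder for an internal, editorial link to pick up ‘nofollow’ by accident.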

Consequences of Misusing ‘Noindex’ and ‘Nofollow’

Misguided implementation of ‘Noindex’ and ‘Nofollow’ directives can precipitate unintended setbacks in a website’s search visibility. A ‘Noindex’ directive deployed on crucial content, even inadvertently, keeps that content out of search results, cutting off valuable traffic and potential conversions to the detriment of an online entity.

Erroneous use of ‘Nofollow’ on internal links may disrupt the natural flow of link equity within a site, potentially stonewalling the SEO benefits that come from a cohesive internal linking structure. LinkGraph’s professional acumen is pivotal in ensuring that these directives enhance rather than compromise a site’s SEO standing by avoiding such costly misapplications.

Harnessing the Power of the ‘X-Robots-Tag’ HTTP Header

Beyond the familiar territory of HTML-based robots meta tags lies a more versatile, yet less explored, sphere of search engine directives: the ‘X-Robots-Tag’ HTTP header.

This powerful tool extends the operational purview of SEO professionals, offering nuanced control over how search engines interpret and handle content beyond what traditional HTML meta tags can accomplish.

Understanding the differences between meta tags and the ‘X-Robots-Tag’, configuring the header for advanced SEO control, and recognizing its more sophisticated uses place digital architects from LinkGraph at the forefront of SEO innovation, adeptly navigating the complexities of search engine directives for superior SEO performance.

Differences Between Meta Tags and the X-Robots-Tag

In the labyrinthine world of search engine directives, Robots Meta Tags and the ‘X-Robots-Tag’ HTTP header occupy distinct niches, despite their shared purpose of guiding search engine behavior. While Robots Meta Tags are deployed within the HTML code of individual pages to influence indexing decisions, the ‘X-Robots-Tag’ is a more flexible, HTTP header-based directive that can apply indexation rules to any file type, not confined to HTML documents alone.

This distinction offers a broader spectrum of control to SEO professionals, enabling them to extend their directives to PDFs, images, or other non-HTML files through the ‘X-Robots-Tag’. The ‘X-Robots-Tag’ also allows for the combining of multiple directives for a more comprehensive and refined approach to controlling how content is indexed:

- Robots Meta Tags are embedded directly into the HTML of a page, affecting how search engines index that specific page.

- The ‘X-Robots-Tag’ can be applied through HTTP headers to influence the indexing of a variety of file types, offering a more universal solution.

- Multiple directives can be conveniently stacked within an ‘X-Robots-Tag’, simplifying the process of passing instructions to search engine crawlers.

Configuring X-Robots-Tag for Advanced SEO Control

LinkGraph’s approach to managing the multifaceted aspects of search indexing through the ‘X-Robots-Tag’ involves careful calibration. This tag, when configured in the HTTP header, particularly shines by governing files that elude the grasp of HTML meta tags, equipping SEO strategists with a robust tool to fine-tune robots’ access to various file types.

Such control is indispensable, especially when dealing with rich media content or specific documents that demand unique indexing rules. LinkGraph meticulously leverages this advanced level of SEO control, ensuring that the X-Robots-Tag is set up to reflect the nuanced requirements of their clients’ digital assets, thus improving the precision of search engine directives.
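One way to attach the header is in the application layer. The sketch below wraps a WSGI application so that responses for certain file types carry an ‘X-Robots-Tag’. The extension-to-directive policy, the function names, and the wrapper itself are assumptions for illustration; many sites set this header in their web server configuration instead.

```python
import os

# Hypothetical policy: header value to send for each file extension.
HEADER_BY_EXTENSION = {
    ".pdf": "noindex, nofollow",
    ".jpg": "noindex",
}


def x_robots_value(path):
    """Return the X-Robots-Tag value for a request path, or None."""
    extension = os.path.splitext(path)[1].lower()
    return HEADER_BY_EXTENSION.get(extension)


def add_x_robots_tag(app):
    """Wrap a WSGI app so that matching responses carry X-Robots-Tag."""

    def wrapped(environ, start_response):
        value = x_robots_value(environ.get("PATH_INFO", ""))

        def patched_start_response(status, headers, exc_info=None):
            if value is not None:
                # Copy the list so the wrapped app's own headers are untouched.
                headers = list(headers) + [("X-Robots-Tag", value)]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


print(x_robots_value("/reports/annual-summary.pdf"))
# prints: noindex, nofollow
```

Because the directive travels in the HTTP response rather than in markup, the same mechanism covers PDFs, images, and any other file that has no head section to hold a meta tag.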

Advanced Use Cases for the X-Robots-Tag Directive

Key to outmaneuvering the complexities of search engine directives, the ‘X-Robots-Tag’ HTTP header thrives in scenarios where precision is paramount. Organizations can curate a digital footprint that is selectively transparent to search engines, applying the X-Robots-Tag to noindex sensitive financial reports or to ensure that promotional materials are promptly withdrawn from search visibility once a campaign concludes.

LinkGraph incorporates the ‘X-Robots-Tag’ within its multifaceted SEO services to deliver refined control over how search engines interact with various types of content, from multimedia files to dynamically generated pages. The directive is instrumental in orchestrating a webpage’s search presence, allowing nuanced signals to be sent across an array of digital assets:

- Applying noindex to financial documents to restrict their search visibility ensures confidentiality and compliance.

- Dynamically generated pages receive indexing rules on-the-fly, aligning with real-time content strategies and user engagement goals.

- Multimedia elements, such as proprietary images and videos, are shielded from search engine indexing upon request, securing exclusive site content.
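The first two scenarios above can be sketched as a small rule table evaluated at request time. The path patterns, the campaign end date, and the function name `x_robots_for` are hypothetical; the sketch simply starts returning ‘noindex’ for promotional paths once a configured date has passed.

```python
from datetime import date
from fnmatch import fnmatchcase

# Hypothetical rules: (path pattern, directives, date from which the rule applies).
# A date of None means the rule always applies.
RULES = [
    ("/investors/reports/*", "noindex", None),
    ("/promo/spring-sale/*", "noindex", date(2025, 6, 1)),
]


def x_robots_for(path, today):
    """Return the X-Robots-Tag value for a path on a given day, or None."""
    for pattern, directives, active_from in RULES:
        if fnmatchcase(path, pattern) and (active_from is None or today >= active_from):
            return directives
    return None


# While the campaign runs, the promotional page carries no restriction.
print(x_robots_for("/promo/spring-sale/landing.html", date(2025, 5, 15)))
# prints: None

# After the campaign ends, the same page is served with noindex.
print(x_robots_for("/promo/spring-sale/landing.html", date(2025, 6, 2)))
# prints: noindex
```

Passing `today` in as an argument, instead of reading the clock inside the function, keeps the rule logic easy to test for dates on either side of the campaign end.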

Implementing Effective SEO Strategies With Robots Meta Directives

In the realm of search engine optimization, the judicious employment of Robots Meta Directives can be likened to a maestro conducting a symphony, each directive a precise note contributing to the overall harmony of SEO goals.

The act of aligning Robots Meta Directives with SEO ambitions, carefully balancing indexation with a website’s structural nuances, and averting the common mishaps in directive deployment comprises the bedrock of a refined digital strategy.

These practices empower website managers to curate their site’s presence within the worldwide web meticulously, optimizing visibility while preserving the quality and intention behind each web page.

Aligning Robots Meta Directives With SEO Goals

Aligning Robots Meta Directives with SEO goals demands a strategic interplay between a website’s objectives and the search engine’s understanding of its content. LinkGraph’s SEO Experts excel at defining Robots Meta Directives that precisely reflect a client’s aspirations for visibility and search performance, ensuring that each directive harmonizes with the site’s overarching story and end-user value propositions.

Masterful utilization of Robots Meta Tags often leads to marked improvements in search ranking and user engagement. LinkGraph leverages in-depth knowledge of search engine algorithms to align Robots Meta Directives with a site’s SEO objectives, whether it’s amplifying a product page’s reach or concealing transitional content from search scrutiny, driving targeted outcomes in the digital landscape.

Balancing Indexation With Site Structure Using Directives

Striking a harmonious balance between careful indexation and the unique structural features of a website is critical when deploying Robots Meta Directives. LinkGraph’s seasoned SEO strategists understand that a website’s internal architecture must be considered in tandem with indexation rules to foster optimal search engine understanding and user navigation.

For example, a site with a layered directory of technical resources may require nuanced ‘noindex’ policies to keep under-development areas discreet until full readiness is achieved:

- Metadata directives protect in-progress content, maintaining site credibility.

- ‘Follow’ directives ensure that links within unindexed sections can still be crawled, so those sections continue to contribute to the site’s overall link structure and domain authority.

- By utilizing ‘X-Robots-Tag’, SEO specialists can extend these protections to various file types, safeguarding a seamless user journey.

With the adept application of robots meta tags, LinkGraph aims to boost client websites’ relevance and discoverability while preserving the deliberate arrangement of their digital framework. This meticulous SEO methodology ensures that search engines crawl and index content in a way that aligns with the strategic priorities and structural organization of the client’s online presence.

Avoiding Common Pitfalls in Directive Implementation

Avoiding common pitfalls in directive implementation demands attentiveness to detail and an understanding of a website’s hierarchy: nuances best navigated with LinkGraph’s expert guidance. Misplaced ‘noindex’ or ‘nofollow’ tags can inadvertently shroud vital content, strangling the flow of organic search traffic to pivotal areas of a site. As such, LinkGraph’s SEO services provide a meticulous review of metadata to ensure that directives are placed deliberately and strategically, ensuring the accurate indexing and linkage required for a robust SEO foundation.

| Directive | Common Pitfall | LinkGraph Mitigation Strategy |
|---|---|---|
| noindex | Inadvertent application on key pages | Meticulous review and verification of page significance before implementation |
| nofollow | Overzealous use disrupting internal link equity distribution | Strategic placement only on selected external or non-essential links |
| noarchive | Unintentional hindrance to content accessibility | Careful assessment to balance content freshness with user experience |
| nosnippet | Reduction in click-through rates due to lack of search result previews | Calibrated use influenced by the nature of the content and user search intent |
| noimageindex | Limiting visual content discovery in image searches | Discerning application to protect intellectual property without impairing overall visibility |

Understanding the interrelation between robots directives and site architecture is paramount, and overlooking it is a misstep LinkGraph deftly avoids. An excessive ‘nofollow’ directive on internal links, for example, can culminate in an underutilization of a website’s link equity, a foundational block of SEO. LinkGraph distinguishes itself by applying a precise balance to these directives, elevating SEO performance while preserving resourceful link structures and producing a search-optimized site dynamic.
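The first two pitfalls in the table lend themselves to an automated check. The sketch below flags key pages that carry ‘noindex’ or a page-level ‘nofollow’. The function name, the input shape (a mapping from URL path to robots content string), and the sample paths are assumptions for this example.

```python
def check_key_pages(page_directives, key_pages):
    """Return warnings for key pages that carry restrictive directives.

    page_directives maps a URL path to its robots content string;
    key_pages is the set of paths that must stay indexed and followed.
    """
    warnings = []
    for url in sorted(key_pages):
        content = page_directives.get(url, "")
        found = {d.strip().lower() for d in content.split(",") if d.strip()}
        if "noindex" in found:
            warnings.append(f"{url}: key page is set to noindex")
        if "nofollow" in found:
            warnings.append(f"{url}: key page is set to nofollow")
    return warnings


# Hypothetical crawl results used only for demonstration.
crawl = {
    "/pricing": "noindex, follow",
    "/features": "index, follow",
    "/login": "noindex, nofollow",
}
for warning in check_key_pages(crawl, {"/pricing", "/features"}):
    print(warning)
# prints: /pricing: key page is set to noindex
```

The login page is not reported because it is not in the key-page set, which matches the intent of restricting it in the first place.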

The Interplay Between Robots Meta Directives and Site Health

The vitality of a website’s SEO performance is intimately tied to the health of the site, a condition significantly influenced by the correct use of Robots Meta Directives.

These directives, when efficiently applied, can protect against unwanted indexing and preserve the integrity of a site’s search standing.

Conversely, missteps in their application have the potential to obscure valuable content from search engines, culminating in diminished online visibility and hindered site performance.

Recognizing and remedying issues related to meta directives is thus crucial, necessitating a blend of diagnostic tools and techniques to evaluate their effectiveness.

As businesses strive for enhanced digital prominence, deploying corrective measures rooted in a deep understanding of Robots Meta Directives becomes key to securing an improved and healthier site profile within the competitive digital ecosystem.

Diagnosing Site Health Issues Related to Meta Directives

Robots Meta Directives serve as a guidebook for search engine crawlers, signifying which areas of a website should be indexed or left unexplored. At LinkGraph, diagnostics commence with a comprehensive review of a site’s current Meta Directives, uncovering any misconfigurations or inconsistencies that might impede search engine visibility or lead to inefficient crawling.

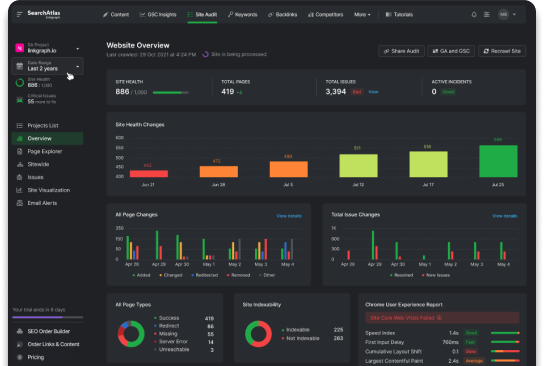

LinkGraph’s rigorous free SEO audit process includes an examination of potential conflicts within Robots Meta Tags that could be detrimental to site health. Harnessing the insights from SearchAtlas SEO software, SEO specialists can identify and rectify directive-related issues swiftly, ensuring that each web page communicates optimally with search engines.
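A conflict check of this kind can be sketched in a few lines. The example below compares the directives found in a page’s robots meta tag with those sent in its ‘X-Robots-Tag’ header and reports contradictory pairs. The function names and the pair list are assumptions for illustration and do not describe how any particular audit tool works.

```python
# Pairs of directives that contradict each other when both are present.
CONTRADICTIONS = [("index", "noindex"), ("follow", "nofollow")]


def parse_directives(value):
    """Split a comma-separated directive string into a normalized set."""
    return {d.strip().lower() for d in value.split(",") if d.strip()}


def find_conflicts(meta_content, header_value):
    """Return contradictory pairs across the meta tag and the HTTP header."""
    combined = parse_directives(meta_content) | parse_directives(header_value)
    return [pair for pair in CONTRADICTIONS if set(pair) <= combined]


print(find_conflicts("index, follow", "noindex"))
# prints: [('index', 'noindex')]
```

Google’s documentation indicates that when robots rules conflict, the more restrictive one applies, so a stray ‘noindex’ in a header can quietly override an ‘index’ in the markup.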

Tools and Techniques for Evaluating Directive Efficacy

Tools and techniques for evaluating the efficacy of Robots Meta Directives are central to maintaining SEO performance. LinkGraph, through its meticulous use of SearchAtlas SEO software, avails clients of advanced analytics to discern the effectiveness of Robots Tags on their web properties.

These evaluations are crucial for informed decision-making regarding SEO strategy adjustments and directive optimization:

- Analyzing the presence and accuracy of Robots Meta Tags on crucial landing pages reveals their alignment with intended indexation.

- Employing free backlink analysis provides insight into how directives affect the site’s external link profile and the resulting SEO impact.

- Using server log files, SEO experts can trace crawler behaviors and confirm the directives’ influence on search engine bot activity.
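The log-file technique in the last point can be approximated as follows. The sketch assumes access logs in the common combined format and counts requests whose user agent contains a crawler token; the sample lines, IP addresses, and paths are invented for demonstration, and the function name `crawler_hits` is an assumption.

```python
import re
from collections import Counter

# Matches the request, status, and trailing user-agent fields of a
# combined-format access log line (an assumption about the log layout).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def crawler_hits(lines, bot_token="Googlebot"):
    """Count requests per path made by user agents containing bot_token."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if match and bot_token.lower() in match.group("agent").lower():
            hits[match.group("path")] += 1
    return hits


# Hypothetical log lines used only for demonstration.
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Oct/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 2326 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [10/Oct/2024:13:56:02 +0000] "GET /drafts/new-section HTTP/1.1" 200 1500 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2024:13:57:11 +0000] "GET /pricing HTTP/1.1" 200 2326 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for path, count in sorted(crawler_hits(SAMPLE_LOG).items()):
    print(path, count)
```

User-agent strings can be spoofed, so counts like these are a first signal rather than proof of genuine crawler activity.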

Improving Site Visibility Through Corrective Measures

LinkGraph employs a proactive stance in enhancing site visibility by implementing corrective measures tailored to the intricacies of Robots Meta Directives. Adjusting these directives to accurately reflect the desired indexability and linkage for each web page ensures that a site’s SEO value and visibility are optimized, driving more targeted traffic and user engagement.

Conducting thorough revisions and updates to Robots Meta Directives, LinkGraph’s SEO services facilitate a website’s ability to communicate more effectively with search engine algorithms. This process not only improves the site’s alignment with SEO best practices but also amplifies its visibility across the digital landscape, emboldening its presence amongst competitors.

Enhancing SEO With Content and Indexing Rules

Mastering the nuances of Robots Meta Directives is an indispensable facet of search engine optimization, ultimately wielding the power to dictate content visibility on the web.

As the digital landscape burgeons with content, setting precise indexing rules becomes imperative for SEO success.

The deployment of these directives, when executed with precision, aids in the meticulous curation of what is revealed to search engines, influencing the online footprint of a web page.

Furthermore, the intrinsic quality of content interplays with Meta Directive efficacy, underscoring the pivotal role that well-crafted, authoritative content plays in bolstering the performance of these directives in the relentless pursuit of SEO superiority.

Strategizing Content Visibility With Indexing Rules

Strategizing content visibility through the adept application of indexing rules is a task LinkGraph’s SEO services handle with expertise. Ensuring that a web page’s most crucial information is poised for search engine discovery, while peripheral or sensitive content remains unindexed, tailors a website’s narrative within the digital domain and delivers content with purposeful precision.

The relationship between content excellence and indexing strategies cannot be overstated; crafting compelling, authoritative content in conjunction with well-defined Robots Meta Directives optimizes a web page’s search engine footprint. LinkGraph’s utilization of these directives augments a website’s SEO performance, ensuring that the right content garners the visibility it deserves.

Importance of Precision in Robots Meta Directive Usage

The meticulous deployment of Robots Meta Directives is essential for maintaining the clarity and effectiveness of messages delivered to search engine crawlers. A single oversight in positioning a ‘noindex’ or ‘nofollow’ directive can lead to significant fluctuations in a site’s search rankings, underscoring the need for LinkGraph’s precision in executing these commands for optimal SEO performance.

LinkGraph’s commitment to accuracy resonates in their handling of Robots Meta Directives, positioning each directive with careful consideration to the content’s value and intended visibility. By ensuring that directives are purposefully aligned with a site’s SEO content strategy, LinkGraph provides the necessary finesse to enhance a website’s searchability and digital presence without compromising its structural integrity.

The Role of Content Quality in Meta Directive Performance

Content quality operates as an essential catalyst in magnifying the efficacy of Robots Meta Directives within the SEO sphere. The prowess of LinkGraph’s SEO content strategy surfaces when valuable, meticulously curated content is correctly paralleled with tailored meta directives, engendering both user engagement and search engine favorability.

At the intersection of indexation management and content superiority lies the potential for SEO success—a potential that is harnessed through LinkGraph’s white-label SEO services. Properly executed, high-caliber content embellished with precise meta tag directives ensures that every web page achieves its maximum reach, solidifying a stellar performance in search engine results.

Integrating Robots Meta Directives Into Your SEO Toolkit

The strategic integration of Robots Meta Directives into an SEO toolkit marks a significant leap towards enhanced digital visibility and search engine rapport.

As webmasters strive to streamline crawl efficiency and refine the representation of their sites in search results, understanding the nuances of Robots Meta Tags becomes more than a tactical advantage—it transforms into an essential aspect of SEO proficiency.

This comprehensive discussion embarks on a step-by-step guide to the effective application of Robots Meta Tags, the meticulous tracking of performance shifts following directive implementation, and the importance of staying abreast of evolving SEO trends concerning meta tag usage.

Each segment aims to equip professionals with the knowledge to wield these directives with confidence, ensuring their digital strategy remains dynamic and SEO outcomes measurable.

Step-by-Step Guide to Applying Robots Meta Tags

Commencing the application of Robots Meta Tags involves the initial step of identifying the specific pages on a website that require directives. LinkGraph’s expertise facilitates the seamless insertion of these tags into the HTML code of each designated page, ensuring that directives such as ‘noindex’ or ‘nofollow’ are strategically placed to guide search engine crawlers according to the site’s SEO objectives.

Following the placement of Robots Meta Tags, the subsequent phase involves monitoring the search engine’s response to these cues. In this process, LinkGraph’s SEO specialists employ advanced analytics to ascertain the impact of the tags on a website’s search visibility and crawl efficiency, fine-tuning the directives as necessary to ensure an optimized SEO performance.
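The insertion step described above might look like the following sketch, which adds a robots meta tag to a page’s head section or replaces one that is already present. The function name `apply_robots_meta` is an assumption, and the regular expressions are deliberately simple: they expect the `name` attribute to come first in an existing tag, so a template engine or HTML parser would be the sturdier choice in production.

```python
import re

# Simplified patterns: an existing robots meta tag (name attribute first)
# and the opening <head> tag, with or without attributes.
EXISTING_TAG = re.compile(r'<meta\s+name=["\']robots["\'][^>]*>', re.IGNORECASE)
HEAD_OPEN = re.compile(r"<head(?:\s[^>]*)?>", re.IGNORECASE)


def apply_robots_meta(html_text, content):
    """Insert or replace the robots meta tag in an HTML document."""
    tag = f'<meta name="robots" content="{content}">'
    if EXISTING_TAG.search(html_text):
        # Replace the first existing tag so the page carries one directive set.
        return EXISTING_TAG.sub(lambda m: tag, html_text, count=1)
    if not HEAD_OPEN.search(html_text):
        raise ValueError("no <head> element found")
    return HEAD_OPEN.sub(lambda m: m.group(0) + tag, html_text, count=1)


print(apply_robots_meta("<html><head><title>T</title></head></html>", "noindex, follow"))
# prints: <html><head><meta name="robots" content="noindex, follow"><title>T</title></head></html>
```

Replacing an existing tag instead of adding a second one avoids leaving two sets of directives on the same page.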

Tracking Performance Changes Post-Directive Implementation

Following the tailored implementation of Robots Meta Directives, LinkGraph’s comprehensive analytics play a pivotal role in revealing the consequent changes in SEO performance. By diligently tracking variations in search rankings, indexation rates, and organic traffic flow, these discernible metrics provide a clear picture of whether the applied directives are propelling the client’s SEO objectives forward.

Effective performance monitoring post-directive application is marked by the measurement of specific, consequential data points:

- Evaluation of the web page’s visibility in SERPs to ascertain the directive’s influence on search engine placement.

- Inspection of user engagement levels post-changes to determine the directive’s effect on organic site interactions.

- Review of indexation rates to confirm that pages are included in or excluded from the index as intended.

These analytical insights offer LinkGraph’s clients an informed overview, illuminating the efficacy of their current SEO strategies.

Keeping Ahead of SEO Trends in Meta Tag Usage

Staying abreast of SEO trends in the usage of Robots Meta Tags is not just about keeping pace with the present; it’s about forecasting the shifts in search engine algorithms and preparing for those changes. As search engines evolve their understanding and processing of meta directives, the experts at LinkGraph ensure their clients’ tactics adapt efficiently, securing a competitive edge in search engine result pages (SERPs).

Adaptation in the world of SEO is relentless, and proficiency in the application of Robots Meta Tags must follow suit. LinkGraph’s strategic foresight involves not only the application of current best practices but also the anticipation of emerging trends:

- Observing and analyzing algorithm updates to predict shifts in meta tag relevance.

- Integrating new directives as they become acknowledged by search engines to maintain the cutting-edge optimization of content.

- Ensuring continual education and testing to refine meta tag strategies, keeping a step ahead of industry changes.

Conclusion

Understanding Robots Meta Directives is essential for optimizing SEO performance.

These directives, when applied correctly, offer webmasters control over how search engines crawl and index website content.

Such precision in guiding search engine behavior ensures that only relevant and desired content appears in search results, while non-essential pages are strategically overlooked, improving crawl efficiency and conserving crawl budget.

By mastering the use of directives like ‘noindex’ and ‘nofollow’, SEO professionals can manage the flow of link equity, preserve content exclusivity, and maintain site integrity.

Implementing the versatile ‘X-Robots-Tag’ within HTTP headers further extends this control to non-HTML files, providing comprehensive indexing management across a site’s digital assets.

LinkGraph’s expertise in precise directive application, along with vigilant monitoring and adaptation to SEO trends, enables websites to maintain efficient communication with search engines.

This optimization leads to improved site visibility, user engagement, and ultimately, superior SEO performance.

Understanding and leveraging Robots Meta Directives thus remain indispensable for anyone seeking to elevate their digital strategy and achieve SEO success.