Essential On-Site Technical SEO Factors for Higher Rankings

Essential on-Site Technical SEO Factors to Optimize Your Website

Navigating the landscape of on-site technical SEO factors can be akin to exploring a complex labyrinth, each corner holding the potential to drastically improve a site’s search engine ranking.

An understanding of technical elements such as sitemaps, robots.txt files, and URL structure is not merely advantageous—it’s imperative for web developers and marketers aiming to enhance their site’s visibility and user experience.

LinkGraph’s comprehensive suite of SEO services, including meticulous SEO audits, stands ready to guide businesses through these multifaceted on-site challenges.

With a focus on the essentials of SSL certification, site speed, and core web vitals, optimizing a website becomes a structured journey towards digital success.

Keep reading to embark on a path toward SEO mastery, guided by the expert insights from LinkGraph.

Key Takeaways

- Sitemaps Guide Search Engine Crawlers and Influence Website Search Engine Ranking

- The robots.txt File Tells Crawlers Which Parts of a Site to Crawl or Skip, Focusing Crawl Budget on the Pages That Matter

- Crawl Errors Can Hinder Search Engine Indexing but Can Be Identified and Rectified Using Webmaster Tools Like Google Search Console

- SSL Certification Enhances Website Security, Contributing to Search Engine Trust and Ranking

- Core Web Vitals Are Essential Metrics Used by Google to Assess User Experience and Inform SEO Enhancements

Understanding and Implementing Sitemaps

A robust sitemap stands as a foundational component within the multifaceted on-site technical SEO arena.

It serves as a guide for search engine crawlers, helping them discover and index a website’s pages, which in turn can influence its search engine ranking.

By decoding the varieties of sitemaps, webmasters can tailor these frameworks to boost the visibility of their site’s architecture.

This section details the distinctive sitemap types, delineates a practical process for their creation, and elucidates the methodology for their submission to search engines—all elements essential for enhancing a website’s searchability and user experience.

Identify the Types of Sitemaps and Their Functions

Traditionally, there exist two primary sitemap variants—XML and HTML sitemaps. An XML sitemap, tailored for search engine spiders, meticulously lists URLs, prioritizing site content and aiding in streamlining crawlability, while an HTML sitemap enhances user navigation by presenting site visitors with a simplified directory of the web pages available.

In the realm of SEO, sitemaps extend beyond simple URL listings; they help search engines understand site structure and can include metadata, such as the date a page was last modified, its expected update frequency, and its relative importance within the overall website hierarchy. Search engines treat these values as hints rather than directives, but accurate metadata can still help them allocate crawl budget toward relevant, frequently updated content, thereby fortifying a website’s SEO foundation.

Step-by-Step Guide to Creating a Sitemap

Creating a sitemap begins with a thorough inventory of the website’s structure, identifying all its pages, media, and files: a crucial preliminary step before any coding ensues.

| Step | Action | Outcome |
|---|---|---|
| 1 | Analyze Website Structure | Comprehensive List of Website Resources |
| 2 | Code the Sitemap | XML/HTML sitemap file ready for use |

Following the assessment, developers can generate the sitemap using a selection of tools or through manual coding, depending on the complexity and size of the site. The resulting sitemap should then be reviewed to ensure its format is both precise for search engine crawlers and comprehensible for users.
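For the manual-coding route, a minimal sketch in Python might look like the following. It assumes the page inventory from step 1 is already available as a list of URL and last-modified pairs; the `build_sitemap` helper and the `example.com` URLs are illustrative placeholders, not part of any particular tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return an XML sitemap string for an iterable of (url, lastmod) pairs.

    lastmod may be None when the modification date is unknown.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages on a placeholder domain.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", None),
])
print(sitemap_xml)
```

For large sites, a CMS plugin or crawler-based generator is usually the more practical choice; the sketch only shows the shape of the file such tools produce.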

How to Submit Your Sitemap to Search Engines

Upon creation and optimization of a sitemap, the subsequent step involves submitting it to search engines, a critical move to expedite the indexing process. This typically takes place through the search engine’s respective Webmaster Tools, such as Google Search Console, where the URL of the sitemap can be entered directly into the ‘Sitemaps’ section.

Completion of this process signals to search engines, like Google, that a new or updated sitemap is ready for consideration. It’s a proactive measure that aids the search bots in discovering new content additions and structural changes more rapidly, favorably influencing the site’s potential to climb in search engine results pages (SERPs).

The Role of Robots.txt in SEO

At the core of on-site technical SEO, the robots.txt file serves as a critical directive to search engine crawlers.

This powerful, yet often overlooked SEO asset dictates how search bots navigate a site, which is crucial for keeping crawlers away from private or low-value sections and for optimizing the indexing process.

Addressing its strategic development involves understanding its purpose, meticulously crafting the file to meet a website’s specific needs, and verifying its correct implementation—all essential measures that reinforce search engine compatibility and enhance a site’s online visibility.

A well-configured robots.txt file can be transformative, positioning a website to outperform competitors by ensuring that valuable crawl budget is spent on pages that matter most to searchers and potential customers.

Understanding the Purpose of Robots.txt

The robots.txt file performs a gatekeeping function, instructing search engine bots on which pages or sections of a website they may crawl and which they should skip. Significantly, this simple text file directs web crawlers in their journey across a website’s territories, ensuring that they spend their valuable time analyzing pages that meaningfully contribute to the site’s SEO footprint.

With this strategic configuration, the robots.txt file keeps well-behaved crawlers out of private or low-value sections, while simultaneously signalling the portions of the site ripe for exploration: an undertaking critical for elevating a website’s presence in SERPs. Because the file is publicly readable and purely advisory, genuinely sensitive pages still need authentication or a noindex directive. The careful crafting of such a file demands both foresight and precision, as it underpins the ease with which a search engine discerns the structure and priority of the site’s content:

| Function | Description | Impact on SEO |
|---|---|---|
| Access Control | Determines visibility of the site’s sections to search bots | Prevents crawl budget waste on non-essential pages |
| Indexing Guidance | Communicates preferred pages for search engine analysis | Enhances the indexing of prioritized content |

Crafting an Effective Robots.txt File

When crafting an effective robots.txt file, professionals must balance accessibility with exclusivity, creating a blueprint that guides search bots toward content that enhances the website’s search engine standing. This text file communicates clear directives to the search engine crawlers, ensuring that they index the right content and overlook the areas marked as off-limits.

The careful construction of a robots.txt file commences with an analysis of the website’s content priorities and security requirements: an analytical approach is key to establishing a targeted set of instructions for search bots. Crafting this file with meticulous attention to detail not only keeps crawlers out of restricted areas but also optimizes crawl efficiency, bolstering the website’s SEO performance:

- Examine the website content to determine which areas are prioritized for search engine exposure.

- Identify directories or pages that should be restricted from search bot access to maintain privacy or security.

- Implement explicit directives within the robots.txt file to guide search bots towards desirable content and away from restricted areas.
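As an illustration of the third step, the sketch below assembles a simple robots.txt from a list of restricted paths. The `build_robots_txt` helper, the `/admin/` and `/cart/` paths, and the sitemap URL are hypothetical; the `User-agent`, `Disallow`, and `Sitemap` lines are standard robots.txt directives.

```python
def build_robots_txt(disallow, sitemap_url=None, user_agent="*"):
    """Build robots.txt content for one user-agent group.

    disallow: list of path prefixes crawlers should skip.
    sitemap_url: optional absolute URL of the XML sitemap.
    """
    lines = [f"User-agent: {user_agent}"]
    if disallow:
        lines += [f"Disallow: {path}" for path in disallow]
    else:
        # An empty Disallow value means nothing is blocked.
        lines.append("Disallow:")
    if sitemap_url:
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Hypothetical restricted areas on a placeholder domain.
robots_txt = build_robots_txt(
    ["/admin/", "/cart/"],
    sitemap_url="https://www.example.com/sitemap.xml",
)
print(robots_txt)
```

The generated file would be served from the site root, at `/robots.txt`.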

Verifying Your Robots.txt Implementation

The final, yet pivotal step in the optimization of a website’s SEO lies in the verification of the robots.txt file’s efficacy. This process involves ensuring that the directives deployed within the file are correctly understood and followed by the search engine crawlers.

Webmasters must use tools designed to simulate the perspective of search bots: an essential check that confirms whether the robots.txt file accurately communicates which areas of a site to crawl and which to ignore. These verification efforts not only underscore potential issues but also spotlight opportunities for further refinement of SEO strategies:

- Utilize search engine tools such as Google Search Console to test robots.txt directives.

- Analyze the responses of crawl simulations to ascertain if search bots are adhering to the specified protocol.

- Adjust and revalidate the robots.txt file as necessary to achieve optimal search engine interpretation and performance.
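Alongside search engine tools, a quick local check is possible with Python’s standard `urllib.robotparser` module. The sketch below assumes a small hypothetical rule set and placeholder URLs; real crawlers may interpret edge cases (wildcards, for example) differently from this parser, so it complements rather than replaces the official testers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; in practice, paste in the site's real robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if user_agent may fetch url under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("https://www.example.com/blog/post",
            "https://www.example.com/admin/users"):
    verdict = "crawlable" if is_allowed(ROBOTS_TXT, "Googlebot", url) else "blocked"
    print(url, "->", verdict)
```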

Resolving Common Crawl Errors

In the realm of on-site technical SEO, the adept management of crawl errors is indispensable for advancing a site’s search engine ranking prospects.

These errors can obstruct the path search engine crawlers take, causing them to miss critical content and thereby detrimentally affect a website’s visibility.

Leveraging Webmaster Tools is pivotal for detecting such discrepancies in crawling processes.

Once identified, webmasters must prioritize and remediate these errors to smooth the path for search bots.

Continual vigilance is required, with routine monitoring necessary to ensure new crawl issues do not compromise the meticulous work invested in a website’s SEO strategy.

Detecting Crawl Errors Using Webmaster Tools

Detecting crawl errors is a critical step for webmasters, as these errors can impede search engine crawlers from accessing and indexing important content on a website. Webmaster Tools, like Google Search Console, offer indispensable insights into the health of a site’s indexing status, highlighting areas that require attention and providing actionable data to address issues.

Upon logging into Webmaster Tools, users can navigate to the coverage section to review error reports that outline any crawling difficulties their site may face. This interface facilitates prompt identification of issues such as broken URLs, server errors, or security concerns that could otherwise diminish the site’s visibility on search engine results pages:

- Review Webmaster Tools error reports for identifying crawl errors.

- Investigate listed issues such as inaccessible URLs, server malfunctions, or security warnings.

- Initiate error resolution to prevent these impediments from hindering search engine indexing.

Prioritizing and Addressing Crawl Errors

Pinpointing the most critical crawl errors forms the bedrock of optimization efforts, demanding immediate attention be given to severe issues such as 404 errors and server availability problems. These defects can significantly disrupt a website’s accessibility to both users and search engines, rendering prompt resolution paramount to maintain a healthy digital presence.

Post-identification, the route to rectifying crawl errors involves a methodical approach to troubleshooting and applying corrective measures to alleviate the issues at hand:

- Analyze server logs to identify patterns that might signal the root cause of crawl errors.

- Repair broken links and rectify any improper redirects that contribute to navigation and indexing challenges.

- Update security protocols and resolve any related errors to ensure website safety is not a barrier to search engine crawling.
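The log analysis in the first step can be sketched as follows. The example assumes access logs in the common "combined" format and identifies the crawler by a user-agent substring; the `crawl_errors` helper and the sample lines are hypothetical, and a production audit would also verify that requests genuinely originate from the search engine.

```python
import re
from collections import Counter

# Matches the request, status, and user-agent fields of a combined-format line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def crawl_errors(log_lines, bot_token="Googlebot"):
    """Count 4xx/5xx responses served to a crawler, keyed by (status, path)."""
    errors = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line.strip())
        if not match or bot_token not in match["agent"]:
            continue
        status = int(match["status"])
        if status >= 400:
            errors[(status, match["path"])] += 1
    return errors


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical log excerpt.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Jan/2024:10:05:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Jan/2024:10:06:00 +0000] "GET /api/feed HTTP/1.1" 503 0 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Jan/2024:10:07:00 +0000] "GET / HTTP/1.1" 200 9000 "-" "{GOOGLEBOT}"',
    '203.0.113.9 - - [10/Jan/2024:10:08:00 +0000] "GET /x HTTP/1.1" 500 0 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for (status, path), count in crawl_errors(SAMPLE_LOG).most_common():
    print(status, path, count)
```

Sorting by frequency surfaces the URLs that waste the most crawl activity, which is a reasonable starting point for prioritizing fixes.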

Monitoring for New Crawl Issues Regularly

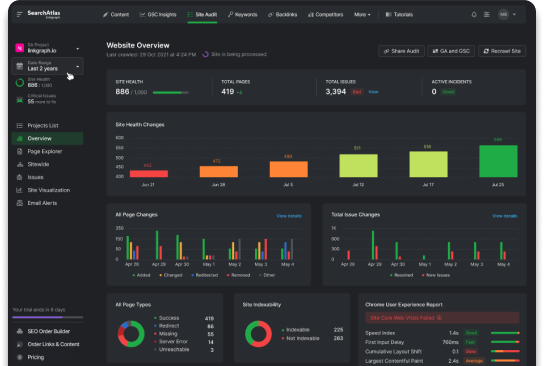

Staying vigilant with regular monitoring is the linchpin in preemptively addressing crawl errors, ensuring they don’t metastasize into larger SEO impediments. LinkGraph’s SEO services recognize the critical importance of this continuity, offering dedicated tracking to discern any new crawl issues that arise, laying the groundwork for immediate and effective resolutions.

| Monitoring Frequency | Tools Utilized | Benefit |
|---|---|---|
| Ongoing | Search Atlas, Google Search Console | Prevents impact on SEO rankings |
| Ad-hoc Post-Updates | LinkGraph’s Site Audit | Identifies issues post implementation |

Employing advanced analytics as part of LinkGraph’s SEO Audit services, clients are privy to comprehensive reports that spell out any emergent crawl issues, with granular details that inform the necessary corrective strategies. This not only preserves the user experience but also maintains the integrity of the website’s structure for search engine crawlers.

Ensuring URL Structure Consistency

In the meticulous task of on-site technical SEO, the constancy of URL structure emerges as a critical element, capable of either propelling a website toward commendable search engine visibility or hindering its rise in the ranks.

Equally noteworthy is the URL’s case sensitivity, which can influence the user experience as well as how search engine crawlers interpret and index website pages.

This underscores the need for techniques aimed at normalizing URL structures, forging a cohesive and crawler-friendly framework that aligns with SEO best practices and enhances the site’s discoverability.

The Impact of URL Case Sensitivity on SEO

URL case sensitivity plays a subtle yet significant role in search engine optimization, impacting how search engine crawlers index and differentiate between content. Because URL paths are case-sensitive, addresses that differ only in capitalization can be treated as separate pages serving the same content. Search bots may perceive this as duplicate content, diluting the authority of web pages and potentially resulting in lower search engine rankings.

Experts at LinkGraph underscore the importance of uniform URL structures, considering them a key component in the user’s journey and a facet that search engines evaluate. By fostering URL consistency, brands ensure that every visitor – human or crawler – is directed to the correct resource, enhancing search relevance and efficiency.

Techniques for Normalizing URL Structures

Mastering the art of URL normalization is akin to providing a clear roadmap for both users and search engine crawlers. To achieve uniformity, LinkGraph equips sites with strategies that transform URL structures into consistent, optimized formats:

- Implementing server-side redirects to unify varying capitalization and enforce a single, consistent (typically lowercase) URL format.

- Adjusting the website’s internal linking to utilize a singular, preferred URL structure, thereby preventing the dispersal of link equity.

- Configuring canonical tags to signal the preferred version of a webpage when potential duplicates exist.

LinkGraph’s approach to URL normalization ensures that variations do not fracture the user experience or impede a site’s SEO performance. This meticulous attention to detail cultivates a cohesive digital ecosystem where every link fortifies the site’s authority and search engine discovery potential.
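A minimal sketch of such a normalization rule is shown below. It assumes a site that has chosen lowercase paths, HTTPS, and no trailing slash as its conventions; these are illustrative choices rather than universal requirements, and the `normalize_url` and `redirect_for` helpers are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url):
    """Return the preferred form of url under the assumed conventions:
    https scheme, lowercase host and path, no trailing slash, no fragment."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/")
    return urlunsplit(("https", parts.netloc.lower(), path, parts.query, ""))


def redirect_for(url):
    """Return (301, target) when url differs from its normalized form, else None."""
    target = normalize_url(url)
    return None if target == url else (301, target)


print(redirect_for("HTTP://WWW.Example.com/Blog/SEO-Tips/"))
print(redirect_for("https://www.example.com/blog/seo-tips"))
```

The same function can drive both the server-side redirect and an audit of internal links, so that the two techniques stay consistent. Note that lowercasing paths is only safe when no live resource depends on mixed-case URLs.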

The Importance of SSL Certification for SEO

The safeguarding of user data through Secure Sockets Layer (SSL) certification, today delivered via its successor protocol, Transport Layer Security (TLS), has graduated from a preference to a necessity in the dynamic sphere of website optimization.

As a trusted hallmark of security, SSL certification not only protects data integrity and confidentiality during transit between the user’s device and the server but also serves as a trust signal to search engines—a vital SEO ranking factor that can impact a website’s visibility and credibility.

Delving into the nature of SSL and the advantages it presents in the SEO arena, alongside the practical steps for acquiring and deploying an SSL certificate, presents site owners with a clear pathway to enhancing both website security and search engine favorability.

Understanding SSL and Its SEO Benefits

SSL certification emerges as a pivotal component in the realm of modern SEO, reinforcing site security while simultaneously enhancing search engine trust. An SSL-secured website encrypts data exchanged between a visitor’s device and the server, laying a foundation for safe and reliable user interactions, a factor highly favored by search engines in determining rankings.

Professionals at LinkGraph recognize the influence of SSL on SEO outcomes, as search engines now regard site security as a critical parameter when evaluating page quality. By adopting SSL certifications, websites signal their commitment to safeguarding user information, thereby gaining a competitive edge in the search engine results pages through improved credibility and user confidence.

How to Obtain and Install an SSL Certificate

Securing an SSL certificate is the initial step towards reinforcing a website’s trustworthiness and SEO positioning. Business owners can procure these digital certificates through trusted Certificate Authorities (CAs), who verify the legitimacy of the domain and the organization requesting the encryption. Once acquired, the certificate asserts to search engines and visitors alike the site’s commitment to security, potentially bolstering its ranking in search engine results.

Installation of an SSL certificate involves a series of technical adjustments conducted on the web server. This step calls for care, as webmasters or hosting providers integrate the certificate and ensure that the website operates under the HTTPS protocol, signifying enhanced security. The execution of this process, when done correctly, serves as a clear signal to search engines, effectively contributing to the site’s credibility and SEO value.
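Once the certificate is installed, it can be useful to confirm that it is being served and to track when it expires. The sketch below uses only Python’s standard `ssl` and `socket` modules; the helper names are hypothetical, and `fetch_not_after` requires network access to the host being checked.

```python
import socket
import ssl
import time


def fetch_not_after(hostname, port=443, timeout=5.0):
    """Connect over TLS and return the certificate's 'notAfter' string.

    Raises ssl.SSLError if the certificate fails validation.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Whole days between now (epoch seconds) and the certificate expiry."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return int((expires - now) // 86400)
```

A typical use would be `days_until_expiry(fetch_not_after("www.example.com"))`, with the placeholder hostname replaced by the real one, run on a schedule so that renewal is never missed.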

Minifying CSS and JavaScript to Improve Load Times

As web developers and SEO experts prioritize the refinement of on-site technical SEO, the optimization of CSS and JavaScript through minification has emerged as a key contributor towards enhancing page speed and overall website performance.

Recognized as a paramount SEO ranking factor, the swift loading of a website page not only elevates user experience but also bolsters the site’s standing in search engine results.

This subsection delves into the necessity of employing tools for minifying website code and addresses the repercussions of code bloat on search engine optimization, two areas where efficient code can lead to significant gains in SERP rankings.

Tools for Minifying Website Code

One of the primary tools to streamline a website’s functionality is minification, a process by which unnecessary characters like whitespace, comments, and line breaks are removed from CSS and JavaScript files. This reduction in file size allows for faster page loads, a critical SEO ranking factor that enhances the user experience.

LinkGraph’s comprehensive suite of SEO services includes a meticulous code optimization procedure, utilizing industry-leading minification tools. These resources automate the minification process, ensuring that both CSS and JavaScript files are condensed to their most efficient form without compromising their functionality:

| Tool Category | Tool Function | SEO Benefit |
|---|---|---|
| Minification Tools | Compress CSS/JavaScript Files | Improves Page Load Speed |
| Automation Software | Streamlines Minification Process | Ensures Consistency Across Files |
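To make the idea concrete, here is a deliberately naive CSS minifier written with regular expressions. It is a sketch for illustration only: it ignores edge cases such as strings containing comment markers or selectors where whitespace is significant, which is why dedicated minification tools are the safer choice in production. The `minify_css` name is hypothetical.

```python
import re


def minify_css(css):
    """Strip comments and unnecessary whitespace from simple CSS."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim around punctuation
    css = css.replace(";}", "}")                          # drop last semicolon in a rule
    return css.strip()


source = """
/* header */
h1 {
  color: red;
  margin: 0 auto;
}
"""
minified = minify_css(source)
print(minified)
print(f"{len(source)} -> {len(minified)} characters")
```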

Diagnosing Code Bloat and Its SEO Implications

Code bloat, the excessive use of code on a webpage, can inadvertently hamper SEO efforts by inflating page load times and diminishing user experience. The process of diagnosing this bloat begins with an analysis of the web page’s code-to-text ratio, identifying superfluous or redundant scripts that contribute to an unnecessary increase in page size.

LinkGraph’s SEO services employ comprehensive tools that pinpoint code inefficiencies impacting a website’s performance in search engine rankings. The implications of untreated code bloat extend to lower page speed metrics, negatively influencing SEO and, consequently, reducing a site’s ability to compete for top placement on search engine results pages:

- Evaluate existing CSS and JavaScript for potential redundancies.

- Implement minification methods to streamline code efficiency.

- Monitor page load times to observe improvements post-optimization.
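The code-to-text analysis mentioned above can be approximated with Python’s built-in HTML parser. The sketch below computes the share of a page’s bytes that is visible text, skipping `script` and `style` contents; the `text_to_html_ratio` helper is hypothetical, and there is no universally agreed threshold for a "good" ratio, so the number is best used to compare pages or track changes over time.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes that are not inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html):
    """Return visible-text length divided by total HTML length (0.0 to 1.0)."""
    if not html:
        return 0.0
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join("".join(parser.chunks).split())
    return len(text) / len(html)
```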

Tactics for Image Optimization

Optimizing images is a critical component of on-site technical SEO that directly impacts a website’s loading efficiency and user engagement.

As visual content increasingly dominates the digital landscape, adopting a strategic approach to image file management is non-negotiable.

Effective techniques, including the meticulous selection of file names and formats, employing advanced compression methods to minimize file sizes, and deploying responsive images for optimal display across various devices, ensure fast page loads and enhance the overall user experience—a potent combination that can lead to better search engine rankings.

Best Practices for Image File Naming and Formats

Optimizing image files transcends mere aesthetics, evolving into a sophisticated SEO technique. Crafting descriptive, keyword-rich file names anchors images to the search engine’s understanding of the page content, while the judicious selection of the right image format — be it JPEG for photographs with subtle gradations or PNG for graphics demanding transparency — balances quality with load times.

Blazing a trail in image optimization involves not just visual quality but also agility in page responsiveness. The end goal is a seamless union between top-tier user experience—marked by swift page loads and crystal-clear visuals—and enhanced search engine recognition, propelling a brand’s digital footprint to superior heights.

| SEO Element | Best Practices | Impact on SEO |
|---|---|---|
| File Naming | Utilize descriptive, keyword-focused titles. | Improves image relevance in search results. |
| Image Formatting | Select optimal formats for quality and efficiency. | Enhances page load speed and user experience. |
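Both practices in the table lend themselves to small helpers. The sketch below assumes a lowercase, hyphen-separated naming convention and the JPEG/PNG guidance given above; the function names and sample file names are hypothetical.

```python
import re
from pathlib import PurePosixPath


def seo_image_name(filename):
    """Convert a file name to lowercase, hyphen-separated form."""
    path = PurePosixPath(filename)
    stem = re.sub(r"[^a-z0-9]+", "-", path.stem.lower()).strip("-")
    return f"{stem}{path.suffix.lower()}"


def suggest_format(is_photo, needs_transparency):
    """Pick a format: PNG when transparency is needed, JPEG for photographs."""
    if needs_transparency:
        return "png"
    return "jpeg" if is_photo else "png"


print(seo_image_name("Red Running Shoes (Side View).JPG"))
print(suggest_format(is_photo=True, needs_transparency=False))
```

Renaming only normalizes the characters; choosing words that actually describe the image remains an editorial decision.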

Using Compression to Reduce Image File Sizes

Embracing image compression is a transformative strategy in image optimization, effectively minimizing file sizes to accelerate webpage loading without compromising visual fidelity. LinkGraph utilizes sophisticated compression techniques that strip superfluous image data, optimizing visual elements to create a nimble digital environment conducive to swift page rendering.

This meticulous approach to image file management by LinkGraph underscores its understanding of website performance intricacies, as it enhances operational fluidity while carefully preserving image quality. The strategic use of compression serves as an indispensable tool, ensuring imagery remains visually compelling and easily digestible by search engine algorithms.

How to Implement Responsive Images

Implementing responsive images is a nuanced task that commands a holistic understanding of device diversity and display capabilities. Web developers must integrate HTML5’s ‘srcset’ attribute to enable browsers to select the most appropriate image size, consequently optimizing page load times and preserving bandwidth across desktops, tablets, and smartphones.

LinkGraph’s proficiency in on-site technical SEO includes the strategic execution of responsive images to ensure user-centric visual experiences. This fervent attention to adaptive image deliverability not only caters to device-specific resolutions but also aligns with Google’s mobile-first indexing, which unmistakably favors websites that provide an optimized viewing experience for mobile users.
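As a rough sketch of how `srcset` markup can be generated, the helper below builds an `img` tag from a list of available widths. It assumes image variants are stored under a hypothetical naming scheme (`hero-480w.jpg`, `hero-800w.jpg`, and so on); the `responsive_img` function and the paths are illustrative only.

```python
from html import escape


def responsive_img(base, ext, widths, alt, sizes="100vw"):
    """Return an <img> tag whose srcset lists one candidate per width.

    Assumes files are named '<base>-<width>w.<ext>' (a hypothetical scheme).
    """
    ordered = sorted(widths)
    srcset = ", ".join(f"{base}-{w}w.{ext} {w}w" for w in ordered)
    fallback = f"{base}-{ordered[-1]}w.{ext}"  # used by browsers without srcset
    return (f'<img src="{escape(fallback)}" srcset="{escape(srcset)}" '
            f'sizes="{escape(sizes)}" alt="{escape(alt)}">')


print(responsive_img("/img/hero", "jpg", [1200, 480, 800], "Hero banner"))
```

The `sizes` attribute tells the browser how wide the image will be displayed, which it combines with the `w` descriptors to pick the smallest adequate file.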

HTML Errors and W3C Validation

Maintaining a website devoid of HTML errors and adhering to W3C standards is an indispensable on-site technical SEO factor for any web property seeking optimized search engine placement.

HTML errors, like broken tags or improper nesting, can impair a search engine’s ability to crawl and index content, potentially leading to suboptimal search visibility.

Concurrently, W3C compliance ensures that web pages adhere to international guidelines, facilitating a more predictable and accessible user experience across various browsers and devices.

Engaging with tools such as the W3C Validator equips developers with the resources necessary to diagnose and rectify markup discrepancies, thereby fostering a healthier, more SEO-friendly website environment.

How HTML Errors Can Affect SEO

HTML errors undermine the clarity and integrity of a website’s code, which can stifle search engine crawlers’ ability to efficiently parse and index the content, casting a shadow over the site’s SEO potential. Mistakes such as improperly closed tags or deprecated elements can lead to an inconsistent user experience and make pages harder to parse, which can in turn weigh on rankings.

Ensuring adherence to W3C standards is a cornerstone of professional web development, impacting not only accessibility but also a site’s standing in the eyes of search engines. Clean, compliant HTML code facilitates smooth crawling and indexing, signaling to search engines that the website is well-maintained and trustworthy, attributes that commendably contribute to its SEO stature.

Utilizing W3C Validator for a Healthier Website

Engaging with the W3C Validator is a critical step for webmasters who prioritize the health and SEO-readiness of their site. This tool systematically reviews web pages for markup accuracy against web standards, detecting HTML and XHTML discrepancies that can compromise a site’s search engine visibility.

When the validator uncovers errors, developers are presented with actionable insights to refine their web pages: a systematic process initiated by detailed error reports. Aligning webpage code with W3C standards is not merely a technical exercise, but a strategic SEO move that enhances the site’s credibility in the digital ecosystem:

- Run the website through the W3C Validator to spot HTML errors.

- Address the specified errors and warnings to ensure code standard compliance.

- Revalidate the corrected pages to confirm the absence of markup issues.

Regular use of the W3C Validator can lead to significant long-term SEO benefits, as it ensures a website maintains a consistent quality of code. This consistent maintenance has a ripple effect, enhancing the user experience while signaling to search engines that the site is reliably structured and accessible.
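Between full validation runs, a lightweight local check can catch the most common structural slip, unbalanced tags. The sketch below is not a substitute for the W3C Validator: it knows nothing about the HTML specification beyond a list of void elements, and it will flag tags such as `<p>` or `<li>` whose closing tags HTML allows to be omitted. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never take a closing tag.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TagBalanceChecker(HTMLParser):
    """Tracks open tags on a stack and records mismatches."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if tag not in self.stack:
            self.problems.append(f"stray </{tag}>")
            return
        # Pop back to the matching open tag, reporting anything left open.
        while self.stack:
            open_tag = self.stack.pop()
            if open_tag == tag:
                break
            self.problems.append(f"<{open_tag}> not closed before </{tag}>")


def find_markup_problems(html):
    """Return a list of human-readable tag-balance problems."""
    checker = TagBalanceChecker()
    checker.feed(html)
    checker.close()
    return checker.problems + [f"unclosed <{tag}>" for tag in checker.stack]


print(find_markup_problems("<div><b>bold</div>"))
```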

Mobile Responsiveness and SEO

As digital landscapes evolve, the imperative for mobile responsiveness in on-site technical SEO becomes unequivocal for website owners seeking enhanced search engine rankings.

Embedding mobile responsiveness into the web infrastructure caters to the ever-growing segment of users on smartphones and tablets, while concurrently aligning with search engines’ mobile-first indexing strategies.

This section delves into the significance of evaluating website mobile-friendliness and evolving web designs to provide stellar mobile experiences, both of which are pivotal to sustaining competitive advantage in the modern, mobile-centric online sphere.

Testing Your Website’s Mobile Friendliness

Assessing a website’s mobile friendliness is a critical step for ensuring compliance with SEO best practices, as search engines increasingly favor sites optimized for mobile devices. Conducting this evaluation allows developers to identify and rectify issues that hinder navigability or readability on smaller screens, ensuring a website delivers an optimal user experience regardless of the device used.

LinkGraph provides specialized services that measure a website’s mobile responsiveness, utilizing a range of diagnostic tools that simulate the user experience across various mobile platforms. This analysis is vital, as it highlights aspects that require optimization, enabling webmasters to enhance mobile functionality and, as a result, improve the site’s visibility and ranking on search engine results pages.

Adapting Web Design for Optimal Mobile Performance

Adapting web design for optimal mobile performance necessitates a user-centric approach that considers the limitations and capabilities of mobile devices. It is about crafting an interface that is not only visually appealing but also functionally robust, ensuring seamless navigation and interaction for mobile users.

The reconfiguration process includes implementing responsive design principles, streamlining content, and optimizing touch interactions to achieve a fluid and intuitive user experience:

- Employ responsive design to ensure that web pages automatically adjust to the screen size of various devices.

- Simplify content presentation to facilitate easier reading and engagement on smaller screens.

- Enhance touch interactions by designing user interface elements that are easily tappable with a finger.

Such an adaptation strategy positions a site for superior performance in a mobile-driven marketplace, ultimately influencing its search engine ranking and user engagement metrics. By ensuring that web design elements are flexible and context-aware, businesses can cater to the extensive array of devices that populate the market, solidifying their standing in an increasingly competitive digital landscape.

Establishing a Preferred Domain Configuration

Mastering the nuances of on-site technical SEO involves a keen understanding of preferred domain configuration, an element crucial for maintaining consistency and authority across a brand’s digital presence.

Within this framework, setting up 301 redirects stands as a cornerstone practice, ensuring that users and search engines encounter no ambiguity in accessing a site’s content.

Concurrently, the precise configuration of canonical tags carries significant weight, guiding search engines towards the authoritative version of content and preventing issues related to duplicate pages.

An adept maneuvering of these strategies catalyzes the establishment of a robust SEO foundation, pivotal for a website’s competence and success in the intricate tapestry of digital space.

Setting Up 301 Redirects for Domain Consistency

The implementation of 301 redirects is an exercise in precision, obliging webmasters to usher both users and search engines from multiple domain variants to a singular, authoritative destination. This re-routing not only aids in avoiding content duplication but also consolidates domain authority, sharpening a website’s competitive edge in SERPs.

Engaging in the strategic application of 301 redirects, LinkGraph facilitates a seamless transition for all traffic towards the preferred domain configuration. The company’s expert hands ensure this crucial task bolsters a site’s search engine trust, reinforcing the uniformity and credibility of a business’s digital footprint.
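The redirect decision itself is simple enough to sketch in a few lines. The example below assumes a site whose preferred configuration is `https://www.example.com` (a placeholder) and treats the bare domain and plain-HTTP variants as aliases; in practice this logic usually lives in the web server or CDN configuration rather than in application code.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred configuration and its known variants.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"
DOMAIN_VARIANTS = {"example.com", "www.example.com"}


def preferred_domain_redirect(url):
    """Return (301, target) if url should redirect to the preferred domain.

    Returns None when the URL is already canonical or belongs to another site.
    """
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host not in DOMAIN_VARIANTS:
        return None
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None
    target = urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, "")
    )
    return 301, target


print(preferred_domain_redirect("http://example.com/pricing?plan=pro"))
```

Redirecting directly to the final URL in a single hop, rather than chaining HTTP to HTTPS to www, keeps the transition efficient for both users and crawlers.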

How to Configure Canonical Tags Properly

Proper configuration of canonical tags is a disciplined approach to eliminate content duplication and clearly designate the preferred version of a web page to search engines. These tags act as signals to search engine crawlers, pointing to the original, authoritative content, thereby helping maintain the website’s search relevancy and authority.

For optimal implementation, LinkGraph advises clients to carefully assign canonical tags to equivalent pages, ensuring accuracy in reflecting the preferred URL for indexing: a meticulous strategy that preserves the website’s SEO integrity and consolidates its ranking power.

| Step | Action | SEO Benefit |
|---|---|---|
| 1 | Identify Duplicate Content | Prepares for correct canonicalization |
| 2 | Select the Preferred URL | Clarifies the authoritative source |
| 3 | Implement the Canonical Tag | Directs search engines effectively |
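Step 3, and the follow-up check that the tag is really present, might be sketched as below. The `canonical_tag` and `audit_canonical` helpers and the `example.com` URLs are hypothetical; the `<link rel="canonical">` element itself is the standard markup.

```python
from html import escape
from html.parser import HTMLParser


def canonical_tag(url):
    """Return the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(url)}">'


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def audit_canonical(html, expected_url):
    """Classify a page as 'ok', 'missing', 'multiple', or 'mismatch'."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    return "ok" if finder.canonicals[0] == expected_url else "mismatch"


page = f"<html><head>{canonical_tag('https://www.example.com/shoes')}</head><body></body></html>"
print(audit_canonical(page, "https://www.example.com/shoes"))
```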

Enhancing Site Speed Through Performance Optimization

Optimal site speed is a non-negotiable technical SEO factor, critical for retaining visitor engagement and securing a leading edge in search engine rankings.

Industry leaders acknowledge that milliseconds can mean the difference between a user’s prolonged engagement and swift departure.

To address this, a strategic approach involves assessing current site speed metrics, harnessing the agility of caching and Content Delivery Networks (CDNs), and refining server response times.

Together, these actions form a triad of solutions that elevate website performance and reinforce user satisfaction, ultimately contributing to a brand’s search engine success.

Analyze Site Speed With Relevant Tools

Speed is of the essence in the digital realm, and accurately gauging site speed is a prime technical SEO factor critical for sustaining user interest and improving search rankings. LinkGraph employs state-of-the-art tools to dissect and analyze web page performance metrics, arming SEO specialists and webmasters with the data required to initiate targeted speed optimization interventions.

The precise analysis of site speed begins with powerful tools designed to measure and break down every element contributing to page load times: from server response to rendering efficiency. Utilizing these tools enables brands to pinpoint specific lag-inducing factors and empowers them with actionable insights to streamline performance:

- Employ comprehensive speed testing tools to identify bottlenecks in webpage loading processes.

- Analyze detailed reports to uncover the underlying causes of slow performance.

- Develop a data-driven strategy based on the analysis to enhance site speed and elevate user experience.
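As one possible way to work through such a report, the sketch below reads a HAR export (the JSON format that browser developer tools can save from the network panel) and lists the slowest requests together with the phase that dominated each one. The function name `slowest_requests` is hypothetical, and the sketch assumes the HAR file has already been loaded with `json.load`.

```python
# HAR "timings" phases, in milliseconds; -1 means the phase did not apply.
PHASES = ("blocked", "dns", "connect", "ssl", "send", "wait", "receive")


def slowest_requests(har: dict, top_n: int = 3):
    """Return (url, total_ms, dominant_phase) for the slowest requests in a HAR export."""
    rows = []
    for entry in har["log"]["entries"]:
        timings = entry.get("timings", {})
        # Ignore phases that are missing or reported as -1 (not applicable).
        measured = {phase: timings[phase] for phase in PHASES if timings.get(phase, -1) >= 0}
        dominant = max(measured, key=measured.get) if measured else "unknown"
        rows.append((entry["request"]["url"], entry["time"], dominant))
    return sorted(rows, key=lambda row: row[1], reverse=True)[:top_n]
```

A request dominated by `wait` points toward server response time, while one dominated by `receive` usually points toward file size, which helps decide where to optimize first.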

Implementing Caching and Content Delivery Networks

Optimizing a website for speed necessitates leveraging techniques such as caching and Content Delivery Networks (CDNs). Caching keeps reusable copies of resources in the browser or on intermediate servers so that repeat requests need not return to the origin, while a CDN stores static resources on multiple servers worldwide and serves each visitor from a nearby location. Together, these methods significantly decrease load times for users regardless of geographical location.

LinkGraph’s expertise in on-site technical SEO encompasses the strategic implementation of caching and CDNs, ensuring that a website’s performance is robust and consistent. By caching frequently accessed resources and distributing them through a CDN, LinkGraph aids in mitigating latency and enhancing the responsiveness of a website, thus contributing to a superior user experience and improved SEO metrics.
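Both browsers and CDNs take their caching instructions from the `Cache-Control` response header. The sketch below shows one common policy, offered as an assumption rather than a universal rule: long-lived caching for static files whose names contain a content hash, a short lifetime for other static files, and revalidation for everything else. The lifetimes and the `cache_control_for` helper are illustrative.

```python
import re
from pathlib import PurePosixPath

# Assumption: build tooling adds a content hash to static file names, e.g. app.3f9a1c2b.js.
FINGERPRINT = re.compile(r"\.[0-9a-f]{8,}\.")
STATIC_SUFFIXES = {".css", ".js", ".png", ".jpg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a response path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_SUFFIXES:
        if FINGERPRINT.search(path):
            # Hashed files never change under the same name: cache for a year.
            return "public, max-age=31536000, immutable"
        # Unhashed assets may change in place, so keep the lifetime short.
        return "public, max-age=3600"
    # HTML and other dynamic responses: always revalidate with the origin.
    return "no-cache"
```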

Optimizing Server Response Times

Server response times can significantly impact a website’s speed and user satisfaction, thus affecting SEO rankings. Optimizing server response time entails improving the infrastructure and configuration to ensure prompt data delivery.

An efficient server swiftly processes requests, minimizing latency and bolstering overall website performance: factors highly valued by search engines.

- Audit current server performance to identify areas for improvement.

- Upgrade server hardware or opt for a more capable hosting solution if necessary.

- Optimize server software configurations to reduce unnecessary processing delays.
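For the audit step, a handful of response-time samples is often summarized by its median and a high percentile, since averages can hide occasional slow responses. The following is a small sketch using Python's standard library; how the samples are collected (server logs, monitoring, synthetic tests) is left open, and `summarize_response_times` is a hypothetical helper.

```python
import statistics


def summarize_response_times(samples_ms):
    """Summarize server response times (milliseconds) collected during an audit."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 99 percentile cut points; index 94 is the 95th.
    percentiles = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": percentiles[94],
        "max_ms": max(samples_ms),
    }
```

A large gap between the median and the 95th percentile suggests intermittent slowness, which tends to call for a different fix than a server that is uniformly slow.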

Core Web Vitals and User Experience

In the rigorous domain of on-site technical SEO, Core Web Vitals have crystallized as pivotal metrics, gauging the health and performance of a web presence through the lens of user experience.

These critical signals, designated by Google, offer a holistic view of site stability, interactivity, and loading prowess, thus informing webmasters of potential enhancements to bolster page experiences.

Grasping the nuanced contours of Core Web Vitals metrics, complemented by the strategic application of improvement measures, equips professionals with the means to elevate websites – aligning them with the user-centric standards that dominate search engine algorithms today.

Understanding the Core Web Vitals Metrics

Core Web Vitals are precise measurements that Google utilizes to assess the quality of a user’s experience on a web page. Key factors include Largest Contentful Paint (LCP), which evaluates loading performance; Interaction to Next Paint (INP), which measures responsiveness and replaced First Input Delay (FID) as a Core Web Vital in March 2024; and Cumulative Layout Shift (CLS), which gauges visual stability.

For professionals aiming to perfect a website’s technical SEO, it’s vital to comprehend that these metrics do not simply represent data points, but are critical indicators of a site’s usability. Mastery of Core Web Vitals is synonymous with ensuring a website not only ranks favorably but also provides a seamless, satisfying user experience.
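Google publishes "good" and "poor" thresholds for each metric, which makes a simple rating function easy to sketch. The example below uses Interaction to Next Paint (INP) as the responsiveness metric, since it replaced First Input Delay in March 2024. The function names are hypothetical, and the thresholds are meant to be applied to the 75th percentile of real page loads.

```python
# Google's published thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless score
}


def rate(metric: str, value: float) -> str:
    """Rate a single Core Web Vitals measurement."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def rate_page(measurements: dict) -> dict:
    """Rate every metric measured for a page."""
    return {metric: rate(metric, value) for metric, value in measurements.items()}
```

For instance, a page with an LCP of 2.1 seconds, an INP of 350 milliseconds, and a CLS of 0.31 would rate as good, needs improvement, and poor respectively.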

Practical Steps to Improve Core Web Vitals Scores

To amplify a website’s Core Web Vitals scores, webmasters must first focus on optimizing the Largest Contentful Paint (LCP) by refining how quickly the main content loads. This entails minimizing server response times, lazy-loading images and videos that sit below the fold while letting the main above-the-fold content load immediately, and removing any non-critical third-party scripts that may hinder prompt content rendering.

Enhancing interactivity, measured by Interaction to Next Paint (INP, the successor to First Input Delay), involves streamlining interaction readiness by breaking up long JavaScript tasks and prioritizing loading for interactive elements. For the improvement of Cumulative Layout Shift (CLS), ensuring visual stability is key, which can be achieved by specifying size attributes for media and reserving space for dynamically injected content to prevent abrupt layout shifts during page load.
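Two of these measures meet in the humble image tag. The sketch below is a hypothetical template helper (`img_tag` is not part of any framework) that always emits explicit dimensions to protect CLS and lazy-loads only images outside the initial viewport, so that a likely LCP image is not delayed.

```python
from html import escape


def img_tag(src: str, alt: str, width: int, height: int, above_the_fold: bool) -> str:
    """Render an <img> that reserves its layout space and loads with a suitable priority."""
    attrs = {
        "src": src,
        "alt": alt,
        # Explicit dimensions let the browser reserve space before the file arrives (CLS).
        "width": str(width),
        "height": str(height),
        # Lazy-load only off-screen images; a lazy hero image would delay LCP.
        "loading": "eager" if above_the_fold else "lazy",
    }
    if above_the_fold:
        attrs["fetchpriority"] = "high"  # hint that this image is a likely LCP candidate
    rendered = " ".join(f'{name}="{escape(value, quote=True)}"' for name, value in attrs.items())
    return f"<img {rendered}>"
```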

Frequently Asked Questions

How can sitemaps contribute to the optimization of a website’s SEO?

Sitemaps play a pivotal role in SEO as they guide search engine crawlers through a website’s structure, helping important pages get discovered and considered for indexing. A sitemap does not guarantee indexing, but it supports better search visibility by clarifying the site’s content hierarchy and highlighting the most crucial information for crawling efficiency.
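As a rough illustration, an XML sitemap in the sitemaps.org format can be generated with Python's standard library. The page URLs and dates would come from the site's own content inventory, and `build_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```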

What are the key considerations when it comes to robots.txt files in relation to SEO?

When exploring the impact of robots.txt files on SEO, it is essential to consider both their ability to guide search engine crawlers through a website’s architecture and the potential risk of inadvertently blocking important pages from being crawled. Because robots.txt governs crawling rather than indexing, a page that must stay out of search results needs a noindex directive instead. Ensuring precise control over which website pages are crawled or ignored is pivotal, as it directly influences the site’s visibility in search engine results.
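One way to guard against accidental blocking is to test a list of important URLs against the rules before deploying them. The sketch below uses Python's built-in `urllib.robotparser`; the robots.txt content and URLs are made up for illustration. Note that the standard-library parser applies the first matching rule, which can differ from Google's longest-match behavior when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

# Hypothetical pages that must stay crawlable.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
]


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs that the given robots.txt rules stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

An empty result for the important URLs means none of them is blocked by the proposed rules.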

What are some common crawl errors that can negatively impact a website’s search engine ranking?

Crawl errors can stem from a multitude of issues, notably broken links and misconfigured robots.txt files, which obstruct search engine crawlers from effectively accessing and indexing website pages. Another detrimental factor involves server errors, causing timeouts and subsequently hindering search bots from retrieving the site’s content, posing risks to the website’s visibility in search engine results.
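When reviewing a crawl report, it can help to bucket URLs by the HTTP status code the crawler received. The following sketch assumes the report has already been reduced to a mapping of URL to status code; the category names and helper functions are hypothetical.

```python
def classify_crawl_result(status_code: int) -> str:
    """Map an HTTP status code from a crawl report to a rough issue category."""
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if status_code in (404, 410):
        return "broken link"
    if 400 <= status_code < 500:
        return "client error"
    if 500 <= status_code < 600:
        return "server error"
    return "unknown"


def summarize_crawl(results: dict) -> dict:
    """Group crawled URLs by issue category, leaving out healthy pages."""
    issues = {}
    for url, status in results.items():
        category = classify_crawl_result(status)
        if category != "ok":
            issues.setdefault(category, []).append(url)
    return issues
```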

How does URL structure consistency affect SEO and what steps can be taken to ensure it?

Uniform URL structure is pivotal for SEO as it helps search engines understand and index content efficiently, enhancing user experience. To ensure consistent URL structure, firms like LinkGraph recommend creating a logical site hierarchy, using keyword-rich but concise URLs, and implementing comprehensive, sitewide URL guidelines to maintain uniformity across all pages.

Why is SSL certification important for SEO and how does it impact a website’s rankings?

Secure Sockets Layer (SSL) certification, which today is implemented through SSL’s successor TLS and served over HTTPS, is pivotal for SEO as it encrypts data transfer, boosting user trust and experience. Google treats HTTPS as a lightweight ranking signal, and this layer of security is deemed a standard by search engines, signaling that a website is trustworthy and merits a favorable position on the search engine results page (SERP).

How does the use of target keywords impact the effectiveness of on-site SEO elements?

Target keywords are crucial in on-site SEO as they help search engines understand the relevance of a page to specific queries. Proper integration of target keywords in content, meta tags, and other on-page elements enhances the page’s visibility and ranking for relevant searches, contributing to overall SEO success.

What role do backlinks play in the context of technical SEO strategies, and how do they influence a website’s search engine ranking?

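Backlinks are an off-page signal rather than an on-site technical factor, but they complement technical SEO strategies by signaling the credibility and authority of a website. Quality backlinks from reputable sources contribute to higher search engine rankings, and sound technical foundations such as consistent redirects and canonical tags ensure that this authority is consolidated on the preferred URLs. It’s essential to focus on acquiring natural, high-quality backlinks to improve a website’s overall SEO performance.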

How does site architecture impact the success of an eCommerce SEO strategy, and what considerations should be taken into account?

Site architecture is a critical factor in eCommerce SEO as it influences how search engines navigate and understand the structure of an online store. Well-organized category pages, clear product hierarchies, and effective breadcrumb navigation contribute to a positive user experience and improved SEO rankings for eCommerce websites.

What role do breadcrumbs play in enhancing the user experience and SEO performance of a website?

Breadcrumb navigation provides a clear path for users to navigate a website, improving their overall experience. From an SEO perspective, breadcrumbs create a logical structure that search engines can follow, helping them understand the hierarchy and relationships between different pages, ultimately contributing to better rankings.
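Breadcrumbs can also be described to search engines through schema.org `BreadcrumbList` structured data. The sketch below builds that JSON-LD from a list of (name, URL) pairs; the helper name and the example trail are hypothetical, and the output would be placed inside a `<script type="application/ld+json">` element on the page.

```python
import json


def breadcrumb_jsonld(trail) -> str:
    """Build schema.org BreadcrumbList markup from (name, url) pairs, root first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)
```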

How can a well-crafted blog post positively impact SEO ranking factors, and what elements should be considered during content creation?

A well-crafted blog post can positively influence SEO ranking factors by providing valuable, relevant content that attracts and engages the target audience. Effective keyword usage, comprehensive information, and proper formatting contribute to higher visibility in search engine results, aligning with search algorithms’ preferences for authoritative and informative content.

What are some common content issues that can hinder a website’s SEO performance, and how can they be addressed?

Content issues such as duplicate content, thin content, or poorly optimized content can negatively impact SEO. Conducting regular content audits, optimizing for relevant keywords, and ensuring content uniqueness are essential steps to address these issues and improve a website’s overall search engine visibility.

How does hreflang attribute implementation contribute to a website’s SEO, especially for multinational or multilingual audiences?

Implementing hreflang attributes is crucial for websites targeting multiple languages or regions. It helps search engines understand the language and regional targeting of specific pages, ensuring the right content is served to the right audience. This enhances the website’s overall SEO performance in diverse geographic and linguistic contexts.
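In HTML, hreflang is typically expressed as a set of `<link rel="alternate">` tags in the page head. The following is a minimal sketch with a hypothetical `hreflang_links` helper and made-up URLs; the same full set of tags should appear on every language version so that the annotations are reciprocal.

```python
from html import escape


def hreflang_links(alternates: dict, default_url: str) -> list:
    """Render <link rel="alternate"> tags for each language/region version of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code, quote=True)}" href="{escape(url, quote=True)}">'
        for code, url in sorted(alternates.items())
    ]
    # x-default names the fallback page for users who match no listed language or region.
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url, quote=True)}">')
    return tags
```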

What are the key considerations for advertisers aiming to optimize their websites for search marketing and SEO?

Advertisers need to focus on aligning their content with user intent, utilizing relevant keywords, and optimizing landing pages for better search engine visibility. Additionally, monitoring and refining SEO strategies based on performance data are essential to maximize the impact of search marketing efforts.

How can a comprehensive SEO checklist aid in identifying and resolving technical SEO issues on a website?

A comprehensive SEO checklist serves as a systematic guide to evaluate various technical SEO aspects. It includes tasks such as checking for broken links, optimizing meta tags, and ensuring proper URL structures. Following a well-structured SEO checklist can help address and resolve technical SEO issues systematically, contributing to improved website performance.

What are some advanced technical SEO strategies that can be employed during a technical SEO audit to enhance a website’s search engine ranking?

Advanced technical SEO strategies include optimizing server response times, improving website speed, and implementing structured data markup. These strategies go beyond basic optimization and address intricate technical aspects, ensuring a website meets the highest standards of search engine performance and ranking.

Conclusion

In conclusion, optimizing on-site technical SEO factors is critical for enhancing a website’s search engine ranking and user experience.

Essential practices include constructing and submitting detailed sitemaps which aid search engine crawlers, managing robots.txt files to direct crawlers effectively, and addressing crawl errors to ensure content is accessible.

Moreover, maintaining consistent URL structures and securing SSL certification fortify a site’s credibility and user trust.

Compressing resources such as CSS, JavaScript, and images speeds up page loading times, while ensuring HTML code validity and mobile responsiveness meets evolving user expectations.

Establishing a preferred domain via redirects and canonical tags and optimizing site speed through caching and server optimization are both key for a strong online presence.

Lastly, focusing on Core Web Vitals metrics underlines the importance of a quality user experience, confirming that a meticulous approach to on-site technical SEO is indispensable for a website’s success in today’s digital landscape.