How to Index Your Website on Google


Mastering the Art of Website Indexing on Google

Indexing is the lifeline that connects a website to its potential users through Google’s vast search landscape.

Grasping the nuances of how Googlebot discovers, processes, and adds web pages to its index helps your site gain the visibility it deserves.

Thorough optimization and strategic maneuvering can boost the likelihood of your content rising through the ranks of Google’s search results.

LinkGraph’s SEO services pivot on empowering site owners with the knowledge and tools necessary for conquering the indexing matrix.

Keep reading to unlock the secrets of positioning your website prominently in the Google index.

Key Takeaways

- Efficient Indexing Requires Accessible and Intelligible Website Architecture for Googlebot

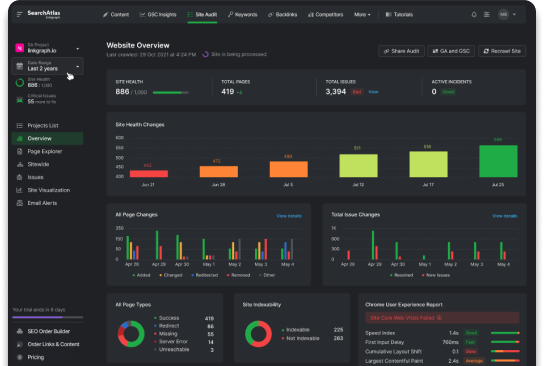

- LinkGraph Uses Tools Like SearchAtlas SEO Software to Enhance Site Indexing and Visibility on Google

- Creating Authoritative Backlinks and Leveraging Social Shares Are Key to Expedite the Indexing Process

- Resolving Indexing Errors and Duplicate Content Issues Is Essential for Maintaining a Website’s SEO Health

- Vigilant Monitoring of Sitemap Status Within Google Search Console Ensures Continuous Indexation and Visibility

Understanding the Basics of Website Indexing

Website indexing stands as a pivotal process in the world of Search Engine Optimization (SEO), bridging the gap between content publication and visibility in Google’s search results.

At the core of indexing lies the intricate workings of Googlebot, a relentless crawler tasked with the discovery and assessment of web pages, determining their merit for inclusion in the vast index that fuels Google’s search engine.

The sitemap, a strategic blueprint of a website’s structure, plays a crucial role in this process, guiding Googlebot’s journey through the site’s pages and ensuring that none of the website’s content goes unnoticed.

Mastering the indexing process is essential for site owners, SEO professionals, and content creators alike, as it marks the starting line from which a website’s digital success may be launched.

What Is Website Indexing and Why It Matters

Website indexing is the procedure by which search engines like Google organize information before a search to enable fast responses to queries. Users’ access to a website’s content via search results hinges on this critical step, positioning indexing as an essential component for online visibility and searchability.

The significance of website indexing cannot be overstated; it directly influences a site’s ability to appear in Google search results. Indexing constitutes Google’s recognition of a webpage, an acknowledgment that stands as a prerequisite for ranking and is therefore the bedrock of any effective SEO strategy.

How Googlebot Discovers Your Website

Googlebot embarks on its mission to discover websites through an intricate web of digital pathways, initiating contact by following links that point to each new domain. The crawler systematically traverses from one hyperlink to the next, unearthing the content that will be scrutinized for potential addition to Google’s expansive index.

Efficient indexing requires a website architecture that is both accessible and intelligible to Googlebot, with a clear sitemap URL and a thoughtfully structured robots.txt file taking center stage. Together, these elements streamline crawling and indexing, making it easier for Google to render the site’s pages and rank them in search results.

The Role of a Sitemap in Website Indexing

The sitemap serves as an essential navigator for Googlebot, revealing the structure and pages of a website in a format tailored for search engine comprehension. This document, often in XML sitemap format, is pivotal in ensuring that no section of the site eludes the meticulous scanning of Google’s indexing fleet.

In the confluence of SEO and website architecture, the sitemap emerges as a cardinal element, not only aiding Googlebot but also providing SEO services with a foundation for free SEO audits and optimization strategies. LinkGraph’s cutting-edge SearchAtlas SEO tool harnesses the sitemap to enhance indexing efficiency and elevate a site’s prominence in Google search results.

Setting the Stage for Successful Indexing

Charting a path for successful website indexing is synonymous with preparing a stage for a world-class performance, where each technical aspect must harmonize to invite Googlebot’s scrutiny and inclusion in search results.

The artistry in this digital environment involves ensuring absolute crawlability, a feat achievable through precise management of a website’s robots.txt file.

This mastery extends to the adept use of Google Search Console, a nexus where insights regarding indexing status transform into actionable knowledge, empowering website owners to refine indexing efficacy.

With such foundational practices, LinkGraph’s comprehensive SEO services cultivate a fertile ground for websites to thrive in the competitive ecosystem of Google’s search landscape.

Ensuring Your Website Is Crawlable

In the realm of SEO, ensuring a website’s crawlability is tantamount to laying a well-paved pathway for Googlebot—without impediments, it freely explores and evaluates the site’s pages. LinkGraph’s on-page SEO services meticulously refine a website’s framework to guarantee that each node and connection is primed for seamless navigation by search engine crawlers.

LinkGraph employs white label SEO services and powerful tools like the SearchAtlas SEO software to evaluate and modify a site’s structure, ensuring a transparent, crawl-friendly environment. This meticulous approach to crawlability not only facilitates the indexing process but also sets the stage for higher visibility within the clamor of Google’s search results.

Utilizing robots.txt for Indexing Control

LinkGraph excels in the strategic application of the robots.txt file, an instrumental component that lets webmasters guide Googlebot’s crawling. This simple yet powerful text file tells search engine crawlers which sections of a website they may or may not crawl, making crawling more precise and efficient.

Through the careful use of robots.txt directives, LinkGraph’s SEO experts keep crawlers away from interim or irrelevant sections while ensuring that the most important pages remain readily accessible to Googlebot. Note that robots.txt controls crawling rather than indexing: a blocked URL can still appear in the index if other pages link to it, and the file itself is publicly readable, so sensitive content is better protected with authentication or a ‘noindex’ directive on a crawlable page.
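A robots.txt file can be checked locally before it goes live. The following is a minimal sketch using Python’s standard `urllib.robotparser`; the file contents, the `/staging/` and `/cart/` paths, and the example.com URLs are hypothetical. Python’s parser applies the first matching rule, whereas Googlebot prefers the most specific rule, so results may differ for more complex files than this one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an illustrative site; all paths are assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A public page should be crawlable; a staging page should not.
blog_allowed = parser.can_fetch("Googlebot", "https://www.example.com/blog/post")
staging_allowed = parser.can_fetch("Googlebot", "https://www.example.com/staging/draft")

# site_maps() (Python 3.8+) lists any Sitemap: lines found in the file.
sitemaps = parser.site_maps()

print(blog_allowed, staging_allowed, sitemaps)
```

A check like this only confirms what crawlers are asked to skip; it says nothing about whether a page is indexed.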

Using Google Search Console for Indexing Insights

Google Search Console stands as an indispensable tool for garnering indexing insights, equipping website owners with actionable data. This powerful platform provides a lens into how Google views a site, allowing the user to monitor their website’s presence in the Google Search results with precision and depth.

Upon verifying their domain, users can delve into the URL Inspection Tool within Google Search Console to track a specific page’s indexing status. The console illuminates pivotal aspects such as the page’s visibility in search results and any crawl errors that might inhibit successful indexing:

- Verifying the website and ensuring proper communication with Google.

- Utilizing the URL Inspection Tool to check current indexing status.

- Identifying and resolving any crawl errors or issues flagged by the console.

By leveraging these insights, specialists from LinkGraph carefully refine target pages, optimizing the indexing process for enhanced SEO performance. This approach fosters a robust SEO content strategy, augmenting a website’s potential to ascend the ranks in Google search results and capture the attention of millions of users.

Optimizing Your Content for Google Indexing

The cornerstone of prominent visibility on Google hinges on the artful optimization of website content for indexing—a meticulous blend of quality, technical precision, and strategic structuring forms the basis for digital recognition.

Businesses and content creators aiming for the apex of search result pages must infuse their web properties with index-friendly content, tailor metadata for maximum relevance, and weave a web of internal links that not only enhances usability for visitors but also anchors the content firmly in Google’s index.

LinkGraph stands at the forefront of this optimization, offering SEO expertise that meticulously aligns content with the discerning algorithms that govern online searchability.

Crafting High-Quality, Index-Friendly Content

Crafting content that resonates with both users and search engines is a nuanced endeavor; it demands an equilibrium between informative, engaging material and strategic placement of keywords. LinkGraph’s SEO content strategy focuses on generating high-quality, index-friendly content that not only captivates the reader but also aligns perfectly with search engine guidelines for maximum indexing potential.

An integral component of LinkGraph’s approach is the meticulous optimization of metadata. This encompasses title tags and meta descriptions that reflect the core essence of the content and efficiently communicate the subject matter to Google’s indexing algorithms:

- Ensuring targeted keywords are naturally integrated within the title tags and headings for optimal indexing.

- Curating meta descriptions that serve as compelling summaries, encouraging clicks from search results while aiding indexing.

- Structuring content with hierarchical headings that streamline the indexing process, clarifying content organization to Googlebot.

By leveraging LinkGraph’s expertise in creating content tailored for indexing, businesses enhance their digital presence, propelling their web pages to the forefront of Google search results where they gain visibility and drive organic traffic.

Implementing Effective Metadata for Pages

In the sphere of website indexing on Google, the primacy of effective metadata cannot be overstated. LinkGraph adeptly implements metadata that acts as a beacon to search engines, enabling clear communication of a page’s content and intent, thereby sharpening its indexability and prominence in search results.

LinkGraph’s strategic use of metadata centers on crafting concise, yet expressive title tags and meta descriptions that both captivate and inform. Their deft crafting of these HTML elements ensures each page stands out to both Googlebot and prospective visitors, optimizing the website’s potential for higher rankings and increased click-through rates.
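Title tags and meta descriptions can be audited automatically. The sketch below uses Python’s standard `html.parser` to flag pages where either element is missing or unusually long. The `audit_metadata` helper and the 60- and 160-character thresholds are illustrative assumptions (common rules of thumb), not limits published by Google.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collects the <title> text and the meta description of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_metadata(html, max_title=60, max_description=160):
    """Return a list of metadata issues; thresholds are assumed rules of thumb."""
    parser = MetadataParser()
    parser.feed(html)
    title = parser.title.strip()
    issues = []
    if not title:
        issues.append("missing title")
    elif len(title) > max_title:
        issues.append("title too long")
    if not parser.description:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append("meta description too long")
    return issues


# Hypothetical page markup used only for illustration.
GOOD_PAGE = (
    "<html><head><title>How to Index Your Website on Google</title>"
    '<meta name="description" content="A guide to sitemaps and robots.txt.">'
    "</head><body></body></html>"
)
BARE_PAGE = "<html><head></head><body><p>Hello</p></body></html>"

print(audit_metadata(GOOD_PAGE))
print(audit_metadata(BARE_PAGE))
```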

Leveraging Internal Linking Strategies

An intricate web of internal links serves as the scaffold for a website’s SEO architecture, directing Googlebot’s navigation across various pages and dispersing the value of link equity throughout. LinkGraph’s internal linking strategies establish a network of logical pathways, enhancing user experience while signaling the hierarchy and the interrelation of content to search engine algorithms, ultimately strengthening the overall indexing process.

LinkGraph’s tactical approach reinforces the pivotal role of contextually relevant links within content, enabling search engines to discern the topical depth and breadth of a website. Crafting internal links with precision not only boosts the indexing of individual web pages but also holistically amplifies a website’s domain authority, driving its ascendancy in Google search results.
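One practical way to review internal linking is to look for orphan pages, meaning pages that no other page on the site links to. The sketch below assumes the site’s HTML is already available as a dictionary of URL to markup; the `PAGES` data, the `find_orphans` helper, and the example.com URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url, html):
    """Return absolute, fragment-free links that stay on the page's own host."""
    extractor = LinkExtractor()
    extractor.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in extractor.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc == host:
            links.add(absolute)
    return links


def find_orphans(pages, home_url):
    """Pages (other than the home page) that receive no internal links."""
    linked = set()
    for url, html in pages.items():
        linked |= internal_links(url, html) - {url}  # ignore self-links
    return sorted(set(pages) - linked - {home_url})


# Hypothetical four-page site used only for illustration.
PAGES = {
    "https://www.example.com/": '<a href="/blog/">Blog</a> <a href="https://other.example.org/">Partner</a>',
    "https://www.example.com/blog/": '<a href="/">Home</a> <a href="post-1">Post 1</a>',
    "https://www.example.com/blog/post-1": '<a href="/blog/#top">Back</a>',
    "https://www.example.com/landing": "<p>No page links here.</p>",
}

orphans = find_orphans(PAGES, "https://www.example.com/")
print(orphans)
```

Pages reported here are reachable by crawlers only through the sitemap or external links, so they are natural candidates for new contextual links.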

Leveraging Sitemaps for Enhanced Indexing

Embracing the utility of sitemaps marks a decisive step in mastering the indexing process on Google, offering an unparalleled opportunity to streamline how a website communicates its structure to the search engine.

Creating a comprehensive XML sitemap acts as a direct conduit for Googlebot, providing an efficient route for the discovery and indexing of web pages.

Moreover, submitting this carefully curated map through the proper channels and vigilantly monitoring its status within Google Search Console equips website owners with a proactive approach to SEO.

This attention to detail ensures a website’s content landscape is thoroughly represented and easily navigable, proving indispensable for favorable indexing outcomes.

Creating a Comprehensive XML Sitemap

A comprehensive XML sitemap acts as a navigational guide for Googlebot, articulating the structure and priority of pages on a website. Its meticulous construction encompasses every valuable piece of content, ensuring Google’s algorithms can efficiently map out and index the site’s landscape.

LinkGraph harnesses the power of SearchAtlas SEO software to engineer XML sitemaps that are both comprehensive and tailored to promote optimal indexing by Google. This strategy is pivotal, as it not only aids in faster indexing but also ensures that significant updates and content revisions do not escape the keen eyes of the search engine crawlers.
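For readers who want to see what such a file contains, here is a minimal sketch that builds an XML sitemap with Python’s standard `xml.etree.ElementTree`, following the sitemaps.org 0.9 schema. It is not how SearchAtlas generates sitemaps; the `build_sitemap` helper, the URLs, and the dates are hypothetical, and only the `loc` and `lastmod` elements are emitted.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod-or-None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # W3C date format, e.g. YYYY-MM-DD
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", None),
])
print(sitemap_xml)
```

The resulting text would typically be saved as `sitemap.xml` at the site root and referenced from robots.txt or submitted in Google Search Console.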

Submitting Your Sitemap to Google

Once a website’s XML sitemap is established and primed, submission to Google is the crucial next step to initiate its indexing journey. LinkGraph’s SEO services provide expert guidance on how to present a sitemap directly through Google Search Console, facilitating a connection between a website’s layout and the search engine’s indexing system.

Through the meticulous process of sitemap submission, LinkGraph aids clients in signaling their readiness for indexing to Google. This pivotal action informs the search engine of new and updated content, ensuring that a website’s most vital pages are discovered and appraised during Google’s indexing evaluations.

Monitoring Sitemap Status in Google Search Console

LinkGraph underscores the importance of vigilance over a sitemap’s status within Google Search Console, ensuring that issues affecting indexing are promptly identified and resolved. This proactive observation plays a crucial role, as it keeps SEO specialists informed about the health and visibility of the sitemap, allowing them to take immediate action on any notifications regarding errors or omissions.

Through diligent monitoring, LinkGraph ensures that a website’s sitemap remains an accurate reflection of its current structure, fostering continuous communication with Google’s indexing system. This ongoing surveillance affords webmasters the confidence that their content is not only indexed but maintained within Google search results, maximizing their online presence and SEO investment.
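Alongside the reports in Google Search Console, a sitemap can be compared against the pages a site actually serves to catch drift early. The sketch below is one possible approach, assuming the list of live URLs comes from a crawl or a CMS export; `sitemap_drift`, the sample XML, and the URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs listed in a sitemap document."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def sitemap_drift(xml_text, live_urls):
    """Compare the sitemap with the URLs the site currently serves."""
    listed = sitemap_urls(xml_text)
    live = set(live_urls)
    return {
        "missing_from_sitemap": sorted(live - listed),  # published but not listed
        "stale_in_sitemap": sorted(listed - live),      # listed but no longer live
    }


# Hypothetical sitemap and crawl results used only for illustration.
SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-offer</loc></url>
</urlset>
"""
LIVE = ["https://www.example.com/", "https://www.example.com/new-guide"]

drift = sitemap_drift(SITEMAP, LIVE)
print(drift)
```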

Accelerating Indexing With Backlinks and Social Signals

As businesses vie for prominence in the digital realm, the power of backlinks and social signals in expediting the website indexing process cannot be ignored.

These elements, reflective of a website’s authority and user engagement, are key accelerants that spur Googlebot’s attention and encourage swift indexing.

Authority-building backlinks signal trustworthiness and relevance, while social shares amplify content’s value, casting a spotlight on its merit.

Engaging in guest blogging expands a website’s reach, offering additional avenues for visibility and indexation.

LinkGraph’s adept use of these dynamic strategies underpins the rigorous pursuit of enhanced visibility in Google’s search results, fostering a landscape where a website’s content swiftly transitions from publication to powerful online presence.

Building Authoritative Backlinks for Indexation

LinkGraph’s seasoned expertise in creating authoritative backlinks is pivotal in expediting the indexing process for clients. Recognizing that robust backlinks from reputable websites serve as strong endorsements to search engines, the firm diligently cultivates these connections to enhance the perceived authority and relevance of a client’s website, thus encouraging more rapid indexing.

Strategically focusing on white label link building, LinkGraph ensures that every backlink is a testament to a website’s quality. By securing these valuable links, the firm not only speeds up the indexing procedure but also fortifies a website’s standing in Google search results, carving out a formidable online presence for its clientele.

Encouraging Social Shares to Signal Content Value

LinkGraph acknowledges the vital role of social signals in augmenting the indexing process, as swift content sharing across social platforms signifies value and relevancy to search engines. The active curation of social media content that resonates with followers can lead to increased shares, effectively amplifying the digital footprint and hastening the indexing process by attracting Googlebot.

In the realm of modern SEO, LinkGraph champions the strategic deployment of social shares as a tool for signaling content value, thereby influencing a page’s indexability in Google. By fostering authentic user engagement, LinkGraph assists in casting a website’s content into the spotlight, where it garners not only the attention of potential readers but also that of search engines’ indexing algorithms.

Engaging in Guest Blogging to Increase Visibility

Guest blogging emerges as a powerful tactic within LinkGraph’s repertoire, designed to broaden the exposure of a website and hasten the indexing process on Google. By securing guest posting opportunities on established, relevant platforms, LinkGraph propels its clients’ visibility, ensuring that thought leadership and site authority resonate across the digital expanse, enticing Googlebot to take notice.

Through these strategic collaborations, LinkGraph not only amplifies a website’s reach but also weaves a network of quality backlinks, each serving as a beacon that guides search engines toward swift indexing. This judicious application of guest blogging serves to elevate a website’s profile, augmenting its searchability and presence within Google’s critical search results.

Troubleshooting Common Indexing Issues

Navigating the journey to successful indexing on Google demands vigilance and a proactive approach to counteract potential hurdles that can impede a website’s visibility.

Identifying and resolving indexing errors is a critical step, ensuring that the complex mechanisms of search engines accurately reflect and present website content.

Likewise, addressing duplicate content penalties is integral to maintaining the uniqueness and integrity of a digital presence.

Moreover, understanding and rectifying causes for slow or blocked indexing is essential to streamline the pathway for Googlebot’s efficient access and review.

As websites evolve and grow, the ability to swiftly implement fixes becomes paramount, solidifying a site’s place within the dynamic landscape of Google search outcomes.

Identifying and Resolving Indexing Errors

Identifying errors that impede a website’s indexing on Google begins with thorough use of tools such as the SearchAtlas SEO software. These sophisticated instruments pinpoint discrepancies and errors, from missing tags to redirect issues that can obscure a site from Googlebot’s perspective.

Following detection, LinkGraph’s team of professionals embarks on resolving these indexing errors with precision. They ensure proper tag implementation, rectify faulty redirects, and remove directives like ‘noindex’ that may be inhibiting a page’s visibility, seamlessly restoring a website’s index status:

| Issue Detected | Impact on Indexing | Action for Resolution |
|---|---|---|
| Missing Title Tag | Reduces relevance signal for indexing | Implement descriptive title tags |
| Faulty Redirects | Disrupts Googlebot’s crawling process | Correct redirect pathways |
| ‘Noindex’ Directives | Prevents indexing of specific pages | Remove or adjust ‘noindex’ directives |
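A stray ‘noindex’ directive is easy to detect programmatically. The sketch below checks both the robots meta tag and the `X-Robots-Tag` response header for a page whose HTML and headers have already been fetched. The `is_noindexed` helper and sample inputs are hypothetical, and the sketch ignores user-agent-scoped header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


def _split_directives(value):
    """Turn 'noindex, follow' into {'noindex', 'follow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in {"robots", "googlebot"}:
            self.directives |= _split_directives(attrs.get("content") or "")


def is_noindexed(html, headers=None):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = set()
    for key, value in (headers or {}).items():
        if key.lower() == "x-robots-tag":
            header_directives |= _split_directives(value)
    # "none" is shorthand for "noindex, nofollow".
    return bool({"noindex", "none"} & (parser.directives | header_directives))


# Hypothetical inputs used only for illustration.
print(is_noindexed('<meta name="robots" content="noindex, follow">'))
print(is_noindexed('<meta name="robots" content="index, follow">'))
print(is_noindexed("<p>PDF landing page</p>", {"X-Robots-Tag": "noindex"}))
```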

Dealing With Duplicate Content Penalties

Dealing with duplicate content penalties requires a discerning approach to content management, ensuring that all material presented on a website is unique and offers genuine value. LinkGraph excels at resolving these issues by conducting comprehensive content audits, thus preventing the dilution of SEO efforts and conserving a site’s ranking potential.

In the landscape of Google’s indexing protocols, mitigating the risk of duplicate content involves strategic revisions and the use of canonical tags to assert the preferred version of a web page. LinkGraph’s meticulous attention to these details fosters a strong and undiluted online presence, safeguarding clients from punitive measures that could undermine visibility in search results:

| Duplicate Content Issue | Impact on SEO | LinkGraph’s Resolution |
|---|---|---|
| Non-Canonical Duplicate URLs | Potential penalty by search engines for identical content | Implementing canonical tags to indicate preferred URLs |
| Internal Content Replication | Diminishes page’s unique value and SEO weight | Conducting content audits to identify and rewrite duplicate material |
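Canonical tags can be verified by grouping URLs under the canonical address each one declares. The sketch below assumes page HTML is already available as a dictionary of URL to markup; `group_by_canonical`, the sample pages, and the example.com URLs are hypothetical. Pages with no canonical tag are treated as their own canonical, which is an assumption of this sketch rather than a statement about how Google chooses one.

```python
from collections import defaultdict
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Finds the first <link rel="canonical" href="..."> on a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attrs = dict(attrs)
        if "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")


def canonical_url(page_url, html):
    """Return the absolute canonical URL declared by the page, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    return urljoin(page_url, parser.canonical) if parser.canonical else None


def group_by_canonical(pages):
    """Map each canonical URL to the list of URLs that point at it."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[canonical_url(url, html) or url].append(url)
    return dict(groups)


# Hypothetical pages: a tracking-parameter variant points at the clean URL.
PAGES = {
    "https://www.example.com/shoes": '<link rel="canonical" href="https://www.example.com/shoes">',
    "https://www.example.com/shoes?utm_source=mail": '<link rel="canonical" href="/shoes">',
    "https://www.example.com/about": "<p>No canonical tag.</p>",
}

groups = group_by_canonical(PAGES)
print(groups)
```

Groups with more than one member show duplicates that are already consolidated; near-identical pages that land in separate groups are the ones worth auditing.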

Implementing Fixes for Slow or Blocked Indexing

In the face of slow or blocked indexing, LinkGraph implements innovative solutions to overcome these complications, ensuring its clients’ websites gain the visibility they deserve. Addressing the underlying issues, such as server overloads that impede Googlebot’s efficient access or a suboptimal strategy for requesting indexing, stands as a top priority for the firm’s SEO specialists.

LinkGraph streamlines the indexing process by optimizing web page content and enhancing server responses to facilitate Google’s crawlers. By making precise adjustments to a website’s structural and content elements, the firm guarantees that all pages are index-ready, mitigating factors that would otherwise delay or prevent successful indexing on Google.

Conclusion

Mastering the art of website indexing on Google is crucial for ensuring that a site stands out in the crowded digital landscape.

It necessitates a harmonized blend of accessible site architecture, strategic sitemaps, and effective SEO practices to facilitate Googlebot’s discovery and evaluation of content.

Implementing a robust indexing strategy, complete with authoritative backlinks and a keen eye on social signals, propels a website’s visibility, driving organic traffic and enhancing online presence.

Addressing indexing errors, duplicate content, and other impediments quickly is also vital to maintain this visibility.

By adeptly navigating the complexities of Google’s indexing process, businesses can secure a coveted spot in search results, unlocking their full digital potential.