Most Common Technical SEO Mistakes

Avoiding the Pitfalls: Key Technical SEO Errors to Watch Out For

In the intricate world of Search Engine Optimization, navigating the digital terrain without stumbling on technical SEO errors is a must for any thriving online presence.

LinkGraph’s SEO solutions are built on the understanding that the devil is in the details, from ensuring a secure HTTPS protocol to optimizing site speed for a frictionless user experience.

With SearchAtlas SEO software, businesses can unlock the potential of their websites and avoid the common oversights that sabotage search rankings.

This article equips webmasters to identify and fix the key technical SEO pitfalls that hinder online success.

Keep reading to transform technical challenges into triumphs that propel your search visibility to new heights.

Key Takeaways

- Effective Structuring of XML Sitemaps Is Crucial for Search Engine Crawlers to Prioritize and Index Site Content

- Misconfigurations in robots.txt Files and the Implementation of Meta Tags Can Significantly Impact a Website’s Search Rankings

- Mobile-Friendly Optimization Is Essential for Websites Due to the Prevalence of Mobile Device Usage and Search Engines’ Mobile-First Indexing Approach

- Meta Descriptions Are Critical for Improving Click-Through Rates and Engaging Users From Search Engine Results Pages

- Language Localization Practices Enhance SEO Performance by Aligning Website Content With the Linguistic and Cultural Nuances of the Target Audience

Understanding the Role of HTTPS in SEO

In the intricate tapestry of search engine optimization, technical elements play a pivotal role in shaping a website’s online presence.

Among these, HTTPS stands out: it is the secure communication protocol that protects data exchanged between browsers and servers in an ever-evolving digital ecosystem.

With cyber threats on the rise and search engines favoring user safety, an SSL certificate (today technically a TLS certificate, since TLS has replaced SSL) serves not merely as a shield but also as a ranking signal: Google has used HTTPS as a lightweight ranking signal since 2014.

Neglecting this facet of technical SEO can lead to significant pitfalls, deterring users and diminishing trust.

Websites that move from HTTP to HTTPS create a safer browsing environment in which both webmasters and users can navigate with confidence, and they may also see a modest improvement in search rankings.

Why Secure Sockets Layer Is Essential

The incorporation of Secure Sockets Layer (SSL) stands as a non-negotiable cornerstone in upholding not only the security but also the integrity of a website. As sites vie for prominence on the search engine results page, SSL encryption (delivered today through its successor, TLS) also matters for rankings: Google treats HTTPS as a ranking signal, so a secure connection can contribute to better search performance while reinforcing the site’s credibility and trustworthiness.

Site users and webmasters alike find reassurance in the SSL certificate’s ability to safeguard sensitive data, conferring a sense of security that translates to higher engagement rates and customer trust. A website adorned with SSL encryption speaks to the commitment of its owner to maintain a secure and user-friendly digital space, a key aspect that is deeply interwoven with the fabric of modern SEO practices.

Consequences of Not Having a Secure Site

Bypassing the implementation of HTTPS is an oversight that can hinder the user experience and search visibility of a website. Users may receive warnings of potential security risks when visiting non-HTTPS pages, leading to increased bounce rates and a tarnished reputation.

Furthermore, a website’s rankings can suffer because search engines give secure pages an edge over otherwise comparable HTTP pages. Combined with browser warnings that drive visitors away, this omission can result in a significant drop in traffic, jeopardizing the potential for lead conversion and customer retention.

Transitioning From HTTP to HTTPS

Shifting a website’s protocol from HTTP to HTTPS is a decisive step towards bolstering its technical architecture and SEO ranking potential. The transition centers on installing an SSL certificate, which enables an encrypted exchange of information between the user’s browser and the server.

Because every URL changes from http:// to https://, webmasters must make sure redirects are implemented correctly to avoid broken links or content issues that could undermine search engine trust and user experience:

- Install a valid SSL certificate to authenticate the website’s identity and enable secure connections.

- Update the website’s internal linking structure to reflect the new HTTPS URLs.

- Configure 301 redirects from HTTP pages to their corresponding HTTPS versions to maintain link equity.

- Revise the robots.txt file to confirm that HTTPS pages are crawlable and no vital resources are disallowed.

- Update sitemaps, including XML and HTML versions, to reference the secure URLs and facilitate efficient crawling by search engines.
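The redirect step in the checklist above can be verified with a short script. The sketch below is a minimal illustration, assuming each old HTTP URL’s status code and `Location` header have already been collected with a crawler or log export; the function names `https_target` and `audit_https_redirects` and the shape of the `responses` dictionary are hypothetical. It flags any HTTP URL that does not answer with a 301 pointing at its exact HTTPS counterpart.

```python
from typing import Dict, List, Optional, Tuple
from urllib.parse import urlsplit, urlunsplit


def https_target(url: str) -> Optional[str]:
    """Return the HTTPS twin of an http:// URL, or None if the URL is not plain HTTP."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Keep host, path and query unchanged so each page redirects to its own
    # HTTPS version instead of everything being funneled to the homepage.
    return urlunsplit(("https", parts.netloc, parts.path, parts.query, ""))


def audit_https_redirects(responses: Dict[str, Tuple[int, Optional[str]]]) -> List[str]:
    """Report HTTP URLs whose observed (status, Location) is not a 301 to their HTTPS twin."""
    problems = []
    for url, (status, location) in responses.items():
        expected = https_target(url)
        if expected is None:
            continue  # already HTTPS, nothing to redirect
        if status != 301:
            problems.append(f"{url}: expected a 301 redirect, got {status}")
        elif location != expected:
            problems.append(f"{url}: redirects to {location}, expected {expected}")
    return problems


if __name__ == "__main__":
    # Hypothetical crawl results: URL -> (status code, Location header)
    observed = {
        "http://example.com/": (301, "https://example.com/"),
        "http://example.com/pricing": (302, "https://example.com/pricing"),
        "http://example.com/blog?page=2": (301, "https://example.com/"),
    }
    for problem in audit_https_redirects(observed):
        print(problem)
```

Mapping each page to its own HTTPS twin, rather than sending every old URL to the homepage, is what lets each page keep its own link equity.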

Ensuring Proper Website Indexation

In search engine optimization, indexation determines whether a site’s pages can be found at all.

A properly indexed page can be retrieved for relevant queries and stands a better chance of achieving desirable positions within search engine results pages (SERPs).

Yet indexation is also where many technical SEO missteps occur: overlooked errors can keep pages out of the index entirely, burying their content in obscurity.

Professionals should strive to identify common indexing oversights and implement corrective measures swiftly, ensuring that site pages are accessible and primed to capture the attention of searchers and crawlers alike.

What Does Indexation Mean for Your Site?

Indexation is the gateway through which a website’s pages become visible and retrievable by search engines. This crucial process involves search engines storing information about website pages, paving the way for their appearance on the search engine results pages when relevant queries are made.

Correct indexation is the bedrock of a site’s online discoverability, enabling both web crawlers and potential customers to find and engage with content efficiently. Without it, even the finest content may remain unseen, like uncharted islands in a vast digital ocean. A few basic practices support correct indexation:

- Submit an accurate, up-to-date XML sitemap through Google Search Console or the equivalent tool of other search engines.

- Audit and fine-tune the site’s robots.txt file so that search engines are not inadvertently blocked from crawling vital content.

- Remove any noindex tags improperly applied to pages intended for search visibility.

Common Indexing Mistakes to Avoid

An all-too-common oversight among webmasters is the accidental use of ‘noindex’ directives on pages intended to be indexed. Such errors can leave key pages languishing in obscurity, failing to surface in search engine results pages and thus conceding valuable search traffic to competitors.

Another prevalent misstep is neglecting regular, thorough indexing audits, which lets outdated pages and orphan pages (pages that no internal link points to) accumulate where search engine crawlers struggle to find them. These oversights fragment a site’s internal link structure, weakening the flow of authority between pages and compromising user navigability:

- Regularly review ‘noindex’ directives to ensure only the appropriate pages are excluded from search engine indexes.

- Conduct routine audits to identify and remedy orphan pages, stale content, and broken links.

- Maintain an accurate sitemap that reflects current site architecture, as it guides search engines to index desired content effectively.
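Orphan pages can be surfaced by comparing the sitemap with the internal links a crawl actually finds. The following is a minimal sketch, assuming the sitemap URLs and a mapping of each crawled page to the internal URLs it links to are already available; `find_orphan_pages` is a hypothetical helper, and because it compares URLs as exact strings, trailing slashes and protocols should be normalized first.

```python
from typing import Dict, Iterable, Set


def find_orphan_pages(
    sitemap_urls: Iterable[str],
    internal_links: Dict[str, Iterable[str]],
    homepage: str,
) -> Set[str]:
    """Return sitemap URLs that no other crawled page links to.

    internal_links maps each crawled page to the internal URLs it links out to.
    The homepage is excluded because it is the entry point, not an orphan.
    """
    linked_to: Set[str] = set()
    for source, targets in internal_links.items():
        for target in targets:
            if target != source:  # a page linking to itself does not make it discoverable
                linked_to.add(target)
    return {url for url in sitemap_urls if url not in linked_to and url != homepage}


if __name__ == "__main__":
    sitemap = ["https://example.com/", "https://example.com/a", "https://example.com/old-promo"]
    links = {"https://example.com/": ["https://example.com/a"]}
    print(find_orphan_pages(sitemap, links, "https://example.com/"))
```

Each reported URL then needs a decision: link to it from a relevant page, redirect it, or drop it from the sitemap if it is genuinely stale.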

How to Fix Indexation Issues

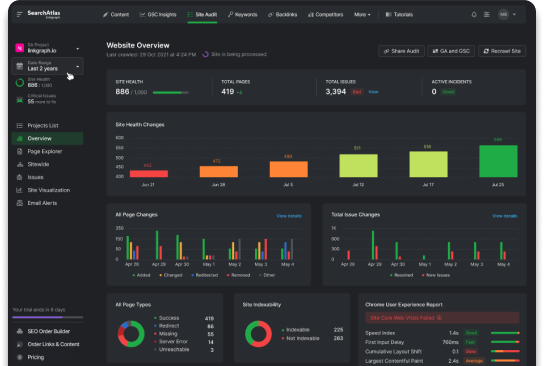

Addressing indexation issues begins with a rigorous site audit, a systematic approach that isolates and rectifies the problems preventing a website from appearing on SERPs. LinkGraph’s experts use SearchAtlas SEO software to pinpoint and handle problems such as crawlability bottlenecks, inadvertently blocked URLs, or misplaced meta tags that confuse search engines.

Once technical SEO problems are identified, LinkGraph’s SEO solutions integrate critical fixes, like the removal of incorrect noindex instructions and the repair of redirect chains that impede the seamless navigation of Googlebot through site pages. Their concerted effort ensures that every valuable piece of content gains its rightful place in the search engine’s index, potentially boosting the site’s overall search visibility.
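Redirect chains, where one URL redirects to another that redirects again, are straightforward to detect once redirect data has been crawled. This sketch is illustrative rather than a description of how SearchAtlas works; it assumes a dictionary mapping each redirecting URL to its target, and `trace_redirects` and `find_redirect_chains` are hypothetical names. Loops are reported as errors because they never resolve.

```python
from typing import Dict, List


def trace_redirects(start: str, redirects: Dict[str, str]) -> List[str]:
    """Follow a redirect map from start and return every URL visited, in order.

    redirects maps each redirecting URL to its target (assumed to come from a crawl).
    Raises ValueError if the redirects loop back on themselves.
    """
    path = [start]
    seen = {start}
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in seen:
            raise ValueError("redirect loop: " + " -> ".join(path + [nxt]))
        path.append(nxt)
        seen.add(nxt)
    return path


def find_redirect_chains(redirects: Dict[str, str]) -> Dict[str, List[str]]:
    """Return every start URL that needs more than one hop to reach its final destination."""
    chains = {}
    for start in redirects:
        path = trace_redirects(start, redirects)
        if len(path) > 2:  # start -> intermediate -> ... -> final
            chains[start] = path
    return chains


if __name__ == "__main__":
    crawl = {
        "http://example.com/shoes": "https://example.com/shoes",
        "https://example.com/shoes": "https://example.com/footwear",
    }
    for start, path in find_redirect_chains(crawl).items():
        # The fix is to point `start` straight at path[-1] with a single 301.
        print(" -> ".join(path))
```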

The Significance of XML Sitemaps for SEO

In the meticulous endeavor of optimizing a website for search engines, the creation and maintenance of an XML sitemap is a task of paramount importance.

Serving as a roadmap for search engine crawlers, the XML sitemap allows websites to highlight their most significant pages, helping them stand out amid a vast sea of online content.

Search engines utilize this tool to navigate and index a site more effectively, rendering it essential in the pursuit of optimal search engine results.

Crafting a well-structured sitemap without errors demands attention to detail, as even minor mishaps can derail search engine crawlers and adversely affect a website’s digital footprint.

Creating an Effective XML Sitemap

Curating an XML sitemap requires an understanding of the site’s hierarchy and the prioritization of its pages. LinkGraph’s seasoned experts, using SearchAtlas SEO software, tailor XML sitemaps to search engines’ expectations, increasing the likelihood that significant content is discovered and properly indexed.

Strategic decluttering of the sitemap by removing any redundant or irrelevant URLs enhances its effectiveness, allowing search engine crawlers to focus on the website’s most pertinent pages. LinkGraph’s precision in sitemap optimization ensures that search engines effortlessly comprehend the structure and significance of a site’s content, bolstering its ranking potential.
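A decluttered sitemap can be generated directly from a curated page list. The sketch below is a minimal example, assuming the list of indexable pages and their last-modified dates comes from a CMS or crawl; `build_sitemap` is a hypothetical helper. It follows the sitemaps.org protocol, lists each URL only once, and emits only `<loc>` and `<lastmod>`, since Google’s documentation states that it ignores `<priority>` and `<changefreq>`.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date) pairs.

    Dates are expected in W3C Datetime format, e.g. "2024-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for loc, lastmod in pages:
        if loc in seen:  # list each URL only once
            continue
        seen.add(loc)
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" as "&amp;"
        ET.SubElement(url, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-05-01"),
        ("https://example.com/products?category=shoes&page=1", "2024-04-20"),
    ]))
```

Filtering out redirected, noindexed, or duplicate URLs before calling the function is what keeps the sitemap focused on pertinent pages.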

How Sitemaps Influence Search Engine Crawling

XML sitemaps serve as a beacon for search engine crawlers, signaling the location of a website’s essential pages and facilitating the systematic exploration of its content. By providing a clear outline of where pertinent information resides, sitemaps can speed up the indexing process, helping valuable content get discovered and classified promptly.

Effectively implemented, sitemaps also help search engines spot new and updated content, particularly when each entry carries an accurate last-modified date, so crawl budget is spent on pages that matter and critical pages are less likely to be overlooked. LinkGraph’s SEO solutions apply this through precise XML sitemap integration, enhancing a website’s potential to achieve improved search rankings and increased search traffic.

Common Errors With XML Sitemap Implementation

In the meticulous realm of technical SEO, oversights in XML sitemap implementation can have far-reaching consequences. A common error includes neglecting to update the sitemap after content revisions or site restructuring, leading to dead links that frustrate users and obstruct search engine crawlers.

Additionally, LinkGraph’s professionals caution against overpopulating the XML sitemap with URLs that carry no SEO value, such as those with duplicate content or pages blocked by robots.txt, as this can dilute the sitemap’s efficacy and waste valuable crawl budget.
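Both errors described above can be caught by auditing an existing sitemap against crawl data. This is a sketch under stated assumptions: the HTTP status of each URL comes from a crawl, and the set of URLs that should not be indexed (blocked by robots.txt, noindexed, or duplicates that canonicalize elsewhere) is known in advance. `audit_sitemap` and its inputs are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Set

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def audit_sitemap(sitemap_xml: str, status_by_url: Dict[str, int], excluded: Set[str]) -> List[str]:
    """List problems with the URLs in an existing sitemap.

    status_by_url: HTTP status per URL from a crawl (hypothetical input).
    excluded: URLs known not to belong in the index, e.g. blocked by
    robots.txt, noindexed, or duplicates that canonicalize elsewhere.
    """
    root = ET.fromstring(sitemap_xml)
    seen: Set[str] = set()
    issues: List[str] = []
    for loc in root.findall("sm:url/sm:loc", NS):
        url = (loc.text or "").strip()
        if url in seen:
            issues.append(f"duplicate entry: {url}")
            continue
        seen.add(url)
        status = status_by_url.get(url)
        if status is None:
            issues.append(f"not crawled, status unknown: {url}")
        elif status != 200:
            # 404s are dead links; 3xx means the sitemap lists an outdated URL
            issues.append(f"returns {status}: {url}")
        if url in excluded:
            issues.append(f"listed but should not be indexed: {url}")
    return issues
```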

Crafting an Accurate Robots.txt File

Navigating the intricacies of technical SEO requires a thorough understanding of the components that tell search engines how to interact with a website, and the robots.txt file is a principal player in this dynamic.

This plain text file may seem unassuming, yet it holds tremendous sway over a site’s search engine behavior, telling crawlers which URLs they may crawl. It controls crawling rather than indexing, however: a disallowed URL can still appear in search results if other pages link to it.

However, even the slightest misstep in its configuration can lead to improper site crawling and indexing, inadvertently concealing content from search results.

This pivotal segment of the technical SEO toolkit requires a careful and strategic approach to avoid common misconfigurations, and professionals at LinkGraph are adept at scrutinizing and refining the robots.txt setup to bolster a site’s visibility and search efficacy.

Understanding the Role of Robots.txt in SEO

In the landscape of technical SEO, the robots.txt file operates as an essential blueprint for search engine crawlers, providing guidance on which areas of a website to crawl and which to bypass. Masterfully constructed by SEO experts at LinkGraph, these files are the unsung heroes of SEO, striking the delicate balance between access and restriction that keeps crawling focused. They are not a privacy or security control, though: the file itself is publicly readable, and compliance is voluntary on the crawler’s part.

LinkGraph’s strategic deployment of the robots.txt file ensures that search engine crawlers like Googlebot efficiently navigate a site’s digital terrain, bypassing sections not meant to be crawled while focusing on content that enhances a site’s relevance and authority. With expert insight, the robots.txt file becomes a powerful tool in preventing technical SEO issues that could obstruct a site’s climb up the search rankings.

Typical Misconfigurations of Robots.txt Files

Within the labyrinth of technical SEO, robots.txt files are crucial signposts directing search engine crawlers. When these files are misconfigured with overly broad disallow directives, however, they prevent Googlebot from accessing key sections of the site, concealing vital content and crippling SEO performance.

Misunderstandings of the file’s syntax often lead to inadvertent blocking of resources that affect page rendering, such as CSS and JavaScript files. When Googlebot cannot fetch these resources, it may not render the page the way users see it, which can hurt how the page is evaluated and ranked.

How to Correct Your Robots.txt Setup

Rectifying the robots.txt configuration begins with a comprehensive audit to assess and correct disallow directives that might be unintentionally inhibiting search engine crawlers from accessing critical content. LinkGraph’s seasoned professionals excel at analyzing these files, ensuring that no vital components of a site are obscured from search engines due to flawed instructions.

LinkGraph harnesses SearchAtlas SEO software to refine robots.txt setups, meticulously revising the file to strike an optimal balance between accessibility and exclusion. This precise calibration of the robots.txt can elevate a site’s indexing proficiency and enable search engines to accurately interpret which pages are paramount for consideration in their rankings.
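A quick way to audit disallow directives is to test a list of must-crawl URLs against the file. The sketch below uses Python’s standard `urllib.robotparser`; the helper name `blocked_urls` and the example rules are hypothetical. Note that the standard-library parser applies rules in file order and does not implement Google’s wildcard and longest-match handling, so treat its verdicts as a first-pass check and confirm edge cases with Google Search Console.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: Iterable[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that the given robots.txt rules forbid user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text already in hand; no network request
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    robots = """User-agent: *
Disallow: /assets/
Disallow: /checkout
"""
    critical = [
        "https://example.com/products/shoes",
        "https://example.com/assets/main.css",  # rendering resource: should usually stay crawlable
    ]
    print(blocked_urls(robots, critical))
```

Any critical page or rendering resource in the output points to a disallow rule that is broader than intended.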

Avoiding the Pitfalls of Meta Robots NOINDEX Tag

In the rigorous pursuit of optimizing a website for heightened search engine performance, the meta robots NOINDEX tag emerges as a critical paradox.

Intended as a tool to refine crawling and indexing directives, this tag, when misapplied, can unintentionally become a barricade to a site’s visibility on SERPs.

In this section, we will explore how improper implementation of NOINDEX tags can undermine SEO efforts, the importance of detecting unintentional directives that may stifle a website’s reach, and the judicious application of meta robots tags to orchestrate successful indexing strategies.

How NOINDEX Tags Can Hinder SEO Efforts

Used with cautious precision, the meta robots NOINDEX tag tells search engines not to include a page in their index. Crawlers must still be able to fetch the page to see the directive, so a page carrying NOINDEX should not also be blocked in robots.txt. Inadvertent or misinformed application of this directive, however, removes valuable content from SERPs, impeding a website’s reach and undermining its SEO efforts.

Successful SEO strategies hinge on the visibility and indexability of pivotal content, and the misuse of NOINDEX tags stands in stark opposition to this goal. By inadvertently masking critical website pages, businesses risk a drop in search rankings and diminished organic search traffic, thwarting their digital marketing aspirations.

Identifying Unintentional NOINDEX Directives

Meticulous vigilance is paramount when scouring website pages for unintentional use of the NOINDEX tag. LinkGraph’s technical SEO audit employs the cutting-edge SearchAtlas SEO software to swiftly detect and address any errant NOINDEX directives that could derail a website’s search engine visibility.

LinkGraph’s approach ensures that each meta tag is purposefully deployed, guaranteeing that only selected sections remain hidden from search engines. Such precision prevents the inadvertent suppression of a site’s discoverability and safeguards its digital presence within the competitive realm of SERPs.
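A NOINDEX directive can arrive in two forms: a tag such as `<meta name="robots" content="noindex">` in the page’s HTML, or an `X-Robots-Tag: noindex` HTTP response header. The sketch below checks both using Python’s standard `html.parser`; `is_noindexed` is a hypothetical helper, and it handles only the plain comma-separated header form, not the user-agent-prefixed form (for example `googlebot: noindex`).

```python
from html.parser import HTMLParser
from typing import Optional, Set


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").strip().lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html: str, x_robots_tag: Optional[str] = None) -> bool:
    """True if the page's meta robots tags or X-Robots-Tag header value contain noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    if x_robots_tag:
        directives.update(d.strip().lower() for d in x_robots_tag.split(","))
    # "none" is shorthand for "noindex, nofollow"
    return "noindex" in directives or "none" in directives
```

Running this across every URL that is supposed to rank, and flagging any `True` result, turns the audit into a repeatable check.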

Correctly Using the Meta Robots Tags

Correct deployment of meta robots tags is a delicate operation within the SEO arsenal, where accuracy dictates a webpage’s fate in the indexing process. Masterful use by experts at LinkGraph ensures that NOINDEX tags are applied only when necessary, preserving a website’s visibility and supporting its ascent in search engine rankings.

LinkGraph’s professionals implement meta robots tags with strategic care, focusing on a harmonious balance that promotes optimal indexing while excluding specific content that detracts from a site’s SEO objectives. This finesse in application enhances the clarity with which search engines assess and categorize web pages, fortifying a website’s stature in the digital marketplace.

Combating Slow Page Speed to Boost Rankings

As digital landscapes become increasingly competitive, efficient page load times emerge as a critical determinant in the battle for superior search engine rankings.

A website marred by sluggish response times not only sours the user experience but also sends unfavorable signals to search engines, hampering search visibility.

The urgency of addressing slow page speed is accentuated as search engine algorithms continue to evolve, increasingly prioritizing the swift delivery of content.

Within this context, webmasters and digital marketers must diligently diagnose speed impediments and employ targeted, tactical measures to expedite website performance.

By doing so, they position their digital offerings to achieve prominence in the search results, ultimately engaging more visitors and fostering advantageous SEO outcomes.

Why Page Speed Is Crucial for SEO

In the high-stakes game of search engine rankings, page speed is more than a convenience; it is a confirmed ranking factor. Google has used page speed in desktop rankings since 2010 and in mobile rankings since 2018, and now evaluates loading experience partly through its Core Web Vitals metrics, reflecting the significance of loading time in user satisfaction and engagement.

A site that loads quickly gratifies the user’s need for instant information and stands a better chance of retaining visitors, reducing bounce rates, and improving overall user experience. These metrics are favorable in the eyes of search engines, potentially propelling websites with superior page speed higher up the search results ladder.

Diagnosing Page Speed Issues

Unearthing the root causes behind slow page speed necessitates a comprehensive analytical approach, one that LinkGraph’s tools are expertly configured to perform. With a meticulous examination employing SearchAtlas SEO software, potential bottlenecks such as unoptimized images, cumbersome scripts, or hosting issues can be swiftly identified and targeted for improvement.

LinkGraph’s SEO services provide a thorough diagnosis by evaluating a multitude of elements that contribute to page load times, including server response duration, use of caching, and the efficiency of code execution on the browser. This enables website owners to rectify issues that are instrumental in achieving optimal page performance and heightened user experience.

Tactics for Enhancing Website Speed

Optimizing website speed is a multifaceted endeavor, and LinkGraph’s SEO services advocate a holistic approach, from streamlining code to optimizing media files. This involves minifying JavaScript and CSS files, which can significantly reduce load times, recommending compressed images, and implementing lazy loading so that offscreen media loads only when the user scrolls to it.

LinkGraph recognizes the importance of a robust hosting solution and employs its SearchAtlas SEO software to help clients choose a provider that offers fast server response times. Another tactic is leveraging browser caching, which lets returning visitors’ browsers store static elements of a site locally for faster retrieval on subsequent visits.

| Tactic | Description | Impact |
|---|---|---|
| Code Streamlining | Minifying JavaScript and CSS files. | Reduces page load times for a smoother user experience. |
| Media File Optimization | Using compressed images and implementing lazy loading. | Enhances site speed, especially on media-heavy pages. |
| Hosting Assessment | Identifying providers with fast server response times. | Ensures reliable, swift delivery of content to the user. |
| Browser Caching | Letting repeat visitors’ browsers store static assets locally. | Speeds up the site for returning users and reduces server load. |
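Browser caching is configured through HTTP response headers. As a rough sketch of one possible policy, assuming static assets are built with a content hash in their filenames (a hypothetical convention) and that the returned value is sent as the `Cache-Control` header, the helper `cache_control_for` below gives versioned assets a one-year lifetime and makes browsers revalidate HTML on every visit. The extension list and durations are illustrative, not recommendations from LinkGraph.

```python
import re
from pathlib import PurePosixPath

# Asset types treated as cacheable static files (illustrative, not exhaustive).
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".avif", ".svg", ".woff2"}
# Assumed build convention: a content hash in the filename, e.g. app.3f9a1c2b.js.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.[a-z0-9]+$")


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a request path (hypothetical policy)."""
    name = PurePosixPath(path).name.lower()
    suffix = PurePosixPath(name).suffix
    if suffix in STATIC_EXTENSIONS:
        if FINGERPRINTED.search(name):
            # The filename changes whenever the content changes, so cache for a year.
            return "public, max-age=31536000, immutable"
        # Unversioned assets: cache for a day so updates still reach visitors.
        return "public, max-age=86400"
    # HTML and other documents: browsers may store them but must revalidate each time.
    return "no-cache"
```

The design choice is that long cache lifetimes are only safe when a changed file also gets a new name; otherwise returning visitors could keep seeing stale assets.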

Resolving Multiple Homepage Versions for Consistency

As anchors of any website, homepages hold immense influence over user experience and search engine perception.

Yet, an often unforeseen technical SEO error involves the existence of multiple homepage variants, which can sow confusion in the algorithms that determine site rankings.

Identifying duplicate versions and consolidating them into a single, authoritative homepage is not only beneficial for maintaining a coherent online identity but also essential for fortifying a website’s SEO integrity.

This section delves into the critical steps for unifying homepage versions, laying a foundation for streamlined user engagement and enhanced SEO effectiveness.

Detecting Multiple Homepage Variants

Spotting variants of a homepage is crucial, as they can dilute a site’s SEO strength and confuse both users and search engine crawlers. Analytical rigor applied through advanced SEO tools like SearchAtlas by LinkGraph enables webmasters to uncover these duplications swiftly and accurately.

By canvassing a site for multiple URLs that lead to similar or identical homepages, specialists can identify variants such as ‘www’ and ‘non-www’, or ‘http’ and ‘https’ versions, that should be consolidated:

- Examine URL structures for discrepancies indicating homepage versions.

- Analyze server responses to identify unintentional homepage duplications.

- Utilize canonical tags to signal preferred homepage versions to crawlers.
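The detection steps above can be sketched in a few lines. This example assumes the final URL each variant lands on (after following redirects) has been recorded with a crawler; `homepage_variants` and `unconsolidated` are hypothetical helpers, and only the protocol and www combinations named above are covered.

```python
from itertools import product
from typing import Dict, List


def homepage_variants(domain: str) -> List[str]:
    """The four protocol/host combinations for a bare domain such as "example.com"."""
    return [
        f"{scheme}://{host}/"
        for scheme, host in product(("http", "https"), (domain, f"www.{domain}"))
    ]


def unconsolidated(final_urls: Dict[str, str], preferred: str) -> List[str]:
    """Variants that do not end up at the preferred homepage.

    final_urls maps each variant to where it finally landed after following
    redirects (collected with a crawler; hypothetical input).
    """
    return [variant for variant, final in final_urls.items() if final != preferred]


if __name__ == "__main__":
    landed = {
        "http://example.com/": "https://www.example.com/",
        "http://www.example.com/": "https://www.example.com/",
        "https://example.com/": "https://example.com/",  # serves its own copy: a duplicate
        "https://www.example.com/": "https://www.example.com/",
    }
    print(unconsolidated(landed, "https://www.example.com/"))
```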

SEO Complications From Duplicate Homepages

SEO challenges arise when multiple homepage variants exist, leading to diluted link equity and a fragmented search engine ranking strategy. This scenario creates competing signals that could confuse search engines on which version of the homepage deserves the predominant ranking, potentially splitting traffic and weakening the site’s overall SEO posture.

Furthermore, such variants create duplicate content. Search engines generally do not penalize duplication unless it appears intended to manipulate results, but they will pick one version to show, and it may not be the version the site owner prefers. Establishing a single, canonical source mitigates these complications and streamlines the path for search engines to reward the rightful homepage with appropriate search visibility:

- Identify all variants of the homepage presented to search engines.

- Assess the impact of each variant on link equity and user navigation.

- Implement 301 redirects to funnel all homepage traffic to the preferred URL.

- Use the canonical tag to reinforce the selected homepage choice to search engines.

Steps to Unify Your Homepage Versions

LinkGraph champions the consolidation of homepage versions with a strategic approach that assures uniformity across the web presence. This method involves setting up server-side redirects which funnel all homepage traffic to one primary version, typically the HTTPS variant, to enhance security while streamlining user access and solidifying link equity.

During this unification process, LinkGraph meticulously adjusts the website’s internal link structure to reflect the authoritative homepage URL, bolstering the integrity of the domain and providing search engines with a clear signal of the primary entry point. Their adept application of these technical adjustments is designed to enhance a site’s SEO posture and ensure consistency in the user’s navigational experience.

Correct Usage of Rel=Canonical for SEO Benefit

In an ecosystem where duplication is the bane of digital content’s existence, the rel=canonical tag helps search engines discern the preferred version among similar or identical content pages.

Despite its significant SEO upside, the implementation of this tag is fraught with complexities that can lead to critical errors if not maneuvered with precision.

By exploring the nature of rel=canonical, identifying common missteps, and elucidating best practices for its application, digital marketers can safeguard their search rankings and steer clear of the ranking problems that improper use can cause.

What Is the Rel=Canonical Tag?

The rel=canonical tag is a pivotal instrument in the technical SEO toolkit, designed to help search engines understand which version of similar content is the principal one. By specifying a preferred URL among duplicates, it signals which page search engines should treat as canonical, preventing issues like split page authority and content redundancy. Google treats the tag as a strong hint rather than a binding directive.

Employed with precision, the rel=canonical tag can be a potent ally for maintaining a clean, well-organized website structure. It asks search engines to consolidate ranking signals for comparable pages, thereby enhancing a site’s overall SEO efficacy and maintaining the integrity of its search presence:

| Function | Description | SEO Impact |
|---|---|---|
| Canonicalization | Indicates the preferred URL among multiple content versions. | Keeps ranking signals such as PageRank from being split across duplicates. |
| Signal Consolidation | Asks search engines to combine ranking signals on the canonical page. | Strengthens the preferred page’s authority and search visibility. |
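In markup, the tag is a `<link rel="canonical" href="https://example.com/shoes">` element in the `<head>` of each duplicate page. The sketch below extracts every declared canonical from a page with Python’s standard `html.parser` and resolves relative values against the page URL; `canonical_urls` is a hypothetical helper. It returns a list rather than a single value so that a missing tag (empty list) or conflicting tags (more than one entry), which search engines may ignore, are easy to spot.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect href values of <link rel="canonical"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()  # rel can hold several tokens
        if "canonical" in rel_values and attr.get("href"):
            self.hrefs.append(attr["href"])


def canonical_urls(html: str, page_url: str) -> List[str]:
    """Return every declared canonical URL, resolved against the page's own URL."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    return [urljoin(page_url, href) for href in parser.hrefs]
```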

Common Canonical Tag Mistakes

Among the intricate maneuvers in technical SEO, the misuse of the rel=canonical tag can inadvertently undermine a site’s search engine standing. One prevalent error involves specifying a canonical page that does not closely match the content of the duplicates, leading to confusion for search engines and potentially diminishing the perceived relevance of all associated pages.

Another misapplication frequently encountered is the formation of circular or broken canonical links that disrupt the clarity of directive signals to search engines. Such errors can result in search engines disregarding the intended canonical tag altogether, leaving the site’s pages to suffer in SERP placement and visibility.
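Circular and broken canonicals can be caught once each page’s declared canonical and each URL’s HTTP status are known. The sketch below assumes both come from a crawl; `audit_canonicals` and its inputs are hypothetical. Self-referencing canonicals are treated as valid, a target that does not return 200 is reported as broken, and a target that itself names a different canonical is reported as a chain, or as a loop when it points straight back.

```python
from typing import Dict, List


def audit_canonicals(canonical_of: Dict[str, str], status_of: Dict[str, int]) -> List[str]:
    """Flag canonical tags that point at broken pages or form chains and loops.

    canonical_of: page URL -> the canonical URL it declares (hypothetical crawl data).
    status_of: URL -> HTTP status code.
    """
    issues = []
    for page, target in canonical_of.items():
        status = status_of.get(target)
        if status != 200:
            issues.append(f"{page}: canonical target {target} returns {status}")
        next_target = canonical_of.get(target)
        if next_target is not None and next_target != target:
            # The canonical page names yet another canonical: a chain, or a loop if it points back.
            kind = "loop" if next_target == page else "chain"
            issues.append(f"{page}: canonical {kind} via {target} -> {next_target}")
    return issues
```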

Implementing the Canonical Tag Correctly

To ensure the rel=canonical tag serves its intended purpose, it’s crucial to perform comprehensive audits of the site’s content. LinkGraph’s seasoned experts utilize the precision of SearchAtlas SEO software, meticulously verifying that each canonical tag directs search engines to the most authoritative page, thus mitigating risks of content dilution and improving SEO value.

Applying these tags must be a strategic decision supported by in-depth understanding of the content’s purpose and value to the audience:

- LinkGraph’s professionals align canonical tags with the user’s intent, reinforcing the SEO strategy by pinpointing the content versions that resonate most with the target audience.

- Meticulous checks ensure that each canonical URL is accessible and delivers content that mirrors the duplicates closely in relevance and context.

The focus is on clarity and precision, as these qualities are foundational for signaling to search engines the hierarchical importance within a set of similar pages. With the correct application of the rel=canonical tag, search engines can cohesively interpret and present the website’s content, thus bolstering the site’s search rankings and user experience.

Dealing With Duplicate Content Issues

In the multifaceted world of digital marketing, duplicate content stands as a significant obstacle that can derail a website’s search engine optimization efforts.

Webmasters and SEO practitioners must be adept at identifying redundant material across their domains, understanding the negative implications on SEO rankings, and developing robust strategies to address these issues.

As LinkGraph’s experts navigate this complex challenge, they ensure that content is both unique and positioned to rank favorably in search engine results, thereby safeguarding their clients’ online authority and relevance.

Identifying Duplicate Content on Your Site

Discerning the presence of identical or substantially similar content across various pages of a website is a task that LinkGraph’s SEO services approach with meticulous detail. Their methodology employs sophisticated analysis to scan and cross-reference content, thus identifying any duplication that could split page rankings and dilute SEO efforts.

LinkGraph leverages the innovative SearchAtlas SEO software to accurately detect and handle instances of content repetition. The tool’s capabilities ensure that every piece of content on a website is distinct and contributes positively to a cohesive SEO content strategy, enhancing the value offered to users and search engines alike.
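As an illustration of how duplicate detection can work in general (not a description of SearchAtlas internals), the sketch below groups exact duplicates by hashing normalized page text, and scores near-duplicates with word shingles and Jaccard similarity, a common technique for this task. It assumes the main body text of each page has already been extracted; the function names are hypothetical, and any similarity threshold for flagging pages is a judgment call.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List, Set


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences do not hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def exact_duplicate_groups(pages: Dict[str, str]) -> List[List[str]]:
    """Group URLs whose normalized text is identical (pages maps URL -> extracted body text)."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def shingles(text: str, k: int = 5) -> Set[str]:
    """All runs of k consecutive words (one shingle holding every word if the text is shorter)."""
    words = normalize(text).split()
    return {" ".join(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def similarity(a: str, b: str, k: int = 5) -> float:
    """Jaccard similarity of two texts' shingle sets: 1.0 means identical wording."""
    sa, sb = shingles(a, k), shingles(b, k)
    return len(sa & sb) / len(sa | sb)
```

Exact hashing is cheap enough to run on every page; pairwise shingle comparison grows quickly with site size, so it is usually reserved for pages in the same template or category.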

How Duplicate Content Affects SEO

Duplicate content can severely impede a website’s visibility and authority in search engine rankings. When identical or very similar content appears under multiple URLs, search engines must decide which version to prioritize; the resulting divided signals can dilute the perceived value of the content and diminish the website’s SEO standing.

Furthermore, search engines might reduce the search result footprint of pages with redundant content, potentially excluding them from search listings altogether. This not only affects the user experience by limiting access to information but also impacts the website’s organic search traffic and hampers its overall SEO performance.

Strategies for Resolving Duplicate Content

To tackle the challenge of duplicate content, LinkGraph adopts a strategic approach, focusing on prevention and remediation. They initiate content audits to surface duplications, followed by the application of 301 redirects, which seamlessly guide search engines and users to the preferred content source, ensuring that all SEO efforts support the authoritative page:

- Conduct thorough content audits to identify and map all duplicate content across the website.

- Implement 301 redirects from duplicated content pages to the original, authoritative version.

Furthermore, the adept use of canonical tags gives search engines a clear signal about which version of the content to index, enhancing the site’s SEO integrity. LinkGraph ensures these tags are accurately placed to consolidate ranking signals on the preferred version, which in turn reinforces search engine trust and the website’s search rankings.
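Once duplicate groups are known, the redirect step can be planned mechanically. The sketch below is hypothetical throughout: `redirect_map` and `preferred_url` are illustrative names, and the tie-break rule (prefer URLs without query strings, then the shortest URL) is an assumption standing in for the editorial choice of which page is authoritative.

```python
from typing import Dict, List
from urllib.parse import urlsplit


def preferred_url(urls: List[str]) -> str:
    """Hypothetical tie-break: no query string first, then shortest, then alphabetical."""
    return min(urls, key=lambda u: (bool(urlsplit(u).query), len(u), u))


def redirect_map(groups: List[List[str]]) -> Dict[str, str]:
    """Map every duplicate URL to the preferred URL of its group (planned 301 targets)."""
    mapping: Dict[str, str] = {}
    for group in groups:
        target = preferred_url(group)
        for url in group:
            if url != target:
                mapping[url] = target
    return mapping


if __name__ == "__main__":
    groups = [[
        "https://example.com/shoes?sort=price",
        "https://example.com/shoes",
        "https://example.com/products/shoes",
    ]]
    for source, target in redirect_map(groups).items():
        print(f"301 {source} -> {target}")
```

Variants that visitors still need to reach, such as a sorted product listing, are better handled with a canonical tag than with a redirect, since a redirect would remove that view entirely.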

Importance of Alt Tags in Image Optimization

In the precision-oriented realm of technical SEO, image optimization holds a significant place amidst practices pivotal for search engine success.

Alt tags, often overlooked in the visual aspects of website design, carry substantial weight in this process.

They function not only as critical accessibility features but also as essential components for image interpretation by search engines.

Adequate application of alt tags can result in images contributing meaningfully to SEO rankings, while their absence can be a silent yet formidable hindrance.

This introduction sheds light on the repercussions of missing alt tags, outlines methodologies to formulate effective descriptors for images, and reviews the tools necessary to uncover images devoid of this invisible yet powerful element of SEO.

The SEO Impact of Missing Alt Tags

The absence of alt tags on images can significantly hinder a webpage’s SEO effectiveness. Search engines rely on these descriptive markers to index and understand the content of images, with proper tags improving a page’s relevance in search results.

Without alt attributes, images become largely opaque to search crawlers, depriving a page of valuable context and potential keyword associations. The same omission leaves screen-reader users without any description of the image, a poor user experience that can indirectly weigh on a site’s performance:

- Search engines use alt tags to decipher image content and its relevance to a query.

- Without alt tags, images do not contribute to the semantic information of a page.

- Missing alt attributes can lead to decreased search engine visibility for visual content.

How to Craft Effective Alt Tags for Images

Crafting effective alt tags for images is an exercise that requires both accuracy and brevity, a skill that enhances the SEO potential of visual content on a website. Alt tags should be descriptive yet concise, presenting the context of the image while incorporating relevant keywords that align with the overall content theme.

Professionals at LinkGraph understand that alt tags serve as a critical juncture between accessibility and SEO. They ensure that each alt tag distinctly conveys the purpose of the image, thereby aiding search engine crawlers in drawing correlations between visual elements and textual content:

- Ensure that each image alt tag accurately reflects the subject matter of the visual.

- Incorporate target keywords into alt tags to optimize images for relevant search queries.

- Keep alt tags succinct to facilitate ease of reading and indexing by search engine crawlers.
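The three guidelines above can be turned into a simple lint pass. In this Python sketch, the 125-character ceiling, the file-name pattern, and the list of redundant prefixes are assumptions drawn from common accessibility advice. They are not rules published by search engines, so treat the output as prompts for human review.

```python
import re
from typing import List, Optional

MAX_ALT_LENGTH = 125  # assumed guideline, not a search-engine limit
FILENAME_PATTERN = re.compile(r"[\w-]+\.(jpe?g|png|gif|webp|svg)", re.IGNORECASE)
REDUNDANT_PREFIXES = ("image of", "picture of", "photo of")


def alt_text_problems(alt: Optional[str], keyword: Optional[str] = None) -> List[str]:
    """Return human-readable warnings for one image's alt text."""
    if alt is None:
        return ["missing alt attribute"]
    text = alt.strip()
    if not text:
        return ["empty alt (fine only for purely decorative images)"]
    problems = []
    if FILENAME_PATTERN.fullmatch(text):
        problems.append("alt text looks like a file name")
    if len(text) > MAX_ALT_LENGTH:
        problems.append(f"longer than {MAX_ALT_LENGTH} characters")
    if text.lower().startswith(REDUNDANT_PREFIXES):
        problems.append("redundant 'image of'-style prefix")
    # Keywords help relevance, but repeating them reads as stuffing.
    if keyword and text.lower().count(keyword.lower()) > 1:
        problems.append("keyword repeated (possible stuffing)")
    return problems


print(alt_text_problems("IMG_1234.jpg"))
# ['alt text looks like a file name']
```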

Tools to Identify Images Lacking Alt Tags

Uncovering images without alt attributes necessitates specialized tools that delve into the infrastructure of a website’s image library. LinkGraph equips its SEO experts with the sophisticated capabilities of SearchAtlas SEO software, providing them with the means to methodically scan web pages and detect images that lack these crucial descriptive tags.

Through this innovative technology, not only is the absence of alt tags identified, but the software also aids in the prompt rectification of such oversights, ultimately fortifying the website’s SEO posture against the inadvertent neglect of image optimization.
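SearchAtlas itself is proprietary, so the following is only a generic stand-in built on Python’s standard html.parser module. It lists the src of every img element that has no alt attribute at all. An empty alt (alt="") is deliberately not flagged, since that is the accepted way to mark purely decorative images.

```python
from html.parser import HTMLParser
from typing import List


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing: List[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so <IMG SRC=...> matches too.
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:
            self.missing.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> List[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


page = '<img src="logo.png" alt="Company logo"><img src="hero.jpg"><img alt="" src="divider.png">'
print(images_missing_alt(page))
# ['hero.jpg']
```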

Identifying and Fixing Broken Links for SEO

In the evolving landscape of search engine optimization, identifying and mending broken links is an essential aspect of maintaining a robust online presence.

These digital dead ends not only frustrate visitors but also act as red flags to search engines, signaling potential neglect and diminishing the quality of a website’s infrastructure.

As organizations seek to elevate their digital authority and ensure seamless navigation, understanding how broken links can negatively impact SEO, locating such links within a website’s framework, and deploying best practices for their rectification are critical steps in honing technical prowess and safeguarding search rankings.

How Broken Links Hurt Your SEO

Broken links can have a deleterious effect on a website’s SEO performance by disrupting the user journey and triggering negative signals to search engines. Their presence on a site suggests a lack of maintenance and oversight, potentially eroding the hard-earned trust and authority that influences search rankings.

These faulty pathways not only compromise the smooth navigation expected by visitors but also obstruct search engine crawlers, thereby impairing the site’s ability to rank well. By impeding the efficient flow of link equity throughout the site, broken links serve as silent saboteurs of otherwise sound SEO strategies.

Finding Broken Links Within Your Website

Unearthing broken links within a website is a quintessential aspect of maintaining its technical SEO health. LinkGraph utilizes its innovative SearchAtlas SEO software to meticulously scour a website and pinpoint any non-functioning URLs, effectively flagging areas that hinder smooth navigation and potentially compromise search engine rankings.

By strategically deploying tools capable of detecting discrepancies in link structure and status codes, LinkGraph ensures clients’ websites are purged of these detrimental obstacles. This proactive identification and subsequent fixing of broken links are instrumental in cultivating a seamless user experience and preserving a website’s authority in the eyes of search engines.
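The core of any broken-link check is extracting links and recording their HTTP status codes. The Python sketch below does both with the standard library; it is not how SearchAtlas works internally. The status lookup is passed in as a parameter so the logic can be tested without network access. The default lookup issues HEAD requests with urllib, which follows redirects and reports the final status.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

SKIPPED_PREFIXES = ("#", "mailto:", "tel:", "javascript:")


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href> attributes."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and not href.startswith(SKIPPED_PREFIXES):
            self.links.append(urljoin(self.base_url, href))


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Final HTTP status after redirects, or None if the URL is unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        return err.code  # 4xx/5xx responses are raised as HTTPError
    except (OSError, ValueError):
        return None  # DNS failure, refused connection, timeout, malformed URL


def find_broken_links(html: str, base_url: str,
                      status_of: Callable[[str], Optional[int]] = http_status
                      ) -> Dict[str, Optional[int]]:
    extractor = LinkExtractor(base_url)
    extractor.feed(html)
    extractor.close()
    broken = {}
    for link in dict.fromkeys(extractor.links):  # de-duplicate, keep order
        status = status_of(link)
        if status is None or status >= 400:
            broken[link] = status
    return broken
```

Some servers reject HEAD requests with a 405 status, so a production checker would typically retry those with GET before reporting the link as broken.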

Best Practices for Repairing Broken Links

Repairing broken links begins with a careful examination of outbound, inbound, and internal URLs. Each broken link is either updated to point to the correct, live page or removed if it is no longer relevant; for inbound links from other sites, which cannot be edited directly, a 301 redirect from the dead URL to its live successor is the usual fix. LinkGraph’s SEO experts adeptly navigate these intricacies, performing the necessary updates and removals with precision to restore and enhance the structural integrity of a website.

LinkGraph’s protocol for mending broken links entails collaborating with webmasters to guarantee that the web page’s content continuity is sustained, addressing any link that has decayed or become obsolete. This restoration not only accentuates the navigational fluidity for users but also reinforces the website’s credibility, contributing positively to its search engine positioning and overall digital health.
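Once broken links are known, the update-or-remove decision can be recorded as an explicit plan for the webmaster to review. In this sketch, the replacements mapping is hypothetical; it might be assembled from existing redirect rules or a URL migration sheet. Links without a known live replacement are marked for removal rather than guessed at.

```python
from typing import Iterable, List, Mapping, Optional, Tuple

# (action, broken URL, replacement URL or None)
Action = Tuple[str, str, Optional[str]]


def plan_link_repairs(broken: Iterable[str],
                      replacements: Mapping[str, str]) -> List[Action]:
    """Pair each broken URL with an 'update' or 'remove' action.

    `replacements` is a hypothetical lookup from dead URLs to their live
    successors; anything without an entry is marked for removal so a person
    can confirm the content is truly obsolete.
    """
    plan: List[Action] = []
    for url in broken:
        new_url = replacements.get(url)
        if new_url:
            plan.append(("update", url, new_url))
        else:
            plan.append(("remove", url, None))
    return plan


print(plan_link_repairs(
    ["https://example.com/old-guide", "https://example.com/2019-promo"],
    {"https://example.com/old-guide": "https://example.com/guide"},
))
# [('update', 'https://example.com/old-guide', 'https://example.com/guide'), ('remove', 'https://example.com/2019-promo', None)]
```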

Leveraging Structured Data for Enhanced SEO

In the realm of technical SEO, structured data stands as a beacon of precision, enabling search engines to not only crawl but also comprehend and display web content with enhanced clarity.

The strategic implementation of structured data is paramount; it transforms web pages into a rich tapestry of contextual information, ripe for the search engines to parse and present in visually compelling and informative results.

Despite its vast potential to buoy a site’s search presence, common pitfalls can dampen its impact.

As such, it is essential to navigate the intricacies of structured data with care—employing meticulous techniques for markup, avoiding errors that can lead to misinterpretation by search algorithms, and ultimately ensuring that websites capitalize on the full spectrum of SEO benefits structured data affords.

What Is Structured Data and Why It Matters

Structured data is information encoded in a standardized format, most commonly schema.org vocabulary expressed as JSON-LD, Microdata, or RDFa, so that search engines can interpret it consistently. It plays an integral role in SEO by delivering explicit clues about the meaning of a page’s content, empowering search engines to provide more relevant and rich results to users’ queries.

Its significance in the digital marketing landscape stems from the ability to directly communicate content context to search crawlers, leading to enhanced visibility in search engine results pages. Structured data marks up information on web pages, feeding into the complex algorithms that shape the future of search engine optimization and user experience.
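Google recommends JSON-LD for structured data, embedded in a script element of type application/ld+json. The sketch below builds a minimal schema.org Article object and renders it as such a script tag. The property values are placeholders, and a real page would add whatever further properties fit its content.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> dict:
    """Minimal schema.org Article object (placeholder values in the example)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }


def json_ld_script(data: dict) -> str:
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(json_ld_script(article_json_ld(
    "Avoiding the Pitfalls", "Jane Doe", "2024-01-15", "https://example.com/seo")))
```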

Common Mistakes When Implementing Structured Data

The lexicon of structured data is dense with possibilities, yet susceptible to critical misinterpretations. One frequent blunder is the incorrect application of schema types, which can result in erratic and misleading representations of a website’s content across search engines, potentially obfuscating the data’s intended message and effectiveness in SEO enhancement.

Another oversight often encountered is the input of outdated or irrelevant structured data, which neglects current content relevancy and search engine guidelines. Such inaccuracies can impede the utility of structured data, dampening its purported benefits and eroding the credibility of the website in the competitive landscape of search engine results.

Techniques for Effective Structured Data Markup

To harness the full potential of structured data within the realm of search engine optimization, a thorough grasp of schema vocabulary is imperative. Professionals at LinkGraph meticulously implement contextually relevant schemas, ensuring that each markup accurately conveys the unique aspects and content categories present on a client’s web page.

LinkGraph’s SEO services emphasize validation of structured data to avoid the ramifications of incorrect or incomplete implementation. This rigorous verification process mitigates potential indexing errors, thereby fortifying the website’s communicative clarity with search engines:

- Employ schema.org vocabulary tailored to the website’s content specifics.

- Use validation tools such as Google’s Rich Results Test or the Schema Markup Validator to check and preview how search engines interpret the markup.

- Monitor the performance of structured data to refine SEO strategies continuously.
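Before markup goes through an official validator, a few cheap checks catch common slips: malformed JSON, a missing schema.org @context, an unexpected @type, or missing properties. The EXPECTED_PROPERTIES table in this Python sketch is a hypothetical, deliberately small rule set. Actual required and recommended properties vary by search feature, so Google’s documentation and the Rich Results Test remain the authority.

```python
import json
from typing import List

# Hypothetical, deliberately small rule set for illustration only; real
# requirements differ by search feature and change over time.
EXPECTED_PROPERTIES = {
    "Article": {"headline", "author", "datePublished"},
    "Product": {"name", "offers"},
    "FAQPage": {"mainEntity"},
}
# A tuple (not a set) so membership tests work even if @context is a dict.
SCHEMA_CONTEXTS = ("https://schema.org", "http://schema.org",
                   "https://schema.org/", "http://schema.org/")


def check_json_ld(raw: str) -> List[str]:
    """Pre-flight checks on one JSON-LD block; an empty list means no issues found."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"invalid JSON: {err.msg} (line {err.lineno})"]

    problems = []
    for item in data if isinstance(data, list) else [data]:
        if not isinstance(item, dict):
            problems.append("top-level value is not a JSON object")
            continue
        if item.get("@context") not in SCHEMA_CONTEXTS:
            problems.append("missing or non-schema.org @context")
        schema_type = item.get("@type")
        if not isinstance(schema_type, str) or schema_type not in EXPECTED_PROPERTIES:
            problems.append(f"@type {schema_type!r} is not covered by this check")
            continue
        missing = sorted(EXPECTED_PROPERTIES[schema_type] - set(item))
        if missing:
            problems.append(f"{schema_type} missing: {', '.join(missing)}")
    return problems


print(check_json_ld('{"@context": "https://schema.org", "@type": "Product", "name": "Shoe"}'))
# ['Product missing: offers']
```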

Optimizing for Mobile Devices to Improve Rankings

In today’s rapidly evolving digital terrain, mobile optimization has solidified its role as a critical factor in search engine optimization strategies.

As most users now access the internet via mobile devices, the significance of creating mobile-friendly websites cannot be overstated.

A website that is well optimized for mobile access tends to rank better and to see stronger user engagement.

Conversely, failure to address common mobile SEO errors can result in a decline in digital presence and audience reach.

Following established best practices for mobile optimization is more than a mere recommendation; it is a necessary endeavor for businesses aiming to thrive within the competitive online marketplace.

The Importance of Mobile Optimization for SEO

Mobile optimization has ascended to the forefront of SEO considerations, with search engines prioritizing websites that offer seamless experiences on handheld devices. For a website, attaining a mobile-friendly interface ensures a competitive edge by catering to the burgeoning number of users who prefer accessing the internet on the go.

This emphasis on mobile readiness is not merely a response to user preference but an integral component of a site’s search engine ranking. Google and other search engines have shifted towards mobile-first indexing, making the mobile version of a website the primary benchmark for indexing and ranking:

- Ensure responsive web design for optimal display across various screen sizes and resolutions.

- Trim down page loading times to meet the expectations of mobile users seeking quick information.

- Implement user-friendly navigation elements tailored for touch controls to enhance mobile user experience.
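Responsive design depends on the viewport meta tag. Without width=device-width, mobile browsers typically lay the page out at a desktop width and scale it down. The Python sketch below checks for that tag using the standard html.parser module. Flagging disabled zoom reflects accessibility guidance and is an added assumption, not a documented ranking rule.

```python
from html.parser import HTMLParser
from typing import List, Optional


class ViewportFinder(HTMLParser):
    """Record the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if (tag == "meta" and self.content is None
                and (attributes.get("name") or "").lower() == "viewport"):
            self.content = attributes.get("content") or ""


def viewport_problems(html: str) -> List[str]:
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.content is None:
        return ["no viewport meta tag: mobile browsers typically lay the page out at desktop width"]
    # Parse "width=device-width, initial-scale=1" into a dict of directives.
    directives = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        directives[key.strip().lower()] = value.strip().lower()
    problems = []
    if directives.get("width") != "device-width":
        problems.append("width is not set to device-width")
    if directives.get("user-scalable") in ("no", "0") or directives.get("maximum-scale") in ("1", "1.0"):
        problems.append("pinch-zoom is disabled, which hurts accessibility")
    return problems


print(viewport_problems('<meta name="viewport" content="width=device-width, initial-scale=1">'))
# []
```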

Common Mobile SEO Errors to Avoid

Ignoring the mobile experience often leads to cumbersome navigation and illegible text on small screens, deterring visitors and hurting a website’s SEO standings. Sites failing to adopt responsive design may display poorly on various devices, which not only frustrates users but also triggers search engines to lower the site’s ranking.

Another frequently encountered mobile SEO oversight involves overlooking touch-screen functionality, resulting in interactive elements like buttons being too small or too close together, complicating the user experience. This misstep not only diminishes usability but can also negatively influence a website’s search performance as search engines scrutinize such factors when assessing page quality.
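Tap-target problems can be checked numerically once element positions are known. The sketch below assumes bounding boxes in CSS pixels have already been collected, for example with getBoundingClientRect in a headless browser. It applies the commonly cited 48 × 48 px minimum size with about 8 px of spacing. This is a simplified approximation of audits such as Lighthouse’s, not a reimplementation.

```python
from dataclasses import dataclass
from math import hypot
from typing import List

MIN_TARGET_PX = 48  # assumed minimum width/height in CSS pixels
MIN_GAP_PX = 8      # assumed minimum spacing between neighbouring targets


@dataclass
class TapTarget:
    name: str
    x: float       # left edge in CSS px
    y: float       # top edge in CSS px
    width: float
    height: float


def gap(a: TapTarget, b: TapTarget) -> float:
    """Shortest distance between two rectangles; 0 if they touch or overlap."""
    dx = max(b.x - (a.x + a.width), a.x - (b.x + b.width), 0)
    dy = max(b.y - (a.y + a.height), a.y - (b.y + b.height), 0)
    return hypot(dx, dy)


def tap_target_problems(targets: List[TapTarget]) -> List[str]:
    problems = []
    for t in targets:
        if t.width < MIN_TARGET_PX or t.height < MIN_TARGET_PX:
            problems.append(f"{t.name}: {t.width:g}x{t.height:g}px is below {MIN_TARGET_PX}px")
    # Compare every pair once to find targets that sit too close together.
    for i, a in enumerate(targets):
        for b in targets[i + 1:]:
            if gap(a, b) < MIN_GAP_PX:
                problems.append(f"{a.name} and {b.name} are closer than {MIN_GAP_PX}px")
    return problems


buy = TapTarget("buy", 0, 0, 48, 48)
close = TapTarget("close", 52, 0, 48, 48)
print(tap_target_problems([buy, close]))
# ['buy and close are closer than 8px']
```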

Best Practices for Mobile-Friendly Websites

To ensure optimal performance on mobile devices, website designers should adopt a fluid layout that adapts content and structural elements to fit various screen sizes. LinkGraph underscores the importance of mobile-optimized websites, implementing user-centric design principles that prioritize readability and ease of navigation, reinforcing the site’s functional appeal and SERP placement.

Incorporating touch-friendly interfaces is another fundamental best practice spotlighted by LinkGraph’s comprehensive SEO solutions. This approach involves designing touch-responsive menus and call-to-action buttons to facilitate effortless interaction, a detail that enhances user engagement and adheres to the meticulous criteria set forth by search engines for mobile optimization.

Writing Meta Descriptions to Maximize Click-Through Rates

In the intricate landscape of technical SEO, meta descriptions hold consequential sway, influencing click-through rates with remarkable potency.

These succinct summaries, residing beneath a page’s title in search results, serve to entice users, steering their curiosity toward the content within.

A well-crafted meta description acts as a strategic beacon, guiding potential visitors to engage, while its absence or poor execution can inadvertently drive traffic away from a website.

With a focus on optimizing these descriptive snippets, professionals can pivot towards capitalizing on their full potential to amplify user engagement and enhance site traffic.

Crafting Compelling Meta Descriptions

Crafting compelling meta descriptions is an art that necessitates a blend of conciseness and persuasion. These snippets are the elevator pitch of a webpage, persuading users to click through among a sea of search results.

To galvanize the target audience, every meta description should encapsulate the essence of the content and promise value, utilizing action-oriented language and compelling hooks that resonate with the searcher’s intent:

| Element | Description | Significance |
|---|---|---|
| Conciseness | Brief yet powerful description of the page’s content. | Ensures clarity and immediate understanding for readers. |
| Relevance | Alignment with the searcher’s intent and page content. | Boosts click-through rates by meeting user expectations. |
| Call-to-Action | A persuasive imperative that encourages user engagement. | Drives click-throughs by suggesting immediate value. |

Employing strategic keywords within the meta description further enhances its appeal, ensuring alignment with the search query while steering clear of the pitfall of overstuffing. These carefully chosen terms should naturally flow within the narrative, subtly guiding the user towards engaging with the content.
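To check that a description includes the target keyword without overusing it, a whole-phrase count is enough for a first pass. In this sketch, the max_repeats threshold of one occurrence is an assumption for illustration, not a documented search-engine limit.

```python
import re


def keyword_count(text: str, keyword: str) -> int:
    """Case-insensitive count of whole-phrase occurrences of keyword in text."""
    pattern = r"\b" + re.escape(keyword.lower()) + r"\b"
    return len(re.findall(pattern, text.lower()))


def description_keyword_check(description: str, keyword: str, max_repeats: int = 1) -> str:
    count = keyword_count(description, keyword)
    if count == 0:
        return "keyword missing"
    if count > max_repeats:
        return f"keyword appears {count} times (possible stuffing)"
    return "ok"


print(description_keyword_check(
    "Broken links? Fix broken links fast. Broken links guide.", "broken links"))
# keyword appears 3 times (possible stuffing)
```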

How Neglecting Meta Descriptions Can Cost You Traffic

Failing to give web pages tailor-made meta descriptions is a significant technical SEO error that can severely restrict a website’s visibility. Without this snippet, search engines generate one automatically from page text, which may not capture the user’s attention or reflect the page’s focal message, leading to lower click-through rates and fewer opportunities to draw in potential visitors.

Although Google has stated that meta descriptions are not a direct ranking factor, their absence can result in a lackluster presentation in search results, weakening the impetus for users to proceed to the website. Meta descriptions are often a website’s first point of contact with a user; neglecting them undersells the page’s content, stifling traffic and engagement right at the search engine results page.

Tips for Optimizing Your Meta Descriptions

Excellence in meta description crafting means tapping into the psyche of the searcher. Professionals at LinkGraph carefully sculpt meta descriptions with compelling and actionable language, ensuring they mirror the page content’s core message and seamlessly weave in target keywords without tipping the scale towards keyword stuffing, thereby fine-tuning relevance and maximizing engagement potential.

LinkGraph’s vigilance extends to staying within the optimal length for meta descriptions, typically around 155-160 characters, which helps prevent truncation in search engine results pages. Their experts also recommend A/B testing alternate versions for key pages to refine the messaging based on user response, continuously optimizing for the highest click-through rates and carving a path for increased organic traffic.
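Both recommendations above lend themselves to small calculations. The Python sketch below approximates truncation at 160 characters; Google actually truncates by pixel width, so this is only a rough preview. It also compares the click-through rates of two description variants with a standard two-proportion z-test. It assumes click and impression counts per variant are available, for example from Google Search Console over comparable periods.

```python
from math import sqrt

MAX_DESCRIPTION_CHARS = 160  # approximation; Google truncates by pixel width, not characters


def preview(description: str, limit: int = MAX_DESCRIPTION_CHARS) -> str:
    """Roughly simulate truncation so the key message can be kept up front."""
    text = " ".join(description.split())
    if len(text) <= limit:
        return text
    cut = text[: limit - 1]  # leave room for the ellipsis
    if text[limit - 1] != " " and " " in cut:
        cut = cut.rsplit(" ", 1)[0]  # back off to the last whole word
    return cut.rstrip() + "…"


def ctr_z_score(clicks_a: int, impressions_a: int, clicks_b: int, impressions_b: int) -> float:
    """Two-proportion z-test on click-through rates (variant B vs. variant A)."""
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    return 0.0 if se == 0 else (p_b - p_a) / se


print(round(ctr_z_score(50, 1000, 80, 1000), 2))
# 2.72
```

Values of z beyond roughly ±1.96 correspond to about 95% confidence in a two-sided test. Because description variants are usually run one after another rather than side by side, seasonality can still bias the comparison.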

Aligning User Language Preferences With Website Content

In the labyrinth of technical SEO, the harmony between user language preferences and website content is a delicate yet powerful determinant of a site’s ability to connect and resonate with its intended audience.

As global digital spaces shrink distances, the imperative to precisely match users’ linguistic expectations has never been more pronounced.

Addressing language targeting intricacies, swiftly recognizing issues of language mismatch, and adopting thorough localization strategies are pivotal in averting SEO pitfalls that could otherwise alienate users and stifle a website’s international reach and search rankings.

The SEO Impact of Language Targeting

Language targeting extends beyond mere translation; it involves the strategic alignment of website content with the cultural nuances and search behaviors of the target audience. Correctly implemented, language targeting can significantly boost a website’s relevance and appeal within local markets, thus enhancing search visibility and improving search rankings in those regions.

Misalignments between user language preferences and website content can lead to suboptimal user experience and lower engagement rates. When content does not resonate with the cultural context or vernacular of the audience, bounce rates increase, and the efficacy of SEO efforts is compromised, resulting in poorer performance within the targeted local search engine results.

| Aspect of Language Targeting | Benefit for SEO | Risk of Neglect |
|---|---|---|
| Cultural Nuances | Boosts local relevance and user engagement. | May alienate local audience leading to higher bounce rates. |
| Search Behavior Alignment | Improves visibility in local search results. | Results in missed opportunities in local market penetration. |
| Vernacular Resonance | Enhances user experience through familiarity and relevance. | Weakens the site’s appeal and reduces engagement metrics. |

Identifying Language Mismatch Issues

Pinpointing language mismatch issues within a website’s content is a nuanced process, requiring LinkGraph’s experts to delve into user engagement metrics and search traffic origins. Discrepancies in these areas often illuminate potential misalignments between the language utilized on the site and the linguistic expectations of its audience, prompting a reevaluation of content strategies to ensure cultural and linguistic congruence.

LinkGraph’s meticulous approach detects inconsistencies by analyzing bounce rates, time on page, and conversion data relative to specific language segments. These indicators help determine if content is resonating with the intended user base or if adjustments are necessary to better align with the vernacular nuances distinctive to each target market, thereby refining the website’s global SEO footprint.
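The segment analysis described above can be sketched as a comparison of each language segment’s bounce rate against the site-wide rate. The input format (one language code and a bounced flag per session), the 10-percentage-point margin, and the minimum segment size are all assumptions for illustration. Analytics platforms also define bounces differently, so the numbers only indicate where to look more closely.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_language_segments(sessions: Iterable[Tuple[str, bool]],
                           margin: float = 0.10,
                           min_sessions: int = 50) -> Dict[str, float]:
    """Return {language: bounce rate} for segments that bounce noticeably
    more than the site as a whole."""
    totals = defaultdict(lambda: [0, 0])  # language -> [sessions, bounces]
    for language, bounced in sessions:
        totals[language][0] += 1
        totals[language][1] += int(bounced)

    all_sessions = sum(n for n, _ in totals.values())
    if all_sessions == 0:
        return {}
    site_rate = sum(b for _, b in totals.values()) / all_sessions

    flagged = {}
    for language, (n, bounces) in totals.items():
        rate = bounces / n
        # Skip small segments: their rates are too noisy to act on.
        if n >= min_sessions and rate > site_rate + margin:
            flagged[language] = round(rate, 3)
    return flagged


sessions = [("en", False)] * 8 + [("en", True)] * 2 + [("de", True)] * 6 + [("de", False)] * 4
print(flag_language_segments(sessions, min_sessions=10))
# {'de': 0.6}
```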

Implementing Correct Language Localization Practices

Implementing correct language localization practices requires a careful strategy that extends beyond mere translation: it demands a deep understanding of regional dialects, colloquialisms, and cultural nuances. Experts at LinkGraph meticulously tailor website content to reflect the linguistic intricacies of each targeted audience, ensuring that the tone, idioms, and nuances resonate locally, thereby maximizing the site’s relevance and SEO potential within the designated market.

LinkGraph’s professionals adeptly apply localization techniques that go hand in hand with technical SEO optimization. By integrating hreflang tags, they provide clear signals to search engines about the language and geographical targeting of content, enabling precise delivery to users based on their linguistic preferences, which in turn increases site engagement and amplifies its performance in localized search results.
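hreflang annotations are commonly added as link elements in each page’s head. The Python sketch below renders a complete set, optionally with an x-default fallback. Per Google’s guidance, every language version should carry the same set, including a reference to itself, so that the annotations are reciprocal. The code validation here is simplified (a two-letter language code with an optional two-letter region) and is an assumption, since valid values can also include script subtags.

```python
import re
from html import escape
from typing import Dict, Optional

# Simplified pattern: ISO 639-1 language, optional ISO 3166-1 alpha-2 region.
# (Real hreflang values can also carry script subtags such as zh-Hant.)
HREFLANG_PATTERN = re.compile(r"[a-z]{2}(-[a-z]{2})?", re.IGNORECASE)


def hreflang_tags(alternates: Dict[str, str], x_default: Optional[str] = None) -> str:
    """Render the full set of alternate links. The same output, including the
    page's own entry, belongs on every language version."""
    lines = []
    for code, url in sorted(alternates.items()):
        if not HREFLANG_PATTERN.fullmatch(code):
            raise ValueError(f"unsupported hreflang value: {code!r}")
        lines.append(f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">')
    if x_default:
        lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(lines)


print(hreflang_tags(
    {"en-us": "https://example.com/en-us/", "de": "https://example.com/de/"},
    x_default="https://example.com/",
))
```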

Conclusion

In conclusion, avoiding key technical SEO errors is essential for maintaining a strong online presence and achieving high search engine rankings.

Technical oversights such as neglecting HTTPS implementation, failing to index pages properly, handling structured data incorrectly, and overlooking mobile optimization can severely impede a website’s performance and visibility.

By diligently installing SSL certificates, ensuring accurate sitemap submissions, fixing broken links, and crafting descriptive meta elements, websites can fortify their SEO standing.

Moreover, adopting localization techniques to align with user language preferences and leveraging structured data effectively can further enhance a site’s relevance and authority.

Vigilance against these common pitfalls is critical for any digital entity aiming to thrive in the competitive landscape of search results.