SEO and Duplicate Content: What You Need to Know

Understanding SEO and Duplicate Content Implications

In the complex arena of search engine optimization, duplicate content is a prevalent issue that can significantly diminish a site’s search visibility and link equity.

This content conundrum not only baffles website owners but also hampers the efforts of search engine optimizers bent on climbing the search engine rankings.

To navigate these choppy waters, one must understand the intricate nature of SEO, distinguish between harmless content similarities and deleterious duplication, and adopt a robust content strategy.

LinkGraph’s unparalleled SEO services offer clarity and tactical solutions for these challenges.

Keep reading to unravel the complexities of duplicate content and leverage LinkGraph’s proven strategies for pristine, high-ranking web pages.

Key Takeaways

- Duplicate Content Can Dilute Link Equity and Negatively Impact Search Engine Rankings

- Canonical Tags Help Search Engines Identify Original Content Sources and Consolidate Ranking Power

- Employing Unique Product Descriptions and Blog Posts Is Crucial for Reducing Content Duplication

- LinkGraph Offers SEO Services and Tools Like SearchAtlas to Effectively Manage and Resolve Duplicate Content Issues

- Active Monitoring and Legal Protections Such as DMCA Takedowns Are Essential for Defending Original Content Online

Defining Duplicate Content in SEO Terms

As search engine optimizers carefully craft strategies to amplify search visibility, they must navigate the multifaceted issue of duplicate content with precision.

Defining duplicate content involves understanding its varied and complex nature, as well as its potential impact on SEO.

Essentially, it is the presence of identical or substantially similar content across multiple URLs—whether within the same domain or spread over several.

Duplication of this kind makes it difficult for search engines to discern which version is the original to display in search engine results pages (SERPs), and it can dilute link equity by spreading inbound links across several URLs.

The pursuit of clarity in this domain lays the foundation for learning how search engines distinguish between duplicates and the subsequent measures content creators and site owners can utilize to mitigate any negative implications.

Understanding the Basics of Duplicate Content

In the realm of search optimization, duplicate content refers to instances where similar or identical material appears on multiple web pages, whether within a single domain or across different websites. This repetition poses a significant challenge for website owners, as it can hinder a search engine’s ability to index and rank pages effectively.

Webmasters must discern the nuances of content duplication, considering how search engines like Google use sophisticated algorithms to detect identical text, images, and even HTML or other code structures. These duplications can sap a webpage’s search ranking potential, given that search engines prioritize unique, original information to present to users.

How Search Engines Identify Duplicates

Search engines employ advanced algorithms to detect duplicate content across the internet. These mechanisms analyze page elements, including text, media, and code structures, to identify overlaps that may exist between various web pages.

When identical or extremely similar content is found, Google, for instance, may use canonical tags and other signals to determine the more relevant, original source to showcase in its SERPs. The search giant’s relentless pursuit to offer users the most accurate search results drives its continuous refinement of duplication analysis.
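How a given search engine scores similarity is not public, so the following is only a minimal sketch of one well-known textbook technique: comparing pages by the overlap of their word shingles (Jaccard similarity). The function names, the shingle size of three words, and the sample page texts are assumptions made for illustration, not a description of Google’s algorithm.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        # Very short texts yield a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty texts are trivially identical
    return len(sa & sb) / len(sa | sb)


# Hypothetical page texts used only to exercise the functions.
page_a = "Our blue widget is durable, lightweight, and ships free."
page_b = "Our blue widget is durable, lightweight, and ships free today."
page_c = "Read our guide to choosing the right garden hose."

print(jaccard_similarity(page_a, page_b))  # near-duplicate pair
print(jaccard_similarity(page_a, page_c))  # unrelated pair
```

A score close to 1.0 suggests near-duplicate text, while a score near 0.0 suggests unrelated pages. Where to draw the line between the two is a judgment call for each site.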

The SEO Risks of Having Duplicate Content

In the intricate ecosystem of search engine optimization, the presence of duplicate content stands as a formidable obstacle for site owners aiming to sustain and improve their search engine rankings.

This troublesome issue not only confuses search engines as they attempt to index content accurately, but also has a tangible impact on a site’s link equity and page authority.

Addressing duplicate content is paramount, as it can directly result in a dilution of rankings, with search engines struggling to determine which version of the content is the most authoritative and deserving of a prominent placement in the SERPs.

How Duplicate Content Can Dilute Rankings

Duplicate content can lead to diminished search rankings as search engines grapple with assigning authority to multiple identical pages. When search engines spend crawl budget on several versions of the same content and split ranking signals among them, none of those versions may achieve prominent visibility.

One detrimental consequence of content duplication is the splitting of link equity among different pages rather than consolidating it to bolster a single, authoritative page. This dilution undercuts the potential impact of backlinks and weakens the perceived relevance and trustworthiness of the content in the eyes of search algorithms.

The Impact on Link Equity and Page Authority

The repercussions of duplicate content on link equity and page authority are substantial. Search engines allocate less value to non-original pages, spreading potential link equity thin across multiple pages rather than concentrating it on the original source.

- Link equity dispersal impedes the strength of inbound links, reducing the consolidating power that authoritative backlinks afford a single page.

- Page authority suffers when search engines struggle to assign credibility due to multiple instances of the same content, hindering the ability of any one page to rise in the rankings.

This diffusion of link power diminishes a webpage’s capacity to compete effectively in the SERPs. A page’s perceived reliability by Googlebot and users becomes muddied, undermining its potential for higher search engine rankings.

Strategies to Avoid Duplicate Content Issues

To fortify their site’s integrity and maintain robust search rankings, creators must adopt vigilant strategies that tackle the complexities of content duplication.

Precise steps are imperative for crafting unique, high-value pages that stand out amidst the vast expanse of the web.

Prioritizing unique product descriptions, category pages, and blog posts, implementing canonical tags judiciously, and planning how content is distributed across different URLs can shield websites from the adverse effects of duplicate content on SEO.

Groundbreaking approaches like these empower creators to navigate the intricate web of SEO, ensuring their content resonates uniquely with both Googlebot and the discerning visitor.

Creating Unique Content for Each Page

Efforts to drive unique content creation for each page are critical for any content strategy aiming to enhance search engine rankings. By tailoring each product description, blog post, and landing page with unique and original copy, website owners underscore the value and individuality of their content in the eyes of search engines and users alike.

Employing a writer who delivers distinct and engaging content resolves many duplicate content issues, fortifying the site’s SEO foundation. This practice ensures that each piece of content, be it on a category page, product page, or affiliate site, enriches the user experience with relevant, meaningful information:

| Content Type | Strategy | SEO Benefit |
|---|---|---|
| Product Page | Custom product descriptions | Enhanced search visibility |
| Category Page | Original category overviews | Reduced content duplication |
| Blog Post | Engaging, topical articles | Increased user engagement |

Integrating the unique value proposition of each product or service into the corresponding web page content not only assists in avoiding the pitfalls of duplicate content but also positions the retailer or service provider as a knowledgeable source in their domain. Thus, website owners leverage originality to not only circumvent SEO challenges but to also elevate their brand’s authority and credibility.

Implementing Canonical Tags Correctly

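LinkGraph’s SEO services emphasize the importance of implementing canonical tags correctly to manage duplicate content effectively. The canonical tag, a link element with rel="canonical" placed in a page’s head section, tells search engines which version of a URL is the preferred source to index and rank. Search engines treat it as a strong hint rather than a binding directive, but when it is honored it consolidates link equity and preserves page authority.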

Correct application of canonical tags involves a strategic approach, ensuring that variations of a URL, for instance, those generated through URL parameters like session IDs or tracking codes, are canonicalized to point towards the original content page:

- Utilize canonical tags to indicate the preferred URL to search engines, especially when identical content appears across multiple URLs.

- Employ the tag on each duplicate page to funnel search ranking power back to the original, enhancing the search visibility of that primary version.

- Ensure proper implementation by seeking expert advice from search engine optimizers, such as those at LinkGraph, to avoid missteps that could cause the wrong version of a page to be indexed and ranked.

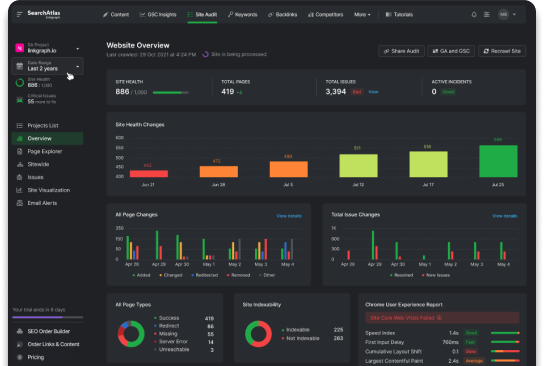

Seasoned developers and SEO specialists at LinkGraph understand that webmasters can navigate the complexities of content duplication with the judicious use of canonical tags. Aided by tools like SearchAtlas, they implement bespoke solutions to bolster a site’s technical SEO and safeguard its content strategy.
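The parameter-stripping idea behind canonicalization can be sketched in a few lines. This is a minimal illustration under stated assumptions: the list of ignorable parameters, the helper names, and the example URL are hypothetical, and a real site would need to decide for itself which parameters change page content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed NOT to change what the page displays.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign", "ref"}


def canonical_url(url: str) -> str:
    """Drop session/tracking parameters and the fragment; sort what remains."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # stable ordering so equivalent URLs compare equal
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def canonical_link_tag(url: str) -> str:
    """Build the link element to place in the head of each duplicate page."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_link_tag(
    "https://Example.com/shoes?utm_source=news&color=red&sessionid=abc123"
))
```

Every URL variant that differs only by ignored parameters produces the same tag, which is the consolidation effect described above.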

Managing Similar Content Across Multiple URLs

Effectively managing similar content across multiple URLs is a nuanced task that requires a clear understanding of how an ecommerce or affiliate site’s CMS works. LinkGraph’s SEO experts excel in configuring advanced settings to resolve content issues, such as setting up appropriate directives in robots.txt files or amending URL parameters to prevent the unnecessary proliferation of duplicate pages.

Moreover, LinkGraph’s adept deployment of its SearchAtlas SEO tool enables site owners to identify and address scraped content or Copyscape alerts. This level of meticulous attention ensures that every landing page or product description maintains its uniqueness, boosting overall search ranking while steering clear of the ranking losses that duplicate content can cause.
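Before deploying robots.txt directives, it can help to check which URLs a rule set would actually block. The sketch below uses Python’s standard-library parser with a hypothetical rule set and hypothetical URLs. Keep in mind that a crawler that is blocked from a page cannot read any canonical tag on it, so blocking and canonicalization are usually alternatives rather than complements.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of printer-friendly copies
# and internal search result pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /search
"""


def is_crawlable(url: str, user_agent: str = "*") -> bool:
    """Return True if the rules above allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


for candidate in (
    "https://example.com/products/blue-widget",
    "https://example.com/print/blue-widget",
):
    print(candidate, is_crawlable(candidate))
```

The standard-library parser matches rules by simple path prefix, so this sketch deliberately avoids wildcard patterns.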

Tools to Detect Duplicate Content on Your Website

Site owners and content creators understand the critical importance of identifying and resolving duplicate content to preserve the integrity of their search engine rankings.

A diverse array of tools is available to facilitate the detection and management of content overlap issues.

From exploring the insights available through Google Search Console to utilizing advanced plagiarism checkers and leveraging specialized SEO software for systematic detection, webmasters can maintain content originality and reinforce their SEO efforts.

These solutions arm creators with the necessary resources to ensure each web page contributes positively to their overall search visibility and ranking aspirations.

Utilizing Google Search Console for Insights

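Google Search Console provides indispensable insights for detecting duplicate content on a website. By analyzing the Index Coverage report (labeled “Page indexing” in current versions of Search Console), website owners can spot URLs flagged by Googlebot as duplicates, for example under statuses such as “Duplicate without user-selected canonical,” enabling them to take proactive steps in addressing content issues.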

Moreover, the same report can help surface duplicate URLs created by user-generated content, such as comment and forum pages. Tracking these empowers site owners to refine their content strategy continuously, ensuring that each web page serves to enhance the domain’s search engine standings.

Leveraging Plagiarism Checkers Online

LinkGraph’s SEO toolkit includes sophisticated plagiarism checkers, allowing website owners to quickly scan and pinpoint instances where their content might inadvertently echo that found elsewhere.

By utilizing these online resources, creators and webmasters can ensure the originality of every piece published, effectively mitigating any negative impact on their website’s search engine rankings due to duplicate content.

Using SEO Software to Automate Detection

LinkGraph’s SearchAtlas SEO tool stands as a beacon of sophistication within the realm of SEO software, offering automated detection capabilities that streamline the identification of duplicate content. This software enables webmasters and content creators alike to preemptively intercept and rectify duplication, thereby reinforcing the originality and SERP standing of their site’s content.

Through the integration of advanced algorithms, the SearchAtlas SEO tool not only expedites the process but also heightens the accuracy in pinpointing similar content across various pages. This level of precision is pivotal for sustaining search engine rankings and reflects LinkGraph’s commitment to facilitating robust and successful SEO campaigns for their clients.
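To make automated detection concrete, here is a minimal sketch of one simple approach: fingerprinting each page’s normalized text and grouping URLs that share a fingerprint. It is not a description of how SearchAtlas works internally. The function names and sample pages are hypothetical, and this method catches only exact duplicates after whitespace and case normalization.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicate_groups(pages: dict) -> list:
    """Map of URL -> body text in; lists of URLs with identical bodies out."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[fingerprint(body)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl results used only for illustration.
pages = {
    "/widgets/blue": "A durable blue widget.",
    "/widgets/blue?ref=home": "A durable  blue widget.\n",
    "/widgets/red": "A durable red widget.",
}

print(find_duplicate_groups(pages))
```

Each group returned is a candidate for consolidation, for instance by rewriting one page or pointing the variants at a single canonical URL.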

Handling External Duplicate Content Proactively

In a digital landscape where content is often the currency of relevance, safeguarding the integrity of one’s original material is imperative.

Externally duplicated content not only poses a significant issue for website proprietors but also for the health of their SEO endeavors.

Understanding content scraping and its effects, deploying effective strategies for protecting original content, and comprehending the role of copyrights and DMCA takedowns form a trifecta of defensive measures against the unauthorized replication of digital content.

These proactive steps are essential in maintaining the individuality of the content that underlines the credibility and ranking of a website.

Understanding Content Scraping and Its Effects

Content scraping is the practice of extracting information from websites, typically through automated bots, and republishing it without permission. This act not only infringes on copyright but also introduces duplicate content across the internet, muddying the digital footprint and search engine perception of the original creator’s work.

This nefarious activity bears direct consequences on a website’s SEO profile, as search engines like Google may struggle to discern the originator of the content, potentially dispersing the ranking power and diluting the search visibility that the authentic creator rightfully deserves.

Strategies for Protecting Original Content Online

LinkGraph’s suite of SEO services offers effective strategies for protecting original content online, a key facet in maintaining a site’s search ranking integrity. Rather than passively reacting to instances of content replication, website owners can employ proactive approaches such as adding detailed copyright notices, utilizing digital watermarking, or implementing advanced security features that deter scrapers from lifting content.

Moreover, engaging in active monitoring of the web through LinkGraph’s SearchAtlas SEO tool enables early detection and swift action against potential content infringements. This vigilant surveillance, coupled with prompt implementation of copyright claims or handling unauthorized content through legal channels, ensures the protection of a brand’s digital assets while mitigating the SEO risks associated with external duplicate content.

The Role of Copyrights and DMCA Takedowns

In today’s digital environment, copyrights serve as a critical safeguard for creators, granting them legal leverage to protect their original work from unauthorized use. When a third party replicates content without consent, the copyright holder has the right to issue a DMCA takedown notice, a powerful tool for compelling the infringer’s hosting service to remove the infringing material.

By effectively utilizing DMCA takedowns, website owners not only reclaim their content’s exclusivity but also fortify their website against potential SEO repercussions:

- Initiating a DMCA takedown restores the distinctiveness of the content directly associated with the original creator or manufacturer, reinforcing the material’s value to the users.

- Resolving instances of content theft swiftly through these legal measures protects the website’s search rankings and helps it maintain its standing within search engines.

Recovering From the Aftermath of Duplicate Content

Reclaiming digital territory in the aftermath of duplicate content is a strategic process requiring swift and informed actions by site owners.

It is an endeavor that begins with the meticulous identification and subsequent revision of redundant material within one’s digital estate.

Continual dialogue with search engines through timely reindexing requests is essential to expedite the restoration of a site’s credibility and search rankings.

Vigilance in monitoring the site’s ascent in search engine results pages (SERPs) post-recovery provides a quantitative measure of these rectification efforts.

This foundational recovery process is a testament to the resilience and adaptability required within the dynamic sphere of Search Engine Optimization (SEO).

Identifying and Revising Duplicate Content on Your Site

Site owners embark on the recovery path by first deploying tools to meticulously sweep their digital properties for duplicate content. With LinkGraph’s precise, data-driven solutions like SearchAtlas, identifying overlapping content becomes an exercise in efficiency, paving the way for constructive revisions.

Revamping identified duplicate material entails a scrupulous editing process, targeting each piece to infuse it with unique and distinctive elements. Through this dedicated reworking of content, professionals at LinkGraph ensure every web page aligns with SEO best practices and contributes positively to the overall integrity of the site’s search engine standing.

Communicating With Search Engines via Reindexing

Upon revising duplicate content, communication with search engines is critical to ensure the content is correctly indexed. This step is achieved via reindexing, a process in which site owners ask search engines to crawl their pages afresh so that the updated, unique content is taken into account.

The reindexing process effectively signals to Google and other search engines that changes have been made, which in turn prompts a re-evaluation of the page’s relevance and authority in the search rankings:

- Submitting a reindex request through tools like Google Search Console helps expedite the recognition of revised content by search algorithms.

- As Googlebot crawls the updated content, any previous negative impact of duplicate content on search visibility begins to dissipate, setting the stage for improved SERP performance.

Once reindexing is initiated, it is incumbent upon the site owner to track the updates in the indexing status, ensuring that the fresh, unique content aligns with search engines’ expectations and ranking criteria.

Monitoring Your Site’s Recovery in SERPs

In the professional arena of SEO, steadfast vigilance is required to observe a website’s recovery within search engine results pages. After implementing corrective measures for duplicate content, site owners should use the analytics at their disposal to examine ranking fluctuations and gauge the efficacy of their SEO strategies.

Their diligent scrutiny of performance metrics, such as impressions and click-through rates in SERPs, informs them about the audience’s engagement with the reestablished content. Through continuous monitoring, proprietors maintain an informed perspective on their site’s ascent, ensuring that revisions translate to concrete improvements in search engine visibility.
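Click-through rate is simply clicks divided by impressions, so a before-and-after comparison takes only a few lines. The figures below are invented purely to illustrate the arithmetic and do not represent real results.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a fraction; returns 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Made-up numbers for a single page, before and after a duplicate-content fix.
before = click_through_rate(clicks=40, impressions=2000)
after = click_through_rate(clicks=90, impressions=3000)

print(f"CTR before: {before:.1%}, after: {after:.1%}")
```

Comparing the same date-range length before and after the fix keeps the two figures comparable.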

Conclusion

Understanding the implications of duplicate content is crucial for SEO success.

Identical or strikingly similar content across multiple URLs can confuse search engines and dilute rankings by splitting link equity.

Proactively employing strategies like creating unique content for each page, correctly implementing canonical tags, and managing similar content across different URLs can mitigate these risks.

Additionally, tools like Google Search Console, plagiarism checkers, and SEO software like SearchAtlas help detect and manage duplicate content.

Taking action against external duplication, including understanding scraping effects and leveraging copyrights and DMCA takedowns, is also vital.

When content duplication does occur, identifying and revising the affected pages, requesting reindexing from search engines, and monitoring recovery are the key steps to restore a site’s search credibility.

Therefore, a strong grasp of SEO and the consequences of duplicate content is essential to maintain a unique digital presence and achieve optimal search engine rankings.