SEO Technical Crawl Frequency: Best Practices

Optimizing SEO Technical Crawl Frequency: Essential Best Practices

Optimizing a website’s crawl frequency is like tuning a sophisticated engine; each adjustment can significantly impact its visibility in the vast digital landscape.

At the core, technical SEO ensures that a site’s structure and content are primed for search engine crawlers to navigate and index efficiently.

By scrutinizing current crawl rates and refining accessibility elements, webmasters can create a hospitable terrain for search bots, potentially bolstering a site’s prominence.

Comprehensive mastery over factors like robots.txt, crawl budget, and internal links can be a game-changer.

Keep reading to uncover the technical intricacies that can lead your site to be crawled more frequently, ensuring your content stays fresh in the competitive race for rankings.

Key Takeaways

- Crawl Frequency Is Critical for SEO Visibility and Is Influenced by Multiple Factors Such as Content Updates, Site Structure, and Server Load

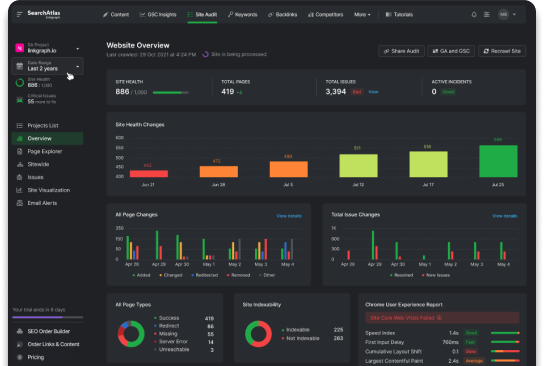

- LinkGraph Uses SearchAtlas SEO Software and Other Advanced Tools to Optimize Websites for Search Engine Crawlers, Enhancing SERP Rankings

- A Strategically Configured robots.txt File and a Well-Structured Sitemap Are Crucial for Guiding Search Engine Crawlers Efficiently

- Regular Content Updates Signal to Search Engines That the Site Is Active, Prompting Re-Crawling and Potential SERP Elevation

- Vigilant Adjustment to Search Engine Algorithm Changes Is Essential for Maintaining Optimal SEO Technical Crawl Frequency

Understanding SEO Technical Crawl Frequency

Within the intricate realm of search engine optimization, SEO technical crawl frequency emerges as a pivotal element that underpins the visibility of web content in search engine results.

At the core of how often and meticulously search engines scrutinize a website lies the concept of ‘Crawl Frequency,’ a parameter of significant interest to SEO professionals.

LinkGraph’s suite of SEO services addresses crawl frequency directly, helping ensure that its clients’ websites are crawled and indexed regularly.

This engenders a competitive edge by conforming to the criteria search engines use to prioritize crawling, thereby improving a website’s discoverability and relevance.

What Is Crawl Frequency?

Crawl frequency refers to the regularity with which search engine crawlers (also called bots or spiders) visit a website’s pages to discover and index new or updated content. This frequency determines how quickly changes on a website are recognized and reflected in the search engine results pages (SERPs).

Strategically influencing this aspect, LinkGraph leverages the capability of its SearchAtlas SEO software, enabling their clients’ sites to maintain optimal visibility. By ensuring content is indexed efficiently, LinkGraph’s adept SEO services nurture a site’s relationship with search engines, keeping it fresh and relevant in the digital landscape.

| SEO Aspect | Key Significance | Impact on Crawl Frequency |
|---|---|---|
| Content Updates | Indicates to search engines that the website is active and evolving. | Potentially increases crawl frequency to capture timely content changes. |
| Site Structure | Facilitates the crawler’s ability to navigate and index the site. | Enhances the efficiency of the crawl, encouraging more frequent visits. |
| Server Load | Affects the crawler’s ability to access the site without disrupting user experience. | A balanced server load can accommodate regular crawling without causing downtime. |

How Do Search Engines Prioritize Crawl Frequency?

Search engines employ a complex algorithm to determine the crawl frequency for each website, which hinges on factors such as the site’s update frequency, inherent value, traffic levels, and link profile. LinkGraph’s SEO services harness these determinants, aligning them with the search engines’ criteria to boost crawl rates, thereby enhancing content relevancy and search presence.

Moreover, LinkGraph employs advanced SEO reporting metrics and compelling SEO tools to analyze and refine the technical aspects of a website that contribute to its crawlability by search engine bots. Their meticulous approach ensures that a website’s architectural framework is conducive to more frequent and comprehensive crawls, a cornerstone for maintaining up-to-date SERP rankings.

Assessing Current Crawl Rates and Identifying Issues

Unveiling the dynamics of search engine crawl rates is a critical step in optimizing a website’s SEO potential.

LinkGraph’s SEO services employ meticulous techniques to evaluate current crawl frequencies, which act as a window into the search engine’s interactions with a site.

By analyzing server log files, the team at LinkGraph gleans valuable insights into the search engine crawlers’ behavior, pinpointing the frequency and depth of their visits.

In parallel, potential crawl errors are identified and systematically addressed, eliminating barriers that might impede a search engine’s ability to access and index web content effectively.

This foundational analysis is instrumental in crafting strategies that adhere to the complexities of search engine algorithms, thus ensuring a robust digital presence.

Analyzing Server Log Files for Crawl Insights

Analyzing server log files offers a pivotal glimpse into the actions of search engine crawlers on a website. LinkGraph’s adept SEO team sifts through this data, unpacking the intricacies of crawl patterns to optimize for both frequency and efficiency in indexing:

| Log Analysis Area | Insight Gathered | SEO Impact |
|---|---|---|
| Crawl Dates and Times | Understanding when search bots visit pages allows for strategic content updates. | Improves potential for fresher SERP listings and prioritized indexing. |
| HTTP Status Codes | Detects server responses indicating successful crawls or errors encountered. | Facilitates the resolution of crawl blocks, enhancing site accessibility for bots. |
| Resource Consumption | Pinning down the resources used during crawls assists in managing server load. | Helps prevent site overloads, ensuring continuous crawler access and uptime. |

Armed with insights into crawler behavior, LinkGraph tailors a website’s infrastructure to encourage more frequent and thorough inspection by search engines. This not only fine-tunes crawl frequency but also strengthens the website’s overall SEO foundation.
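
As a rough illustration of the log analysis described above, the following Python sketch counts crawler requests per day and per HTTP status code. It assumes the server writes the common "combined" access log format and identifies the crawler by a `Googlebot` token in the user-agent string; the sample log lines, the function name, and the regular expression are illustrative assumptions, not LinkGraph’s tooling. User-agent strings can be spoofed, so a production analysis would also need to verify the requesting IP addresses.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# ip - - [day/Mon/year:time zone] "METHOD path PROTO" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def summarize_bot_hits(lines, bot_token="Googlebot"):
    """Count crawler requests per day and per HTTP status code."""
    per_day = Counter()
    per_status = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip lines that do not parse or that come from other clients.
        if match is None or bot_token not in match.group("agent"):
            continue
        per_day[match.group("day")] += 1
        per_status[match.group("status")] += 1
    return per_day, per_status

# Invented sample lines for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2024:06:25:13 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Mar/2024:07:01:02 +0000] "GET / HTTP/1.1" 200 8042 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [11/Mar/2024:03:12:40 +0000] "GET / HTTP/1.1" 200 8042 "-" "{GOOGLEBOT_UA}"',
]

per_day, per_status = summarize_bot_hits(SAMPLE_LOG)
print(dict(per_day))     # crawler hits grouped by date
print(dict(per_status))  # crawler hits grouped by status code
```

A rising share of 4xx or 5xx responses in the per-status counts is the kind of signal that would prompt the crawl-error review discussed in the next section.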

Identifying Potential Crawl Errors

Identifying potential crawl errors is a critical step in the optimization process, a task that LinkGraph’s SEO experts approach with precision. They monitor a range of factors, from incorrect directives in a robots.txt file to pages that return 404 errors, as these can significantly obstruct search engine crawlers and dampen a website’s visibility.

With LinkGraph’s comprehensive SEO services, the detection and rectification of these errors are handled with expediency, ensuring that every aspect of a client’s digital presence is configured to promote optimal crawl frequency. This attention to technical detail is part of their commitment to building a seamless pathway for search engine crawlers, culminating in improved SERP positioning and digital authority.

Enhancing Site Structure for Improved Crawl Efficiency

In the context of fortifying SEO technical crawl frequency, the architecture of a website serves as a critical determinant.

Effective site structure and navigation are not only pivotal for user engagement but also play a substantial role in how search engines interact with and assess a digital entity.

A well-organized site facilitates the efficient traversal of search engine crawlers, ensuring that no content is overlooked during indexing processes.

To this end, LinkGraph’s SEO services emphasize the refinement of site structures and the strategic implementation of sitemaps, endorsing the importance of simplified navigation in establishing structured crawl paths that align with search engines’ sophisticated algorithms.

Simplifying Navigation for Better Crawlability

In the quest for superior SEO efficiency, LinkGraph recognizes that straightforward site navigation is fundamental to ensuring optimal crawlability by search engines. Their deft employment of SEO services works to refine navigation paths, effectively reducing complexity and enabling search engine crawlers to chart a course through site content with unparalleled ease.

LinkGraph’s SEO experts focus keenly on streamlining navigational elements, from logically structured menus to intuitive link placement, crafting a digital environment that fosters swift and systematic page indexing. This strategic approach underscores the significance of accessibility, thereby bolstering a site’s potential for enhanced crawl frequency and, subsequently, improved search rankings.

Utilizing a Sitemap for Structured Crawl Paths

A sitemap acts as a roadmap for search engine crawlers, detailing the structure of a website and the interconnection among its pages:

- By submitting an XML sitemap to search engines, LinkGraph ensures that their clients’ websites are comprehensively mapped out, thereby smoothing the path for crawlers to access all relevant pages.

- This critical SEO service by LinkGraph accentuates the importance of a sitemap, prompting search engines to index content efficiently, which is paramount for maintaining current SERP rankings.

LinkGraph’s utilization of sitemaps extends beyond mere submission; it involves the strategic organization of URLs and the prioritization of content, enabling crawlers to identify the most valuable pages swiftly. This approach helps essential sections of a website get visited more often by search engine bots, which in turn improves their chances of ranking well.
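
To make the idea of an XML sitemap concrete, here is a minimal Python sketch that builds one with the standard library, following the sitemaps.org `urlset` schema. The page URLs, dates, and the `build_sitemap` helper are hypothetical; a real site would typically generate this file from its CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML as a string for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod uses a W3C date such as YYYY-MM-DD.
        ET.SubElement(url, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")

# Hypothetical pages for illustration only.
PAGES = [
    ("https://example.com/", "2024-03-10"),
    ("https://example.com/blog/crawl-budget", "2024-03-08"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

The resulting file is usually served at a stable URL and referenced from robots.txt or submitted through the search engine’s webmaster tools.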

Leveraging Robots.txt to Guide Search Engine Crawlers

In the labyrinth of search engine optimization, meticulously directing search engine crawlers through a website’s myriad of pages is a subtle art.

The robots.txt file emerges as a maestro in this regard, deftly orchestrating crawler access to enhance a site’s SEO technical crawl frequency.

LinkGraph’s SEO services expertly calibrate robots.txt files, crafting tailored directives that not only streamline crawl efficiency but also protect the integrity of a site’s bandwidth and content priority.

This precise control underscores the importance of robots.txt in steering the digital traversal by search engine bots, laying the groundwork for the subsequent exploration of crafting effective robots.txt rules.

The Role of Robots.txt in Crawl Frequency

The robots.txt file stands as a crucial component in modulating SEO technical crawl frequency, serving to inform search engine crawlers which segments of a website should be accessed or ignored. LinkGraph’s SEO services excel at configuring this text file to communicate effectively with search bots, ensuring that they spend their crawl budget on pages that enhance a website’s SEO standing.

By optimizing rules within robots.txt, LinkGraph directs crawler traffic to the most influential areas of their clients’ websites, effectively boosting the frequency with which these key pages are indexed. Such strategic guidance provided by LinkGraph’s seasoned SEO professionals optimizes crawl efficiency, contributing to more accurate and timely search engine rankings.

Crafting Effective Robots.txt Rules

LinkGraph’s approach to refining the robots.txt file comprises a meticulous calibration of directives that precisely guide search engine crawlers. Their SEO experts craft rules that adeptly balance the need for thorough indexing with the conservation of server resources, ensuring that the most pertinent sections of a website receive the crawlers’ undivided attention.

Distinct from a one-size-fits-all methodology, LinkGraph ensures that each robots.txt rule is fashioned to complement the unique characteristics of the client’s website. This individualized attention to the nuances of a site’s content and architecture fosters a more efficient crawl process, thereby positively influencing the frequency and scope of search engine indexing activities.
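
One way to sanity-check a set of robots.txt rules before deploying them is to parse the file and test representative URLs. The sketch below uses Python’s standard `urllib.robotparser`; the rules, paths, and the `check_access` helper are hypothetical examples rather than recommended directives. Note that this parser applies the first matching rule in file order, whereas Google documents a most-specific-match rule, so results can differ for overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

def check_access(robots_txt, urls, user_agent="Googlebot"):
    """Map each URL to True (crawlable) or False (blocked) for a user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}

TEST_URLS = [
    "https://example.com/blog/post",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=shoes",
]

access = check_access(ROBOTS_TXT, TEST_URLS)
for url, allowed in access.items():
    print("ALLOW" if allowed else "BLOCK", url)
```

Running a check like this against a list of a site’s most important URLs helps catch a directive that accidentally blocks pages meant to be indexed.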

Managing Crawl Budget for Larger Websites

Fostering a robust online presence for sprawling websites involves navigating the complexities of ‘crawl budget’, a concept that holds critical implications for SEO effectiveness.

As larger sites contend with a myriad of pages and resources, understanding and optimizing the allocation of search engine crawlers’ time and attention becomes paramount.

Crawl budget combines how often crawlers visit a site with how many pages they inspect, shaping the website’s capacity to be indexed and ranked.

Therefore, LinkGraph’s comprehensive SEO services place particular emphasis on strategic measures that refine the use of this crawl budget.

Mastering these techniques not only bolsters the efficiency of search engines in surveying large-scale digital terrains but also seeks to maximize the desired impact in search result standings.

What Is Crawl Budget and Why It Matters

The term ‘crawl budget’ refers to the finite number of pages a search engine crawler can and will crawl on a particular website within a given timeframe. It is a crucial SEO parameter for larger websites, where the sheer volume of content makes efficient resource allocation a challenge for both webmasters and search engines.

Understanding crawl budget is essential because pages that are not crawled cannot be indexed, so it directly influences a website’s ability to maintain current, comprehensive, and visible listings in search results. SEO services such as those offered by LinkGraph must therefore manage this aspect carefully so that high-value and strategically important pages gain the crawler’s attention, fostering enhanced online visibility and authority.
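
A back-of-the-envelope calculation shows why crawl budget matters more as a site grows. The Python sketch below estimates how many days a crawler would need to fetch every URL once at a fixed daily rate. All figures are invented, and the model is a deliberate simplification: real crawlers revisit some pages far more often than others, so actual coverage usually takes longer.

```python
import math

def days_for_full_crawl(total_urls, crawled_per_day):
    """Rough lower bound on the days needed to fetch every URL once."""
    if crawled_per_day <= 0:
        raise ValueError("crawled_per_day must be positive")
    return math.ceil(total_urls / crawled_per_day)

# Hypothetical figures for illustration only.
full_site_days = days_for_full_crawl(total_urls=120_000, crawled_per_day=4_000)
after_cleanup_days = days_for_full_crawl(total_urls=80_000, crawled_per_day=4_000)

print(full_site_days, after_cleanup_days)
```

Under these assumed numbers, removing 40,000 low-value or duplicate URLs shortens the theoretical full-crawl cycle by a third, which is the intuition behind the optimization strategies that follow.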

Strategies to Optimize Your Crawl Budget

LinkGraph’s adept SEO services often focus on eliminating duplicate content and refining on-page SEO elements, such as creating concise, descriptive URL structures. By doing so, they prioritize valuable content for search engine crawlers, ensuring a website’s most critical pages receive the attention they deserve within the scope of a carefully managed crawl budget.

Furthermore, LinkGraph emphasizes the acceleration of page load times and the enhancement of the overall user experience to ensure swift crawler processing. Their dedication to optimizing the technical performance of a website prevents unnecessary crawl budget expenditure, thereby maximizing the potential for improved search rankings.
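
Duplicate content often arises from URL variants that serve the same page. As a sketch of how such variants might be detected, the Python snippet below normalizes URLs and groups those that collapse to the same form. The list of tracking parameters and the choice to treat a trailing slash as insignificant are assumptions; they need to be adjusted to how a given site actually routes requests.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def normalize(url):
    """Lowercase the host, drop tracking parameters, and strip a trailing slash."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )

def find_duplicate_groups(urls):
    """Group URLs that normalize to the same form; keep groups with 2+ members."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}

# Hypothetical crawl output for illustration only.
CRAWLED_URLS = [
    "https://example.com/shoes/",
    "https://example.com/shoes?utm_source=newsletter",
    "https://EXAMPLE.com/shoes",
    "https://example.com/shoes?color=red",
    "https://example.com/hats",
]

duplicates = find_duplicate_groups(CRAWLED_URLS)
for canonical, variants in duplicates.items():
    print(canonical, "<-", variants)
```

Groups reported this way are candidates for a canonical tag, a redirect, or a robots.txt rule, depending on which variant should remain crawlable.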

Maximizing Page Speed for Faster Crawling

In pursuit of fortifying a website’s SEO technical crawl frequency, page speed emerges as a cardinal factor.

Succinct load times are instrumental for search engine crawlers, as agility in accessing and indexing pages directly correlates with SEO outcomes.

Embracing this principle, industry professionals, including those at LinkGraph, recommend a comprehensive audit to unearth any underlying speed-related hurdles.

When followed by targeted enhancements, such an audit not only supports a superior user experience but also expedites the crawling process, a critical advantage in the competitive terrain of search visibility.

Audit Your Site for Speed Issues

In the sphere of SEO technical crawl frequency, diagnosing site speed issues is a necessity for businesses aiming to bolster their online footprint. LinkGraph’s SEO services provide meticulous site speed audits, a process designed to pinpoint areas that impede swift page loading, which can stall search engine crawlers and affect overall user experience.

Upon identifying bottlenecks in page performance, LinkGraph implements targeted optimizations to enhance page responsiveness. These refinements encompass compressing large image files, minimizing code bloat, and leveraging caching technologies, all critical steps toward achieving the rapid page speeds that both users and search engine crawlers favor.

Implementing Speed Enhancements

LinkGraph’s expertise in SEO extends to implementing speed enhancements that catalyze a website’s performance. Their specialized team conducts meticulous code optimization, stripping down unnecessary elements that bog down loading times, consequently providing a frictionless pathway for search engine crawlers.

Through the application of advanced compression algorithms and the strategic use of content delivery networks (CDNs), LinkGraph’s tailored SEO services significantly reduce latency, offering a swift digital experience that is advantageous for both site visitors and crawler efficiency.
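
To give a sense of what compression can save, the following Python sketch measures how much smaller a response body becomes under gzip, one widely supported HTTP compression scheme. The sample markup and the `compression_report` helper are illustrative assumptions; repetitive markup like this compresses unusually well, so real pages will typically show smaller savings.

```python
import gzip

def compression_report(payload: bytes, level: int = 6):
    """Compare the raw size of a response body with its gzip-compressed size."""
    if not payload:
        raise ValueError("payload must not be empty")
    compressed = gzip.compress(payload, compresslevel=level)
    return {
        "original_bytes": len(payload),
        "gzip_bytes": len(compressed),
        "saved_ratio": 1 - len(compressed) / len(payload),
    }

# Invented, highly repetitive markup for illustration only.
SAMPLE_HTML = b"<li class='product'>Example product</li>\n" * 500

report = compression_report(SAMPLE_HTML)
print(report)
```

In practice, compression is usually enabled in the web server or CDN configuration rather than in application code; a measurement like this simply helps estimate the benefit beforehand.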

Updating Content Regularly to Encourage Recrawling

Ensuring a consistent cadence of content updates stands as a linchpin in optimizing SEO technical crawl frequency.

Engaging in regular modification and enhancement of on-site material not only signals to search engines that a site is active and current but also plays a central role in bolstering SEO performance.

Through a focus on maintaining the freshness of content, LinkGraph’s comprehensive SEO services strategically propel the recrawling process, sustaining a continuous cycle of review and indexing that sharpens a site’s competitive edge in the digital ecosystem.

Frequency of Content Updates and SEO Impact

Renewing and refreshing online content regularly is a potent catalyst in signaling to search engines that a website is dynamic and persistently engaged with its subject matter. LinkGraph’s SEO services understand this dynamic, advocating a consistent schedule for content updates which, in turn, prompts search engine crawlers to re-assess and re-index web pages, thereby potentially elevating a site’s standing in SERPs.

Content revision serves as more than periodic maintenance; it’s a strategic maneuver in the competitive domain of SEO. The level of impact such updates render is substantial, as LinkGraph’s strategic initiative on content frequency translates directly to keeping a site’s information both pertinent and authoritative, effectively reinforcing its SEO footprint and ensuring sustained digital relevancy.

Ways to Keep Your Content Fresh

LinkGraph’s SEO services facilitate the periodic incorporation of topical, high-quality content tailored to audience demands, ensuring that websites remain vibrant and attract regular search engine attention. Through the strategic introduction of relevant articles, blog posts, and updates, client websites retain a state of perpetual revitalization that search engines consider favorable for more frequent indexing.

Moreover, LinkGraph champions the integration of multimedia enhancements and interactive features to sustain audience engagement and encourage deeper content exploration by search engine crawlers. This approach not only injects a refreshing variety into web pages but also signals to search engines an active and diverse content profile worthy of regular recrawling and indexing.

Monitoring and Adapting to Search Engine Algorithm Changes

In the ever-evolving landscape of search engine optimization, vigilance against the fluctuations of search engine algorithms is indispensable for maintaining and enhancing SEO technical crawl frequency.

Rigorous monitoring and swift adaptation to algorithmic shifts serve as the bedrock for optimizing a website’s accessibility to search crawlers.

By keeping abreast of the latest changes and recalibrating tactics accordingly, LinkGraph’s SEO services are tailored to navigate the complexities of algorithm updates, ensuring that strategies remain robust and aligned with the current digital milieu for ongoing optimization of clients’ online endeavors.

Keeping Track of Algorithm Updates

Remaining vigilant to the changing tides of search engine algorithms is pivotal for professionals aiming to safeguard and polish SEO technical crawl frequency. LinkGraph’s SEO services are underpinned by an unwavering commitment to detect and interpret the nuances of these algorithmic shifts, ensuring that their clients’ websites are optimized with the latest guidelines to retain search engine favor.

LinkGraph stands at the forefront, expertly navigating the ebb and flow of search engine algorithms with a proactive stance. Their SEO specialists keep a finger on the pulse of industry developments, adjusting their strategies as updates roll out and gaining the agility to capitalize on new opportunities that secure and elevate their clients’ digital standing.

Adjusting Strategies for Ongoing Optimization

In response to the mercurial nature of search engine algorithms, LinkGraph’s SEO services dynamically refine and recalibrate strategies to remain apropos. Their persistent optimization endeavors encompass vigilant monitoring, deftly adjusting content and technical SEO facets to align with the most current algorithmic standards.

These strategic adjustments are not mere reactionary measures but are part of a continual enhancement process that LinkGraph’s seasoned SEO professionals employ. Clients benefit from a proactive partnership where their digital presence is regularly fortified against the prevailing algorithmic winds, ensuring consistently high crawl frequency and search engine visibility.

Building a Consistent Internal Linking Structure

In the intricate ecosystem of SEO, the importance of a well-orchestrated internal linking network cannot be overstated, especially when it comes to facilitating SEO crawl frequency.

A meticulous array of internal links serves not just as the connective tissue of a website, guiding visitors seamlessly through a digital journey, but also as navigational beacons for search engine crawlers.

By implementing a structured framework of internal links, LinkGraph’s adept SEO services refine a website’s informational hierarchy, propelling the efficiency and depth of the SEO crawling process.

This subsection delves into the pivotal role of internal links for SEO crawling and unfolds the techniques to curate an impactful linking architecture.

The Importance of Internal Links for SEO Crawling

A robust internal linking framework is a keystone in optimizing SEO technical crawl frequency, as it streamlines the path search engine crawlers take through a website. LinkGraph’s comprehensive SEO services strategically deploy internal linking to reinforce the contextual relationships between pages, enhancing the search bots’ ability to discover and index content with improved precision and speed.

Internal links not only aid in establishing a site’s architecture but also distribute page authority throughout the interconnected web of content, a factor that the algorithms of search engines keenly evaluate. By optimizing internal linking structures, LinkGraph ensures that vital content is indexed more frequently, boosting the site’s visibility and prominence in SERPs.

Techniques to Create an Effective Linking Framework

To establish an effective linking framework, LinkGraph employs precision in the placement of internal links to ensure a logical flow of information across a website. They focus on crafting links that are contextually relevant to the content within each page, thereby strengthening the thematic connections across the site and improving its organizational structure.

Understanding the impact of anchor text in internal links, LinkGraph meticulously selects words that are descriptive and aligned with the target page’s primary keywords. This strategic choice not only clarifies the destination content for users but also reinforces keyword relevance for search engine crawlers. The overall process comes down to three steps:

- Identify key pages that serve as authoritative hubs within the site’s structure.

- Create a blueprint for internal linking that considers user journey and the flow of PageRank.

- Implement links that naturally fit into the page’s content, avoiding over-optimization or irrelevant connections.

LinkGraph’s approach to internal linking extends beyond mere connectivity; it rigorously ensures that each link serves a dual purpose of enhancing user engagement and improving crawl efficiency for search engines.
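
The effect of an internal linking structure on crawlability can be checked with a simple graph traversal. The Python sketch below computes each page’s click depth from the homepage using breadth-first search and lists pages that no internal link path reaches. The link graph, paths, and function names are hypothetical; a real graph would come from a site crawl.

```python
from collections import deque

def click_depths(link_graph, start="/"):
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(link_graph, start="/"):
    """Pages that exist in the graph but cannot be reached from start."""
    all_pages = set(link_graph) | {
        target for targets in link_graph.values() for target in targets
    }
    return sorted(all_pages - set(click_depths(link_graph, start)))

# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/crawl-budget", "/"],
    "/services": ["/services/audit"],
    "/blog/crawl-budget": ["/services/audit"],
    "/services/audit": [],
    "/old-landing-page": ["/"],
}

depths = click_depths(SITE_LINKS)
orphans = orphan_pages(SITE_LINKS)
print(depths)
print(orphans)
```

Pages with a large click depth, or pages that appear in the orphan list, are natural candidates for new internal links from the hub pages identified in the first step.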

Conclusion

Optimizing SEO technical crawl frequency is fundamental for strengthening a website’s search engine visibility and maintaining up-to-date SERP rankings.

By carefully managing crawl frequency factors such as content updates, site structure, and server load, LinkGraph’s SEO services promote regular indexing of their clients’ websites, providing them with a competitive advantage.

Analyzing server log files and identifying crawl errors enable the crafting of strategies tailored to search engine algorithms, enhancing discoverability and relevance.

Simplifying site navigation, using sitemaps, and guiding search bots with robots.txt files further improves crawl efficiency.

Large websites benefit from managing crawl budgets wisely, while maximizing page speed is crucial for faster crawling.

Regular content updates encourage recrawling, keeping the site’s information fresh.

Additionally, continual adaptation to search engine algorithm changes and a consistent internal linking structure are vital for sustained SEO success.

Ultimately, these best practices contribute significantly to a website’s ability to rank well and remain relevant in the ever-changing digital landscape.