The ABCs of Technical SEO

Understanding the Foundations: The ABCs of Technical SEO

Technical SEO lays the cornerstone for websites to flourish in Google’s intricate realm, weaving through an expanse of algorithms and ranking signals.

It embarks on a meticulous journey, beginning with the optimization of site speed, ensuring the seamless crawlability for search engine bots, and progressing towards proficient indexing practices.

This domain requires a mastery over the elements like structured data, canonical tags, and a mobile-responsive design—all integral for bolstering a brand’s digital presence.

Security measures such as implementing HTTPS play a pivotal role in safeguarding user data, which in turn, enhances trust and authority.

In this article, explore how LinkGraph’s SEO Services and SearchAtlas SEO software become pivotal allies in navigating the vast, often opaque sea of technical SEO strategies.

Key Takeaways

- Technical SEO Ensures a Website Is Configured for Optimal Crawling and Indexing by Search Engine Bots

- Site Speed and Mobile Optimization Significantly Impact User Experience and Search Engine Rankings

- Secure Sites With HTTPS Gain Ranking Advantages Due to Prioritizing User Security

- Canonical Tags and Structured Data Are Crucial in Managing Content and Enhancing SERP Presence

- XML Sitemaps Assist Search Engines in Discovering and Indexing All Valuable Content Efficiently

Demystifying Technical SEO for Beginners

Embarking on a journey into the intricate world of Technical SEO, aspirants and professionals alike confront the challenge of optimally aligning their digital assets with the meticulous scrutiny of search engines.

At the intersection of website optimization and the algorithms that determine search performance, Technical SEO emerges as a fundamental pillar in securing a robust online presence.

It is integral to distinguish it from its counterparts, on-page and off-page SEO, as each serves unique, yet interwoven functions in a comprehensive strategy.

Understanding the nuances and intricacies of Technical SEO lays the groundwork for websites to achieve not just visibility, but also to provide search engine bots with an impeccable experience, analogous to the clear, seamless journey that users deserve.

Understand What Technical SEO Entails

Technical SEO is the specialized aspect of optimization that focuses on enhancing the infrastructure of a website. It ensures a site is accessible, indexable, and comprehensible to search engine bots, facilitating better crawling and indexing.

In essence, Technical SEO concerns itself with a myriad of elements ranging from site speed to security protocols:

- A robust site architecture and seamless navigation to aid search engine crawlability

- Optimized page speed for swift loading, impacting user engagement and search engine rankings

- Implementation of SSL/TLS (HTTPS) to secure user data and instill trust

- Efficient management of content through canonical tags to prevent issues of duplicate content

The Role of Technical SEO in Search Performance

The significance of Technical SEO in determining search performance cannot be overstated. It directly influences a website’s capability to not only appear in search results but to rank commendably, ensuring visibility to the appropriate audience.

Such optimization fine-tunes a website to communicate effectively with search engines, leading to more accurate indexing and securing placement in the search engine results pages (SERPs) that align with the searcher’s intent.

| Technical SEO Element | Impact on Search Engine Ranking |
|---|---|
| Site Architecture | Facilitates efficient search engine crawling and indexing |
| Page Speed | Improves user experience and reduces bounce rates |
| SSL (Secure Sockets Layer) | Ensures security, earning trust from users and search engines |
| Canonical Tags | Prevents duplicate content issues, clarifying the original source to search engines |

Differences Between Technical, On-Page, and Off-Page SEO

Grasping the distinctions among Technical, On-Page, and Off-Page SEO is pivotal for crafting a comprehensive optimization strategy. Technical SEO lays the foundation with a focus on a website’s backend, ensuring its architecture permits search engines to crawl and index efficiently, whereas On-Page SEO pertains to the content and elements within web pages that can be optimized for search engines and users.

On the other hand, Off-Page SEO revolves around external elements not housed on the actual website, predominantly involving link building and establishing domain authority. These efforts are aimed at reinforcing the site’s reputation and significance in the broader digital ecosystem, thereby enhancing its appeal to both users and search engine algorithms:

| SEO Type | Focus Area | Optimization Tactics |
|---|---|---|
| Technical SEO | Website Backend | Site architecture, crawlability, site speed, SSL |
| On-Page SEO | Content & User Experience | Keyword optimization, headers, meta descriptions, images |
| Off-Page SEO | External Signals | Backlinks, social media presence, guest blogging |

Starting With Site Speed Optimization

Entering the realm of Technical SEO, one quickly discovers the criticality of site speed optimization.

Quick load times stand as an essential element in the tapestry of SEO, directly correlating to user satisfaction and subsequent search engine rankings.

Businesses and experts alike must employ reliable tools to gauge page speed, uncovering areas poised for enhancements.

With an array of strategies available to accelerate website performance, prioritizing speed optimization is an effective step toward fortifying a website’s position in the competitive digital landscape.

Importance of Quick Load Times

Site speed serves as a decisive factor in a visitor’s decision to stay or abandon a web page. The modern user expects immediacy, and a delay in loading can lead to increased bounce rates and lost traffic.

A website with rapid page load times significantly enhances user experience and can improve search engine rankings, as Google considers page speed a ranking factor:

- Reduced page load times correlate with higher user retention and engagement.

- Quick loading is essential for mobile users who may be on limited data plans or have less patience for slow content delivery.

- Optimizing site speed is a proactive measure against the competition, particularly in industries where a split-second can define user loyalty.

Tools for Measuring Page Speed

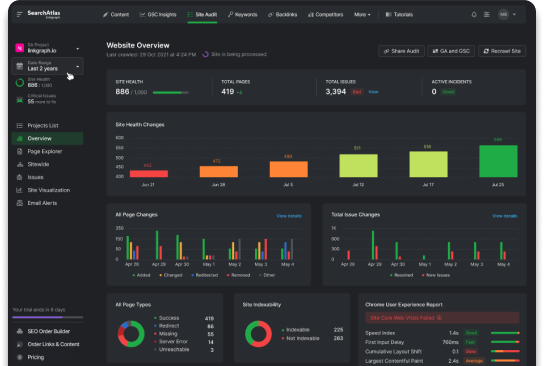

Evaluating a website’s page speed is a pivotal assessment for those keen on refining their Technical SEO framework. Professionals turn to tools such as SearchAtlas SEO software to analyze load time and obtain actionable insights. These tools represent more than mere number crunchers; they serve as audit partners, guiding one through the available optimization possibilities.

| Page Speed Analysis Tool | Features | Benefits |
|---|---|---|
| SearchAtlas SEO software | Comprehensive page speed metrics | Insightful data for targeted optimizations |

Armed with precise measurements from SearchAtlas SEO software, one can instigate improvements that transcend simple page experience enhancements. The results garnered from such in-depth analysis not only spotlight strengths and weaknesses but also funnel through to improved search engine standings, where speed is a heralded metric, ever so consequential in the pursuit of SEO excellence.

Tips to Improve Website Speed

One strategic method to enhance website speed is the compression of images and files. This approach ensures that large assets do not burden loading times, simplifying the path for search engine bots and maintaining a brisk browsing experience for visitors.

Another critical tactic entails minimizing HTTP requests by streamlining code and consolidating multiple JavaScript or CSS files into single bundles. This refinement reduces the number of round trips between the browser and server, and streamlined code also shrinks the amount of data transferred, culminating in improved page speed metrics.
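As a rough illustration of both tips, the Python sketch below bundles two stylesheets into one payload and compares its raw and gzip-compressed sizes. The stylesheet contents and the helper names `bundle` and `gzip_size` are made up for the example; on a real site, compression is usually switched on in the web server or CDN rather than done by hand.

```python
import gzip

def bundle(assets: list[str]) -> str:
    """Concatenate several text assets (e.g. CSS files) into one payload."""
    return "\n".join(assets)

def gzip_size(text: str) -> tuple[int, int]:
    """Return (raw_bytes, gzipped_bytes) for a text payload."""
    raw = text.encode("utf-8")
    return len(raw), len(gzip.compress(raw))

# Hypothetical stylesheets standing in for real files on disk.
stylesheets = [
    ".header { margin: 0; padding: 0; color: #333; }\n" * 50,
    ".footer { margin: 0; padding: 0; color: #666; }\n" * 50,
]

combined = bundle(stylesheets)  # one request instead of two
raw_bytes, compressed_bytes = gzip_size(combined)
print(f"{raw_bytes} bytes raw, {compressed_bytes} bytes gzipped")
```

Repetitive text such as CSS tends to compress very well, which is why text compression is usually one of the cheapest speed wins available.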

Ensuring Your Site Is Easily Crawlable

Grasping the complexities of Technical SEO begins with the fundamental task of ensuring that a website is presented in a way that search engine bots can effortlessly traverse and understand its content.

The architecture of a website must be meticulously crafted to accommodate the non-human visitors that hold the keys to a site’s visibility.

Insights into these automated explorers’ behaviors guide the creation of effective robots.txt files, critical for controlling their access, and underscore the importance of a clean URL structure, which provides clarity and direction in the vast digital maze.

Each element operates symbiotically to signal search engines, amplifying the capacity for pages to be crawled with precision and purpose.

Understanding How Search Engines Crawl Pages

Within Technical SEO, search engines deploy bots—Google’s famed Googlebot being a prime example—to navigate the digital expanse of the internet. These automated crawlers systematically evaluate and index web pages by following links from one page to another.

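As these search engine bots crawl, they adhere to the directives in a website’s robots.txt file, which specifies the areas of a site crawlers may request and the areas they should stay out of. Note that robots.txt governs crawling rather than indexing: a disallowed URL can still be indexed if other pages link to it, so pages that must stay out of search results need a noindex directive instead. The crawl proceeds as follows: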

- Crawlers begin by requesting the robots.txt file to identify allowed and disallowed paths within the site.

- Following these directives, bots traverse a site, assessing links, content, and site structure for relevance and indexing.

- The crawler’s findings contribute to the construction of the search engine’s index, which becomes the basis for serving search queries.

Crucially, the efficiency and comprehensiveness of this process depend on the clarity of the signals a website sends, from meta tags to structured data, all orchestrating a harmonious crawl experience.
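The crawl sequence described above can be sketched in a few lines of Python. This is a simplified model, not how Googlebot is actually implemented: the site lives in an in-memory dictionary, and the bot name `ExampleBot`, the page contents, and the URLs are all invented for the illustration. The robots.txt rules are evaluated with the standard library's `urllib.robotparser`.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def crawl(start_url, pages, robots_txt, agent="ExampleBot"):
    """Breadth-first crawl over an in-memory site, honoring robots.txt."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    seen, queue, crawled = {start_url}, deque([start_url]), []
    while queue:
        url = queue.popleft()
        # Skip URLs the rules disallow or that do not exist on the site.
        if not rules.can_fetch(agent, url) or url not in pages:
            continue
        crawled.append(url)
        parser = LinkExtractor()
        parser.feed(pages[url])
        for href in parser.links:
            absolute = urljoin(url, href)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled

# Hypothetical three-page site and robots.txt.
SITE = {
    "https://example.com/": '<a href="/blog/">Blog</a> <a href="/admin/">Admin</a>',
    "https://example.com/blog/": '<a href="/">Home</a>',
    "https://example.com/admin/": "<p>private</p>",
}
ROBOTS = "User-agent: *\nDisallow: /admin/\n"

print(crawl("https://example.com/", SITE, ROBOTS))
```

The `/admin/` page is discovered through a link but never fetched, because the robots.txt rules disallow it.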

Creating an Effective robots.txt File

An effective robots.txt file acts as a gatekeeper that dictates the pathways search engine crawlers may access and which they should avoid. By meticulously crafting this file, site administrators direct the flow of Googlebot and other search engine bots, enabling a more streamlined and efficient indexing process.

Proper utilization of the robots.txt file supports a site’s SEO by keeping crawlers away from irrelevant or duplicate sections, so that crawl activity concentrates on content-rich and significant pages. When bots adhere to this protocol, crawl budget is spent where it matters, which helps important pages get discovered and refreshed sooner.

- Robots.txt files guide search engines in prioritizing important content for crawling.

- Strategic use of these files conserves the crawl budget by limiting access to non-essential areas.

- Effective robots.txt management contributes to a cleaner, more focused indexing of a website.
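To make this concrete, here is a minimal Python sketch that assembles a robots.txt file and then checks the rules with the standard library's `urllib.robotparser`. The disallowed paths, the sitemap URL, and the helper name `build_robots_txt` are assumptions chosen for the example; the right rules depend entirely on the site in question.

```python
from urllib.robotparser import RobotFileParser

def build_robots_txt(disallow, sitemap_url=None, agent="*"):
    """Assemble a simple robots.txt with one user-agent group."""
    lines = [f"User-agent: {agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    if sitemap_url:
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"

# Hypothetical low-value sections to keep crawlers out of.
robots_txt = build_robots_txt(
    ["/cart/", "/search"],
    sitemap_url="https://example.com/sitemap.xml",
)
print(robots_txt)

# Verify the rules behave as intended before deploying the file.
checker = RobotFileParser()
checker.parse(robots_txt.splitlines())
product_allowed = checker.can_fetch("Googlebot", "https://example.com/products/widget")
cart_allowed = checker.can_fetch("Googlebot", "https://example.com/cart/")
print(product_allowed, cart_allowed)
```

Checking the rules programmatically before publishing helps catch an overly broad `Disallow` line, which is one of the easier ways to hide an entire site from crawlers by accident.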

The Significance of a Clean URL Structure

A pristine URL structure is a cornerstone of a website’s foundation, dictating the ease with which search engine bots can parse and index its contents. Reflecting a site’s hierarchy and content categorization, clear and logical URLs act as navigational beacons, leading bots smoothly through the digital terrain and bolstering the indexing process with strategic efficiency.

Forging URLs with a keen eye on brevity and descriptiveness not only benefits the mechanical spiders but also resonates with users, crafting a bridge between technical compliance and intuitive, user-centered design. These SEO-friendly URLs thus become instrumental in harmonizing the dual objectives of delighting the visitor and satisfying the algorithm’s voracious appetite for order and clarity.
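One common way to keep URLs short and descriptive is to derive them from page titles. The Python sketch below shows one possible approach; the function names `slugify` and `build_url` and the example domain are illustrative, and many content management systems ship their own equivalent.

```python
import re
import unicodedata

def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Strip accents and drop characters that have no ASCII equivalent.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Collapse every run of non-alphanumeric characters into one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")

def build_url(base: str, *segments: str) -> str:
    """Join slugified segments so the URL mirrors the site hierarchy."""
    return base.rstrip("/") + "/" + "/".join(slugify(s) for s in segments)

print(build_url("https://example.com", "Blog", "The ABCs of Technical SEO!"))
# https://example.com/blog/the-abcs-of-technical-seo
```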

Why Indexing Is Crucial in Technical SEO

In the tapestry of Technical SEO, the thread of indexability is paramount; it is the metric by which the accessibility and readability of a website are judged by search engines.

As businesses strive to bolster their online presence, understanding the mechanics behind how search engines catalogue their web pages is essential.

In this exploration of indexability, the focus shifts to devising robust strategies to maximize a site’s indexing potential, along with untangling the common indexing issues that can hinder site performance.

Effective resolution of these pitfalls is the lynchpin in cementing a website’s authority and relevance in the eyes of search algorithms and user queries alike.

Defining Indexability for Your Website

Indexability within the framework of Technical SEO is the measure of a website’s capacity to be analyzed and stored within a search engine’s database. A meticulous approach to indexability ensures that every valuable page rises to the notice of search engine algorithms, enhancing the potential for high rankings on the Search Engine Results Pages (SERPs).

When professionals enhance indexability, they are essentially paving the way for content to be discovered and served up in response to relevant queries. The intricacies of indexability involve several stages, each one a critical step in the journey from user search to website visit:

- Ensuring that pages are not blocked by the robots meta tag or the robots.txt file.

- Employing clean, descriptive URLs to facilitate the search engine’s understanding of page content.

- Utilizing sitemap files to highlight new content or pages of particular importance to the crawlers.
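The first check in the list, confirming that a page is not blocked by a robots meta tag, is easy to automate. The following Python sketch parses a page's HTML and reports whether a `noindex` (or the equivalent `none`) directive is present. The class and function names are hypothetical, and the check covers only the meta tag, not the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def is_indexable(html: str) -> bool:
    """Return False when the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives and "none" not in parser.directives

blocked = '<head><meta name="robots" content="noindex, follow"></head>'
open_page = "<head><title>Services</title></head>"
print(is_indexable(blocked), is_indexable(open_page))
```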

Strategies to Enhance Your Site’s Indexing

LinkGraph’s SEO Services posits that the implementation of XML sitemaps serves as a critical strategy for enhanced indexing, offering search engine bots a blueprint of a site’s most valuable pages. This encourages a thorough and orderly indexing process, reinforcing a website’s visibility in search engine rankings and facilitating users in connecting with the content most pertinent to their search needs.
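For readers who have not seen one, an XML sitemap is a short, regular file that can be generated programmatically. The Python sketch below builds one with the standard library, following the public sitemaps.org format; the URLs and dates are placeholders, and the helper name `build_sitemap` is an assumption of this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build a sitemap document from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages; a real sitemap would list the site's indexable URLs.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/technical-seo/", "2024-01-10"),
])
print(sitemap_xml)
```

The finished file is typically served from the site root and referenced from robots.txt so that crawlers can find it.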

Another strategy advocated by top-tier SEO experts involves a rigorous, ongoing SEO audit process. With the assistance of tools like SearchAtlas SEO software, SEO specialists can continuously monitor and refine a site’s technical elements. This constant vigilance ensures search engines have unhindered access to index-worthy content, solidifying a site’s standing in the competitive digital marketplace.

Common Indexing Issues and How to Resolve Them

Indexing obstacles can significantly thwart a website’s SEO efforts, obscuring content from appearing in Google’s search results. One prevalent issue is the inadvertent blocking of pages through improper use of the noindex directive in robots meta tags or errors in the robots.txt file, which can bar search engines from indexing vital pages.

Rectifying these indexing challenges requires a strategic approach. First, a meticulous audit must identify any improperly configured directives that prevent indexing. Then, corrective measures, such as updating the robots.txt file or altering meta tags, must be precisely executed to restore the search engine’s access:

- Conduct an extensive SEO audit to detect and appraise indexing issues.

- Adjust the robots.txt and meta tags to ensure they accurately guide search engine bots.

- Validate changes through tools like SearchAtlas SEO software to confirm correct crawling and indexing.

Unlocking the Potential of Structured Data

Within the extensive domain of Technical SEO lies the game-changing concept of structured data, a powerful tool that primes web pages for enhanced interpretation and performance in the digital ecosystem.

Structured data is crucial for understanding the content’s context, leading to more intelligent and featured presentations within search results.

It consists of implementing schema markup, a collaborative endeavor fostering richer search experiences.

This standardized vocabulary serves as a beacon to search engines, broadcasting the nature of the content in a machine-readable format.

The utilization of structured data effectively amplifies the prominence of web pages, enabling enhancements like rich snippets which capture user attention and improve click-through rates.

LinkGraph’s expert SEO services underscore the importance of leveraging structured data as part of a cutting-edge SEO content strategy, inviting businesses to revolutionize how their information is crawled, indexed, and displayed across search engines.

What Is Structured Data and Its Benefits

Structured data orchestrates content in a format that search engines can effortlessly digest, utilizing standardized schemas to convey context. This potent form of data communication enhances a site’s integrity in the digital space, providing a clear-cut interpretation of page contents.

The wondrous benefit of structured data lies in its ability to elevate a website’s content to the forefront of SERPs through rich snippets, knowledge graphs, and other advanced features. These augmented results not only attract users’ attention but also offer a precise portrayal of what to expect, increasing the likelihood of engagement:

- Rich snippets embellish search listings with additional visual cues such as star ratings and images.

- Structured data informs knowledge graphs, presenting users with a quick reference and understanding of the topic at hand.

- Implementing schema markup facilitates a direct path to higher visibility and interaction within SERPs.

Implementing and Testing Schema Markup

Implementing schema markup is a savvy move by webmasters, acting as a signal booster in the cacophony of the internet. By inserting specific codes that detail elements like events, products, and reviews, websites provide search engines with a detailed map of their content landscape.

Testing schema markup is equally critical, ensuring that the implemented code is accurately understood by search engines. Tools like SearchAtlas SEO software offer validation features that confirm the operational efficacy of schema, encapsulating a meticulous approach to enhancing a website’s communicative clarity:

- Analyze the website’s content to identify the most relevant schema markup types.

- Add the appropriate schema markup to the website’s HTML.

- Utilize testing tools to validate and debug the markup for optimal search engine recognition.
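The second step, adding markup to the page's HTML, is often done with JSON-LD, a format in which schema.org data is embedded in a script tag. The Python sketch below generates such a tag for an article. The author name and publication date are invented placeholders, `article_jsonld` is a hypothetical helper, and the properties a given search feature requires should be confirmed against the search engine's own documentation.

```python
import json

def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Render schema.org Article data as a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )

# Author and date are placeholders for illustration only.
markup = article_jsonld("The ABCs of Technical SEO", "Jane Doe", "2024-01-15")
print(markup)
```

The resulting tag is placed in the page's HTML and can then be run through a validation tool, as the third step in the list describes.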

Boosting SEO With Rich Snippets

Rich snippets serve as potent enhancements to a website’s search engine results, captivating potential visitors with a preview of the information available, beyond the standard title and description. By embedding this structured data, websites gain a competitive edge, as rich snippets can significantly increase the real estate a listing occupies on a SERP, driving higher click-through rates and boosting SEO performance.

Embracing the incorporation of rich snippets, LinkGraph’s SEO services enable businesses to communicate the essence of their content directly within search listings. This strategic presentation sets the stage for a website’s content to stand out, making it not only more visible but also more appealing to users who rely on these enriched cues when making the decision to click through to a website.

Mastering the Use of Canonical Tags

As professionals delve into the sophisticated realm of Technical SEO, the concept of canonicalization stands out as a cornerstone of managing web content.

Prudent use of canonical tags is essential to ward off the detrimental effects of duplicate content, a common plight that can dilute a brand’s SEO potency.

It is paramount for SEO practitioners to grasp the intricacies of setting canonical URLs and to proactively address canonicalization errors.

Understanding these elements plays a quintessential role in shaping a website’s authority and ensuring its messaging remains unequivocal in the eyes of search engines.

Prevent Duplicate Content Issues

In the arena of Technical SEO, duplicate content often emerges as a formidable foe, undermining the integrity of a website’s SEO standing through content redundancy. Through the strategic application of canonical tags, SEO specialists instruct search engines on the prime version of similar or identical pages, thus focalizing the value and mitigating the risks of dilution in authority.

Canonicalization remains a cardinal function in maintaining a website’s thematic coherence, steering clear of inadvertent competition between pages that could confuse search engines and splinter SEO efforts. By consistently applying canonical tags across web content, practitioners bolster the clarity of their site’s narrative, ensuring that only the most pertinent versions are apprehended and displayed in search results:

| SEO Concern | Canonical Tag Function | Outcome |
|---|---|---|
| Duplicate Content Risk | Clarifies primary content version | Consolidates page authority and relevance |
| SEO Effort Fragmentation | Prevents competition between similar pages | Enhances focused indexing and search performance |

How to Set Canonical URLs

Establishing canonical URLs is a vital task for ensuring that search engines interpret web content correctly. It begins with the selection of a preferred URL as the canonical version for pages with duplicated or highly similar content: an action that signals to search engines the URL to consider as the original or most relevant page for indexing and ranking purposes.

Once a canonical URL has been chosen, it is crucial to communicate this choice to search engines by properly implementing canonical tags in the HTML head of each duplicate or similar page. This tag, in the form of a simple line of code, points effectively to the chosen URL, thereby consolidating search signals and preserving the positional integrity of the content.

| Action | Purpose | Benefit |
|---|---|---|
| Select canonical URL | Identify original content | Prevent SEO issues from duplicated content |
| Implement canonical tag | Guide search engines to preferred URL | Consolidate search ranking signals |
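The "simple line of code" in question is a `<link rel="canonical">` element in the page head. The Python sketch below renders that tag and audits a page for the most common mistakes: a missing tag, several conflicting tags, or a tag pointing at the wrong URL. The URL and the helper names `canonical_tag` and `audit_canonical` are assumptions for the example.

```python
from html import escape
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""
    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def canonical_tag(url: str) -> str:
    """Render the canonical link element for the preferred URL."""
    return f'<link rel="canonical" href="{escape(url, quote=True)}">'

def audit_canonical(html: str, expected: str) -> str:
    """Classify a page as 'ok', 'missing', 'multiple', or 'mismatch'."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(parser.canonicals) > 1:
        return "multiple"
    return "ok" if parser.canonicals[0] == expected else "mismatch"

# Hypothetical preferred URL for a category page with sorted variants.
PREFERRED = "https://example.com/shoes/"
variant_page = f"<html><head>{canonical_tag(PREFERRED)}</head><body>Sorted view</body></html>"
print(audit_canonical(variant_page, PREFERRED))
```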

Identifying and Fixing Canonicalization Errors

LinkGraph’s SEO services emphasize the pivotal step of identifying canonicalization errors as a beacon for maintaining a flawless SEO strategy. Through meticulous inspections of web content, their experts uncover any missteps in canonical tag usage—errors that, if uncorrected, could lead to content being disregarded by crawlers and, as a result, diminished search engine rankings.

Upon discovering discrepancies within canonical tags, the team at LinkGraph springs into action, swiftly rectifying any inaccuracies to ensure that search engines are directed towards the appropriate, authoritative content. Their precision in correcting these errors is essential in preserving the site’s SEO integrity, allowing businesses to maintain a consolidated and potent online presence.

The Importance of a Mobile-Friendly Website

In today’s digital landscape, adapting to the ubiquitous nature of mobile devices is non-negotiable, particularly within the ambit of Technical SEO.

A mobile-friendly website is no longer a mere advantage but a fundamental necessity, as the surge in mobile traffic undeniably influences a website’s search engine ranking.

Exploring this facet of Technical SEO requires a focus on mobile optimization and its seismic impact on SEO, a comprehensive approach to testing for mobile responsiveness, and the integration of best practices for mobile SEO to ensure that the mobile user’s journey is as fluid and satisfactory as the desktop experience.

The Impact of Mobile Optimization on SEO

Mobile optimization is a critical aspect of Technical SEO that has a significant impact on search engine visibility and user engagement. With the majority of searches now performed on mobile devices, Google has moved to mobile-first indexing, confirming the pivotal role of mobile-friendly websites in SEO strategies.

| SEO Component | Mobile Optimization Impact |
|---|---|
| Search Engine Ranking | Enhanced by mobile-friendly user experiences |
| User Engagement | Increased through optimized mobile site speed and design |
| Indexing | Prioritized by search engines for mobile-responsive content |

Ensuring that websites are optimized for mobile not only caters to user preferences but also aligns with search engine algorithms that reward responsive designs. This optimization directly affects a site’s search engine results page (SERP) performance, as mobile compatibility becomes an indisputable element in achieving favorable SEO outcomes.

Testing for Mobile Responsiveness

Testing for mobile responsiveness is an imperative process for ensuring that a website delivers a consistent user experience across all device formats. This level of responsiveness is critical to retaining user engagement and demonstrating the versatility of a web presence to search engines.

Professionals leverage a suite of sophisticated tools and protocols to simulate various screen sizes and resolutions, confirming that design and functionality remain intact regardless of the user’s device. The ability to deliver a seamless experience on mobile is indicative of a website’s commitment to accessibility and is rewarded by search engines with favorable ranking considerations:

| Aspect of Mobile Responsiveness | Tool Usage | SEO Significance |
|---|---|---|
| Design Adaptability | Testing across multiple devices | Improved user interface and navigation |
| Functionality Consistency | Interactive simulations of user interactions | Maintained efficient user experience |
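Before testing on real devices, one inexpensive first check is whether a page declares a responsive viewport, since responsive layouts generally rely on a `<meta name="viewport">` tag that includes `width=device-width`. The Python sketch below performs only that narrow check; it is no substitute for device testing, and the names used are hypothetical.

```python
from html.parser import HTMLParser

class ViewportParser(HTMLParser):
    """Capture the content of the <meta name="viewport"> tag, if any."""
    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.viewport = attr.get("content") or ""

def has_responsive_viewport(html: str) -> bool:
    """Return True when the page sets width=device-width in its viewport."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return False
    settings = {part.strip().lower() for part in parser.viewport.split(",")}
    return "width=device-width" in settings

responsive = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
fixed_width = '<head><meta name="viewport" content="width=1024"></head>'
print(has_responsive_viewport(responsive), has_responsive_viewport(fixed_width))
```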

Best Practices for Mobile SEO

Adhering to mobile SEO best practices is paramount in ensuring an optimal browsing experience that reflects the growing predominance of mobile users. Key practices include implementing a responsive design that dynamically adjusts to various screen sizes and integrating touch-friendly navigation that enhances usability on mobile devices.

Moreover, focusing on mobile loading speed by optimizing images, leveraging browser caching, and minimizing code is crucial, as mobile users often browse on the go with expectations for quick information access. Presenting content that is concise and easily consumed on small screens further advances the user’s mobile experience and contributes to SEO success.

| Mobile SEO Best Practice | Purpose | Impact on SEO |
|---|---|---|
| Responsive Design Implementation | Provides consistent user experience across devices | Improves usability and site engagement, positively affecting rankings |
| Touch-Friendly Navigation | Facilitates easier interaction for mobile users | Decreases bounce rates, signaling quality to search engines |
| Optimized Mobile Loading Speed | Enhances performance and accessibility on mobile networks | Directly correlates with user retention and search engine favorability |
| Concise and Accessible Mobile Content | Presents information efficiently for mobile consumption | Encourages engagement and sharing, improving SEO visibility |

Security Enhancements With HTTPS

In the foundational landscape of Technical SEO, the role of website security is no mere afterthought but an essential component, epitomized by the adoption of HTTPS.

Recognizing secure sites as a benchmark of trust and quality, Google has unequivocally prioritized the safety of user data in its ranking algorithm.

As businesses transition from HTTP to the more secure HTTPS protocol, the process involves not only a meticulous implementation but also diligent upkeep, including the consistent renewal and maintenance of SSL certificates.

This proactive approach to cybersecurity is a testament to the integral nature of secure connections in fostering a trustworthy environment for users, subsequently influencing a website’s search engine standing.

Why Secure Sites Matter to Google

Google’s prioritization of secure sites is not without reason; it treats user security and trust as important elements within its ranking criteria. Recognizing the prevalence of data breaches and online threats, Google uses HTTPS, the secure form of HTTP, as a ranking signal, albeit a lightweight one compared with content quality and relevance.

To ensure a safer browsing experience, Google’s algorithm favors sites with HTTPS, using this as a signal of credibility and reliability within its search results. This strategic emphasis incentivizes website owners to adopt security measures that protect user data against interception or alteration by attackers:

- User protection becomes a building block of Google’s mission to provide a secure internet landscape.

- HTTPS adoption signifies an investment in privacy, bolstering a website’s authority and trustworthiness in the eyes of both users and the search engine itself.

Transitioning From HTTP to HTTPS

Transitioning from HTTP to HTTPS is a crucial step in reinforcing a website’s security profile. This migration is not merely a preference but a strategic necessity, enhancing the encryption and integrity of data as it transfers between users’ browsers and web servers. The move towards HTTPS is indicative of a company’s dedication to safeguarding visitor information and upholding stringent security standards.

LinkGraph’s SEO Services play an instrumental role in guiding businesses through this seamless transition. Their technical expertise ensures that all aspects of the switch, from installing SSL certificates to updating internal links and adjusting server settings, are handled with precision. This meticulous attention to detail sustains the website’s SEO value while establishing a fortified, secure browsing environment for users.
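One part of the switch, updating internal links, lends itself to a small script. The Python sketch below rewrites `http://` links that point at the site's own host while leaving third-party links untouched. The host name and the function name `upgrade_internal_links` are assumptions for the example, and the sketch covers link rewriting only; server-side redirects from HTTP to HTTPS still need to be configured separately.

```python
import re

def upgrade_internal_links(html: str, host: str) -> str:
    """Rewrite http:// links to https:// for one host only."""
    # The lookahead requires the host to end right after its name, so
    # look-alike hosts such as example.com.evil.org are not rewritten.
    pattern = re.compile(
        r"http://(?=" + re.escape(host) + r"(?![\w.-]))",
        re.IGNORECASE,
    )
    return pattern.sub("https://", html)

# Hypothetical page fragment with one internal and one external link.
page = (
    '<a href="http://example.com/about">About</a> '
    '<a href="http://other.org/">Partner</a>'
)
print(upgrade_internal_links(page, "example.com"))
```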

Keeping Your SSL Certificate Updated

Maintaining an up-to-date SSL certificate emerges as a critical aspect of website security, acting as a stark delineator in the perception of a website’s credibility. LinkGraph’s SEO Services acknowledges that the continuous renewal of SSL certificates is a fundamental task, reinforcing the trust users and search engines place in a secure and authentic web environment.

The vigilance in updating SSL certificates cannot be overstated, as it prevents the lapse of encrypted connections and ensures that sensitive data remains protected from vulnerabilities. Focused on the intricate balance of SEO and cybersecurity, LinkGraph holds expertise in integrating SSL updates seamlessly, thereby upholding the end-user’s assurance and a website’s standing in search engine evaluations.
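Certificate expiry can be monitored with a short script rather than a calendar reminder. The Python sketch below uses the standard library's `ssl` module to read a certificate's expiry date and compute the days remaining. The 30-day renewal threshold and the function names are assumptions of this example, and the demonstration at the bottom uses fixed sample timestamps so that it runs without a network connection.

```python
import socket
import ssl
import time
from typing import Optional

def fetch_not_after(host: str, port: int = 443) -> str:
    """Return a live certificate's notAfter string (requires network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]

def days_until_expiry(not_after: str, now: Optional[float] = None) -> int:
    """Whole days between 'now' and the certificate's expiry time."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return int((expires_at - current) // 86400)

def needs_renewal(not_after: str, now: Optional[float] = None,
                  threshold_days: int = 30) -> bool:
    """Flag certificates that expire within the (assumed) threshold."""
    return days_until_expiry(not_after, now) <= threshold_days

# Offline demonstration with fixed sample timestamps.
sample_expiry = "Jan  5 09:34:43 2018 GMT"
sample_now = ssl.cert_time_to_seconds("Dec  6 09:34:43 2017 GMT")
print(days_until_expiry(sample_expiry, sample_now))
print(needs_renewal(sample_expiry, sample_now))
```

In practice, `fetch_not_after("example.com")` would supply the expiry string for a real host, and the check could run on a schedule.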

Crafting a Logical Site Architecture

In the intricate realm of Technical SEO, the construction of a logical site architecture stands as a pivotal endeavor, underpinning the usability and search engine discoverability of a website.

A well-organized site hierarchy not only streamlines navigation for users but also facilitates search engines in comprehending the layout and relevance of content, which can notably affect a site’s SERP positioning.

By delving into effective techniques for structuring website architecture and implementing breadcrumb navigation, businesses can sculpt an intuitive and conducive digital environment that enhances user experience and amplifies SEO efforts.

Benefits of an Intuitive Site Hierarchy

An intuitive site hierarchy is not merely a pathway for users to navigate through a website; it’s an intricate map that search engines employ to determine the relationship between pages and content value. This organization of information hierarchically is imperative as it ensures that users find what they need efficiently, while also allowing search engines to prioritize the importance of pages, leading to improved visibility in search rankings.

The establishment of a logical site structure has profound implications for user engagement: it confers a sense of predictability and familiarity, enabling users to navigate with ease and confidence. This consistency in user experience can significantly bolster the likelihood of conversion, transforming casual visitors into steadfast customers:

- An orderly site architecture helps users locate desired content quickly.

- A clear hierarchy encourages engagement by reducing the complexity of user interactions.

- Intuitive navigation tends to go hand in hand with longer visits and lower bounce rates, engagement patterns that often accompany stronger search performance.

Techniques for Effective Website Architecture

Mastering the implementation of effective website architecture is a nuanced art that is central to the success of Technical SEO. Emphasis on a cohesive and logical structure ensures that users and search engine bots alike can navigate a website with relative ease, contributing to an optimized page hierarchy that supports improved search engine rankings.

Professionals in the field advocate for maintaining a shallow depth in website architecture, where key pages are accessible within just a few clicks from the homepage. This method not only simplifies the user’s quest for information but also streamlines the indexing process for search engines, ultimately fortifying the website’s visibility and accessibility in the digital ecosystem.
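To make the idea of shallow depth concrete, the following Python sketch measures click depth with a breadth-first search over a site’s internal links. The link map, the function names, and the three-click limit are hypothetical values chosen for illustration; in practice the link data would come from a crawl.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(
    links: dict[str, list[str]], max_depth: int = 3, home: str = "/"
) -> list[str]:
    """List pages that need more than `max_depth` clicks to reach."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_depth)


# Hypothetical internal-link map: page -> pages it links to.
SAMPLE_LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-1/comments/"],
    "/blog/post-1/comments/": ["/archive/old/"],
}

print(pages_deeper_than(SAMPLE_LINKS, max_depth=3))
```

Pages flagged by a check like this are candidates for a link from the homepage or from a category page, which shortens the path for both users and crawlers.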

How to Use Breadcrumb Navigation Effectively

Effective use of breadcrumb navigation augments site architecture by providing straightforward pathways for users to trace their journey back to the homepage or other key areas of the site. Breadcrumbs serve as an elemental guide, allowing visitors to understand their current location within the site’s structure and to navigate to previously viewed pages with simplicity and precision.

LinkGraph’s SEO Services advocate for breadcrumbs as a vital tool in reinforcing both the user experience and a site’s SEO structure. By methodically integrating breadcrumb trails, a website’s navigational clarity is elevated, encouraging user engagement and facilitating the indexing process for search engine bots:

- Implementing breadcrumb navigation improves site usability and discoverability.

- Strategic placement and design of breadcrumbs enhance user orientation and work best when the trail mirrors the site’s URL and category structure.

- Search engines can display breadcrumb trails in search results, and clear navigation supports crawling, both of which can help search visibility.
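One common way to describe a breadcrumb trail to search engines is schema.org `BreadcrumbList` structured data in JSON-LD form. The Python sketch below shows how such markup might be generated; the function name, page names, and example.com URLs are placeholders assumed for this example.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs.

    The pairs are listed in order from the homepage to the current page.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical trail for a blog article.
markup = breadcrumb_jsonld(
    [
        ("Home", "https://www.example.com/"),
        ("Blog", "https://www.example.com/blog/"),
        ("Technical SEO", "https://www.example.com/blog/technical-seo/"),
    ]
)
print(markup)
```

The resulting JSON would typically be embedded in the page inside a `<script type="application/ld+json">` element, alongside the visible breadcrumb links.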

Creating and Submitting XML Sitemaps

In the intricate choreography of Technical SEO, the creation and submission of XML sitemaps play a critical role in ensuring that search engines can not only find, but also intelligently navigate a website’s content.

As foundational components, sitemaps act as comprehensive guides that lead search engine bots through the myriad pathways of a site’s structure.

Mastering the art of sitemap generation and submission is a strategic move that enhances the visibility and indexation of web content, making it indispensable for professionals seeking to elevate a site’s SEO framework.

The Role of Sitemaps in Technical SEO

XML sitemaps are intrinsic to Technical SEO because they give search engines a coherent list of a website’s URLs, aiding efficient crawling. By listing the pages a site wants crawled, optionally with details such as when each was last modified, these sitemaps support comprehensive coverage by Googlebot and its counterparts, reinforcing the discoverability of the site’s key sections.

Through strategic creation and submission of XML sitemaps, LinkGraph’s SEO services enhance a site’s potential to be fully assessed, fully indexed, and appropriately ranked. This process streamlines the indexing phase for search engines, ensuring that recently updated or critical content is recognized promptly, and no valuable page is overlooked in the vast digital landscape of the web.

How to Generate an XML Sitemap

Generating an XML sitemap is a methodical process, vital for presenting website content clearly to search engine bots. Professionals can begin this task with SearchAtlas SEO software, which catalogs the pages within a site’s domain and generates a structured, comprehensive XML file that serves as a map for search engines.
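For readers curious about what such a file contains, the sketch below builds a small sitemap by hand with Python’s standard library, following the sitemaps.org 0.9 format. It is not how SearchAtlas works internally; the function name, URLs, and dates are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> bytes:
    """Serialize (url, last_modified) pairs as a sitemaps.org XML document.

    `last_modified` is expected in W3C date format, e.g. '2024-01-15'.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages; a real list would come from a crawl or a CMS export.
sitemap_xml = build_sitemap(
    [
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/blog/", "2024-02-01"),
    ]
)
print(sitemap_xml.decode("utf-8"))
```

The output would normally be saved as `sitemap.xml` and hosted at a public URL on the site so that search engines can fetch it.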

Once an XML sitemap is crafted, the next step is to submit it directly to search engines through their respective webmaster tools, such as Google Search Console. Submission informs search engines of the sitemap’s existence, which expedites content discovery, crawling, and indexing and helps keep a website’s information in search results current and accurate.

Submitting Your Sitemap to Search Engines

Upon the construction of an XML sitemap, its submission to search engines marks a critical milestone in Technical SEO. This step ensures that the intricate layout of a website’s content is readily accessible for efficient crawling and indexing.

LinkGraph’s SEO Services streamline the process of submitting sitemaps, assisting clients in navigating to search engine webmaster portals and registering their sitemap URLs. This action solidifies the site’s communication with search engines, paving the way for enhanced content visibility and bolstered search rankings:

- Navigate to the search engine’s webmaster tools interface.

- Locate the sitemap submission section within the platform.

- Enter the URL of the hosted XML sitemap file and confirm its successful submission.

Completing these submissions is essential, not merely as procedural adherence but as a strategic SEO maneuver. It signals that the website is ready for comprehensive crawling by search engines and improves the likelihood of accurate indexing and representation within search results.
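Before submitting, it can help to check the file against the limits in the sitemaps.org protocol: at most 50,000 URLs and 50 MB uncompressed per sitemap, with every `<loc>` an absolute URL. The Python sketch below is one possible pre-submission check; the function name and the wording of the messages are assumptions for this example.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB uncompressed


def sitemap_problems(xml_bytes: bytes) -> list[str]:
    """Return a list of human-readable problems; empty means none were found."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")

    root = ET.fromstring(xml_bytes)
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]

    if not locs:
        problems.append("no <url><loc> entries found")
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the 50,000 limit")

    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
    return problems
```

A sitemap that passes a check like this is still subject to each search engine’s own processing, so the status shown in the webmaster tools interface after submission remains the authoritative confirmation.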

Conclusion

Understanding the fundamentals of Technical SEO is crucial for any website aiming to improve its online presence and search engine rankings.

It involves optimizing a website’s infrastructure to ensure it is accessible and indexable by search engine crawlers, which significantly enhances the site’s visibility to target audiences.

Key components such as site speed, secure connections (HTTPS), logical site architecture, and effective use of canonical tags and structured data provide a solid foundation for search engines to crawl and index content more efficiently.

A mobile-friendly design and the creation and submission of XML sitemaps further help ensure that the site adapts to modern user behaviors and remains comprehensively mapped for search engines.

In essence, grasping Technical SEO’s ABCs is indispensable for achieving a robust and competitive digital stance in today’s search-driven world.