Two Examples of How One Line of Code Could Kill Your SEO

Catastrophic Code: How a Single Line Can Destroy SEO – Two Case Studies

The delicate balance of search engine optimization hinges on myriad factors, one of which is the often-overlooked influence of a website’s underlying code.

Missteps in coding, seemingly innocuous to the untrained eye, can unleash catastrophic effects on a site’s SEO performance, sending its rankings plummeting overnight.

Through examining two stark cases of such coding calamities, LinkGraph reveals the undeniable correlation between a single line of code and the potential unraveling of diligent SEO efforts.

Grasping the intricacies of these scenarios equips businesses with the foresight to avert digital disasters.

Keep reading to uncover how precision in coding is not just a best practice, but an SEO imperative.

Key Takeaways

- Catastrophic Code Can Severely Impact a Website’s SEO Performance and Visibility

- Vigilant Code Reviews and Audits Are Critical to Preventing and Rectifying SEO-detrimental Errors

- LinkGraph’s SEO Services Provide Comprehensive Strategies and Tools for Recovery From Coding Errors

- Integrating SEO Best Practices Into Development Processes Is Crucial for Maintaining Search Engine Rankings

- Balancing Code Efficiency With SEO Objectives Is Key for a Website’s Long-Term Digital Success

Understanding the Impact of Flawed Code on SEO

The delicate balance of search engine optimization can be swiftly undermined by the slightest error in a website’s source code, making the concept of ‘catastrophic code’ a pivotal concern for professionals aiming to maintain digital prominence.

While search engines scrutinize web content to deliver the most relevant results, the underlying code functions as the foundation for SEO efficacy.

Because flawed code can directly damage SEO, one must acknowledge not only the need for immaculately crafted code but also how heavily modern SEO relies on that precision.

This examination of catastrophic code serves to underline the inherent link between clean code and search engine algorithms, setting the stage for a deeper exploration into how SEO can be compromised by overlooked coding inaccuracies.

Defining Catastrophic Code

Catastrophic code epitomizes the type of coding errors that can wreak havoc on a website’s search engine optimization efforts. It encompasses any piece of code, from an errant noindex tag to an improperly implemented canonical link, that inadvertently instructs search engines to overlook or misinterpret vital content, with grave consequences for online visibility.

This form of detrimental coding is especially insidious because it often goes unnoticed until substantial damage to the site’s SEO rankings has occurred. Detectable through meticulous code audits, catastrophic code is a silent saboteur of digital success, demanding vigilance from developers and SEO professionals alike.
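
To make the definition concrete, here is a minimal sketch of how the two signals named above (a robots meta tag and a canonical link) could be read out of a page. The page markup, the `example.com` URL, and the helper names `IndexingSignals` and `indexing_signals` are illustrative assumptions, not part of any LinkGraph tooling.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots_directives = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.robots_directives += [
                d.strip().lower() for d in content.split(",") if d.strip()
            ]
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")


def indexing_signals(html):
    """Return (robots directives, canonical URL) found in the HTML."""
    parser = IndexingSignals()
    parser.feed(html)
    return parser.robots_directives, parser.canonical


# Hypothetical blog page head: the meta line asks search engines to drop the
# page, and the canonical link points at the homepage instead of the page itself.
page = """
<head>
  <title>Blog</title>
  <meta name="robots" content="noindex, follow">
  <link rel="canonical" href="https://example.com/">
</head>
"""

directives, canonical = indexing_signals(page)
print(directives)  # ['noindex', 'follow']
print(canonical)   # https://example.com/
```

Either line would be easy to miss in a visual check of the rendered page, which is why the text stresses code audits rather than eyeballing the site.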

How SEO Relies on Clean Code

The resilience of a website in search engine rankings frequently hinges on the meticulous construction of its code. Search engines are predicated on algorithms that parse the structural integrity and semantic clarity provided by clean, well-organized code, thereby rewarding those digital properties that adhere to web standards and best practices with superior visibility.

Search engine crawlers depend on clear and concise coding to effectively index and rank web pages. The employment of proper HTML5 tags, judicious use of title tags, and precise implementation of alt attributes for images are essential components that facilitate search engines in comprehending content and ensuring it reaches the intended audience.

| SEO Factor | Impact of Clean Code | Consequences of Catastrophic Code |
|---|---|---|
| Web Standards Compliance | Enhances search engine trust and credibility. | Leads to indexing issues and diminished search presence. |
| Structured Data Implementation | Empowers rich snippets and improved visibility in SERPs. | Results in missed opportunities for enhanced search features. |
| Site Speed Optimization | Boosts user experience and reduces bounce rates. | Increases loading times, negatively affecting user retention. |

Case Study 1: A Disastrous Noindex Directive

In the labyrinth of search engine optimization, even a minor misstep can escalate into a substantial setback.

The tale of a single noindex directive serves to exemplify this precariously fine line.

Ostensibly inconsequential, the noindex tag, misplaced within the source code, can act as the inadvertent gatekeeper, preventing search engines from showcasing a website’s content.

This case study unfolds the sequence of events from identifying the hidden error to witnessing the swift and punishing impact it levied against a site’s SEO stature.

The narrative does not end at the plunge of metrics, but leads into the adaptive measures that reclaimed the site’s rankings, imparting valuable insights to shield against similar digital pitfalls.

Identifying the Seemingly Innocuous Error

Within the realm of digital marketing, the impact of a noindex tag in the wrong place is akin to a concealed mine in the battlefield of SEO: potent and unexpected. It was during a routine website audit that LinkGraph’s SEO services unveiled such a code anomaly lurking within the meta tags of their client’s well-frequented blog section.

The discreet position of the noindex directive belied its destructive nature: it instructed search engines to exclude from their index the content that was meant to be a cornerstone for attracting organic traffic. The error, although diminutive, had effectively hidden pages from Google’s search results, a revelation that underscored the need for meticulous website analysis, such as that performed by LinkGraph’s comprehensive SEO services.

| SEO Analysis Phase | Discovery | Consequences | Corrective Action |
|---|---|---|---|
| Initial SEO Audit | Uncovering the misplaced noindex tag. | Dramatic drop in website traffic and visibility. | Immediate removal of the noindex directive. |
| Post-Audit Monitoring | Assessment of crawl errors and indexation status. | Potential loss of ranking for key keywords. | Continued refinement of meta tags and monitoring SERPs. |

The Immediate SEO Fallout Observed

The inadvertent inclusion of a noindex tag has immediate ramifications, as evidenced by plummeting visibility in Google’s search results. The directive signals to search engines that the content is not intended for indexing, so the afflicted pages are dropped from search engine results pages once they are recrawled, reducing the site’s ability to attract new visitors.

Metrics post-implementation of the flawed tag revealed a sharp decline in user engagement, with significant dips in both page visits and session durations. For the client, this translated into an erosion of digital traction, demonstrating the devastating speed at which coding errors can translate into tangible SEO devaluation.

Steps to Recovery and Lessons Learned

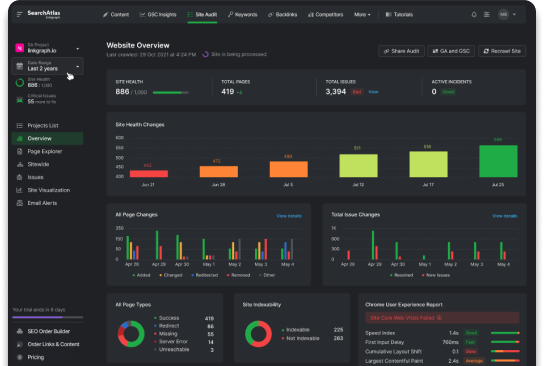

Recovering from the debacle of a misplaced noindex tag necessitated swift navigation through SEO restoration practices. LinkGraph’s rigorous re-evaluation and correction of meta tags, complemented by their SearchAtlas SEO software, prompted recrawling and subsequent reindexing, catalyzing a resurgence in search visibility and traffic metrics for the client’s site.

The valuable lesson distilled from this incident accentuates the critical nature of ongoing surveillance and prompt remedy of SEO elements. The meticulous approach employed by LinkGraph ensures not only that such errors are corrected but also that strategies are in place to prevent recurrence, safeguarding a consistent trajectory towards SEO excellence.

Case Study 2: The Hidden Robots.txt Blunder

An often-overlooked aspect of search engine optimization is the robots.txt file, an unassuming text document that wields colossal influence over a website’s relationship with search engine crawlers.

In the second case study, we dissect a perplexing scenario where a single line within the robots.txt file precipitated an unforeseen and substantial SEO crisis.

Through this deep dive, we will shed light on the scourge of one miscoded directive, measure its profound effect on a site’s online prominence, and chart the meticulous journey towards reviving SEO health that LinkGraph’s SEO services expertly orchestrated.

This case reflects the subtle yet significant power of robots.txt in shaping web visibility and illuminates the critical nature of precision in each strand of SEO’s intricate web.

Unearthing the Single Line That Caused Havoc

The calamitous impact of a seemingly minor robots.txt misconfiguration became painfully evident when LinkGraph’s SEO services uncovered a single disallow directive that mistakenly blocked search engines from accessing critical sections of a client’s website. This tiny but formidable line of text was acting like a prohibitive barrier, one that had inadvertently veiled important resources from the algorithmic gaze of search spiders.

This blunder, unearthed during a methodical SEO audit, brought to light the comprehensive nature of the oversight, thereby stressing the importance of thorough reviews of even the smallest SEO factors. LinkGraph’s adept analysis spotted the problematic instruction and promptly initiated corrective measures, underscoring their commitment to identifying and solving complex SEO issues that can hinder a website’s search engine performance.
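
The effect of one disallow line can be illustrated with Python’s standard `urllib.robotparser` module. This is a sketch under stated assumptions: the two robots.txt bodies, the `example.com` URLs, and the helper `can_crawl` are hypothetical, since the article does not quote the client’s actual file. Python’s parser also may not match every search engine’s interpretation of the rules, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, agent="Googlebot"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical intended rules: keep crawlers out of the admin area only.
intended = """\
User-agent: *
Disallow: /admin/
"""

# Hypothetical broken rules: a single changed line now blocks the whole site.
broken = """\
User-agent: *
Disallow: /
"""

url = "https://example.com/products/widget"
print(can_crawl(intended, url))  # True
print(can_crawl(broken, url))    # False
```

The two files differ by a handful of characters, yet the second one tells every compliant crawler to stay away from every path on the site.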

Quantifying the Impact on Web Visibility

The fallout from the robots.txt oversight revealed itself in the form of suppressed content and diminished search presence, both quantifiable by LinkGraph’s advanced SEO reporting insights. A precise and swift analysis showed a direct correlation: as the site vanished from search engine view, vital metrics such as organic traffic and keyword rankings experienced a corresponding decline.

- Conduct an in-depth investigation to determine the breadth of content affected by the robots.txt file.

- Analyze the downturn in keyword rankings and organic traffic metrics.

- Implement immediate updates to the robots.txt directives to regain search engine visibility.

Through LinkGraph’s metrics-driven approach, the client’s visibility in Google search results was no longer shrouded in mystery, allowing the identification of specific areas for recovery. The tangible evidence of the misconfiguration emphasized the necessity for vigilant monitoring and the need for an adept SEO service that can expertly navigate and resolve such critical issues.

Navigating the Path to Restoring SEO Health

LinkGraph’s adept prowess in SEO service provision was instrumental in rectifying the robots.txt catastrophe, with their SEO experts deploying an incisive protocol tailored to reestablish the site’s standing in search engine results. Through deliberate adjustments to the file and expert liaison with search engine representatives, LinkGraph ensured a prompt revocation of the prohibitive directives and facilitated a swift recovery of the site’s digital footprint.

The commitment to precision and detail demonstrated by LinkGraph’s SEO team expedited the client’s journey back to prominence in Google search results. Strategic oversight and persistent follow-up in the wake of the revisions to the robots.txt file culminated in reclaimed search rankings, underscored by a renewed surge in organic traffic – a testament to the efficacy of professional SEO intervention.

The Subtle Dangers of Copy-Paste Coding Practices

In the labyrinth of web development, the practice of copying and pasting code is ubiquitous, yet it harbors the risk of magnifying small errors into systemic failures that can have catastrophic consequences for search engine optimization (SEO).

Precision is paramount when configuring vital indexing settings, as an innocuous slip-up in a seemingly trivial line of code can cascade into a serious impediment, obstructing a website’s ability to communicate effectively with search engine algorithms.

This section delves into the importance of vigilance in coding practices and the necessary precautions to thwart inadvertent indexing blunders, ensuring the digital edifice stands robust against the inadvertent tempest of copy-paste errors.

How Small Errors Propagate in Large Systems

In web development, a minuscule coding error can propagate with surprising ferocity, disrupting systems at scale. It begins as a ripple (a misplaced character, an erroneous command) but swiftly amplifies, distorting the intended flow of data and communication with search engines.

These small discrepancies, once set into motion, respect neither scale nor complexity, infiltrating the larger machine of a website’s infrastructure. The impact is akin to a structural flaw in architecture: what seems trivial at the draft stage can lead to profound instability when the system endures the dynamic stresses of live interaction with search engine algorithms.

- Examine initial code for latent errors—those small yet potentially expansive flaws.

- Trace the spread of the error from the point of origin to system-wide SEO disruptions.

- Address coding practices to preemptively halt the potential error propagation.

Preventing Accidental Indexing Mishaps

The prevention of indexing missteps pivots on adherence to stringent coding standards and the implementation of robust quality checks. In the ever-evolving landscape of SEO, where a website’s prominence in search engine results depends on flawless indexation, an inadvertent ‘disallow’ in a robots.txt file or a misconfigured ‘noindex’ tag can spell disaster, making meticulous code reviews and audits an essential staple of SEO hygiene.

LinkGraph’s SEO services emphasize the practice of continuous vigilance and comprehensive testing, a strategy that mitigates the risks associated with copy-paste coding practices. By incorporating automated checks supplemented by expert manual reviews, LinkGraph ensures that each line of code contributes positively to a website’s overall SEO narrative, thus circumventing the errors that lead to accidental de-indexing:

| Error Type | Detection Method | Prevention Mechanism |
|---|---|---|
| Improper Use of ‘noindex’ | Free SEO audit and manual code review | Clear documentation and code standards adherence |
| Robots.txt Misconfigurations | Free backlink analysis and SearchAtlas SEO software insights | Regular audits and update protocols |
| Copy-Paste Code Errors | On-page SEO services and white-label link building scrutiny | Code validation tools and pre-deployment testing |
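
As one possible shape for the pre-deployment testing mentioned in the table, the sketch below checks a release candidate for the two errors discussed in the case studies. Everything here is an assumption made for illustration: the function name `predeploy_problems`, the sample robots.txt, and the sample pages. The regular expression is deliberately simple and only recognizes a robots meta tag whose `name` attribute comes before `content`.

```python
import re
from urllib.robotparser import RobotFileParser

# Simplified pattern: a robots meta tag (name before content) containing "noindex".
NOINDEX_META = re.compile(
    r"""<meta[^>]*name=["']robots["'][^>]*content=["'][^"']*noindex""",
    re.IGNORECASE,
)


def predeploy_problems(robots_txt, pages, agent="Googlebot"):
    """List problems for pages that are supposed to stay indexable.

    `pages` maps each must-rank URL to the HTML about to be deployed for it.
    """
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    problems = []
    for url, html in pages.items():
        if not rules.can_fetch(agent, url):
            problems.append(f"{url}: blocked by robots.txt")
        if NOINDEX_META.search(html):
            problems.append(f"{url}: carries a noindex meta tag")
    return problems


# Hypothetical release candidate with both mistakes copied in from staging.
robots_txt = "User-agent: *\nDisallow: /\n"
pages = {
    "https://example.com/": "<head><title>Home</title></head>",
    "https://example.com/blog/": '<head><meta name="robots" content="noindex"></head>',
}

for problem in predeploy_problems(robots_txt, pages):
    print(problem)
```

A check like this could run in a build pipeline and fail the release whenever the returned list is not empty, so the mistake is caught before search engines ever see it.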

SEO Recovery: Turning Catastrophe Into Opportunity

When catastrophic code strikes, it presents more than just an obstacle; it is a clarion call for resilience and strategic acumen in the realm of search engine optimization.

As businesses grapple with the aftermath of coding errors, analyzing the extent of damage becomes the first critical step on the path to recovery.

LinkGraph’s SEO services become instrumental in devising tailor-made, strategic plans aimed at code correction, ensuring that each fix is a precision strike against the root of the issue.

Monitoring the recovery process, post-fix implementation, offers a transparent view into the recovery trajectory, instilling confidence in revitalized SEO performance and turning a former catastrophe into a springboard for future opportunities.

Analyzing the Extent of Damage to SEO

In the wake of catastrophic coding errors, a comprehensive analysis is imperative to measure the degree of impact on SEO. LinkGraph’s SEO services meticulously assess the extent of the damage, providing clients with clarity on the repercussions of code-related oversights on search engine rankings and website traffic.

Understanding the multi-layered consequences of SEO setbacks fuels LinkGraph’s targeted approach to recovery efforts. The team’s analysis illuminates specific areas requiring attention and shapes the strategic blueprint to navigate out of the SEO quagmire:

- Mapping the SEO performance drop to pinpoint the coding error’s influence

- Examining the correlation between site visibility and user behavior post-error

- Identifying ranking fluctuation patterns to determine the recovery milestones
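
Mapping the performance drop can start with simple arithmetic: compare each site section’s traffic before and after the suspected error and see where the loss concentrates. The figures below are invented for illustration and are not client data; the section paths and the helper `percent_change` are likewise assumptions.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, as a percentage."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Hypothetical weekly organic sessions per site section (not real client data).
baseline = {"/blog/": 12000, "/products/": 8000, "/about/": 500}
after_error = {"/blog/": 3000, "/products/": 7800, "/about/": 510}

changes = {s: percent_change(baseline[s], after_error[s]) for s in baseline}

# Print the sections from the steepest decline to the mildest change.
for section, change in sorted(changes.items(), key=lambda item: item[1]):
    print(f"{section:<12}{change:+.1f}%")
```

In this made-up data the loss is concentrated in one section, which would point the audit toward templates or directives specific to that section rather than a site-wide cause.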

Crafting a Strategic Plan for Code Correction

Devising a strategic plan for code correction entails not merely the rectification of errors but also the implementation of a sustainable framework to prevent future infractions. With this focus, LinkGraph’s SEO services construct meticulous plans that marry technical SEO repair with strategic foresight, ensuring the comprehensive reinforcement of a website’s digital architecture.

LinkGraph’s SEO services lay the groundwork by identifying the root causes of the SEO setback and, from there, crafting a timeline and protocol for the necessary corrections. The strategy is grounded in thorough analysis and the application of industry-standard practices, setting the stage for durable SEO success:

- Isolate the specific code anomalies impacting SEO factors including indexing and site speed.

- Articulate a clear set of coding standards and best practices to guide the correction process.

- Deploy corrections through a controlled rollout, coupled with monitoring to gauge efficacy and prevent recurrences.

Monitoring SEO Recovery Post-Fix Implementation

The vigilant eye of LinkGraph’s SEO services remains keenly focused on monitoring the trajectory of SEO recovery following the implementation of coding corrections. By tracking real-time data and SEO performance indicators, the team can discern the effectiveness of their intervention, ensuring that the website’s digital health progresses steadily towards predefined targets.

As the corrective measures take root, LinkGraph leverages their cutting-edge SearchAtlas SEO software to observe changes in search engine rankings and user engagement. This relentless surveillance permits timely adjustments, fine-tuning the approach as needed, and solidifying the website’s ascent back to competitive prominence.

Prevention Is Better Than Cure: Code Audits for SEO

In the meticulous realm of SEO, ensuring the integrity of a website’s code is tantamount to safeguarding its digital presence.

As the architectural blueprint of online entities, code forms the bedrock upon which search engines build their understanding of site content and hierarchy.

Recognizing this critical relationship, professionals extend their vigilance beyond content and design to delve into the very code that weaves the web of online visibility.

With case studies highlighting the dire consequences of coding missteps, the impetus for preventive measures becomes clear.

Hence, addressing the urgent necessity for proactive defenses against catastrophic code, the industry turns its focus toward implementing regular code reviews and leveraging advanced tools and techniques for code auditing.

These precautionary efforts aim not just to detect and rectify errors but to instill a layer of imperviousness to coding flaws that could inadvertently throttle a website’s ascent in search engine results.

Implementing Regular Code Reviews

In the continuous pursuit of SEO integrity, the practice of implementing regular code reviews emerges as an essential guardrail. These systematic examinations by LinkGraph’s SEO experts serve to proactively identify and resolve latent errors that could, if left unchecked, cascade into catastrophic SEO consequences.

By integrating these comprehensive code reviews into the fabric of maintenance routines, LinkGraph solidifies the SEO foundation of a website. The process actively safeguards against the inadvertent introduction of harmful directives which can lead to a precipitous decline in search engine rankings.

Tools and Techniques for Code Auditing in SEO

In the domain of SEO, the advent of sophisticated tools for code auditing heralds a new era of precision and preemption. LinkGraph’s arsenal includes an array of diagnostic tools, such as their SearchAtlas SEO software, equipped to detect anomalies that could potentially hinder SEO performance.

The delicacy of SEO calls for advanced techniques, and LinkGraph champions this with their methodical use of automated scans combined with expert manual code review. Their comprehensive approach ensures that every aspect of the source code is aligned with search engine best practices, safeguarding against detrimental SEO impacts.

- Employ automated scanning tools to conduct preliminary code audits for common errors.

- Integrate manual code reviews by SEO experts to provide deeper insights into complex code structures.

- Utilize SearchAtlas SEO software for advanced diagnostic reporting to facilitate proactive correction and optimization.
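
The first step in the list, an automated scan for common errors, might look something like the sketch below. It is not a description of SearchAtlas or any other product: the class `PageAudit`, the function `audit`, and the sample pages are hypothetical, and the checks cover only three issues touched on in this article (a missing title, images without alt text, and a noindex directive).

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Records a few common on-page issues while parsing one document."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.images_missing_alt = 0
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Simplification: purely decorative images may use empty alt text.
            self.images_missing_alt += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit(html):
    """Return a list of issue descriptions for one HTML document."""
    page = PageAudit()
    page.feed(html)
    issues = []
    if not page.title.strip():
        issues.append("missing or empty <title>")
    if page.images_missing_alt:
        issues.append(f"{page.images_missing_alt} image(s) without alt text")
    if page.noindex:
        issues.append("robots meta tag contains noindex")
    return issues


# Hypothetical two-page site used only to exercise the scan.
site = {
    "index.html": "<html><head><title>Home</title></head>"
                  "<body><img src='a.png' alt='Logo'></body></html>",
    "blog.html": "<html><head><meta name='robots' content='noindex'></head>"
                 "<body><img src='b.png'></body></html>",
}
report = {name: audit(html) for name, html in site.items()}
for name, issues in report.items():
    print(name, issues)
```

A scan of this kind only flags candidates; whether a flagged noindex is intentional still calls for the manual review described in the second step.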

Training Developers With an SEO Perspective

The intersection of development and search engine optimization presents a unique set of challenges and opportunities.

By giving developers a foundational understanding of SEO, businesses equip their technical teams to preemptively address potential SEO pitfalls within their codebase.

Ensuring that SEO concepts permeate the educational fabric of a developer’s workflow is critical, as it fosters an environment where coding practices inherently support and enhance SEO initiatives.

As we delve into the significance of incorporating SEO best practices into the development process, we acknowledge the profound impact that well-informed coding decisions can have on a website’s search engine rankings and overall digital success.

Essential SEO Concepts for Developer Education

Incorporating SEO awareness in the development process is crucial, with LinkGraph’s SEO services championing this educational shift. Their training programs foster a deep appreciation for the SEO implications of coding decisions, thereby equipping developers with the knowledge to build SEO-friendly architectures from the ground up.

An astute developer, cognizant of SEO fundamentals, is an asset in the tech-driven market, positioning client websites for search engine excellence. LinkGraph ensures that each string of code is not only functional but also optimized to meet the sophisticated requirements of modern search algorithms.

Integrating SEO Best Practices Into Development Workflow

LinkGraph’s commitment to elevating SEO content begins with the cultivation of a developer’s workflow that integrates SEO best practices by design. Development teams trained to approach every project with an SEO lens ensure that the final product is optimized for search engines from the first line of code.

| Development Phase | SEO Integration Activity | Expected Outcome |
|---|---|---|
| Planning | Teams identify SEO goals and requirements before coding begins. | A cohesive roadmap that aligns development with SEO objectives. |
| Implementation | Code is crafted in compliance with SEO standards, ensuring the use of structured data and meta tags. | A robust codebase that inherently supports SEO performance and user experience. |

This transformative practice mandates a paradigm shift: developers are not just code creators but vital contributors to a site’s SEO success. LinkGraph’s client projects thus benefit from a harmonized effort where SEO and development are not sequenced but synchronized to produce optimized web content.
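
As an illustration of the structured data mentioned in the Implementation row, the sketch below builds a JSON-LD block using the schema.org `Article` type. The function name `article_json_ld` and all field values are placeholders chosen for the example, and which properties a given search feature expects should be confirmed against the search engine’s own documentation.

```python
import json


def article_json_ld(headline, author, date_published, url):
    """Build a <script> tag carrying schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for a hypothetical blog post.
tag = article_json_ld(
    headline="How One Line of Code Can Affect SEO",
    author="Jane Doe",
    date_published="2024-01-15",
    url="https://example.com/blog/one-line-of-code",
)
print(tag)
```

Generating the markup from the same data that renders the page, rather than pasting it in by hand, is one way to keep it from drifting out of date or being copied onto the wrong template.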

Evaluating the Long-Term SEO Implications of Code Changes

In the complex ecosystem of digital marketing, code changes function as double-edged swords, possessing the capacity to enhance functionality while simultaneously threatening SEO stability.

Analyzing past case studies reveals the cautionary tales that emerge from seemingly benign code updates, drawing attention to the delicate equilibrium between code efficiency and SEO objectives.

These narratives serve as potent reminders that the quest for sleeker code should never compromise a website’s visibility or undermine its performance in search engine results.

As such, these case studies beckon developers and SEO professionals to prioritize long-term strategy over short-term expediency in their coding decisions.

Case Studies as a Cautionary Tale for Code Updates

Scrutinizing past case studies involving catastrophic code reveals the pitfalls of seemingly minor code alterations that radically undermine SEO efforts. These cautionary narratives exemplify the inherent risk involved in altering code without fully considering the SEO ramifications, emphasizing the undeniable impact a singular line of code can have on a website’s search engine ranking trajectory.

An in-depth review of these incidents underscores the necessity for developers and SEO experts to collaborate closely, ensuring code updates enhance site functionality while maintaining, if not bolstering, the site’s SEO strength. The case studies stand as stark reminders of the need to balance innovation with vigilance, lest a single line of amended code precipitate a severe setback in search engine visibility.

Balancing Code Efficiency and SEO Objectives

In tackling the nexus between code efficiency and SEO objectives, businesses must carefully navigate the potential rift between streamlined functionality and search engine visibility. It is imperative that every code enhancement, whether aimed at reducing load times or refining user interactions, is weighed for its potential influence on a website’s search engine rankings.

LinkGraph’s SEO services excel at striking this delicate balance, ensuring that all code refinements serve to bolster, not hinder, a client’s digital footprint. They not only optimize website performance but also align every technical upgrade with SEO best practices, guaranteeing that the site remains both agile and discoverable in the competitive digital arena.

Conclusion

In conclusion, the “Catastrophic Code” case studies underscore how vulnerable SEO is to coding errors.

Even a single flawed line of code, such as a misplaced ‘noindex’ tag or a misconfigured directive in the robots.txt file, can drastically disrupt a website’s search engine ranking and visibility.

These incidents highlight the crucial need for meticulous code audits, ongoing monitoring, and correction strategies implemented by SEO professionals.

LinkGraph’s SEO services emphasize the importance of preventive measures and education, showing that regular code reviews and integrating SEO best practices into the development process are vital for maintaining digital health.

Their approach demonstrates that while coding errors can have severe SEO repercussions, careful management and strategic recovery planning can turn potential catastrophes into opportunities for optimization and improvement.