Website Absence in Google Search

Website Absence in Google Search: Troubleshooting Guide

When a business website languishes in obscurity, failing to surface in Google’s search results, the cause is often a complex web of SEO oversights.

Owners might find themselves confronting a bewildering array of issues, from indexing woes to unseen penalties, all conspiring against their digital presence.

However, with a structured approach to evaluating and enhancing site attributes, ranging from content optimization to robust backlink profiles, visibility can improve dramatically.

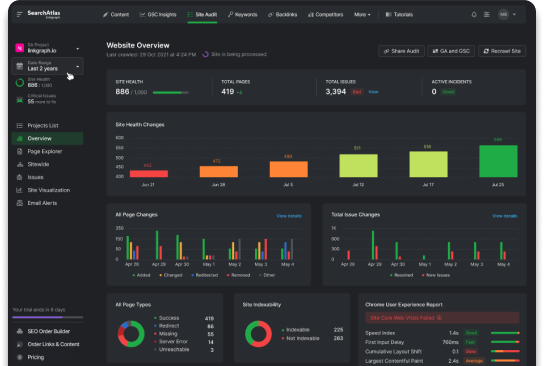

Crucial to this process is understanding the nuances that tip the scales in search engine optimization, which is where LinkGraph’s comprehensive SEO services and SearchAtlas SEO software become invaluable assets.

Keep reading to uncover how precise adjustments and strategic actions can elevate your website from the shadows of the internet into the search spotlight.

Key Takeaways

- Correct visibility settings and prompt attention to Google Search Console alerts are vital to a website’s discoverability in search.

- Regular audits to fix server errors and broken links, along with the removal of duplicate content, are essential for SEO success.

- Building site authority through quality backlinks is crucial for higher search rankings.

- Mobile-first design and close monitoring of algorithm changes are key to maintaining web presence and relevance.

- Canonical tags, rewrites, and redirects help search engines clearly understand a site’s content hierarchy.

Understanding Why Your Website Is Not Showing Up on Google

When a business website fails to appear in Google search results, the first step is a discovery process that may uncover various underlying issues.

Ensuring that a website is accessible and visible to search engines is a fundamental step in leveraging the vast potential of digital marketing.

To troubleshoot this critical concern, one begins by verifying the website’s visibility settings, a precaution often overlooked by website owners.

Simultaneously, engaging with Google Search Console becomes essential; this platform provides alerts and insights that guide users through technical SEO challenges affecting search engine visibility.

A strategic approach to these aspects can significantly enhance a website’s capacity to be indexed, thereby improving its presence in Google’s search landscape.

Checking Your Website’s Visibility Settings

Upon confronting the predicament of a missing business website in Google’s search results, one must scrutinize the site’s visibility settings. These settings act as gatekeepers, determining whether search engine crawlers may fetch the site’s pages and whether those pages may be added to Google’s index.

A meticulous assessment involves two checks. The robots.txt file controls crawling: a Disallow rule stops Googlebot from fetching a URL at all. A ‘noindex’ directive in a page’s meta tags controls indexing: it tells Google not to add that page to its index. The two interact, because Googlebot cannot see a ‘noindex’ tag on a page that robots.txt blocks, and a blocked URL can still be indexed without its content if other sites link to it. Rectifying these settings may resolve search engine visibility issues, restoring the website’s rightful place in search results.

Reviewing the Google Search Console for Alerts

Diagnosing a website’s performance in Google’s search results often requires a close look at the alerts in Google Search Console. When a website does not appear in Google search results, this tool acts as a diagnostician, shedding light on urgent issues such as crawl errors, security problems, and directives that exclude content from Google’s index.

Once alerts are identified in Google Search Console, action must be taken promptly. Notifications regarding manual actions, indexing problems, and violations of Google’s quality guidelines serve as a clarion call to website owners, prompting them to implement corrective measures and regain their standing in the digital marketplace:

| Challenge | Tool | Action Required |
|---|---|---|
| Crawl Errors | Google Search Console | Review and Resolve URL Issues |
| Security Issues | Google Search Console | Identify and Mitigate Threats |
| Manual Actions | Google Search Console | Rectify Compliance Breaches |
| Indexing Issues | Google Search Console | Ensure Content Meets Indexing Requirements |

Assessing if Your Site Is Indexed by Google

Determining whether a website is part of Google’s vast index is paramount for ensuring its search engine visibility.

Entrepreneurs and digital marketing specialists often rely on concrete methods to verify indexing, including the ‘site:’ search query and thorough inspections within Google Search Console.

These tactics serve as a foundation for a comprehensive SEO strategy, enabling the identification and remediation of indexing anomalies that could be hindering a website’s performance in search rankings.

Using the ‘Site:’ Search Query

Embarking on an investigation into whether a website has been indexed, industry professionals may use Google’s ‘site:’ search operator. It returns pages from the specified domain that Google has indexed, offering immediate insight into the site’s visibility in search results. The list is a sample rather than a complete inventory, and the result count is only an estimate, so the operator works best as a quick spot check.

By entering ‘site:’ followed by the domain name (for example, site:example.com) into Google’s search box, stakeholders can swiftly gauge the extent of their digital footprint. No results at all strongly suggests the site is not indexed; the pages that do appear confirm indexing and guide further analysis and optimization efforts.
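When several domains or subfolders need checking, the same query can be assembled programmatically. The minimal sketch below, using only Python’s standard library, builds the search URL to open in a browser; `site_query_url` is a hypothetical helper name, and it deliberately stops at building the URL rather than fetching or parsing Google’s results.

```python
from urllib.parse import urlencode


def site_query_url(domain: str, path: str = "") -> str:
    """Build a Google search URL for a 'site:' query (hypothetical helper).

    Only the URL is built; nothing is fetched or parsed.
    """
    query = f"site:{domain}{path}"  # e.g. site:example.com or site:example.com/blog
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    for domain, path in [("example.com", ""), ("example.com", "/blog")]:
        print(site_query_url(domain, path))
```

Adding a path narrows the spot check to one section of the site, which helps isolate whether a single directory is missing from the index.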

Inspecting the Index Status in Google Search Console

To delve deeper into the indexing status of a website, one must not overlook the capabilities of Google Search Console. This service provides a detailed report on the individual pages that have been indexed and highlights any obstacles that may impede a site’s visibility in search engine results.

The process of reviewing index coverage is straightforward and reveals critical insights:

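- Open Google Search Console and go to the ‘Pages’ report under Indexing (the successor to the older ‘Coverage’ report).

- Compare the number of indexed pages with the number of pages that are not indexed.

- For pages that are not indexed, review the reason Google lists, such as a ‘noindex’ tag, a robots.txt block, or a server error, and use the URL Inspection tool to check individual pages.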

Armed with this information, one has a clearer path to enhancing a site’s presence on Google, ensuring that their target audience can easily discover their content.

Ensuring Your Website Isn’t Blocking Search Engines

Ensuring a website’s visibility on Google’s search engine results page is contingent on dispensing with any barriers that might be obstructing search engines from indexing site content.

Essential to this process are keen evaluations for inadvertent use of ‘noindex’ tags and a thorough review of the robots.txt file.

These aspects constitute the bedrock of search engine accessibility, and as such, demand a meticulous approach to avert any impediments to a website’s discoverability.

Checking for Noindex Tags in Your Site’s Code

Scouring a site’s HTML code is a critical step for digital marketing experts aiming to unmask potential barriers to search engine indexing. Particular attention is paid to ‘noindex’ directives in robots meta tags, which tell search engines to leave a page out of their index and can keep essential pages from surfacing in search results.

Proactive scrutiny of a site’s HTML code for any ‘noindex’ directives ensures that content is primed for inclusion in Google’s index. Removing these tags from pages that should rank is imperative, as doing so clears the way for better placement in search results and easier discovery by users.
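A minimal sketch of that scrutiny, assuming the page’s HTML has already been downloaded: it uses Python’s standard `html.parser` to collect directives from `<meta name="robots">` and `<meta name="googlebot">` tags and reports whether `noindex` (or `none`, which Google treats as `noindex, nofollow`) is present. The class and function names are illustrative. It does not inspect the `X-Robots-Tag` HTTP header, which can carry the same directive and needs a separate check.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # HTMLParser lowercases tag and attribute names
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.append(part.strip().lower())


def has_noindex(html: str) -> bool:
    """True if a robots meta tag contains 'noindex' or 'none' (= noindex, nofollow)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page))  # True
```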

Reviewing Your robots.txt File

Key to unlocking a website’s potential for high search engine rankings is a careful examination of the robots.txt file. This plain text file tells search engine crawlers which parts of a website they may or may not crawl.

Professionals navigate to the root of the domain (for example, https://www.example.com/robots.txt) to scrutinize the file: this critical step ensures that no directive inadvertently disallows vital pages from being crawled. It is here that site owners balance crawler access against the areas they would rather crawlers skip, which shapes the site’s visibility:

| Visibility Factor | Location | Impact on Indexing |
|---|---|---|
| robots.txt Directives | Website Root Directory | Guides Search Engine Crawlers |
| Noindex Meta Tags | Individual HTML Pages | Potentially Blocks Indexing |
| sitemap.xml Reference | robots.txt File | Assists in Efficient Crawling |
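Python’s standard `urllib.robotparser` module can evaluate a robots.txt file against specific URLs, a quick way to confirm that important pages are not disallowed. In the sketch below, the robots.txt content and example.com URLs are placeholders, and `check_robots` is an illustrative name. One caveat: the module implements basic Allow/Disallow prefix matching and applies the first matching rule in a group, whereas Google applies the most specific match and supports `*` and `$` wildcards, so treat its answers as a first pass and confirm edge cases with Search Console’s robots.txt report.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt content; replace with the live file from your site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""


def check_robots(robots_txt: str, urls: list[str], agent: str = "Googlebot"):
    """Return ({url: allowed?}, sitemap URLs declared in the file or None)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parses in-memory lines, no network access
    allowed = {url: parser.can_fetch(agent, url) for url in urls}
    return allowed, parser.site_maps()


if __name__ == "__main__":
    urls = [
        "https://www.example.com/blog/post",
        "https://www.example.com/private/page",
        "https://www.example.com/admin/login",
    ]
    allowed, sitemaps = check_robots(ROBOTS_TXT, urls)
    for url, ok in allowed.items():
        print("allowed" if ok else "BLOCKED", url)
    print("sitemaps:", sitemaps)
```

Note the /admin/ result: a crawler obeys only the most specific user-agent group that matches it, so Googlebot here follows its own group and ignores the rules under `User-agent: *`. Repeating shared rules in each named group avoids that surprise.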

Diagnosing and Fixing Crawling Issues

When a website remains elusive to Google’s indexing process, the emphasis shifts to enhancing the infrastructure that facilitates search engine crawling.

Addressing such concerns necessitates a clear understanding of the website’s sitemap submission and ensuring the site is free of server errors and broken links.

As businesses strive to improve their web presence, refining the groundwork that supports search engine crawlers becomes vital, setting the stage for topics like ‘Enhancing Crawlability With a Sitemap’ and ‘Resolving Server Errors and Broken Links’.

Enhancing Crawlability With a Sitemap

An effectively structured sitemap acts as a navigational aid for search engine crawlers, enabling more efficient discovery and indexing of website pages. By listing a site’s URLs, optionally with metadata such as each page’s last modification date, a sitemap simplifies Googlebot’s task, streamlining the crawling process and helping new or updated pages reach search results sooner.

A comprehensive sitemap presents search engines with a clear blueprint of the site’s content, which can make pages considerably easier to find, particularly on large sites or for pages with few internal links. Submitting an up-to-date sitemap through Google Search Console’s Sitemaps report is a worthwhile step for any website owner seeking better search engine visibility.
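A sitemap is an XML file that follows the sitemaps.org protocol. The sketch below builds one with Python’s built-in `xml.etree.ElementTree`, assuming you already have a list of canonical page URLs and, optionally, their last-modified dates in YYYY-MM-DD form; `build_sitemap` is a hypothetical helper name. ElementTree escapes characters such as `&` automatically, as the protocol requires, and the 50,000-URL cap reflects the protocol’s per-file limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(pages):
    """pages: iterable of (absolute_url, lastmod_or_None) tuples."""
    pages = list(pages)
    if len(pages) > MAX_URLS:
        raise ValueError("split into multiple sitemaps plus a sitemap index file")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc  # characters like & are escaped on output
        if lastmod:
            ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/products?page=2&sort=new", None),
    ]))
```

Save the output as sitemap.xml at the site root, then submit it in Search Console’s Sitemaps report or reference it with a `Sitemap:` line in robots.txt.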

Resolving Server Errors and Broken Links

Server errors and broken links can significantly impede a site’s ability to rank in Google’s search results, in severe cases keeping pages out of the index altogether. Addressing these errors is a critical aspect of search engine optimization, as they affect not only user experience but also how reliably search engines can crawl the site.

By performing regular audits with tools like LinkGraph’s SEO services, website owners can detect and repair broken links, while diligent server maintenance ensures stability and accessibility. Such remedies not only recover lost visibility but also reinforce the foundation for a stronger web presence:

| Issue Type | Consequence | Action Required |
|---|---|---|
| Broken Links | Deteriorates User Experience | Utilize LinkGraph’s Free Backlink Analysis |
| Server Errors | Disrupts Search Engine Indexing | Conduct Regular Server Audits |

These technical difficulties represent barriers that, when left unaddressed, can undermine a site’s domain authority and overall search engine visibility. Effective management and prompt resolution create a resilient, SEO-friendly digital ecosystem.
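A basic broken-link and server-error sweep can be sketched with Python’s standard library: request each URL, record the HTTP status, and group the results. The URL list is assumed to come from your own crawl or sitemap, and the function names are illustrative. Because `urlopen` follows redirects automatically, the status reported is that of the final destination; some servers reject `HEAD` requests, in which case a `GET` fallback would be needed.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url, or None if no response was received."""
    req = Request(url, method="HEAD", headers={"User-Agent": "link-audit-sketch"})
    try:
        with urlopen(req, timeout=timeout) as resp:  # redirects are followed
            return resp.status
    except HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return err.code
    except OSError:  # DNS failure, refused connection, timeout (URLError is an OSError)
        return None


def classify(status):
    """Map a status code (or None) to an audit category."""
    if status is None:
        return "unreachable"
    if 500 <= status <= 599:
        return "server error"
    if status in (404, 410):
        return "broken link"
    if 400 <= status <= 499:
        return "other client error"
    if 300 <= status <= 399:
        return "redirect"
    return "ok"


def audit(urls, fetch=fetch_status):
    """Group URLs by category; `fetch` can be swapped out for testing or a fuller crawler."""
    report = {}
    for url in urls:
        report.setdefault(classify(fetch(url)), []).append(url)
    return report
```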

Addressing Potential Google Penalties on Your Site

At times, despite diligent efforts to optimize a website, its performance in Google’s rankings can abruptly decline.

Such a drop can signal a penalty: either a manual action applied by Google’s reviewers or an algorithmic demotion, typically triggered by violations of Google’s spam policies (part of Google Search Essentials, formerly the Webmaster Guidelines).

As businesses navigate the complexities of digital visibility, identifying and resolving manual actions listed in Google Search Console becomes a mission-critical step to reclaiming search rankings.

Moreover, the urgency to purge any black-hat SEO tactics from a website’s strategy is paramount in restoring good standing with search algorithms, thereby reinstating the site’s reputation and visibility in search results.

Identifying Manual Actions in Google Search Console

Checking the Manual actions report in Google Search Console is a pivotal step for website owners intent on resolving infractions that lead to ranking penalties. The report makes notifications about non-compliance with Google’s quality guidelines transparent, allowing for swift remedial action.

Insights gleaned from Google Search Console allow webmasters to pinpoint the precise nature of any manual action affecting their site. Once the issues are fixed, a reconsideration request can be submitted from the same report, setting the stage for the reinstatement of the website’s search visibility and credibility.

Cleaning Up Black-Hat SEO Tactics

Eradicating black-hat SEO tactics is essential to restoring a website’s integrity in the eyes of search engines. LinkGraph’s SEO services specialize in identifying and eliminating these practices, ensuring that a site adheres to the ethical standards promoted by Google.

The discovery and correction of manipulative strategies not only reduce the risk of penalties but also pave the way for sustainable growth in organic traffic:

- Forensic analysis uncovers hidden infractions within a website’s SEO practices.

- Meticulous cleansing of black-hat techniques re-aligns the site with Google’s quality guidelines.

- Proactive vigilance safeguards against future lapses in SEO strategy.

Renouncing such deceitful tactics, with assistance from LinkGraph’s white label SEO services, translates into enhanced credibility and a solid foundation for climbing the search engine results pages.

Improving Site Content to Meet Search Intent

In the intricate dynamics of search engine optimization, content refinement stands as a critical component for aligning a website with the expectations set by user search intent.

Recognizing this, SEO specialists and content creators focus on the meticulous analysis of top-ranking pages and on the updates needed for content to resonate with user queries.

This endeavor not only exemplifies a commitment to understanding search behavior but also underscores the significance of precision and relevance in keyword optimization, ensuring that web offerings closely mirror the searcher’s quest for information.

Analyzing Top-Ranking Pages for Keyword Optimization

LinkGraph’s SEO experts recognize that to elevate a website’s ranking, it is essential to dissect the content strategies employed by top-performing pages within search results. Thorough analysis of these pages uncovers patterns in keyword usage, topic relevancy, and user engagement that are instrumental for crafting compelling content strategies that align with search intent.

By understanding the nuances that govern the performance of these pages, SEO professionals can reverse-engineer success by infusing their content with a similar blend of critical keywords and topics. This strategic emulation of best practices drives content optimization, ensuring that web assets are positioned favorably amidst the competitive landscape of search engine results:

- Decompose the content elements that contribute to high-ranking pages’ success.

- Extract and implement strategic keyword placement demonstrated by market leaders.

- Optimize content relevance to mirror the user’s search intent and query context.
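To make the first two steps concrete, here is a deliberately naive sketch that finds terms shared across the text of several top-ranking pages. It assumes the page text has already been extracted (no fetching or HTML stripping is shown), uses a tiny hand-picked stopword list, and counts raw words; the function names are illustrative, and the sketch shows the comparison, not how Google judges relevance.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"the", "and", "for", "with", "your", "this", "that", "are", "you", "not", "why", "how"}


def term_counts(text: str) -> Counter:
    """Lowercase, split into word tokens, and drop stopwords and very short tokens."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return Counter(w for w in words if len(w) > 2 and w not in STOPWORDS)


def shared_terms(pages: list[str], min_pages: int = 2, top: int = 10):
    """Terms used on at least `min_pages` pages, ranked by total occurrences."""
    doc_freq, totals = Counter(), Counter()
    for text in pages:
        counts = term_counts(text)
        doc_freq.update(counts.keys())  # +1 per page containing the term
        totals.update(counts)           # +n for n occurrences
    common = [(term, totals[term]) for term in totals if doc_freq[term] >= min_pages]
    common.sort(key=lambda item: (-item[1], item[0]))
    return common[:top]


if __name__ == "__main__":
    pages = [
        "Google indexing guide: fix indexing errors fast",
        "Why is my site not indexing? An indexing checklist",
        "Sitemap basics for beginners",
    ]
    print(shared_terms(pages))
```

Requiring a term to appear on several pages filters out one-off vocabulary and surfaces the topics competitors consistently cover.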

Updating Content to Align With User Queries

Fortifying a website’s content requires an in-depth understanding of the specific questions and needs behind a user’s search query. As part of the SEO services provided by LinkGraph, experts meticulously revise and enrich content, ensuring it speaks directly to the motivations behind each search.

This nuanced approach typically involves enhancing the precision and depth of information to satisfy users who land on the site, expecting resolution to their inquiries:

- Analyzing user behavior to comprehend the context of search inquiries.

- Refining content to address the nuances of queries with clear, accurate answers.

- Monitoring user engagement metrics to continually adapt content and maintain alignment with search intent.

With the help of LinkGraph’s comprehensive SEO tools and strategies, businesses can translate the insights gained from user search behavior into actionable updates. This leads to not only high-quality content but also an elevated user experience that aligns seamlessly with the searchers’ needs, driving increased relevance and trust with Google’s algorithms.

Boosting Site Authority Through Quality Backlinks

In the pursuit of enhancing a business website’s discoverability within Google search results, establishing site authority through the acquisition of quality backlinks emerges as a non-negotiable strategy.

Links from reputable sites act as endorsements in Google’s eyes, signaling content quality and relevance, and they are an influential factor in search rankings.

To achieve this, website owners and SEO professionals embark on backlink audits, analyzing the present link profile’s strength and sourcing opportunities for acquiring authoritative links.

These deliberate moves not only solidify the foundations of a website’s online credibility but also pave the way for increased visibility and organic traffic from the search engine’s results pages.

Conducting a Backlink Audit

Embarking on a backlink audit is a discerning step to ascertain the integrity and efficacy of a website’s link profile. LinkGraph’s SEO services offer a free backlink analysis, delineating the quality, relevance, and diversification of backlinks that are pivotal in establishing site authority.

This rigorous process involves evaluating existing backlinks to weed out any harmful or low-quality links that may be suppressing a website’s domain authority. Such analysis reinforces a robust SEO foundation, fostering improved visibility within Google’s search rankings.
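When an audit turns up spammy links that cannot be removed at the source, Google’s disavow links tool accepts a plain-text file with one entry per line: a full URL, or `domain:` followed by a domain name, with `#` marking comments. The sketch below only formats such a file; `build_disavow` is a hypothetical helper, deciding which links deserve disavowal remains the audit’s job, and Google recommends using the tool sparingly, mainly when links have caused or are likely to cause a manual action.

```python
def build_disavow(domains=(), urls=(), note=None):
    """Return disavow-file text: optional comment, then domain: lines, then single URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")  # comment lines start with '#'
    # Normalize and de-duplicate domains so each appears once.
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted(set(urls))  # individual URLs, one per line
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_disavow(
        domains=["spam.example"],
        urls=["http://bad.example/page"],
        note="flagged in backlink audit",
    ))
```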

Strategies for Gaining Authoritative Links

LinkGraph’s expertise in white label link building provides businesses with a clear roadmap for acquiring authoritative backlinks. This involves identifying influential domains related to the company’s niche and engaging expert guest posting services to secure valuable links; these collaborative efforts not only enhance credibility but also drive referral traffic to the website.

| Link Acquisition Method | Description | Benefits |
|---|---|---|
| Guest Posting | Contribute high-quality content to reputable sites in exchange for backlinks. | Boosts domain authority and exposes your brand to wider audiences. |
| Influencer Collaboration | Partner with industry leaders for shared content initiatives. | Leads to high-quality, natural backlinks and heightened brand visibility. |

Another successful approach for building a healthy backlink profile involves leveraging SearchAtlas SEO software, which facilitates free backlink analysis and aids in creating effective personal branding strategies. By utilizing advanced tools to pinpoint backlink opportunities, businesses can systematically construct a powerful network of backlinks, augmenting their site’s authority and its standing in the hierarchy of search engine results.

Eliminating Duplicate Content to Avoid Confusion

A prevalent issue contributing to a website’s invisibility in Google search results is the existence of duplicate content.

This problem not only confuses search engines but can also lead to diminished search rankings due to the perceived splitting of link equity.

As such, webmasters must prioritize the elimination of confusing redundancies by employing strategies such as utilizing canonical tags effectively and rewriting or redirecting duplicate pages.

These solutions are designed to clarify the website’s content hierarchy for Google’s crawlers, thus optimizing the site’s potential to rank prominently in search engine results.

Utilizing Canonical Tags Effectively

Implementing canonical tags is a strategic way to signal to search engines which version of similar or identical content is the master copy. By specifying a preferred URL through a `rel="canonical"` link element in the page’s head, webmasters streamline indexing and avert the pitfalls associated with duplicate content.

Applied with precision, canonical tags encourage search engines to consolidate link signals for comparable pages onto the designated URL, improving its chances of ranking well. Google treats the tag as a strong hint rather than a binding directive, so consistent signals (internal links, sitemap entries, and redirects all pointing to the same URL) reinforce it. This practice complements a robust SEO campaign driven by tools like LinkGraph’s SearchAtlas SEO software.
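A quick way to audit canonical tags is to read them back out of each page’s HTML. This sketch, using Python’s standard library with illustrative names, finds `link` elements whose `rel` includes `canonical`, resolves relative `href` values against the page URL, and returns None when the tag is missing or when different URLs are declared, since conflicting declarations give search engines no clear signal.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects href values from <link> elements whose rel includes 'canonical'."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split() and attr.get("href"):
            self.hrefs.append(attr["href"])


def find_canonical(html: str, page_url: str):
    """Return the absolute canonical URL, or None if missing or conflicting."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    unique = set(parser.hrefs)
    if len(unique) != 1:
        return None  # zero declarations, or several different URLs
    return urljoin(page_url, unique.pop())  # resolve relative hrefs


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="/shoes/red"></head>'
    print(find_canonical(html, "https://www.example.com/shoes/red?utm_source=news"))
```

Comparing the result with each page’s own URL quickly shows which pages point elsewhere and which declare no canonical at all.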

Rewriting or Redirecting Duplicate Pages

Confronting the repercussions of duplicate content requires decisive action; rewriting or redirecting duplicate pages is crucial. Rewriting involves the careful reformulation of content to ensure uniqueness, while redirection, especially using 301 redirects, guides search engines and users to a single, authoritative page.

When selecting between rewriting and redirecting, webmasters consider the specific circumstances and strategic goals of their website:

- Rewriting is often favored when each piece of content can stand alone with a unique perspective or added value.

- Redirecting is the preferred choice if the content’s purpose is better served by consolidating user traffic to a principal page.

In implementing these tactics, the clarity of content hierarchy is optimized, effectively disentangling any indexing confusion and bolstering the site’s SEO performance.
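Redirects themselves are configured on the web server or CMS, so no server configuration is shown here. What can be checked in code is the redirect map: this sketch follows a `{old_path: new_path}` mapping (a hypothetical export of a site’s redirects), counts how many hops each old URL takes, rejects loops, and flattens chains so every duplicate points directly at its final page. The function names are illustrative, and `max_hops` is an arbitrary safety limit.

```python
def resolve_redirects(url: str, redirects: dict, max_hops: int = 10):
    """Follow a {source: target} map; return (final_url, hop_count). Raise on loops."""
    path = [url]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            raise ValueError("redirect loop: " + " -> ".join(path + [nxt]))
        path.append(nxt)
        if len(path) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops starting at {url}")
    return path[-1], len(path) - 1


def flatten(redirects: dict) -> dict:
    """Point every source directly at its final destination (no chains)."""
    return {src: resolve_redirects(src, redirects)[0] for src in redirects}


if __name__ == "__main__":
    redirects = {"/old-guide": "/guide-v2", "/guide-v2": "/guide", "/guide-copy": "/guide"}
    print(resolve_redirects("/old-guide", redirects))
    print(flatten(redirects))
```

Flattening matters because each extra hop adds latency for users and crawlers alike; a single 301 from every duplicate to the principal page is the cleanest outcome.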

Leveraging the Benefits of a Mobile-Friendly Website

In today’s technologically driven marketplace, a mobile-friendly website is no longer a convenience but a necessity.

With Google placing substantial emphasis on mobile usability, and now primarily using the mobile version of a page for indexing and ranking (mobile-first indexing), adapting to mobile-first design principles is essential for maintaining visibility and relevance.

Businesses and their digital strategists must employ Google’s array of tools to test page responsiveness, ensuring their website’s compatibility with various devices.

The focus on a mobile-friendly interface underscores the evolution of user behavior, as mobile devices have become the primary access point for internet browsing and information discovery.

Testing Page Responsiveness With Google’s Tools

Addressing mobile responsiveness is critical, and Google’s tools have long offered a streamlined way to evaluate it. Google’s Mobile-Friendly Test, which analyzed a page URL to judge its ease of use on mobile devices, was retired in December 2023; Lighthouse (built into Chrome DevTools) and PageSpeed Insights now provide comparable mobile audits that help when optimizing for higher search placement.

Experts at LinkGraph harness these insights to refine a website’s interface, ensuring content is responsive and accessible regardless of device type. This comprehensive approach to mobile optimization is a cornerstone of their SEO services, designed to secure a website’s visibility and cater to the modern user’s browsing habits.
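One of the first things such audits look at is the viewport meta tag; without `width=device-width`, mobile browsers typically lay the page out at a desktop width and shrink it to fit. The sketch below reads that tag from a page’s HTML with Python’s `html.parser`; the names are illustrative. Treat it as a smoke test only: a correct viewport tag is necessary but not sufficient for a mobile-friendly page, and tools such as Lighthouse evaluate much more.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and self.content is None and attr.get("name", "").lower() == "viewport":
            self.content = attr.get("content", "")


def viewport_settings(html: str):
    """Parse 'width=device-width, initial-scale=1' into a dict, or None if no tag exists."""
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.content is None:
        return None
    settings = {}
    for part in parser.content.split(","):
        key, _, value = part.partition("=")
        if key.strip():
            settings[key.strip().lower()] = value.strip()
    return settings


def has_responsive_viewport(html: str) -> bool:
    """Smoke test: the viewport tag sets width=device-width."""
    settings = viewport_settings(html)
    return bool(settings) and settings.get("width") == "device-width"
```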

Implementing Mobile-First Design Principles

Embracing mobile-first design principles necessitates a shift in approach where the primary design and development phase focuses on optimal user experience for smaller screens. This strategy ensures that essential features and content are seamlessly accessible on smartphones and tablets, forming the foundational layer upon which the desktop experience is enhanced.

LinkGraph’s seasoned professionals employ this methodology to deliver robust SEO content strategies, where site architecture and design begin with the mobile experience in mind. The following steps outline the mobile-first adoption process employed by experts:

- Commence design with mobile constraints to prioritize content and functionality critical to mobile users.

- Incrementally scale up the design to create enriched experiences for larger screens without compromising mobile usability.

This progressive enhancement ensures that as a website scales, it retains the clarity, speed, and usability that mobile users depend on, supporting search engine visibility and user engagement across all devices.

Stay Updated With Google Algorithm Changes

Remaining attuned to the ever-evolving landscape of Google’s algorithm is imperative for the longevity and visibility of any business website.

Search engine algorithms are in constant flux, and these changes can significantly impact a site’s ranking and visibility in search results.

As industry professionals acknowledge this dynamic, it becomes essential to not only follow SEO news and Google updates closely but to also refine and recalibrate website strategies in alignment with the latest search engine developments.

This proactive stance empowers website owners to anticipate shifts in digital marketing, ensuring their websites maintain optimal performance amidst the search engine’s algorithmic tide.

Following SEO News and Google Updates

Staying abreast of developments in SEO and Google’s frequent algorithm updates is a proactive measure to safeguard a website’s search engine rankings. Professionals immerse themselves in the latest discussions, studies, and official announcements to discern the direction of Google’s algorithm adjustments and adapt their strategies accordingly.

Implementing the latest Google guidelines ensures that SEO efforts are not only responsive to algorithmic changes but are also forward-thinking. As Google’s algorithms grow more sophisticated, so too must the tactics used by businesses to maintain and improve their search engine visibility.

Adjusting Website Strategies Accordingly

With the landscape of Google’s algorithm perpetually shifting, the onus falls on website owners and SEO managers to refine their digital strategies. This ongoing recalibration involves aligning on-page and off-page optimization with the latest search engine guidelines, so that the website’s presence in Google search results holds steady.

Adapting SEO campaigns to algorithm updates goes beyond reactive changes; it requires a proactive audit of content, backlinks, and technical SEO elements to ensure compliance. Strategic retooling in response to Google’s shifts is a dependable path to maintaining search visibility and user relevance:

| SEO Element | Focus Area | Adjustment Strategy |
|---|---|---|
| On-Page Content | Keyword Optimization | Harmonize with Trending Search Queries |
| Backlink Profile | Link Quality | Eradicate Toxic Links; Fortify with High-Authority Ones |
| Technical SEO | Website Performance | Enhance Mobile-Friendliness and Page Load Speed |

Conclusion

In summary, resolving a website’s absence in Google search results is crucial for maximizing digital visibility and leveraging the full potential of online marketing.

By meticulously checking the website’s visibility settings, such as the robots.txt file and ‘noindex’ tags, and by employing Google Search Console for alerts on crawl errors, security issues, manual actions, and indexing problems, website owners can take corrective actions necessary to enhance their search presence.

Conducting regular backlink audits and implementing strategies for gaining authoritative links, as well as ensuring a mobile-friendly user experience, are vital to boosting site authority and meeting Google’s ranking criteria.

Additionally, constant vigilance in identifying and addressing potential Google penalties, eliminating duplicate content, and keeping content aligned with user search intent is key for a robust SEO strategy.

Lastly, staying informed about Google’s algorithm updates and proactively adjusting website strategies in response to these changes is pivotal.

This integrated approach supports websites in achieving and maintaining their visibility on Google, driving traffic, and succeeding in a competitive online environment.